This article covers part 2 of a distributed file system series: high availability for MFS (MooseFS).

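MFS high availability

- I. Environment setup

- 1.iSCSI target configuration

- 2.Client: server1

- 3.Client: server2

- II. High availability with Pacemaker

- 1.Installing the components

- 2.Cluster setup

- 3.Adding resources to the cluster

- 4.Kernel crash and restoring the cluster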

I. Environment setup

1.iSCSI target configuration

[root@server1 ~]# yum install iscsi-*

Take server2's disk out of service: stop its chunkserver and unmount the chunk directory.

[root@server2 ~]# systemctl stop moosefs-chunkserver.service

[root@server2 ~]# umount /mnt/chunk1/

server3: install targetcli and add a 10 GB disk (/dev/vdb) to export over iSCSI.

[root@server3 mfs]# yum install -y targetcli

[root@server3 mfs]# systemctl start target

[root@server3 mfs]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb46

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ................................................................... [...]
  o- backstores ........................................................ [...]
  | o- block ............................................ [Storage Objects: 0]
  | o- fileio ........................................... [Storage Objects: 0]
  | o- pscsi ............................................ [Storage Objects: 0]
  | o- ramdisk .......................................... [Storage Objects: 0]
  o- iscsi ...................................................... [Targets: 0]
  o- loopback ................................................... [Targets: 0]

/> cd backstores/

/backstores> cd block

/backstores/block> ls

o- block ................................................ [Storage Objects: 0]

/backstores/block> create my_disk /dev/vdb

Created block storage object my_disk using /dev/vdb.

/backstores/block> ls

o- block ................................................ [Storage Objects: 1]
  o- my_disk ..................... [/dev/vdb (10.0GiB) write-thru deactivated]
    o- alua ................................................. [ALUA Groups: 1]
      o- default_tg_pt_gp ..................... [ALUA state: Active/optimized]

/backstores/block> cd ..

/backstores> cd ..

/> ls

o- / ................................................................... [...]
  o- backstores ........................................................ [...]
  | o- block ............................................ [Storage Objects: 1]
  | | o- my_disk ................. [/dev/vdb (10.0GiB) write-thru deactivated]
  | |   o- alua ............................................. [ALUA Groups: 1]
  | |     o- default_tg_pt_gp ................. [ALUA state: Active/optimized]
  | o- fileio ........................................... [Storage Objects: 0]
  | o- pscsi ............................................ [Storage Objects: 0]
  | o- ramdisk .......................................... [Storage Objects: 0]
  o- iscsi ...................................................... [Targets: 0]
  o- loopback ................................................... [Targets: 0]

/> cd iscsi

/iscsi> ls

o- iscsi ........................................................ [Targets: 0]

/iscsi>

/iscsi> create iqn.2021-07.org.westos:target1

Created target iqn.2021-07.org.westos:target1.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> ls

o- iscsi ........................................................ [Targets: 1]
  o- iqn.2021-07.org.westos:target1 ................................ [TPGs: 1]
    o- tpg1 ........................................... [no-gen-acls, no-auth]
      o- acls ...................................................... [ACLs: 0]
      o- luns ...................................................... [LUNs: 0]
      o- portals ................................................ [Portals: 1]
        o- 0.0.0.0:3260 ................................................. [OK]

/iscsi> cd iqn.2021-07.org.westos:target1/

/iscsi/iqn.20...estos:target1> ls

o- iqn.2021-07.org.westos:target1 .................................. [TPGs: 1]
  o- tpg1 ............................................. [no-gen-acls, no-auth]
    o- acls ........................................................ [ACLs: 0]
    o- luns ........................................................ [LUNs: 0]
    o- portals .................................................. [Portals: 1]
      o- 0.0.0.0:3260 ................................................... [OK]

/iscsi/iqn.20...estos:target1> cd tpg1/

/iscsi/iqn.20...:target1/tpg1> ls

o- tpg1 ............................................... [no-gen-acls, no-auth]
  o- acls .......................................................... [ACLs: 0]
  o- luns .......................................................... [LUNs: 0]
  o- portals .................................................... [Portals: 1]
    o- 0.0.0.0:3260 ..................................................... [OK]

/iscsi/iqn.20...:target1/tpg1> cd luns

/iscsi/iqn.20...et1/tpg1/luns> create /backstores/block/my_disk

Created LUN 0.

/iscsi/iqn.20...et1/tpg1/luns> ls

o- luns ............................................................ [LUNs: 1]
  o- lun0 ...................... [block/my_disk (/dev/vdb) (default_tg_pt_gp)]

/iscsi/iqn.20...et1/tpg1/luns> cd ..

/iscsi/iqn.20...:target1/tpg1> ls

o- tpg1 ............................................... [no-gen-acls, no-auth]
  o- acls .......................................................... [ACLs: 0]
  o- luns .......................................................... [LUNs: 1]
  | o- lun0 .................... [block/my_disk (/dev/vdb) (default_tg_pt_gp)]
  o- portals .................................................... [Portals: 1]
    o- 0.0.0.0:3260 ..................................................... [OK]

/iscsi/iqn.20...:target1/tpg1> cd acls

/iscsi/iqn.20...et1/tpg1/acls> ls

o- acls ............................................................ [ACLs: 0]

/iscsi/iqn.20...et1/tpg1/acls> create iqn.2021-07.org.westos:client

Created Node ACL for iqn.2021-07.org.westos:client

Created mapped LUN 0.

/iscsi/iqn.20...et1/tpg1/acls> ls

o- acls ............................................................ [ACLs: 1]
  o- iqn.2021-07.org.westos:client .......................... [Mapped LUNs: 1]
    o- mapped_lun0 ................................. [lun0 block/my_disk (rw)]

/iscsi/iqn.20...et1/tpg1/acls> exit

Global pref auto_save_on_exit=true

Configuration saved to /etc/target/saveconfig.json

[root@server3 mfs]#
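The interactive session above can also be replayed non-interactively, which helps when the lab has to be rebuilt. This is a sketch assuming targetcli-fb, which accepts a path followed by a command as its arguments; `run`, `setup_target` and the `DRY_RUN` switch are hypothetical names introduced here, not part of targetcli.

```shell
# Print instead of execute when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Recreate the backstore, target, LUN and ACL from the session above.
# Usage: setup_target BACKSTORE_NAME DEVICE TARGET_IQN INITIATOR_IQN
setup_target() {
  local disk=$1 dev=$2 target_iqn=$3 client_iqn=$4
  local tpg="/iscsi/$target_iqn/tpg1"
  run targetcli /backstores/block create "$disk" "$dev"
  # Creating the target also creates tpg1 and the default 0.0.0.0:3260 portal
  run targetcli /iscsi create "$target_iqn"
  run targetcli "$tpg/luns" create "/backstores/block/$disk"
  # Creating the ACL maps the existing LUN 0 to this initiator
  run targetcli "$tpg/acls" create "$client_iqn"
  # Save explicitly instead of relying on auto_save_on_exit
  run targetcli saveconfig
}

# Example:
# setup_target my_disk /dev/vdb iqn.2021-07.org.westos:target1 iqn.2021-07.org.westos:client
```

Running it once with DRY_RUN=1 shows the exact commands before anything touches /dev/vdb.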

2.Client: server1

[root@server1 ~]# cd /etc/iscsi/

[root@server1 iscsi]# ls

initiatorname.iscsi iscsid.conf

[root@server1 iscsi]# vim initiatorname.iscsi

InitiatorName=iqn.2021-07.org.westos:client

[root@server1 iscsi]# iscsiadm -m discovery -t st -p 172.25.15.3

172.25.15.3:3260,1 iqn.2021-07.org.westos:target1
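On the initiator side two details matter: the IQN in initiatorname.iscsi must match the ACL created on server3, and the target IQN to log in to comes from the discovery output. A small sketch of both; `set_initiator_name` and `first_target_iqn` are hypothetical helpers, and restarting iscsid is only needed if the daemon was already running under a different name.

```shell
# Write the initiator name file (normally /etc/iscsi/initiatorname.iscsi).
# The IQN must match the ACL on the target. If iscsid was already running,
# restart it so the new name is used for the next login.
set_initiator_name() {
  local file=$1 iqn=$2
  printf 'InitiatorName=%s\n' "$iqn" > "$file"
}

# Read "iscsiadm -m discovery -t st" output on stdin, e.g.
#   172.25.15.3:3260,1 iqn.2021-07.org.westos:target1
# and print the target IQN (second field) of the first record.
first_target_iqn() {
  awk 'NF >= 2 { print $2; exit }'
}

# Example on server1 (or server2):
# set_initiator_name /etc/iscsi/initiatorname.iscsi iqn.2021-07.org.westos:client
# systemctl restart iscsid
# target=$(iscsiadm -m discovery -t st -p 172.25.15.3 | first_target_iqn)
# iscsiadm -m node -T "$target" -p 172.25.15.3 -l
```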

[root@server1 iscsi]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2021-07.org.westos:target1, portal: 172.25.15.3,3260] (multiple)

Login to [iface: default, target: iqn.2021-07.org.westos:target1, portal: 172.25.15.3,3260] successful.

[root@server1 iscsi]# fdisk /dev/sda  # partition the new iSCSI disk

[root@server1 iscsi]# mkfs.xfs /dev/sda1  # create an XFS filesystem on the new partition

[root@server1 iscsi]# mount /dev/sda1 /mnt/
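fdisk is interactive, and /dev/sda is only the name the kernel happened to assign on server1. A sketch using parted in script mode, plus the udev by-path name that should identify the same LUN on every node; the by-path pattern is the usual udev naming for iSCSI disks, but confirm it with ls /dev/disk/by-path/ on your hosts. `run`, `iscsi_by_path`, `partition_and_format` and `DRY_RUN` are hypothetical names.

```shell
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Stable udev path of an iSCSI LUN, independent of whether the kernel
# calls it sda or sdb on a given node.
iscsi_by_path() {
  local portal=$1 iqn=$2 lun=${3:-0}
  printf '/dev/disk/by-path/ip-%s-iscsi-%s-lun-%s\n' "$portal" "$iqn" "$lun"
}

# Non-interactive replacement for the fdisk + mkfs.xfs steps.
# DESTRUCTIVE: rewrites the partition table of $1. Run once, on one node only.
partition_and_format() {
  local disk=$1 part=$2
  run parted -s "$disk" mklabel msdos mkpart primary xfs 1MiB 100%
  run mkfs.xfs "$part"
}

# Example (dry run first):
# DRY_RUN=1 partition_and_format /dev/sda /dev/sda1
```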

[root@server1 iscsi]# cd /var/lib/mfs/

[root@server1 mfs]# ls

changelog.0.mfs changelog.2.mfs changelog.5.mfs metadata.mfs.back metadata.mfs.empty

changelog.1.mfs changelog.4.mfs metadata.crc metadata.mfs.back.1 stats.mfs

[root@server1 mfs]# cp -p * /mnt/

[root@server1 mfs]# cd /mnt

[root@server1 mnt]# ls

changelog.0.mfs changelog.2.mfs changelog.5.mfs metadata.mfs.back metadata.mfs.empty

changelog.1.mfs changelog.4.mfs metadata.crc metadata.mfs.back.1 stats.mfs

[root@server1 mnt]# ll

total 4904

-rw-r----- 1 mfs mfs 43 Jul 8 04:06 changelog.0.mfs

-rw-r----- 1 mfs mfs 734 Jul 8 03:36 changelog.1.mfs

-rw-r----- 1 mfs mfs 1069 Jul 8 02:59 changelog.2.mfs

-rw-r----- 1 mfs mfs 949 Jul 8 00:52 changelog.4.mfs

-rw-r----- 1 mfs mfs 213 Jul 7 23:56 changelog.5.mfs

-rw-r----- 1 mfs mfs 120 Jul 8 04:00 metadata.crc

-rw-r----- 1 mfs mfs 3989 Jul 8 04:00 metadata.mfs.back

-rw-r----- 1 mfs mfs 3770 Jul 8 03:00 metadata.mfs.back.1

-rw-r--r-- 1 mfs mfs 8 Oct 8 2020 metadata.mfs.empty

-rw-r----- 1 mfs mfs 4984552 Jul 8 04:00 stats.mfs

[root@server1 mnt]#

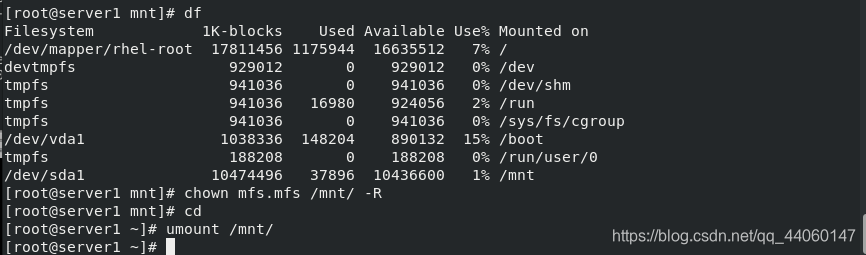

[root@server1 mnt]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel-root 17811456 1175944 16635512 7% /

devtmpfs 929012 0 929012 0% /dev

tmpfs 941036 0 941036 0% /dev/shm

tmpfs 941036 16980 924056 2% /run

tmpfs 941036 0 941036 0% /sys/fs/cgroup

/dev/vda1 1038336 148204 890132 15% /boot

tmpfs 188208 0 188208 0% /run/user/0

/dev/sda1 10474496 37896 10436600 1% /mnt

[root@server1 mnt]# chown mfs.mfs /mnt/ -R

[root@server1 mnt]# cd

[root@server1 ~]# umount /mnt/

[root@server1 ~]# systemctl stop moosefs-master.service
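The copy above was made while moosefs-master was still running, which is why /mnt holds metadata.mfs.back and changelogs but no metadata.mfs. A sketch of the copy step with a check before unmounting; `copy_mfs_metadata` is a hypothetical helper, and the compare is only meaningful with the master stopped, since a live master keeps changing its changelog and stats files.

```shell
# Copy the master's metadata directory onto the mounted shared disk
# and verify the copy. Intended to run with moosefs-master stopped.
copy_mfs_metadata() {
  local src=$1 dst=$2
  # -a preserves mode, ownership and timestamps (a superset of -p)
  cp -a "$src"/. "$dst"/ || return 1
  # Recursive compare of names and contents; non-zero on any difference
  diff -r "$src" "$dst" > /dev/null || return 1
  # The master runs as user mfs; fix ownership only when possible
  if [ "$(id -u)" -eq 0 ] && id mfs > /dev/null 2>&1; then
    chown -R mfs:mfs "$dst" || return 1
  fi
}

# Example on server1:
# systemctl stop moosefs-master
# mount /dev/sda1 /mnt && copy_mfs_metadata /var/lib/mfs /mnt && umount /mnt
```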

3.Client: server2

[root@server2 ~]# yum install -y iscsi-*

[root@server2 ~]# vim /etc/iscsi/initiatorname.iscsi

[root@server2 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2021-07.org.westos:client

[root@server2 ~]# iscsiadm -m discovery -t st -p 172.25.15.3

172.25.15.3:3260,1 iqn.2021-07.org.westos:target1

[root@server2 ~]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2021-07.org.westos:target1, portal: 172.25.15.3,3260] (multiple)

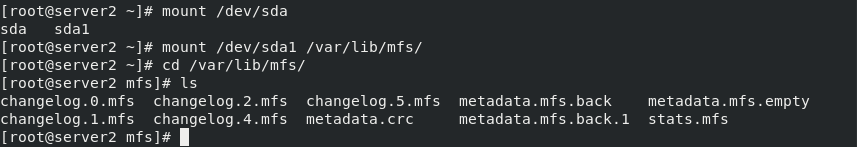

Login to [iface: default, target: iqn.2021-07.org.westos:target1, portal: 172.25.15.3,3260] successful.

[root@server2 ~]# mount /dev/sda1 /var/lib/mfs/

[root@server2 ~]# cd /var/lib/mfs/

[root@server2 mfs]# ls
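The listing on server2 is not shown; what matters is which metadata files are present, because that decides whether moosefs-master can start normally or needs the -a option used later. A sketch, assuming MooseFS 3.x behaviour as we understand it (a clean stop leaves metadata.mfs; a running or crashed master leaves only metadata.mfs.back plus changelogs). The function name and its three labels are hypothetical.

```shell
# Classify a MooseFS metadata directory:
#   clean          metadata.mfs present, plain start should work
#   needs-recovery only metadata.mfs.back, start with "mfsmaster -a"
#   missing        no metadata at all
mfs_metadata_state() {
  local dir=$1
  if [ -f "$dir/metadata.mfs" ]; then
    echo clean
  elif [ -f "$dir/metadata.mfs.back" ]; then
    echo needs-recovery
  else
    echo missing
  fi
}

# Example on server2 after mounting the shared disk:
# mfs_metadata_state /var/lib/mfs
```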

II. High availability with Pacemaker

1.Installing the components

[root@server1 yum.repos.d]# yum install -y pacemaker pcs psmisc policycoreutils-python

[root@server1 yum.repos.d]# ssh-keygen

[root@server1 ~]# ssh-copy-id server2  # passwordless SSH to server2

[root@server1 ~]# ssh server2 yum install -y pacemaker pcs psmisc policycoreutils-python

[root@server1 ~]# systemctl enable --now pcsd.service  ## enable and start pcsd

[root@server1 ~]# ssh server2 systemctl enable --now pcsd.service
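With more than two nodes, a loop keeps these steps identical everywhere. A sketch; `run`, `prepare_nodes` and `DRY_RUN` are hypothetical, and because the loop also SSHes into server1 itself, server1's own key must be authorized there as well (for example with ssh-copy-id server1).

```shell
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Install the HA stack and start pcsd on every node given, over SSH.
# Assumes key-based root SSH to each node, including the local one.
prepare_nodes() {
  local node
  for node in "$@"; do
    run ssh "$node" yum install -y pacemaker pcs psmisc policycoreutils-python
    run ssh "$node" systemctl enable --now pcsd.service
  done
}

# Example: prepare_nodes server1 server2
```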

2.Cluster setup

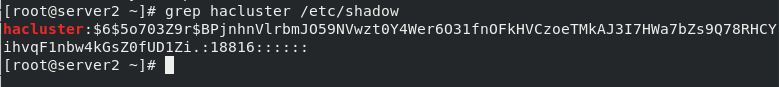

[root@server1 ~]# echo westos | passwd --stdin hacluster  ## set the password of the cluster user hacluster

[root@server1 ~]# ssh server2 'echo westos | passwd --stdin hacluster'

[root@server1 ~]# grep hacluster /etc/shadow

hacluster:$6$DWL0YSw8$uIO2X//m106qpiyXaTL.haSSaoxfBDy/6OC7tdjaXUHQ8NyGtzAHDIMmQaHKRmE73pPVRxHIMAc9dV8QCUNfC/:18816::::::

[root@server2 ~]# grep hacluster /etc/shadow

hacluster:$6$SZ8Kagxi$mmylOrHkjU2JwFYW7sbvF1k.kWdfOvODu9vljG2XRLBfR0Sgg6CXrcKc3cjoaoFZ7yCTz1bsT0CSlw6mQRE7K1:18740::::::

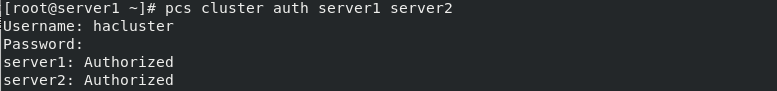

[root@server1 ~]# pcs cluster auth server1 server2  ## authenticate the cluster nodes

Username: hacluster

Password:

server2: Authorized

server1: Authorized

[root@server1 ~]# pcs cluster setup --name mycluster server1 server2  ## corosync carries the heartbeat; running pcs cluster setup on one node generates and syncs the corosync configuration

[root@server1 ~]# pcs cluster start --all  ## start the cluster on all nodes

[root@server1 ~]# pcs status  ## show the cluster status

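[root@server1 ~]# crm_verify -LV  ## check the cluster configuration (it reports errors while STONITH is enabled but no fence device is defined)

[root@server1 ~]# pcs property set stonith-enabled=false  ## disable fencing (STONITH)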

[root@server1 ~]# pcs status

[root@server1 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.15.100 op monitor interval=30s  ## create the cluster's floating VIP

[root@server1 ~]# pcs status

[root@server1 ~]# ip addr

inet 172.25.15.100/24 brd 172.25.15.255 scope global secondary eth0

[root@server1 ~]# pcs node standby

[root@server1 ~]# pcs status

[root@server2 ~]# ip addr

[root@server1 ~]# pcs node unstandby

[root@server1 ~]# pcs status

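The VIP was created successfully and is assigned to server1. While server1 is in standby, the VIP moves to server2, which ip addr on server2 confirms.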
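The whole cluster bootstrap can be kept in one function so it is repeatable. A sketch in the pcs 0.9 syntax used above (RHEL/CentOS 7); pcs 0.10 and later use pcs host auth and pcs cluster setup NAME NODE... without --name instead. `run`, `bootstrap_cluster` and `DRY_RUN` are hypothetical, and passing the password with -p makes it visible in the process list.

```shell
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Usage: bootstrap_cluster NAME VIP HACLUSTER_PASSWORD NODE...
bootstrap_cluster() {
  local name=$1 vip=$2 password=$3
  shift 3
  # Non-interactive node authentication (pcs 0.9)
  run pcs cluster auth "$@" -u hacluster -p "$password"
  run pcs cluster setup --name "$name" "$@"
  run pcs cluster start --all
  # Lab only: no fence device is configured
  run pcs property set stonith-enabled=false
  run pcs resource create vip ocf:heartbeat:IPaddr2 ip="$vip" op monitor interval=30s
}

# Example: bootstrap_cluster mycluster 172.25.15.100 westos server1 server2
```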

3.Adding resources to the cluster

[root@server1 ~]# pcs cluster enable --all  ## start the cluster automatically at boot

server1: Cluster Enabled

server2: Cluster Enabled

[root@server1 ~]# pcs status

[root@server1 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device="/dev/sda1" directory="/var/lib/mfs" fstype=xfs op monitor interval=60s

[root@server1 ~]# pcs status

[root@server1 ~]# pcs resource create mfsmaster systemd:moosefs-master op monitor interval=60s  ## let the cluster manage the service through its systemd unit

[root@server1 ~]# pcs status
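Until the group exists, the three resources are independent, so Pacemaker may place mfsdata and mfsmaster on different nodes. pcs can append each resource to a group at creation time with --group, avoiding that window. A sketch of this variant for a setup where vip already exists; `run`, `create_mfs_group` and `DRY_RUN` are hypothetical.

```shell
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Build the group in start order: vip -> mfsdata -> mfsmaster.
# Adding vip first creates the group; --group appends each new resource.
create_mfs_group() {
  local group=${1:-mfsgroup}
  run pcs resource group add "$group" vip
  run pcs resource create mfsdata ocf:heartbeat:Filesystem \
    device=/dev/sda1 directory=/var/lib/mfs fstype=xfs \
    op monitor interval=60s --group "$group"
  run pcs resource create mfsmaster systemd:moosefs-master \
    op monitor interval=60s --group "$group"
}
```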

[root@server1 ~]# pcs resource group add mfsgroup vip mfsdata mfsmaster  ## one group: same node, started in this order

[root@server1 ~]# vim /etc/hosts

172.25.15.100 mfsmaster  ## on server1 to server4 and on the physical host
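Every machine that talks to the master needs this entry, and any older mfsmaster mapping (for example to server1's own address) has to go, because the first matching line in /etc/hosts normally wins. A sketch that does both; `set_hosts_entry` is hypothetical, and it normalizes whitespace on the lines it rewrites.

```shell
# Point NAME at IP in a hosts file: drop NAME from every existing mapping
# (other aliases on those lines are kept), then append "IP NAME".
set_hosts_entry() {
  local file=$1 ip=$2 name=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v name="$name" '
    /^[ \t]*#/ || NF == 0 { print; next }   # keep comments and blank lines
    {
      line = $1; kept = 0
      for (i = 2; i <= NF; i++)
        if ($i != name) { line = line " " $i; kept++ }
      if (kept > 0) print line                # drop lines left without names
    }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s %s\n' "$ip" "$name" >> "$tmp"
  cat "$tmp" > "$file"   # rewrite in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Example, on server1-4 and the physical host:
# set_hosts_entry /etc/hosts 172.25.15.100 mfsmaster
```

Running it twice leaves exactly one mfsmaster line, so it is safe to repeat.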

[root@server1 ~]# systemctl restart moosefs-master.service

[root@server2 ~]# systemctl status moosefs-master.service

[root@server2 ~]# vim /usr/lib/systemd/system/moosefs-master.service  # edit the unit file

[Service]

Type=forking

ExecStart=/usr/sbin/mfsmaster -a

[root@server2 ~]# systemctl daemon-reload  # reload the modified unit file

[root@server2 ~]# systemctl restart moosefs-master.service  # restart the service with the new options
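Files under /usr/lib/systemd/system belong to the package and can be overwritten on update. A drop-in override keeps the -a change (which tells mfsmaster to restore metadata from the changelogs) separate; systemctl edit moosefs-master opens the same kind of file interactively. Since Pacemaker may start the master on either node, the change presumably belongs on server1 as well as server2. A sketch; `write_master_override` is hypothetical.

```shell
# Write a systemd drop-in that starts the master with -a instead of
# editing the packaged unit.
# $1 is normally /etc/systemd/system/moosefs-master.service.d
write_master_override() {
  local dir=$1
  mkdir -p "$dir" || return 1
  cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the packaged command before redefining it
ExecStart=
ExecStart=/usr/sbin/mfsmaster -a
EOF
}

# Example, on every node that may run the master:
# write_master_override /etc/systemd/system/moosefs-master.service.d
# systemctl daemon-reload
```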

[root@server2 ~]# systemctl start moosefs-master@

Failed to start moosefs-master@.service: Unit name moosefs-master@.service is missing the instance name.

See system logs and 'systemctl status moosefs-master@.service' for details.

[root@server2 ~]# systemctl start moosefs-master  # start it

[root@server2 ~]# systemctl stop moosefs-master

[root@server3 mfs]# systemctl restart moosefs-chunkserver.service

[root@server4 mfs]# systemctl restart moosefs-chunkserver.service

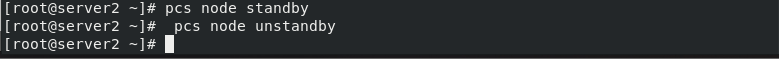

[root@server2 ~]# pcs node standby

[root@server2 ~]# pcs node unstandby

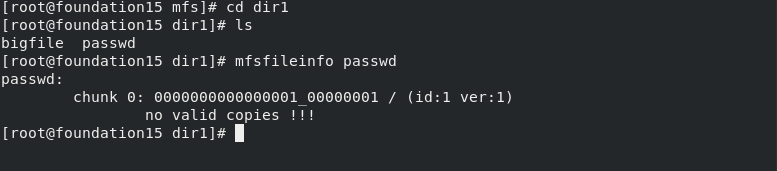
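The standby/unstandby checks above are read off pcs status by eye. A sketch that scripts them by polling crm_resource --locate; the output line format ("resource NAME is running on: NODE") is assumed from Pacemaker 1.1 and should be checked on your version. `resource_node` and `wait_for_node` are hypothetical helpers.

```shell
# Read "crm_resource --locate --resource NAME" output on stdin, e.g.
#   resource mfsmaster is running on: server2
# and print the node name (empty if the resource is not running).
resource_node() {
  sed -n 's/^resource .* is running on: *\([^ ]*\).*/\1/p' | head -n 1
}

# Poll until RESOURCE runs on NODE, for at most TRIES seconds (default 30).
wait_for_node() {
  local res=$1 want=$2 tries=${3:-30} now
  while [ "$tries" -gt 0 ]; do
    # 2>&1 because some versions print the location on stderr
    now=$(crm_resource --locate --resource "$res" 2>&1 | resource_node)
    [ "$now" = "$want" ] && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Example: pcs node standby server2 && wait_for_node mfsmaster server1
```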

4.Kernel crash and restoring the cluster

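[root@server1 ~]# echo c > /proc/sysrq-trigger  # crash server1's kernel to simulate a failure

[root@server2 ~]# pcs status

On the client (the physical host foundation), check that the data is still reachable:

[root@foundation mfs]# pwd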

/mnt/mfs

[root@foundation mfs]# ls

passwd

[root@foundation mfs]# mfsfileinfo passwd

passwd:
  chunk 0: 0000000000000008_00000001 / (id:8 ver:1)
    copy 1: 172.25.15.3:9422 (status:VALID)
    copy 2: 172.25.15.4:9422 (status:VALID)

After the crash, server1 is recovered by power-cycling it (a hard power-off and reboot).
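The mfsfileinfo output above shows both copies of the chunk still VALID on server3 and server4 after the failover. A sketch that reduces such output to the smallest number of valid copies across all chunks of a file, so the check can be repeated after each test; the function name is hypothetical and the line format is assumed from the output shown here.

```shell
# Read mfsfileinfo output on stdin and print the minimum number of
# "(status:VALID)" copies across all chunks (0 if any chunk has none).
min_valid_copies() {
  awk '
    /chunk [0-9]+:/ { n++; valid[n] = 0 }
    /copy [0-9]+:.*status:VALID/ { valid[n]++ }
    END {
      if (n == 0) { print 0; exit }
      min = valid[1]
      for (i = 2; i <= n; i++) if (valid[i] < min) min = valid[i]
      print min
    }'
}

# Example on the client:
# [ "$(mfsfileinfo passwd | min_valid_copies)" -ge 2 ] && echo "goal met"
```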
