This article walks through a Spark Streaming word count demo.

Streaming word count: the job reads a text stream and counts the words received in each 10-second batch, once per batch.

package com.streaming

import org.apache.spark.SparkConf

import org.apache.spark.streaming._

// Only needed on Spark releases before 1.3, where it brings reduceByKey into scope for pair DStreams

import org.apache.spark.streaming.StreamingContext._

object WordCount {

def main(args: Array[String]): Unit = {

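// Create a StreamingContext with a 10-second batch interval. No master is set here, so it must be supplied at launch (for example with spark-submit --master)

// When running locally, Spark Streaming needs at least two threads: one for the socket receiver and one for processing

val ssc = new StreamingContext(new SparkConf().setAppName("NetworkWordCount"), Seconds(10))

// Local alternative: val ssc = new StreamingContext("local[2]", "NetworkWordCount", Seconds(10))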

// Create a DStream that will connect to serverIP:serverPort, like localhost:9999

val lines = ssc.socketTextStream("172.171.51.131", 9999)

// Split each line into words

val words = lines.flatMap(_.split(" "))

// Count each word in each batch

val pairs = words.map(word => (word, 1))

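val wordCounts = pairs.reduceByKey(_ + _)

// Print the first ten counts of each batch to the console

wordCounts.print()

// Start the computation and block until it is stopped

ssc.start()

ssc.awaitTermination()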

}

}
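The per-batch logic above can be tried without a cluster or a socket. The following is a minimal sketch on ordinary Scala collections, not the Spark job itself: `BatchWordCountSketch`, `countWords`, and the three sample batches are hypothetical names and data introduced for illustration, and `groupBy` plus `sum` stands in for `reduceByKey`.

```scala
object BatchWordCountSketch {
  // Count the words in ONE batch of lines, mirroring the DStream pipeline:
  // flatMap(split) -> map(word => (word, 1)) -> sum of the ones per word.
  def countWords(batch: Seq[String]): Map[String, Int] =
    batch
      .flatMap(_.split(" "))
      .map(word => (word, 1))
      .groupBy(_._1)
      .map { case (word, ones) => (word, ones.map(_._2).sum) }

  def main(args: Array[String]): Unit = {
    // Hypothetical input: one Seq per 10-second batch interval.
    val batches = Seq(
      Seq("hello spark", "hello streaming"),
      Seq("hello world"),
      Seq.empty[String]
    )
    // Each batch is counted on its own; nothing carries over between batches.
    batches.zipWithIndex.foreach { case (batch, i) =>
      println(s"batch $i: ${countWords(batch).toSeq.sorted.mkString(", ")}")
    }
  }
}
```

In this sketch "hello" is counted twice in the first batch and once in the second, rather than three times overall. The streaming job behaves the same way, because `reduceByKey` on a DStream aggregates within each batch and keeps no state between batches.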

Input: sent in three separate time windows

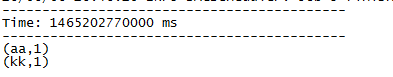

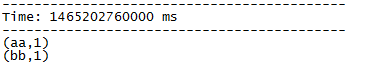

Output:
