Kubernetes in Practice (18): Pod Affinity and Anti-Affinity Scheduling

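1 Main ways to schedule a Pod

There are four main ways to schedule a Pod onto a specific Node:

- nodeName scheduling: set nodeName directly in the Pod's YAML manifest to place the Pod on the node with that name.

- nodeSelector scheduling: set nodeSelector directly in the Pod's YAML manifest to place the Pod on a node that carries the specified labels.

- Taints and Tolerations: put a taint on the Node, then configure a matching toleration in the Pod's YAML manifest.

- Affinity and anti-affinity scheduling: the subject of this article.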
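Taints and tolerations are not demonstrated later in this article, so here is a minimal sketch of the Pod side. It assumes a node has already been tainted with a made-up key and value, `dedicated=webapp`, and the `NoSchedule` effect (for example with `kubectl taint nodes <node-name> dedicated=webapp:NoSchedule`). The Pod name `webapp-tolerant` is also hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-tolerant        # hypothetical name, not from the article
spec:
  containers:
  - name: webapp
    image: nginx
  tolerations:
  - key: "dedicated"           # hypothetical taint key
    operator: "Equal"          # key, value, and effect must all match the taint
    value: "webapp"            # hypothetical taint value
    effect: "NoSchedule"
```

A toleration only allows the Pod onto the tainted node; it does not force the Pod there. To pin the Pod to that node as well, combine the toleration with one of the other methods in the list.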

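2 Why is affinity scheduling needed?

Given nodeName scheduling, nodeSelector scheduling, and taints and tolerations, why do we still need affinity and anti-affinity scheduling?

Because they handle more flexible and more complex scenarios. Sometimes you want two Pods placed on the same node; sometimes, for isolation or high availability, you want two Pods kept on different nodes; and sometimes you want a Pod placed on one of a specific set of nodes.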

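3 Prerequisite concepts

- Labels are a central concept in Kubernetes: almost anything that involves selecting or filtering is matched by label.

- Affinity and anti-affinity rules also filter by label. Node affinity matches Node labels, while Pod affinity and anti-affinity match the labels of Pods that are already placed on nodes.

- In both Node affinity and Pod affinity, the thing being scheduled is always a Pod. Either the Pod is placed on a node according to an affinity rule, or the Pod follows another Pod onto a node (for example, if Pod1 follows Pod2 and Pod2 is scheduled to node B, then Pod1 is also scheduled to node B).

- Node affinity and Pod affinity are both configured in the Pod's YAML manifest, because the Pod is the thing being scheduled.

- Node affinity describes the relationship between a Pod and Nodes.

- Pod affinity describes the relationship between a Pod and other Pods.

- Hard affinity: the rule must be satisfied. If no node satisfies it, the Pod is not scheduled and stays Pending.

- Soft affinity: one or more preferences, each with its own weight. The scheduler favors nodes that satisfy the higher-weighted preferences, but still schedules the Pod if no node matches any of them.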

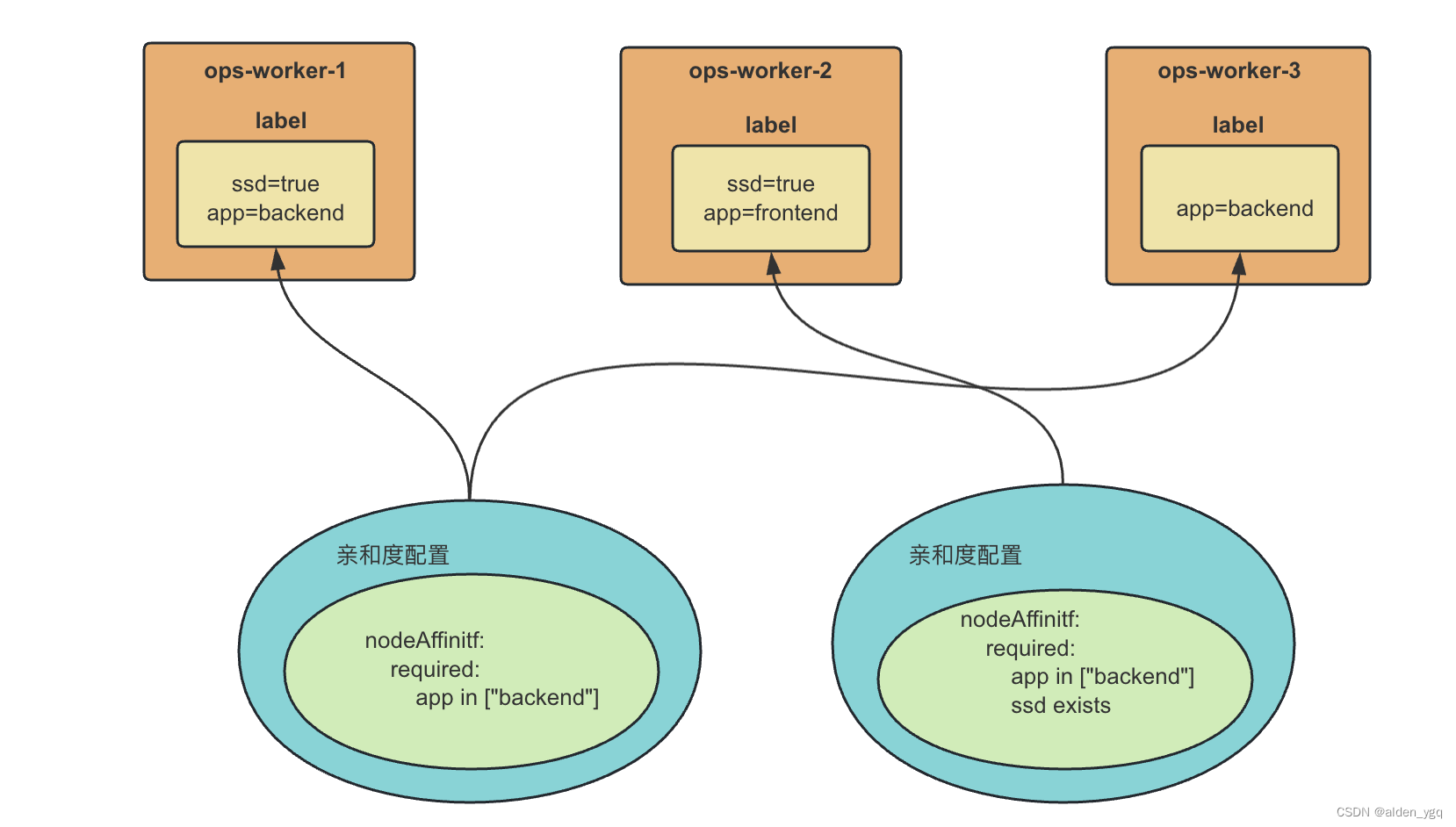

The figure above illustrates Node affinity scheduling; Pod affinity and Pod anti-affinity scheduling work in a similar way.

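4 Affinity scheduling in detail

Affinity means closeness, and the term is used to describe affinity-based scheduling.

Affinity scheduling: describes how a Pod relates to Nodes or to other Pods. A Pod can be placed on Nodes that carry matching labels, or placed alongside other Pods that carry matching labels.

Anti-affinity scheduling: the opposite placement policy between two Pods. If Pod A runs on node1 and Pod B declares anti-affinity to Pod A, then Pod B avoids node1; with a hard rule it is never scheduled there.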
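A common use of anti-affinity is keeping replicas of the same workload on different nodes. The following is only a sketch under assumptions: the Deployment name `web-ha` and the label `app: web-ha` are invented for illustration and do not appear elsewhere in this article.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-ha                 # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web-ha
  template:
    metadata:
      labels:
        app: web-ha            # hypothetical label, also used by the rule below
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: web-ha    # repel other replicas of this same Deployment
            topologyKey: kubernetes.io/hostname   # "different node" granularity
      containers:
      - name: web
        image: nginx
```

Because the rule is hard, a replica stays Pending when every eligible node already runs a replica. Switching to the preferred form trades that guarantee for schedulability.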

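4.1 Remember these three scheduling relationships

Affinity and anti-affinity scheduling come in just three kinds:

- Node affinity: hard and soft

- Pod affinity: hard and soft

- Pod anti-affinity: hard and soft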

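4.2 Affinity expressions

Both Node affinity and Pod affinity can be expressed in two ways:

- requiredDuringSchedulingIgnoredDuringExecution: hard affinity. The Pod can be scheduled onto a Node only if the specified rules are met. Note the word Required.

- preferredDuringSchedulingIgnoredDuringExecution: soft affinity. The scheduler tries to satisfy the rules and prefers the nodes that match them, but falls back to other nodes when none match. Note the word Preferred, which fits well because it expresses a priority among rules.

These field names are long, so let's break one down: requiredDuringSchedulingIgnoredDuringExecution splits into RequiredDuringScheduling and IgnoredDuringExecution.

- RequiredDuringScheduling: the rule must be satisfied (Required) before the Pod is scheduled onto a node.

- IgnoredDuringExecution: Pods already running on a node are not re-checked against the rule. If a label that the Pod requires is removed from the node while the Pod is running, so that the node no longer satisfies the Pod's affinity rule, the Pod keeps running on that node.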
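The two forms can be combined on one Pod: the hard rule filters the candidate nodes, and the soft rule ranks whatever is left. A minimal sketch follows; the Pod name `affinity-both` and the `disktype=ssd` label are assumptions made for illustration, while `kubernetes.io/os=linux` is a label that appears on the nodes shown in this article.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-both          # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
  affinity:
    nodeAffinity:
      # Hard rule: only Linux nodes are candidates at all.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/os
            operator: In
            values:
            - linux
      # Soft rule: among the candidates, favor nodes labeled disktype=ssd.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 50             # valid range is 1 to 100
        preference:
          matchExpressions:
          - key: disktype      # hypothetical node label
            operator: In
            values:
            - ssd
```

If no Linux node carries `disktype=ssd`, the Pod is still scheduled onto some Linux node; if no Linux node exists, it stays Pending.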

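4.3 Expression operators

Affinity expressions use the following operators:

- In: the label's value is in a given list

- NotIn: the label's value is not in a given list

- Exists: the label exists

- DoesNotExist: the label does not exist

- Gt: the label's value is greater than a given value (both are parsed as integers; available for Node affinity only)

- Lt: the label's value is less than a given value (both are parsed as integers; available for Node affinity only)

None of these operators is an explicit "exclude this node" operator, but NotIn and DoesNotExist can be used to keep a Pod away from certain nodes, which achieves the same effect.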
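As a sketch of how the excluding operators and Gt look in a manifest: the example below keeps a Pod off nodes labeled `env=uat` and off control-plane nodes, and additionally requires a numeric label. The labels `env` and `node-role.kubernetes.io/control-plane` appear in this article's node output; the Pod name `operator-demo` and the `cpu-count` label are assumptions for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: operator-demo          # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        # Expressions inside one term are ANDed together.
        - matchExpressions:
          - key: env
            operator: NotIn    # exclude nodes whose env label is uat
            values:
            - uat
          - key: node-role.kubernetes.io/control-plane
            operator: DoesNotExist   # exclude control-plane nodes
          - key: cpu-count     # hypothetical label holding an integer
            operator: Gt
            values:
            - "4"              # exactly one value, written as a string
```

Multiple entries under `nodeSelectorTerms` are ORed, so adding a second term would widen the set of acceptable nodes rather than narrow it.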

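4.4 topologyKey as a scope

topologyKey is often translated literally as "topology key", but in essence it defines a scope.

topologyKey names a Node label key. All Nodes that have the same value for that label belong to the same scope (topology domain), and Pod affinity and anti-affinity rules are evaluated per scope. With kubernetes.io/hostname, for example, every node has a unique value, so each node is its own scope.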
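To illustrate a scope wider than a single node, the sketch below uses the well-known label `topology.kubernetes.io/zone` as the topologyKey, so the Pod only has to land in the same zone as its target, not on the same node. This assumes the cluster's nodes actually carry that label (the nodes shown in this article do not list it); the Pod name `zone-follower` and the `app: cache` label are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: zone-follower          # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            app: cache         # hypothetical label on the Pod to follow
        # Any node whose zone label value equals that of a node
        # running an app=cache Pod is acceptable.
        topologyKey: topology.kubernetes.io/zone
```

Replacing the topologyKey with `kubernetes.io/hostname` would tighten the same rule to "on the same node".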

5 Affinity and anti-affinity in practice

For more hands-on nodeName and nodeSelector scheduling, see these CSDN blog posts:

- Kubernetes系列-Pod的定向调度_当创建一个pod实例,是怎么调度到node节点上面的-CSDN博客

- Kubernetes系列-部署pod到集群中的指定node_kubectl 部署pod到某个节点-CSDN博客

5.1 nodeName scheduling

For example, to schedule a Pod onto the node named ops-worker-2:

    $ vim webapp.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp
      namespace: default
      labels:
        app: webapp
    spec:
      nodeName: ops-worker-2     # name of the target node
      containers:
      - name: webapp
        image: nginx
        ports:
        - containerPort: 80

    $ kubectl apply -f webapp.yaml
    pod/webapp created

    $ kubectl get pod -o wide
    NAME     READY   STATUS    RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
    webapp   1/1     Running   0          8s    172.25.50.142   ops-worker-2   <none>           <none>

5.2 nodeSelector scheduling

For example, to schedule a Pod onto a node that carries the label special-app=specialwebapp.

Add the label special-app=specialwebapp to node ops-worker-1:

    $ kubectl label node ops-worker-1 special-app=specialwebapp
    node/ops-worker-1 labeled

Check the node details:

    $ kubectl describe node ops-worker-1
    Name:               ops-worker-1
    Roles:              <none>
    Labels:             beta.kubernetes.io/arch=amd64
                        beta.kubernetes.io/os=linux
                        env=uat
                        kubernetes.io/arch=amd64
                        kubernetes.io/hostname=ops-worker-1
                        kubernetes.io/os=linux
                        special-app=specialwebapp
    Annotations:        kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                        node.alpha.kubernetes.io/ttl: 0
                        projectcalico.org/IPv4Address: 10.220.43.204/20
                        projectcalico.org/IPv4IPIPTunnelAddr: 172.25.78.64
                        volumes.kubernetes.io/controller-managed-attach-detach: true
    CreationTimestamp:  Sun, 17 Dec 2023 15:32:04 +0800
    Taints:             <none>
    Unschedulable:      false
    Lease:
      HolderIdentity:  ops-worker-1
      AcquireTime:     <unset>
      RenewTime:       Mon, 22 Jan 2024 21:59:33 +0800
    Conditions:
      Type                 Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
      ----                 ------  -----------------                 ------------------                ------                       -------
      NetworkUnavailable   False   Sun, 17 Dec 2023 15:32:48 +0800   Sun, 17 Dec 2023 15:32:48 +0800   CalicoIsUp                   Calico is running on this node
      MemoryPressure       False   Mon, 22 Jan 2024 21:59:30 +0800   Sun, 17 Dec 2023 15:32:04 +0800   KubeletHasSufficientMemory   kubelet has sufficient memory available
      DiskPressure         False   Mon, 22 Jan 2024 21:59:30 +0800   Sun, 17 Dec 2023 15:32:04 +0800   KubeletHasNoDiskPressure     kubelet has no disk pressure
      PIDPressure          False   Mon, 22 Jan 2024 21:59:30 +0800   Sun, 17 Dec 2023 15:32:04 +0800   KubeletHasSufficientPID      kubelet has sufficient PID available
      Ready                True    Mon, 22 Jan 2024 21:59:30 +0800   Sun, 17 Dec 2023 15:32:54 +0800   KubeletReady                 kubelet is posting ready status
    Addresses:
      InternalIP:  10.220.43.204
      Hostname:    ops-worker-1
    Capacity:
      cpu:                8
      ephemeral-storage:  103080204Ki
      hugepages-1Gi:      0
      hugepages-2Mi:      0
      memory:             15583444Ki
      pods:               110
    Allocatable:
      cpu:                8
      ephemeral-storage:  94998715850
      hugepages-1Gi:      0
      hugepages-2Mi:      0
      memory:             15481044Ki
      pods:               110
    System Info:
      Machine ID:                 c72f33a969d84fac8d6f7b35c035bafa
      System UUID:                e2ef28e5-4140-41a9-807d-78ecf09efb8d
      Boot ID:                    879480b6-2f5a-45e5-9b31-4c7aab3caa33
      Kernel Version:             4.19.91-27.6.al7.x86_64
      OS Image:                   Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle)
      Operating System:           linux
      Architecture:               amd64
      Container Runtime Version:  docker://20.10.21
      Kubelet Version:            v1.21.9
      Kube-Proxy Version:         v1.21.9
    PodCIDR:                      172.25.1.0/24
    PodCIDRs:                     172.25.1.0/24
    Non-terminated Pods:          (11 in total)
      Namespace    Name                                        CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
      ---------    ----                                        ------------  ----------  ---------------  -------------  ---
      default      nginx-node1-6c7874c7b8-q2swk                0 (0%)        0 (0%)      0 (0%)           0 (0%)         9d
      kube-system  calico-kube-controllers-5d4b78db86-gmvg5    0 (0%)        0 (0%)      0 (0%)           0 (0%)         36d
      kube-system  calico-kube-controllers-5d4b78db86-qvrnk    0 (0%)        0 (0%)      0 (0%)           0 (0%)         5d23h
      kube-system  calico-node-jk7zc                           250m (3%)     0 (0%)      0 (0%)           0 (0%)         36d
      kube-system  coredns-59d64cd4d4-zr4hd                    100m (1%)     0 (0%)      70Mi (0%)        170Mi (1%)     36d
      kube-system  kube-proxy-rm64j                            0 (0%)        0 (0%)      0 (0%)           0 (0%)         36d
      kube-system  metrics-server-54cc454bdd-ds4zp             0 (0%)        0 (0%)      0 (0%)           0 (0%)         12d
      kube-system  vpa-admission-controller-54d7b4896d-75g5d   50m (0%)      200m (2%)   200Mi (1%)       500Mi (3%)     8d
      kube-system  vpa-admission-controller-558664548-fbhzt    50m (0%)      200m (2%)   200Mi (1%)       500Mi (3%)     5d23h
      kube-system  vpa-recommender-84d88664b8-4kdn5            50m (0%)      200m (2%)   500Mi (3%)       1000Mi (6%)    12d
      kube-system  vpa-updater-5545848b57-lq5sf                50m (0%)      200m (2%)   500Mi (3%)       1000Mi (6%)    5d23h
    Allocated resources:
      (Total limits may be over 100 percent, i.e., overcommitted.)
      Resource           Requests     Limits
      --------           --------     ------
      cpu                550m (6%)    800m (10%)
      memory             1470Mi (9%)  3170Mi (20%)
      ephemeral-storage  0 (0%)       0 (0%)
      hugepages-1Gi      0 (0%)       0 (0%)
      hugepages-2Mi      0 (0%)       0 (0%)
    Events:              <none>

The Pod's YAML manifest:

    $ vim webapp2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp-2
      namespace: default
      labels:
        app: webapp-2
    spec:
      nodeSelector:
        special-app: specialwebapp   # only nodes carrying this label qualify
      containers:
      - name: webapp-2
        image: nginx
        ports:
        - containerPort: 80

    $ kubectl apply -f webapp2.yaml
    pod/webapp-2 created

Check which node the Pod was scheduled onto:

    $ kubectl get pod -o wide
    NAME       READY   STATUS              RESTARTS   AGE   IP       NODE           NOMINATED NODE   READINESS GATES
    webapp-2   0/1     ContainerCreating   0          11s   <none>   ops-worker-1   <none>           <none>

The Pod was scheduled onto the node labeled special-app=specialwebapp.

5.3 Node affinity scheduling

Node affinity scheduling concerns the relationship between a Pod and Nodes.

5.3.1 Hard affinity

Define the hard Node affinity manifest, pod_node_required_affinity.yaml, with the following content:

    $ vim pod_node_required_affinity.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp-3
      namespace: default
      labels:
        app: webapp-3
    spec:
      containers:
      - name: webapp-3
        image: nginx
        ports:
        - containerPort: 80
      affinity:
        nodeAffinity:
          # Hard rule: the node must carry the label app=backend.
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: app
                operator: In
                values:
                - backend

    $ kubectl apply -f pod_node_required_affinity.yaml
    pod/webapp-3 created

Add the label to node ops-master-3 (until some node carries app=backend, the Pod stays Pending):

    $ kubectl label node ops-master-3 app=backend
    node/ops-master-3 labeled

Check the labels on node ops-master-3:

    $ kubectl get node ops-master-3 --show-labels
    NAME           STATUS   ROLES                  AGE   VERSION   LABELS
    ops-master-3   Ready    control-plane,master   36d   v1.21.9   app=backend,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,env=uat,kubernetes.io/arch=amd64,kubernetes.io/hostname=ops-master-3,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

Check the scheduling result:

    $ kubectl get pod -o wide
    NAME       READY   STATUS    RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
    webapp-3   1/1     Running   0          90s   172.25.186.68   ops-master-3   <none>           <none>

5.3.2 Soft affinity

Soft affinity scheduling mainly adds multiple rules, each with its own weight. The YAML manifest is as follows:

    $ vim pod_node_preferred_affinity.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp-4
      namespace: default
      labels:
        app: webapp-4
    spec:
      containers:
      - name: webapp-4
        image: nginx
        ports:
        - containerPort: 80
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          # Higher-weighted preference: the node has any app2 label.
          - weight: 80
            preference:
              matchExpressions:
              - key: app2
                operator: Exists
          # Lower-weighted preference: the node is labeled app=backend2.
          - weight: 20
            preference:
              matchExpressions:
              - key: app
                operator: In
                values:
                - backend2

Label node ops-master-2 with app2=backend:

    $ kubectl label node ops-master-2 app2=backend
    node/ops-master-2 labeled

    $ kubectl apply -f pod_node_preferred_affinity.yaml
    pod/webapp-4 created

    $ kubectl get pod -o wide
    NAME       READY   STATUS    RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
    webapp-4   1/1     Running   0          5s    172.25.78.133   ops-master-2   <none>           <none>

The Pod was scheduled onto ops-master-2, which satisfies the preference with weight 80.

5.4 Pod affinity scheduling

Pod affinity scheduling concerns the relationship between Pods.

5.4.1 Hard affinity

For example, if Pod1 follows Pod2 and Pod2 is scheduled to node B, then Pod1 is also scheduled to node B.

This requires two Pods. The first is webapp-3 from the Node hard-affinity example above, which was scheduled onto ops-master-3. Now deploy a second Pod, webapp-5, that follows webapp-3 and should therefore also land on ops-master-3.

Prepare the YAML manifest for webapp-5, pod_pod_required_affinity.yaml:

    $ vim pod_pod_required_affinity.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp-5
      namespace: default
      labels:
        app: webapp-5
    spec:
      containers:
      - name: webapp-5
        image: nginx
        ports:
        - containerPort: 80
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          # Must run on the same node (hostname scope) as a Pod labeled app=webapp-3.
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webapp-3

    $ kubectl apply -f pod_pod_required_affinity.yaml
    pod/webapp-5 created

Check the scheduling result:

    $ kubectl get pod -o wide
    NAME       READY   STATUS    RESTARTS   AGE     IP              NODE           NOMINATED NODE   READINESS GATES
    webapp-3   1/1     Running   0          18m     172.25.186.68   ops-master-3   <none>           <none>
    webapp-4   1/1     Running   0          4m51s   172.25.78.133   ops-master-2   <none>           <none>
    webapp-5   1/1     Running   0          8s      172.25.186.69   ops-master-3   <none>           <none>

webapp-3 and webapp-5 were scheduled onto the same node.

5.4.2 Soft affinity

Soft affinity is configured like hard affinity, with the addition of weights.

    $ vim webapp-6.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp-6
      namespace: default
      labels:
        app: webapp-6
    spec:
      containers:
      - name: webapp-6
        image: nginx
        ports:
        - containerPort: 80
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          # Prefer nodes that already run a Pod with an app2 label.
          # labelSelector matches Pod labels, not Node labels.
          - weight: 40
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app2
                  operator: Exists
              topologyKey: kubernetes.io/hostname
          # Prefer, more strongly, nodes that already run a Pod labeled app=webapp-4.
          - weight: 60
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - webapp-4
              topologyKey: kubernetes.io/hostname

    $ kubectl apply -f webapp-6.yaml
    pod/webapp-6 created

    $ kubectl get pod -o wide
    NAME                           READY   STATUS              RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
    nginx-node1-6c7874c7b8-d6cnw   1/1     Running             0          6d    172.25.78.131   ops-master-2   <none>           <none>
    nginx-node1-6c7874c7b8-q2swk   1/1     Running             0          9d    172.25.78.80    ops-worker-1   <none>           <none>
    nginx-test-6b7c99bbb-b6smk     0/1     Pending             0          6d    <none>          <none>         <none>           <none>
    nginx-test-6b7c99bbb-jd5xt     0/1     Pending             0          6d    <none>          <none>         <none>           <none>
    webapp                         1/1     Running             0          63m   172.25.50.142   ops-worker-2   <none>           <none>
    webapp-1                       1/1     Running             0          56m   172.25.50.143   ops-worker-2   <none>           <none>
    webapp-2                       1/1     Running             0          51m   172.25.78.85    ops-worker-1   <none>           <none>
    webapp-3                       1/1     Running             0          46m   172.25.186.68   ops-master-3   <none>           <none>
    webapp-4                       1/1     Running             0          33m   172.25.78.133   ops-master-2   <none>           <none>
    webapp-5                       1/1     Running             0          28m   172.25.186.69   ops-master-3   <none>           <none>
    webapp-6                       0/1     ContainerCreating   0          3s    <none>          ops-master-2   <none>           <none>

webapp-6 was scheduled onto ops-master-2, the node where webapp-4 runs.

5.5 Pod anti-affinity scheduling

5.5.1 Hard anti-affinity

    $ vim webapp-8.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp-8
      namespace: default
      labels:
        app: webapp-8
    spec:
      containers:
      - name: webapp-8
        image: nginx
        ports:
        - containerPort: 80
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          # Must not run on a node that already runs a Pod labeled app=webapp.
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webapp

    $ kubectl apply -f webapp-8.yaml
    pod/webapp-8 created

    $ kubectl get pod -o wide
    NAME                           READY   STATUS    RESTARTS   AGE     IP              NODE           NOMINATED NODE   READINESS GATES
    nginx-node1-6c7874c7b8-d6cnw   1/1     Running   0          6d      172.25.78.131   ops-master-2   <none>           <none>
    nginx-node1-6c7874c7b8-q2swk   1/1     Running   0          9d      172.25.78.80    ops-worker-1   <none>           <none>
    nginx-test-6b7c99bbb-b6smk     0/1     Pending   0          6d      <none>          <none>         <none>           <none>
    nginx-test-6b7c99bbb-jd5xt     0/1     Pending   0          6d      <none>          <none>         <none>           <none>
    webapp                         1/1     Running   0          66m     172.25.50.142   ops-worker-2   <none>           <none>
    webapp-1                       1/1     Running   0          59m     172.25.50.143   ops-worker-2   <none>           <none>
    webapp-2                       1/1     Running   0          55m     172.25.78.85    ops-worker-1   <none>           <none>
    webapp-3                       1/1     Running   0          49m     172.25.186.68   ops-master-3   <none>           <none>
    webapp-4                       1/1     Running   0          36m     172.25.78.133   ops-master-2   <none>           <none>
    webapp-5                       1/1     Running   0          31m     172.25.186.69   ops-master-3   <none>           <none>
    webapp-6                       1/1     Running   0          3m13s   172.25.78.134   ops-master-2   <none>           <none>
    webapp-8                       1/1     Running   0          5s      172.25.186.78   ops-master-3   <none>           <none>

webapp-8 was not scheduled onto the same node as webapp (ops-worker-2).

5.5.2 Soft anti-affinity

Soft anti-affinity is configured like hard anti-affinity, with the addition of weights. It is not tested here.
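For reference, a soft anti-affinity manifest might look like the sketch below. It was not run as part of this article: the Pod name `webapp-9` is hypothetical, and the rule simply mirrors the hard anti-affinity example (avoid nodes that run a Pod labeled app=webapp), expressed as a preference.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-9               # hypothetical name
  namespace: default
  labels:
    app: webapp-9
spec:
  containers:
  - name: webapp-9
    image: nginx
    ports:
    - containerPort: 80
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100            # valid range is 1 to 100
        podAffinityTerm:
          topologyKey: kubernetes.io/hostname
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - webapp         # try to stay off nodes running app=webapp Pods
```

Unlike the hard form, this Pod can still be placed next to webapp when no other node is available.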
