This article covers part 8 of a hands-on Python web-scraping series: fetching the Hupu (虎pu) hot list.

1. Required libraries

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
```
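The scraper depends on three third-party packages. In addition, `DataFrame.to_excel` needs an Excel writer engine such as openpyxl to produce `.xlsx` files. The sketch below reports which of these packages are missing before the script runs. The helper name `missing_packages` is hypothetical and not part of the original.

```python
import importlib.util

# Map each import name to the pip package that provides it
REQUIRED = {
    "requests": "requests",
    "bs4": "beautifulsoup4",
    "pandas": "pandas",
    "openpyxl": "openpyxl",  # engine pandas can use to write .xlsx files
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose top-level module cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Install first: pip install " + " ".join(missing))
    else:
        print("All dependencies are available.")
```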

2. Requesting the page

```python
def fetch_data():
    url = "https://bbs.xxx.com/"  # Replace with the actual base URL
    # A timeout keeps the script from hanging if the server never answers
    response = requests.get(url, timeout=10)
    if response.status_code == 200:
        return response.content
    else:
        print(f"Error fetching data. Status code: {response.status_code}")
        return None
```
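Sites often throttle or reject bare scripted requests. One possible variation sends the request through a `requests.Session` that retries transient 429/5xx responses using urllib3's `Retry`. It assumes the target accepts a browser-like `User-Agent` and benefits from retries, though the original does not confirm either point. The function names `make_session` and `fetch_with_session` and the header value are illustrative only.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(retries=3):
    """Build a Session that retries transient failures with exponential backoff."""
    session = requests.Session()
    # Illustrative browser-like header; adjust to whatever the site expects
    session.headers.update({"User-Agent": "Mozilla/5.0 (compatible; hot-list-demo)"})
    retry = Retry(
        total=retries,
        backoff_factor=0.5,  # exponential backoff between attempts
        status_forcelist=(429, 500, 502, 503, 504),
    )
    adapter = HTTPAdapter(max_retries=retry)
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session


def fetch_with_session(url, session=None, timeout=10):
    """Return the page bytes, or None on a non-200 response or request error."""
    session = session or make_session()
    try:
        response = session.get(url, timeout=timeout)
    except requests.RequestException as exc:
        # Covers connection errors, timeouts and exhausted retries
        print(f"Request failed: {exc}")
        return None
    if response.status_code != 200:
        print(f"Error fetching data. Status code: {response.status_code}")
        return None
    return response.content
```

Reusing a single session across several requests also keeps the connection alive, which is gentler on the server than opening a new one each time.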

3. Parsing the HTML

```python
def parse_html(html_content, base_url):
    soup = BeautifulSoup(html_content, 'html.parser')
    # The hot list lives in the first <div class="text-list-model"> on the page
    items = soup.find_all('div', class_='text-list-model')
    if not items:
        return []
    first_item = items[0]
    data = []
    # Only direct child tags; bare text nodes such as whitespace are skipped
    for item in first_item.find_all(recursive=False):
        if item.select_one('.t-title') is None:
            continue  # not a hot-list entry
        title = item.select_one('.t-title').text.strip()
        relative_url = item.select_one('a')['href']
        full_url = base_url + relative_url
        lights = item.select_one('.t-lights').text.strip()
        replies = item.select_one('.t-replies').text.strip()
        data.append({
            'Title': title,
            'URL': full_url,
            'Lights': lights,
            'Replies': replies,
        })
    return data
```

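Note: after inspecting the page's tags, I added a check that skips child elements that are not hot-list entries, meaning those without a `.t-title` element.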
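The page markup can change at any time, so it helps to test the skip logic offline. The fragment below is a hypothetical reconstruction that reuses only the class names from `parse_html` (`text-list-model`, `t-title`, `t-lights`, `t-replies`). The wrapper tags, the ad `<div>` and the `bbs.example.com` URL are invented for illustration. This sketch also replaces string concatenation with `urllib.parse.urljoin`, which handles both relative and absolute `href` values.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hypothetical markup: two entries plus an ad block that has no .t-title
SAMPLE_HTML = """
<div class="text-list-model">
  <div class="list-item"><a href="/101.html"><span class="t-title"> Post A </span></a>
    <span class="t-lights">120</span><span class="t-replies">45</span></div>
  <div class="ad-slot">sponsored</div>
  <div class="list-item"><a href="https://bbs.example.com/102.html"><span class="t-title">Post B</span></a>
    <span class="t-lights">80</span><span class="t-replies">12</span></div>
</div>
"""


def text_of(item, selector):
    """Stripped text of the first match, or an empty string if absent."""
    node = item.select_one(selector)
    return node.get_text(strip=True) if node is not None else ""


def parse_items(html, base_url):
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", class_="text-list-model")
    if container is None:
        return []
    rows = []
    # recursive=False yields only direct child tags, never whitespace strings
    for item in container.find_all(recursive=False):
        if item.select_one(".t-title") is None:  # e.g. the ad slot
            continue
        link = item.select_one("a")
        rows.append({
            "Title": text_of(item, ".t-title"),
            "URL": urljoin(base_url, link["href"]) if link else None,
            "Lights": text_of(item, ".t-lights"),
            "Replies": text_of(item, ".t-replies"),
        })
    return rows


if __name__ == "__main__":
    for row in parse_items(SAMPLE_HTML, "https://bbs.example.com"):
        print(row)
```

On this sample the ad slot is dropped and both URLs resolve to `https://bbs.example.com/...`, whether the `href` was relative or absolute.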

4. Exporting to a spreadsheet

```python
def create_excel(data):
    df = pd.DataFrame(data)
    # Writing .xlsx needs an Excel writer engine such as openpyxl installed
    df.to_excel('hupu-top.xlsx', index=False)
    print("Excel file created successfully.")
```

Test run:

```python
base_url = "https://bbs.xx.com"  # Replace with the Hupu homepage URL
html_content = fetch_data()
if html_content:
    forum_data = parse_html(html_content, base_url)
    create_excel(forum_data)
else:
    print("Failed to create Excel file.")
```
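The scraped `Lights` and `Replies` values are strings, so Excel sorts them as text rather than as numbers. One possible refinement converts them to numbers and sorts by `Lights` before exporting, assuming each value contains a plain integer that may carry a text suffix. The sketch also shows a CSV alternative written with the `utf-8-sig` encoding, whose byte-order mark helps Excel recognize the file as UTF-8 and display Chinese titles correctly. `tidy_for_export` and `export_csv` are hypothetical helper names.

```python
import pandas as pd


def tidy_for_export(data):
    """Turn scraped rows into a DataFrame sorted by Lights, highest first."""
    df = pd.DataFrame(data)
    for col in ("Lights", "Replies"):
        # Keep the first run of digits; values without digits become NaN
        digits = df[col].astype(str).str.extract(r"(\d+)", expand=False)
        df[col] = pd.to_numeric(digits, errors="coerce")
    return df.sort_values("Lights", ascending=False, ignore_index=True)


def export_csv(df, path="hupu-top.csv"):
    """Write a CSV with a UTF-8 BOM so Excel detects the encoding."""
    df.to_csv(path, index=False, encoding="utf-8-sig")
    return path
```

In the script above, `create_excel(tidy_for_export(forum_data))` would write the sorted version to the same `.xlsx` file. `export_csv` avoids the Excel-engine dependency altogether.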

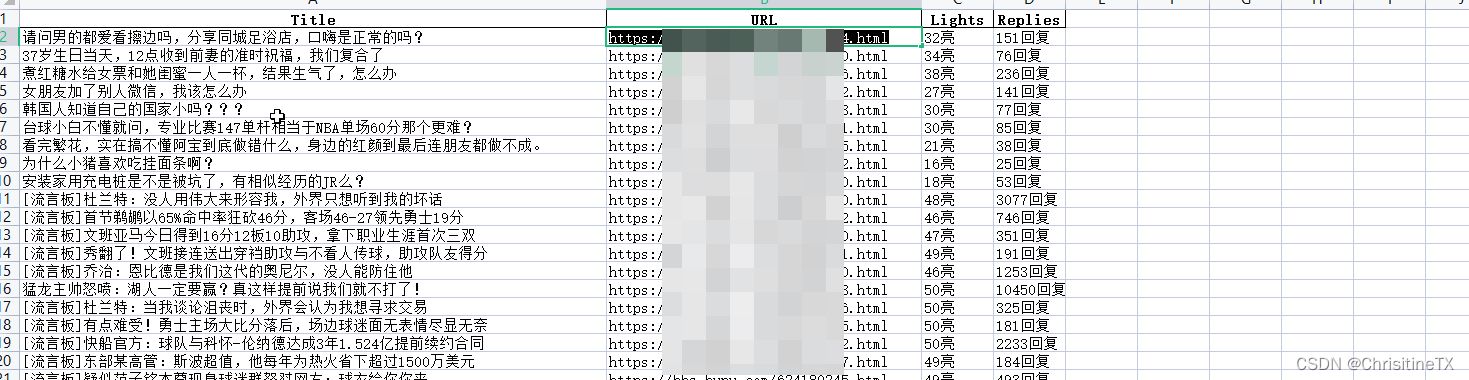

5. 成果展示
