This article covers Big Data Real-Time Computing, Spark Study Notes (10): Spark SQL (2), operating on tables through JDBC.

1 JDBC access in Spark SQL

- Add the dependency to the POM file

<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.38</version>
</dependency>

1.1 Querying the database

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

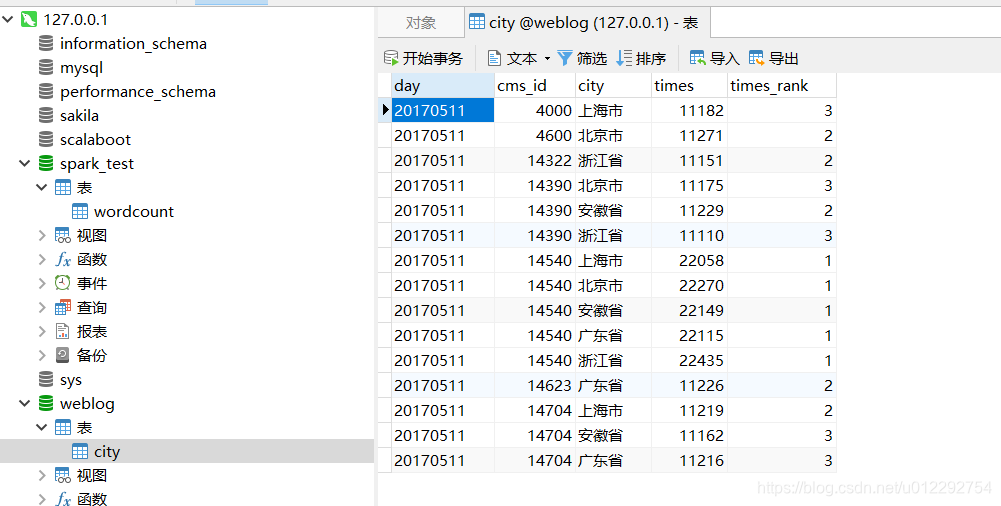

import org.apache.spark.sql.SparkSession;public class SQLJDBCJava {public static void main(String[] args) {SparkSession session = SparkSession.builder().appName("JDBC").config("spark.master","local[2]").getOrCreate();String url = "jdbc:mysql://localhost:3306/weblog";String table = "city";Dataset<Row> df= session.read().format("jdbc").option("url", url).option("dbtable",table).option("user", "root").option("password", "root").option("driver","com.mysql.jdbc.Driver").load();df.show();}

}
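The read options above can also be collected in one place. The following is a minimal sketch, assuming a hypothetical helper class `JdbcReadOptions` that is not part of the original notes. It builds the same five options as a map using only the Java standard library. Spark's `DataFrameReader` accepts such a map through `options(java.util.Map)`, so passing it should be equivalent to the chained `option(...)` calls.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration; not from the original notes.
final class JdbcReadOptions {

    // Builds the option map used by the JDBC data source in the query example.
    static Map<String, String> forTable(String url, String table,
                                        String user, String password) {
        Map<String, String> options = new LinkedHashMap<>();
        options.put("url", url);
        options.put("dbtable", table);
        options.put("user", user);
        options.put("password", password);
        // Driver class name used in the original example (mysql-connector-java 5.1.38)
        options.put("driver", "com.mysql.jdbc.Driver");
        return options;
    }

    public static void main(String[] args) {
        Map<String, String> options =
                forTable("jdbc:mysql://localhost:3306/weblog", "city", "root", "root");
        // With Spark on the classpath, the map would be passed as:
        //   session.read().format("jdbc").options(options).load();
        options.forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

Keeping the options in a map makes it easier to reuse the same connection settings for several tables, since only `dbtable` changes between reads.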

1.2 Writing data to the database

import org.apache.spark.sql.Column;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

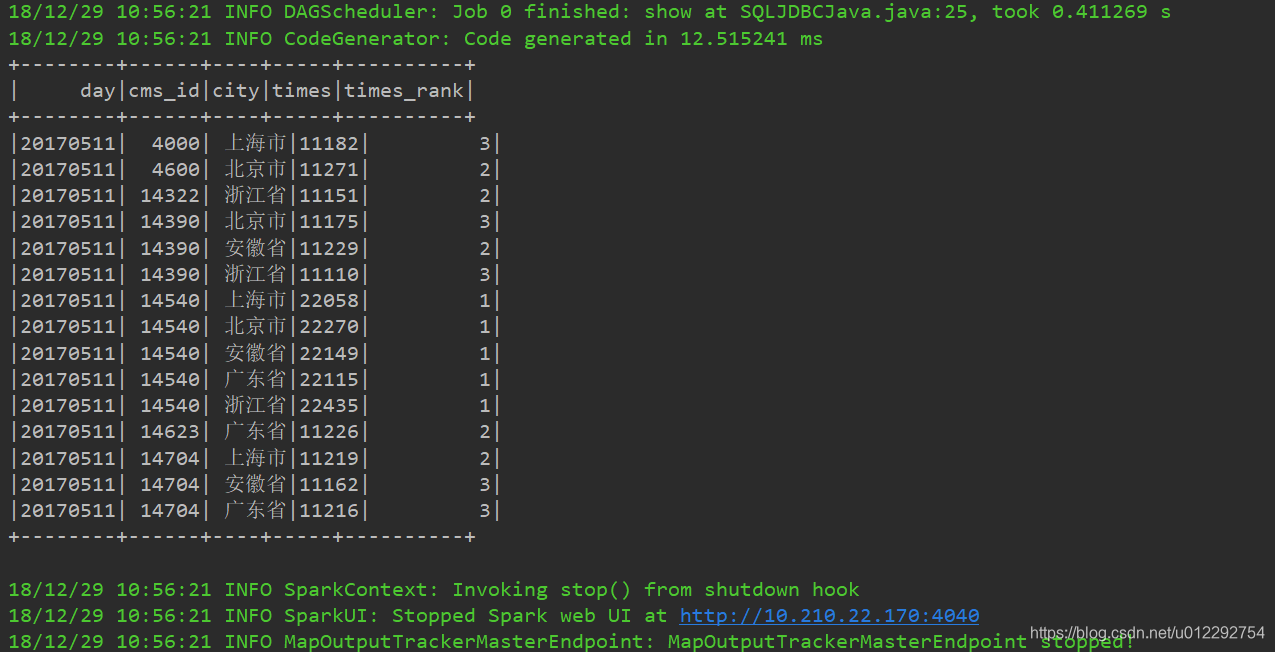

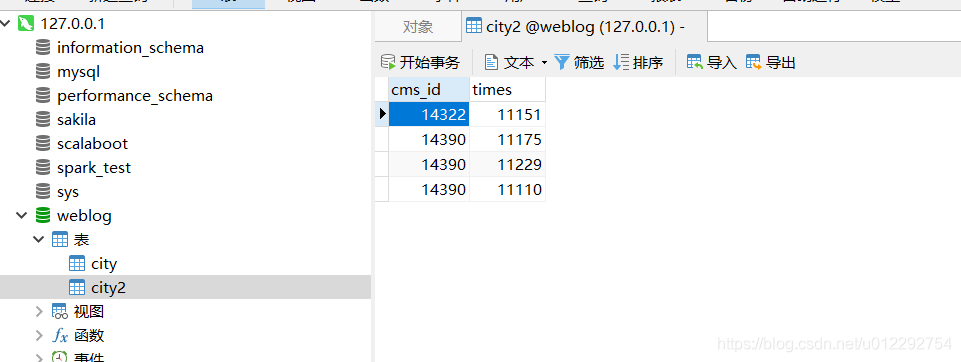

import org.apache.spark.sql.SparkSession;import java.util.Properties;public class SQLJDBCJava {public static void main(String[] args) {SparkSession session = SparkSession.builder().appName("JDBC").config("spark.master","local[2]").getOrCreate();String url = "jdbc:mysql://localhost:3306/weblog?useSSL=false";String table = "city";Dataset<Row> df= session.read().format("jdbc").option("url", url).option("dbtable",table).option("user", "root").option("password", "root").option("driver","com.mysql.jdbc.Driver").load();//df.show();//投影查询Dataset<Row> df2 = df.select(new Column("cms_id"),new Column("times"));df2 = df2.where("cms_id like '143%'");Properties prop = new Properties();prop.put("user","root");prop.put("password","root");prop.put("driver","com.mysql.jdbc.Driver");//写入df2.write().jdbc(url,"city2",prop);df2.show();}

}
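The write example appends `?useSSL=false` to the JDBC URL and passes the credentials through `java.util.Properties`. The following is a minimal sketch of both steps, assuming a hypothetical helper class `JdbcWriteConfig` that is not from the original notes and uses only the standard library. The resulting values would be passed to `write().jdbc(url, "city2", props)` as in the code above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;
import java.util.StringJoiner;

// Hypothetical helper for illustration; not from the original notes.
final class JdbcWriteConfig {

    // Appends query parameters to a base JDBC URL, e.g. "?useSSL=false".
    // Assumes baseUrl has no query string yet and values need no URL encoding.
    static String withParams(String baseUrl, Map<String, String> params) {
        if (params.isEmpty()) {
            return baseUrl;
        }
        StringJoiner query = new StringJoiner("&", "?", "");
        params.forEach((key, value) -> query.add(key + "=" + value));
        return baseUrl + query;
    }

    // Builds the connection properties expected by write().jdbc(...).
    static Properties connectionProperties(String user, String password, String driver) {
        Properties props = new Properties();
        props.setProperty("user", user);
        props.setProperty("password", password);
        props.setProperty("driver", driver);
        return props;
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("useSSL", "false");
        String url = withParams("jdbc:mysql://localhost:3306/weblog", params);
        Properties props = connectionProperties("root", "root", "com.mysql.jdbc.Driver");

        System.out.println(url); // prints jdbc:mysql://localhost:3306/weblog?useSSL=false
        System.out.println(props.getProperty("driver")); // prints com.mysql.jdbc.Driver
    }
}
```

`setProperty` is used here instead of `put` because it restricts keys and values to strings, which is what JDBC drivers read from a `Properties` object.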
