1. Libraries needed for scraping the data

    import requests
    import re
    from bs4 import BeautifulSoup
    import jieba.analyse
    from PIL import Image, ImageSequence
    import numpy as np
    import matplotlib.pyplot as plt
    from wordcloud import WordCloud, ImageColorGenerator

2. Installing the wordcloud library may fail with an error

There are two ways to fix it:

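- Follow the hint in the error message and download the VC++ build tools from the official website. The drawback is that the installation is very large, taking up several GB of disk space.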

- Download the whl file from https://www.lfd.uci.edu/~gohlke/pythonlibs/#wordcloud, choosing the one that matches your Python version and whether your system is 32-bit or 64-bit.
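To pick the right whl file you need to know two things about your Python installation: its version and its bitness. The snippet below is a small sketch that prints both using only the standard library. The helper name `wheel_tags` is my own, and the `cp` prefix assumes the standard CPython interpreter. Note that what matters is the bitness of the Python interpreter itself, which can be 32-bit even on a 64-bit system.

```python
import struct
import sys


def wheel_tags():
    """Return (python_tag, bits) for the running interpreter.

    python_tag looks like 'cp37' (assumes CPython); bits is 32 or 64.
    """
    python_tag = "cp%d%d" % (sys.version_info.major, sys.version_info.minor)
    # Size of a C pointer in bytes, times 8, gives the interpreter's bitness.
    bits = struct.calcsize("P") * 8
    return python_tag, bits


if __name__ == "__main__":
    tag, bits = wheel_tags()
    print("Python tag:", tag)
    print("Interpreter bitness:", bits)
```

Look for a file name on the download page that contains the printed tag, with `win32` for a 32-bit interpreter or `win_amd64` for a 64-bit one, and install the downloaded file with pip.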

3. The basic approach

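Inspect the site's HTML nodes, scrape the titles and article pages of Hupu NBA news, save the scraped content to a txt file, run word segmentation on it, and generate a word cloud.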
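Before hitting the real site, it can help to check the selector logic offline. The sketch below runs the list-page step (find each `li` that contains an `h4`, then take the title and link) against a small hand-written HTML string. The sample markup, the example.com URLs, and the helper name `extract_links` are all made up for illustration; the real Hupu page structure may differ.

```python
from bs4 import BeautifulSoup

# Hand-written sample that imitates a news list page (not real Hupu markup).
SAMPLE_HTML = """
<ul>
  <li><h4><a href="https://example.com/news/1.html">Sample headline one</a></h4></li>
  <li><span>an item without a headline</span></li>
  <li><h4><a href="https://example.com/news/2.html">Sample headline two</a></h4></li>
</ul>
"""


def extract_links(html):
    """Return a list of (title, href) pairs for every <li> that holds an <h4> link."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for item in soup.select("li"):
        heading = item.find("h4")
        # Skip list items that are not news entries.
        if heading is None or heading.a is None:
            continue
        results.append((heading.text.strip(), heading.a.get("href")))
    return results


if __name__ == "__main__":
    for title, href in extract_links(SAMPLE_HTML):
        print(title, href)
```

Skipping items without an `h4` plays the same role as the `len(news.select('h4')) > 0` check in the full script.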

In total I scraped about 12,000 news items, roughly three million characters (I did not expect it to be that much at first).

    import requests
    import re
    from bs4 import BeautifulSoup
    import jieba.analyse
    from PIL import Image, ImageSequence
    import numpy as np
    import matplotlib.pyplot as plt
    from wordcloud import WordCloud, ImageColorGenerator

    url = 'https://voice.hupu.com/nba/1'
    # Scraped titles and article bodies are appended to this file,
    # and the word cloud step reads from it.
    text_file = 'dongman.txt'


    def save_page(list_url, count):
        """Scrape every article linked from one list page and return the running count."""
        reslist = requests.get(list_url)
        reslist.encoding = 'utf-8'
        soup_list = BeautifulSoup(reslist.text, 'html.parser')
        for news in soup_list.select('li'):
            # Only list items that contain an h4 are news entries
            if len(news.select('h4')) > 0:
                count = count + 1
                # Title and link of the article
                title = news.find('h4').text
                href = news.find('h4').a['href']
                res = requests.get(href)
                res.encoding = 'utf-8'
                soup = BeautifulSoup(res.text, 'html.parser')
                context = soup.select('div .artical-main-content')[0].text
                with open(text_file, 'a', encoding='utf-8') as f:
                    f.write(title)
                    f.write(context)
                print("Article title: " + title)
                print(context)
                print(count)
        return count


    # Get the titles and contents of the first 12,000 Hupu NBA news items
    def AlltitleAndUrl(url):
        # First page
        j = save_page(url, 0)
        # Remaining pages
        for i in range(2, 201):
            nexturl = 'https://voice.hupu.com/nba/%s' % i
            j = save_page(nexturl, j)


    def getWord():
        # Read the whole scraped text in one go so it can be analysed
        with open(text_file, 'r', encoding='utf-8') as f:
            lyric = f.read()
        # Extract keywords together with their weights
        result = jieba.analyse.textrank(lyric, topK=2000, withWeight=True)
        keywords = dict()
        for i in result:
            keywords[i[0]] = i[1]
        print(keywords)
        # Load the template image that shapes the word cloud
        image = Image.open('body.png')
        graph = np.array(image)
        # Configure the word cloud
        wc = WordCloud(font_path='./fonts/simhei.ttf', background_color='White', max_words=230, mask=graph,
                       random_state=30, scale=1.5)
        wc.generate_from_frequencies(keywords)
        # Recolor the words using the colors of the template image
        image_color = ImageColorGenerator(graph)
        plt.imshow(wc.recolor(color_func=image_color))
        plt.axis("off")
        plt.show()
        wc.to_file('dream.png')


    # Scrape first, then build the word cloud from the saved text
    AlltitleAndUrl(url)
    getWord()

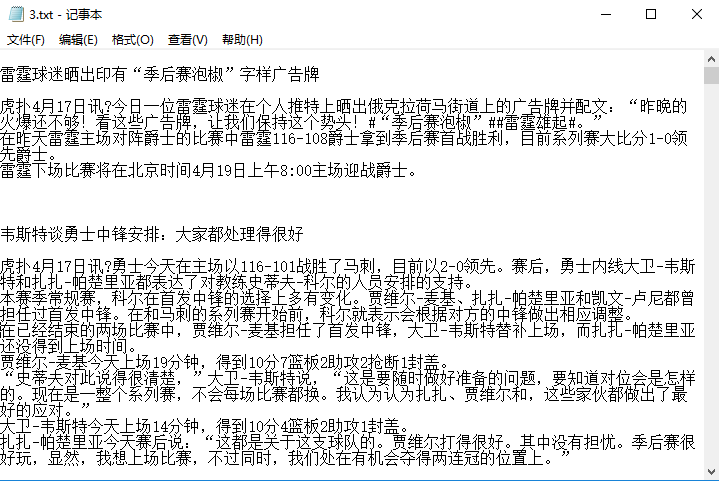

Screenshot of the data:

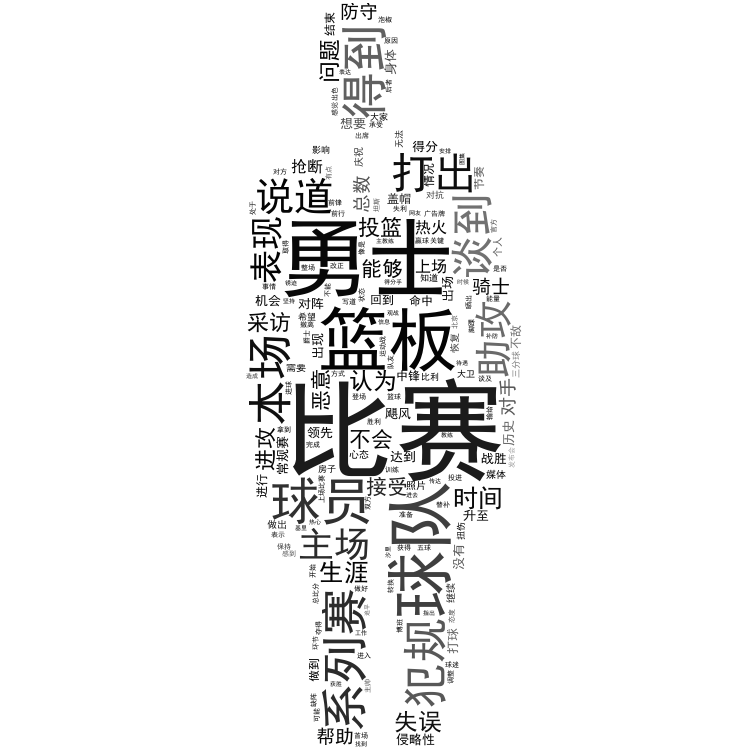

Screenshot of the result: