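This article walks through the source code that decides how Hive on Tez divides the map stage into tasks, that is, how the number of map tasks is determined.

In Hive on Tez, the way the map and reduce stages are divided into tasks matters a great deal for SQL tuning and batch jobs. During tuning we often run into an unreasonable number of map tasks (too few or too many), or skewed run times across the map tasks.

The difficulty is that the Tez engine has its own map task grouping logic. Unlike the MR or Spark engines, which derive tasks directly from the file split strategy, Tez regroups the splits, so in production the resulting number of map tasks can be hard to control.

This article analyzes, from the source code, how Hive on Tez divides the map stage into tasks. Several of the parameters that appear along the way can be used for tuning, for example tez.grouping.max-size, tez.grouping.min-size, tez.grouping.split-count, tez.grouping.by-length, and tez.grouping.by-count.

The map task grouping logic for Hive on Tez lives in the Tez source code. The overall implementation is as follows:

(1) In the Tez source, map task grouping is implemented by the TezSplitGrouper class, in the method getGroupedSplits.

(2) The corresponding unit test class in the Tez source is TestGroupedSplits.java.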

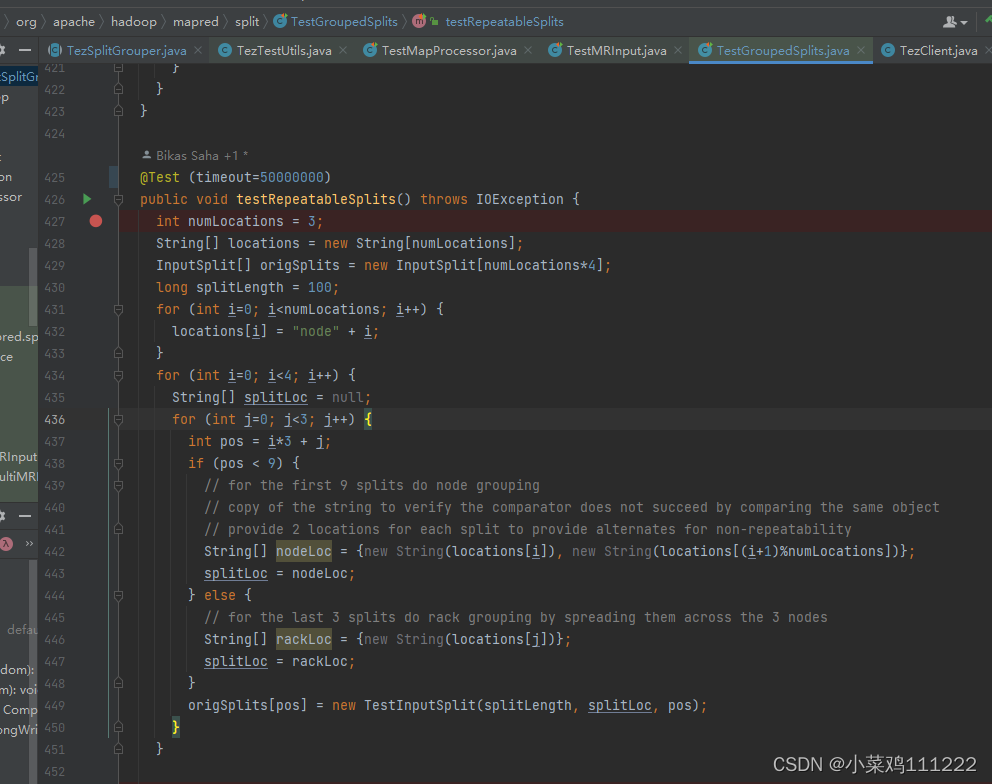

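(3) Pick testRepeatableSplits from that unit test class and run it, as shown in the figures below.

(4) The test data can be constructed freely: how many file directories there are, the file split paths, the replica locations, the file sizes, the racks, and so on.

(5) The data setup in the test uses 3 nodes and builds TestInputSplit objects to represent the data files; these split objects are the origSplits.

(6) Start the getGroupedSplits test: the conf sets tez.grouping.min-size and tez.grouping.max-size to 300 and 3000 and tez.grouping.rack-split-reduction to 1, and then getGroupedSplits is called.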

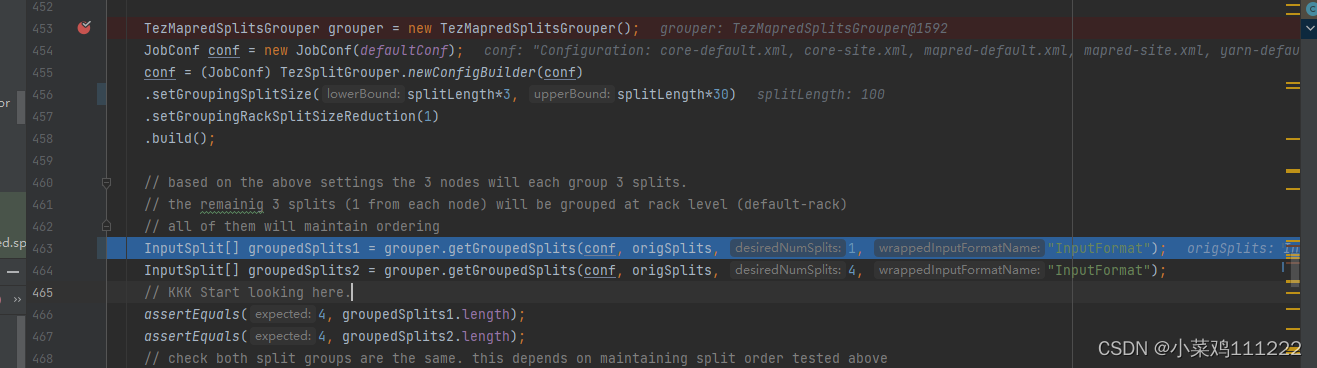

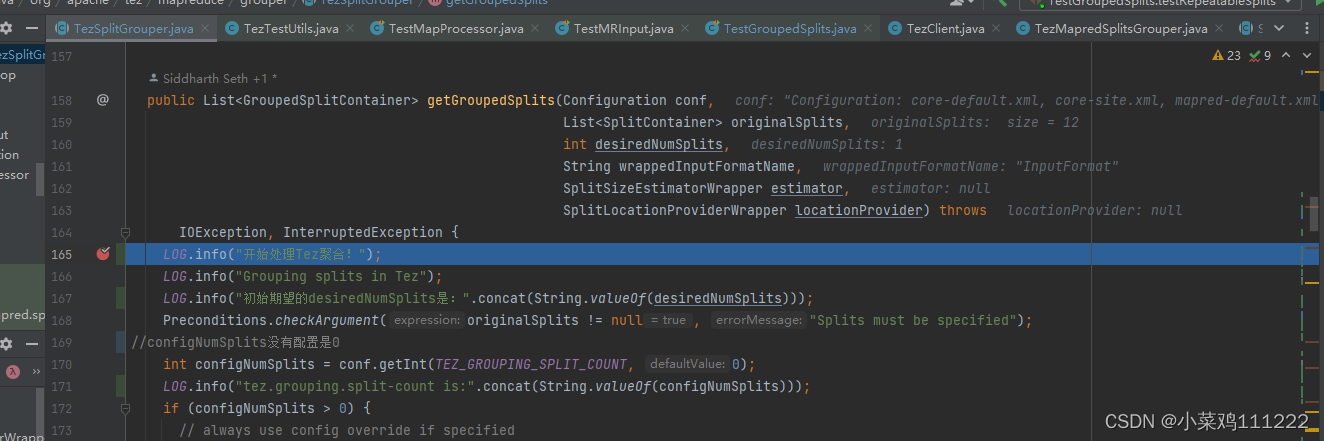

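(7) Execution enters TezSplitGrouper and runs the getGroupedSplits logic.

(8) Initially desiredNumSplits is passed in as 1. tez.grouping.split-count is not set, so it defaults to 0 and does not override desiredNumSplits.

(9) Iterate over the splits and record each split's HDFS locations as keys in distinctLocations. This amounts to finding out which nodes the files are spread across.

(10) In the same pass, accumulate the split sizes into totalLength, which is an important value, and then compute the average size per group, lengthPerGroup, from the current desiredNumSplits.

(11) Compare that average with the configured tez.grouping.min-size and tez.grouping.max-size. If it is too large or too small, desiredNumSplits is recalculated so that lengthPerGroup falls between min-size and max-size.

(12) Compute a few more values, such as the average number of splits per node (numSplitsPerLocation) and the average number of splits per group (numSplitsInGroup).

(13) With the node as the key, add each split to that node's entry in distinctLocations. A split with several replicas therefore appears under several nodes.

(14) Read the parameters that decide whether grouping is done by length or by count: tez.grouping.by-length and tez.grouping.by-count. At least one of the two must be true.

There is also the parameter tez.grouping.node.local.only. Judging from the loop in the listing at the end of this article, when it is true and a pass produces no full node-local group, small groups are allowed on the nodes first instead of falling back to rack-level grouping right away.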

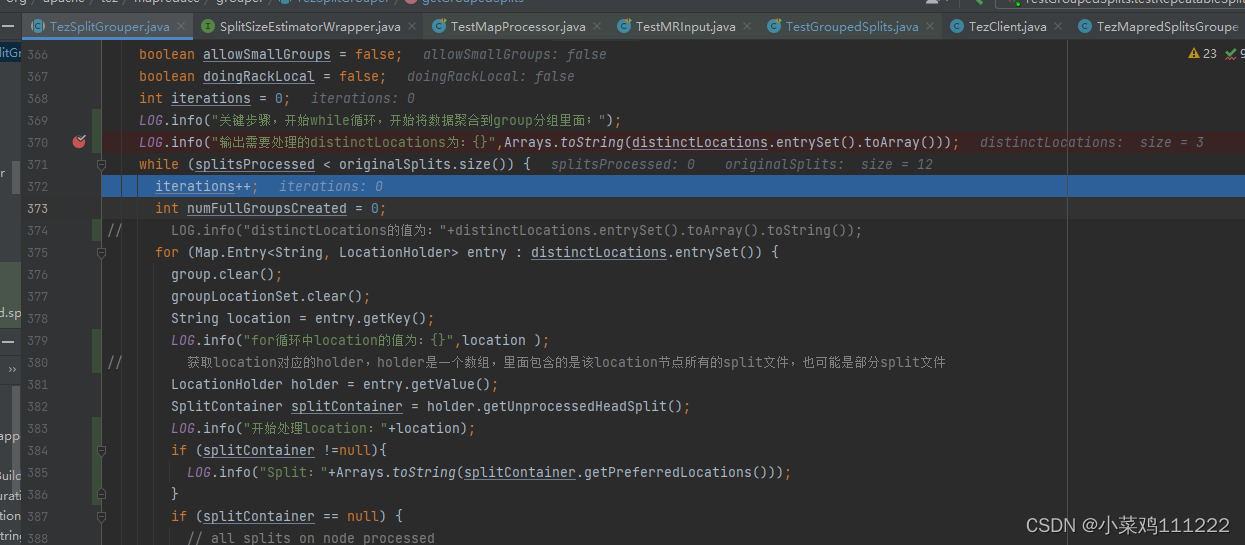

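(15) Next comes the key step: a while loop that keeps grouping splits until every split has been processed. The corresponding code is in the figure below and in the full listing at the end of this article.

(16) The loop visits the nodes one by one and, for each node, walks through the splits that have not been processed yet. The walk stops in several cases:

All split containers on this node have been visited.

Adding the next split would push the accumulated length (groupLength) past lengthPerGroup, or the number of splits in the group (groupNumSplits) past numSplitsInGroup.

If the node's splits are exhausted and the group is still smaller than half of lengthPerGroup and smaller than half of numSplitsInGroup (each check applies only when the corresponding by-length or by-count flag is on), the head index is reset and the splits are left for a later pass, eventually the remainingSplits handling.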

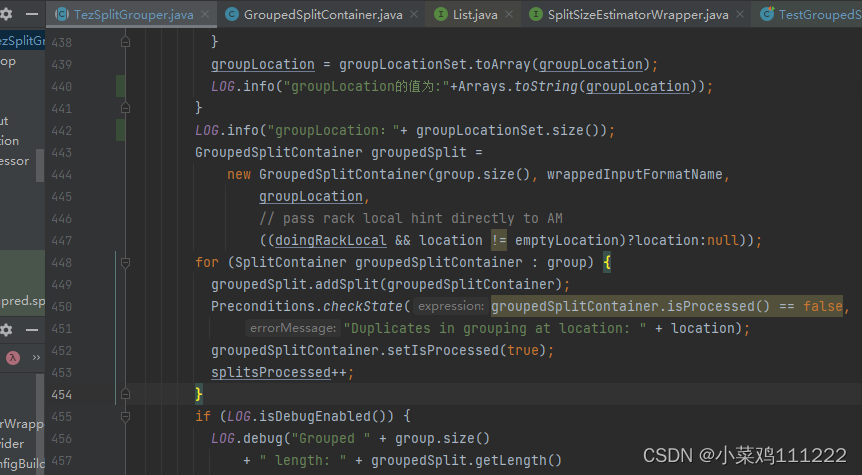

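(17) When the group built on the current node qualifies, a group is created and every split in it is marked with setIsProcessed(true).

Pay attention to when a split actually counts as processed: splits that were visited but whose group did not qualify have their index reset and are not marked as processed.

The corresponding logic is the for loop over group in the full listing at the end of this article.

(18) Once the node passes can no longer create a full group, the remainingSplits are handled: the leftover splits are regrouped by rack.

Following this strategy, the method finally returns the grouping result, groupedSplits.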
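The switch between the node pass, small groups, and the rack pass described in steps (16) to (18) can be condensed into one decision made after each pass over the locations. The sketch below mirrors the if statements at the end of the while loop in the full listing; the class PhaseSketch, the enum Next, and the method afterPass are hypothetical names introduced only for illustration and are not part of Tez.

```java
/** Hypothetical condensation of the checks made after each pass of the while loop; not a Tez class. */
final class PhaseSketch {

    enum Next { KEEP_GOING, ALLOW_SMALL_GROUPS, GO_RACK_LOCAL }

    /**
     * Decides what the loop does after one pass over all locations.
     * Parameter names follow the variables used in getGroupedSplits.
     */
    static Next afterPass(boolean doingRackLocal, int numFullGroupsCreated,
                          boolean nodeLocalOnly, boolean allowSmallGroups,
                          int numNodeLocations) {
        if (!doingRackLocal && numFullGroupsCreated < 1) {
            // No node could build a full group in this pass.
            if (nodeLocalOnly && !allowSmallGroups) {
                return Next.ALLOW_SMALL_GROUPS; // stay node-local, accept small groups
            }
            return Next.GO_RACK_LOCAL;          // regroup the leftovers by rack
        }
        if (!allowSmallGroups && numFullGroupsCreated <= numNodeLocations / 10) {
            // Few full groups relative to the number of nodes: accept small groups.
            return Next.ALLOW_SMALL_GROUPS;
        }
        return Next.KEEP_GOING;
    }

    public static void main(String[] args) {
        System.out.println(afterPass(false, 0, false, false, 5));
        System.out.println(afterPass(false, 0, true, false, 5));
    }
}
```

In the real method the rack-local branch also rebuilds distinctLocations keyed by rack and shrinks the thresholds; the sketch only captures which branch is taken.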

Test case verification:

The following hand-built case checks whether the grouping behaves as expected.

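File names are replaced by sequence numbers, with the file size in parentheses. For example:

node1: 5(100), 8(300) means that on node1 file 5 has size 100 and file 8 has size 300.

Sample test data: 1(100), 2(200), 3(100), 4(200), 5(300), 6(100)

The data is distributed across the nodes as follows:

node1: 1(100), 3(100), 4(200), 6(100)

node2: 2(200), 3(100)

node3: 1(100), 5(300), 6(100)

node4: 3(100), 4(200), 5(300)

node5: 1(100), 4(200), 5(300)

In other words, there are 5 nodes and 6 files, with the replica placement and file sizes shown above. File 2(200) has only one replica.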
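As a rough illustration of how the per-node view above relates to the per-split replica list that getGroupedSplits actually receives, the sketch below inverts "split to replica nodes" into "node to splits", much like the loop that fills distinctLocations. The class LocationIndexSketch and its methods are hypothetical stand-ins, not Tez classes, and the replica lists are simply read off the node listing above.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for the sample data; not a Tez class. */
final class LocationIndexSketch {

    /** Inverts "split -> replica nodes" into "node -> splits", keeping first-seen order. */
    static Map<String, List<Integer>> byNode(Map<Integer, String[]> replicas) {
        Map<String, List<Integer>> nodes = new LinkedHashMap<>();
        for (Map.Entry<Integer, String[]> e : replicas.entrySet()) {
            for (String node : e.getValue()) {
                nodes.computeIfAbsent(node, k -> new ArrayList<>()).add(e.getKey());
            }
        }
        return nodes;
    }

    /** Replica placement of files 1..6, derived from the node listing in the sample data. */
    static Map<Integer, String[]> sampleReplicas() {
        Map<Integer, String[]> r = new LinkedHashMap<>();
        r.put(1, new String[] {"node1", "node3", "node5"});
        r.put(2, new String[] {"node2"});
        r.put(3, new String[] {"node1", "node2", "node4"});
        r.put(4, new String[] {"node1", "node4", "node5"});
        r.put(5, new String[] {"node3", "node4", "node5"});
        r.put(6, new String[] {"node1", "node3"});
        return r;
    }

    public static void main(String[] args) {
        byNode(sampleReplicas())
            .forEach((node, splits) -> System.out.println(node + " -> " + splits));
    }
}
```

Because this sketch uses an insertion-ordered map, the nodes come out in first-seen order (node1, node3, node5, node2, node4) rather than in numeric order. The hand trace in this article visits node1 to node5 in numeric order for readability.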

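Assume tez.grouping.max-size and tez.grouping.min-size are set to 800 and 100.

Assume the initial desiredNumSplits is 1.

The total file size totalLength is 1000. With the initial desiredNumSplits of 1, lengthPerGroup comes out as 1000/1 = 1000, which exceeds the max-size of 800. That means desiredNumSplits is too small; in the source this corresponds to the log message "Desired splits: 1 too small."

So desiredNumSplits has to be recalculated as totalLength/maxLengthPerGroup + 1, that is 1000/800 + 1 = 2 (integer division).

Next, compute the average number of splits per group, numSplitsInGroup: 6/2 = 3. The new lengthPerGroup is 1000/2 = 500.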
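The arithmetic above can be checked with a few lines of Java. This is a minimal sketch of the min/max adjustment only, assuming the same integer division as the listing at the end of this article; the class DesiredSplitsSketch and the method adjust are hypothetical names, and the workaround for splits that all report localhost is left out.

```java
/** Hypothetical re-statement of the desiredNumSplits adjustment; not a Tez class. */
final class DesiredSplitsSketch {

    /**
     * Returns the adjusted desiredNumSplits so that totalLength / desiredNumSplits
     * lands between minSize and maxSize.
     */
    static int adjust(long totalLength, int desiredNumSplits, int numOriginalSplits,
                      long minSize, long maxSize) {
        if (maxSize < minSize || minSize <= 0) {
            throw new IllegalArgumentException("Required min>0, max>=min");
        }
        if (numOriginalSplits <= 0) {
            throw new IllegalArgumentException("This sketch needs at least one split");
        }
        int splitCount = desiredNumSplits > 0 ? desiredNumSplits : numOriginalSplits;
        long lengthPerGroup = totalLength / splitCount;
        if (lengthPerGroup > maxSize) {
            // Groups would be too big: ask for more of them.
            return (int) (totalLength / maxSize) + 1;
        }
        if (lengthPerGroup < minSize) {
            // Groups would be too small: ask for fewer of them.
            return (int) (totalLength / minSize) + 1;
        }
        return desiredNumSplits;
    }

    public static void main(String[] args) {
        int desired = adjust(1000, 1, 6, 100, 800);
        System.out.println("desiredNumSplits=" + desired
            + " numSplitsInGroup=" + (6 / desired)
            + " lengthPerGroup=" + (1000 / desired));
    }
}
```

The same function also covers the opposite case: asking for 20 splits over the same data gives an average of 50 per group, below min-size, so the count is pulled down to 1000/100 + 1 = 11.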

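Now enter the loop and apply the termination conditions described above (replicas that have already been grouped are not counted again). The trace visits the nodes in the order node1 to node5 and applies both the length limit (lengthPerGroup = 500) and the count limit (numSplitsInGroup = 3).

(1) Visit node1: take 1(100), 3(100), 4(200). With 3 files the count limit is reached, so they form one group.

(2) Visit node2: take 2(200). That is 1 file and the accumulated size is only 200 < 250 (lengthPerGroup/2), so under the length check the group is too small; it is not emitted and is left for the end. (In the listing at the end of this article the length and count checks are joined with &&. With tez.grouping.by-count also enabled, the count check 1 < 3/2 = 1 is false, so the code as listed would emit 2(200) right away as its own node-local group. The final grouping is the same either way.)

(3) Visit node3: take 5(300), 6(100). With 2 files the node's unprocessed splits are exhausted, so they form one group.

(4) Visit node4: every replica on this node has already been grouped, so nothing is done.

(5) Visit node5: every replica on this node has already been grouped, so nothing is done.

(6) Check whether any remainingSplits are left. There is 2(200) from step 2, so the data is re-keyed by rack, lengthPerGroup and the other thresholds are recalculated, and the termination conditions produce one more group. [Any leftover splits are added under their racks and the loop then runs over racks; the process mirrors the node loop.]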
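When the grouping falls back to racks, the listing rescales both thresholds by tez.grouping.rack-split-reduction and keeps the old value if the rescaled one would drop to zero. Below is a minimal sketch of that recalculation; the class RackReductionSketch and its methods are hypothetical names, and the factor 0.75 in main is only an illustrative input (the test in this article sets the parameter to 1, which leaves the thresholds unchanged).

```java
/** Hypothetical re-statement of the threshold rescaling for the rack pass; not a Tez class. */
final class RackReductionSketch {

    /** Scales lengthPerGroup by the reduction factor, keeping the old value if the result is 0. */
    static long reducedLength(long lengthPerGroup, float reduction) {
        if (reduction <= 0) {
            return lengthPerGroup;
        }
        long scaled = (long) (lengthPerGroup * reduction);
        return scaled > 0 ? scaled : lengthPerGroup;
    }

    /** Scales numSplitsInGroup by the reduction factor, keeping the old value if the result is 0. */
    static int reducedCount(int numSplitsInGroup, float reduction) {
        if (reduction <= 0) {
            return numSplitsInGroup;
        }
        int scaled = (int) (numSplitsInGroup * reduction);
        return scaled > 0 ? scaled : numSplitsInGroup;
    }

    public static void main(String[] args) {
        // Thresholds from the worked example, with an illustrative factor of 0.75.
        System.out.println(reducedLength(500, 0.75f) + " " + reducedCount(3, 0.75f));
    }
}
```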

So the data ends up in three groups, which correspond to 3 tasks in the map stage:

Map Task1: 1(100), 3(100), 4(200) [node pass]

Map Task2: 5(300), 6(100) [node pass]

Map Task3: 2(200) [rack pass]
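To cross-check the hand trace, here is a simplified simulation of a single node pass. It follows the do-while condition and the too-small check from the listing at the end of this article, but everything else is an assumption of the sketch: the class NodePassSketch is hypothetical, nodes are visited in the order node1 to node5, allowSmallGroups is false, and the rack pass is not modeled.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical model of one node-local pass over the sample data; not a Tez class. */
final class NodePassSketch {

    /** Sizes of files 1..6 from the sample data. */
    static final Map<Integer, Long> SIZE =
        Map.of(1, 100L, 2, 200L, 3, 100L, 4, 200L, 5, 300L, 6, 100L);

    /** Node contents in the order the hand trace visits them (an assumption of this sketch). */
    static Map<String, int[]> sampleNodes() {
        Map<String, int[]> nodes = new LinkedHashMap<>();
        nodes.put("node1", new int[] {1, 3, 4, 6});
        nodes.put("node2", new int[] {2, 3});
        nodes.put("node3", new int[] {1, 5, 6});
        nodes.put("node4", new int[] {3, 4, 5});
        nodes.put("node5", new int[] {1, 4, 5});
        return nodes;
    }

    /** Runs one pass over the nodes and returns the groups formed; deferred splits are omitted. */
    static List<List<Integer>> nodePass(long lengthPerGroup, int numSplitsInGroup,
                                        boolean byLength, boolean byCount) {
        List<List<Integer>> groups = new ArrayList<>();
        Set<Integer> processed = new HashSet<>();
        for (int[] splits : sampleNodes().values()) {
            // Splits on this node that no earlier group has claimed.
            List<Integer> pending = new ArrayList<>();
            for (int s : splits) {
                if (!processed.contains(s)) {
                    pending.add(s);
                }
            }
            if (pending.isEmpty()) {
                continue; // all splits on this node are already processed
            }
            List<Integer> group = new ArrayList<>();
            long groupLength = 0;
            int next = 0;
            do {
                int s = pending.get(next++);
                group.add(s);
                groupLength += SIZE.get(s);
            } while (next < pending.size()
                && (!byLength || groupLength + SIZE.get(pending.get(next)) <= lengthPerGroup)
                && (!byCount || group.size() + 1 <= numSplitsInGroup));
            boolean exhausted = next == pending.size();
            boolean tooSmall = exhausted
                && (!byLength || groupLength < lengthPerGroup / 2)
                && (!byCount || group.size() < numSplitsInGroup / 2);
            if (tooSmall) {
                continue; // group too small: leave its splits for a later pass
            }
            groups.add(group);
            processed.addAll(group);
        }
        return groups;
    }

    public static void main(String[] args) {
        System.out.println(nodePass(500, 3, true, true));
        System.out.println(nodePass(500, 3, true, false));
    }
}
```

With both flags on, the sketch produces the same three groups as the result above, although 2(200) is emitted during the node pass because the count check 1 < 3/2 is false under integer division. With by-count off, node1 absorbs 6(100) as well, 5(300) forms its own group, and 2(200) is the split left over for a later pass.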

The annotated source, with the extra debug logging used during the test run, follows:

    public List<GroupedSplitContainer> getGroupedSplits(Configuration conf,
        List<SplitContainer> originalSplits,
        int desiredNumSplits,
        String wrappedInputFormatName,
        SplitSizeEstimatorWrapper estimator,
        SplitLocationProviderWrapper locationProvider) throws IOException, InterruptedException {
      LOG.info("Starting Tez split grouping!");
      LOG.info("Grouping splits in Tez");
      LOG.info("Initial desiredNumSplits: ".concat(String.valueOf(desiredNumSplits)));
      Preconditions.checkArgument(originalSplits != null, "Splits must be specified");

      // configNumSplits is 0 when it is not configured
      int configNumSplits = conf.getInt(TEZ_GROUPING_SPLIT_COUNT, 0);
      LOG.info("tez.grouping.split-count is:".concat(String.valueOf(configNumSplits)));
      if (configNumSplits > 0) {
        // always use config override if specified
        desiredNumSplits = configNumSplits;
        LOG.info("Desired numSplits overridden by config to: " + desiredNumSplits);
      }
      LOG.info("desiredNumSplits after the config check: ".concat(String.valueOf(desiredNumSplits)));

      if (estimator == null) {
        estimator = DEFAULT_SPLIT_ESTIMATOR;
      }
      if (locationProvider == null) {
        locationProvider = DEFAULT_SPLIT_LOCATION_PROVIDER;
      }

      List<GroupedSplitContainer> groupedSplits = null;
      String emptyLocation = "EmptyLocation";
      String localhost = "localhost";
      String[] emptyLocations = {emptyLocation};
      groupedSplits = new ArrayList<GroupedSplitContainer>(desiredNumSplits);

      boolean allSplitsHaveLocalhost = true;

      long totalLength = 0;
      Map<String, LocationHolder> distinctLocations = createLocationsMap(conf);
      // go through splits and add them to locations
      LOG.info("Number of originalSplits: ".concat(String.valueOf(originalSplits.size())));
      for (SplitContainer split : originalSplits) {
        LOG.info(Arrays.toString(split.getPreferredLocations())
            + " split object class names: " + split.getClass().getName()
            + ((MapredSplitContainer)split).getRawSplit().getClass().getName());
        LOG.info("Size of this split: " + estimator.getEstimatedSize(split));
        totalLength += estimator.getEstimatedSize(split);
        String[] locations = locationProvider.getPreferredLocations(split);
        if (locations == null || locations.length == 0) {
          locations = emptyLocations;
          allSplitsHaveLocalhost = false;
        }
        for (String location : locations ) {
          if (location == null) {
            location = emptyLocation;
            allSplitsHaveLocalhost = false;
          }
          if (!location.equalsIgnoreCase(localhost)) {
            allSplitsHaveLocalhost = false;
          }
          distinctLocations.put(location, null);
        }
      }
      LOG.info("Estimated totalLength: ".concat(String.valueOf(totalLength)));

      if (! (configNumSplits > 0 ||
          originalSplits.size() == 0)) {
        LOG.info("Entering check 1");
        // numSplits has not been overridden by config
        // numSplits has been set at runtime
        // there are splits generated
        // desired splits is less than number of splits generated
        // Do sanity checks

        // in the debug run this came out as 1
        int splitCount = desiredNumSplits>0?desiredNumSplits:originalSplits.size();
        // in the debug run lengthPerGroup was 87940940 (about 87 MB), so totalLength was 87940940 as well
        long lengthPerGroup = totalLength/splitCount;
        LOG.info("Computed lengthPerGroup: ".concat(String.valueOf(lengthPerGroup)));

        long maxLengthPerGroup = conf.getLong(
            TEZ_GROUPING_SPLIT_MAX_SIZE,
            TEZ_GROUPING_SPLIT_MAX_SIZE_DEFAULT);
        long minLengthPerGroup = conf.getLong(
            TEZ_GROUPING_SPLIT_MIN_SIZE,
            TEZ_GROUPING_SPLIT_MIN_SIZE_DEFAULT);
        LOG.info("tez.grouping.max-size: ".concat(String.valueOf(maxLengthPerGroup)));
        LOG.info("tez.grouping.min-size: ".concat(String.valueOf(minLengthPerGroup)));
        if (maxLengthPerGroup < minLengthPerGroup ||
            minLengthPerGroup <=0) {
          throw new TezUncheckedException(
              "Invalid max/min group lengths. Required min>0, max>=min. " +
              " max: " + maxLengthPerGroup + " min: " + minLengthPerGroup);
        }
        if (lengthPerGroup > maxLengthPerGroup) {
          LOG.info("lengthPerGroup is larger than maxLengthPerGroup, so too few splits were requested");
          // splits too big to work. Need to override with max size.
          int newDesiredNumSplits = (int)(totalLength/maxLengthPerGroup) + 1;
          LOG.info("Desired splits: " + desiredNumSplits + " too small. " +
              " Desired splitLength: " + lengthPerGroup +
              " Max splitLength: " + maxLengthPerGroup +
              " New desired splits: " + newDesiredNumSplits +
              " Total length: " + totalLength +
              " Original splits: " + originalSplits.size());

          desiredNumSplits = newDesiredNumSplits;
          LOG.info("Recomputed desiredNumSplits: ".concat(String.valueOf(newDesiredNumSplits)));
        } else if (lengthPerGroup < minLengthPerGroup) {
          // splits too small to work. Need to override with size.
          LOG.info("lengthPerGroup is smaller than minLengthPerGroup, so too many splits were requested");
          int newDesiredNumSplits = (int)(totalLength/minLengthPerGroup) + 1;
          /**
           * This is a workaround for systems like S3 that pass the same
           * fake hostname for all splits.
           */
          if (!allSplitsHaveLocalhost) {
            desiredNumSplits = newDesiredNumSplits;
          }

          LOG.info("Desired splits: " + desiredNumSplits + " too large. " +
              " Desired splitLength: " + lengthPerGroup +
              " Min splitLength: " + minLengthPerGroup +
              " New desired splits: " + newDesiredNumSplits +
              " Final desired splits: " + desiredNumSplits +
              " All splits have localhost: " + allSplitsHaveLocalhost +
              " Total length: " + totalLength +
              " Original splits: " + originalSplits.size());
        }
        LOG.info("desiredNumSplits after the min/max check: ".concat(String.valueOf(desiredNumSplits)));
      }

      if (desiredNumSplits == 0 ||
          originalSplits.size() == 0 ||
          desiredNumSplits >= originalSplits.size()) {
        // nothing set. so return all the splits as is
        LOG.info("Either the desired or the original split count is 0, or the desired count is not smaller than the original count");
        LOG.info("Using original number of splits: " + originalSplits.size() +
            " desired splits: " + desiredNumSplits);
        groupedSplits = new ArrayList<GroupedSplitContainer>(originalSplits.size());
        for (SplitContainer split : originalSplits) {
          GroupedSplitContainer newSplit =
              new GroupedSplitContainer(1, wrappedInputFormatName,
                  cleanupLocations(locationProvider.getPreferredLocations(split)),
                  null);
          newSplit.addSplit(split);
          groupedSplits.add(newSplit);
        }
        return groupedSplits;
      }

      LOG.info("desiredNumSplits has been settled above, so lengthPerGroup is computed now");
      long lengthPerGroup = totalLength/desiredNumSplits;
      int numNodeLocations = distinctLocations.size();
      int numSplitsPerLocation = originalSplits.size()/numNodeLocations;
      int numSplitsInGroup = originalSplits.size()/desiredNumSplits;
      LOG.info("numSplitsInGroup, the average number of splits per group: ".concat(String.valueOf(numSplitsInGroup)));

      // allocation loop here so that we have a good initial size for the lists
      for (String location : distinctLocations.keySet()) {
        LOG.info("Putting into distinctLocations: {}, LocationHolder size: {}", distinctLocations, numSplitsPerLocation+1);
        distinctLocations.put(location, new LocationHolder(numSplitsPerLocation+1));
      }

      Set<String> locSet = new HashSet<String>();
      // the for loop below fills each node with the splits located on it
      for (SplitContainer split : originalSplits) {
        locSet.clear();
        // these should be the replica locations of this split
        String[] locations = locationProvider.getPreferredLocations(split);
        LOG.info("Locations in this iteration: " + Arrays.toString(locations));
        if (locations == null || locations.length == 0) {
          locations = emptyLocations;
        }
        for (String location : locations) {
          if (location == null) {
            location = emptyLocation;
          }
          locSet.add(location);
        }
        // the loop above collects the locations into locSet, usually three replicas on three nodes, and then
        for (String location : locSet) {
          LocationHolder holder = distinctLocations.get(location);
          // LOG.info("Adding split:{} to location:{}",location);
          holder.splits.add(split);
        }
      }

      boolean groupByLength = conf.getBoolean(
          TEZ_GROUPING_SPLIT_BY_LENGTH,
          TEZ_GROUPING_SPLIT_BY_LENGTH_DEFAULT);
      boolean groupByCount = conf.getBoolean(
          TEZ_GROUPING_SPLIT_BY_COUNT,
          TEZ_GROUPING_SPLIT_BY_COUNT_DEFAULT);
      boolean nodeLocalOnly = conf.getBoolean(
          TEZ_GROUPING_NODE_LOCAL_ONLY,
          TEZ_GROUPING_NODE_LOCAL_ONLY_DEFAULT);
      if (!(groupByLength || groupByCount)) {
        throw new TezUncheckedException(
            "None of the grouping parameters are true: "
                + TEZ_GROUPING_SPLIT_BY_LENGTH + ", "
                + TEZ_GROUPING_SPLIT_BY_COUNT);
      }
      LOG.info("Read the groupByLength, groupByCount and nodeLocalOnly parameters");
      LOG.info("Desired numSplits: " + desiredNumSplits +
          " lengthPerGroup: " + lengthPerGroup +
          " numLocations: " + numNodeLocations +
          " numSplitsPerLocation: " + numSplitsPerLocation +
          " numSplitsInGroup: " + numSplitsInGroup +
          " totalLength: " + totalLength +
          " numOriginalSplits: " + originalSplits.size() +
          " . Grouping by length: " + groupByLength +
          " count: " + groupByCount +
          " nodeLocalOnly: " + nodeLocalOnly);

      // go through locations and group splits
      int splitsProcessed = 0;
      List<SplitContainer> group = new ArrayList<SplitContainer>(numSplitsInGroup);
      Set<String> groupLocationSet = new HashSet<String>(10);
      boolean allowSmallGroups = false;
      boolean doingRackLocal = false;
      int iterations = 0;
      LOG.info("Key step: starting the while loop that collects splits into groups");
      LOG.info("distinctLocations to process: {}", Arrays.toString(distinctLocations.entrySet().toArray()));
      while (splitsProcessed < originalSplits.size()) {
        iterations++;
        int numFullGroupsCreated = 0;
        // LOG.info("distinctLocations: "+distinctLocations.entrySet().toArray().toString());
        for (Map.Entry<String, LocationHolder> entry : distinctLocations.entrySet()) {
          group.clear();
          groupLocationSet.clear();
          String location = entry.getKey();
          LOG.info("location in the for loop: {}", location );
          // get the holder for this location; it holds all, or possibly only some, of the splits on this node
          LocationHolder holder = entry.getValue();
          SplitContainer splitContainer = holder.getUnprocessedHeadSplit();
          LOG.info("Start processing location: " + location);
          if (splitContainer != null) {
            LOG.info("Split:" + Arrays.toString(splitContainer.getPreferredLocations()));
          }
          if (splitContainer == null) {
            // all splits on node processed
            continue;
          }
          int oldHeadIndex = holder.headIndex;
          long groupLength = 0;
          int groupNumSplits = 0;
          do {
            LOG.info("Still inside the do-while loop!");
            group.add(splitContainer);
            groupLength += estimator.getEstimatedSize(splitContainer);
            groupNumSplits++;
            holder.incrementHeadIndex();
            splitContainer = holder.getUnprocessedHeadSplit();
            // at the end of the loop splitContainer should be null; guard the log call
            if (splitContainer != null) {
              // LOG.info("split name: {}",splitContainer.toString());
              LOG.info("Split:" + Arrays.toString(splitContainer.getPreferredLocations()));
            }
          } while(splitContainer != null
              && (!groupByLength ||
                  (groupLength + estimator.getEstimatedSize(splitContainer) <= lengthPerGroup))
              && (!groupByCount ||
                  (groupNumSplits + 1 <= numSplitsInGroup)));
          LOG.info("do-while finished, group is fixed: " + groupNumSplits + "|||" + groupLength + "|||" + lengthPerGroup);

          if (holder.isEmpty()
              && !allowSmallGroups
              && (!groupByLength || groupLength < lengthPerGroup/2)
              && (!groupByCount || groupNumSplits < numSplitsInGroup/2)) {
            // group too small, reset it
            holder.headIndex = oldHeadIndex;
            continue;
          }

          numFullGroupsCreated++;

          // One split group created
          String[] groupLocation = {location};
          if (location == emptyLocation) {
            groupLocation = null;
          } else if (doingRackLocal) {
            for (SplitContainer splitH : group) {
              String[] locations = locationProvider.getPreferredLocations(splitH);
              if (locations != null) {
                for (String loc : locations) {
                  if (loc != null) {
                    groupLocationSet.add(loc);
                  }
                }
              }
            }
            groupLocation = groupLocationSet.toArray(groupLocation);
            LOG.info("groupLocation: " + Arrays.toString(groupLocation));
          }
          LOG.info("groupLocation:" + groupLocationSet.size());
          GroupedSplitContainer groupedSplit =
              new GroupedSplitContainer(group.size(), wrappedInputFormatName,
                  groupLocation,
                  // pass rack local hint directly to AM
                  ((doingRackLocal && location != emptyLocation)?location:null));
          for (SplitContainer groupedSplitContainer : group) {
            groupedSplit.addSplit(groupedSplitContainer);
            Preconditions.checkState(groupedSplitContainer.isProcessed() == false,
                "Duplicates in grouping at location: " + location);
            groupedSplitContainer.setIsProcessed(true);
            splitsProcessed++;
          }
          if (LOG.isDebugEnabled()) {
            LOG.debug("Grouped " + group.size()
                + " length: " + groupedSplit.getLength()
                + " split at: " + location);
          }
          LOG.info("Grouped " + group.size()
              + " length: " + groupedSplit.getLength()
              + " split at: " + location);
          groupedSplits.add(groupedSplit);
          LOG.info("Done!");
        }

        // the for loop above has finished; for a single file there is presumably only one group
        if (!doingRackLocal && numFullGroupsCreated < 1) {
          // no node could create a regular node-local group.
          // Allow small groups if that is configured.
          if (nodeLocalOnly && !allowSmallGroups) {
            LOG.info("Allowing small groups early after attempting to create full groups at iteration: {}, groupsCreatedSoFar={}",
                iterations, groupedSplits.size());
            allowSmallGroups = true;
            continue;
          }

          // else go rack-local
          doingRackLocal = true;
          // re-create locations
          int numRemainingSplits = originalSplits.size() - splitsProcessed;
          LOG.info("Number of splits still to be processed: {}", numRemainingSplits);
          Set<SplitContainer> remainingSplits = new HashSet<SplitContainer>(numRemainingSplits);
          // gather remaining splits.
          for (Map.Entry<String, LocationHolder> entry : distinctLocations.entrySet()) {
            LocationHolder locHolder = entry.getValue();
            while (!locHolder.isEmpty()) {
              SplitContainer splitHolder = locHolder.getUnprocessedHeadSplit();
              if (splitHolder != null) {
                remainingSplits.add(splitHolder);
                locHolder.incrementHeadIndex();
              }
            }
          }
          if (remainingSplits.size() != numRemainingSplits) {
            throw new TezUncheckedException("Expected: " + numRemainingSplits
                + " got: " + remainingSplits.size());
          }

          // doing all this now instead of up front because the number of remaining
          // splits is expected to be much smaller
          RackResolver.init(conf);
          Map<String, String> locToRackMap = new HashMap<String, String>(distinctLocations.size());
          Map<String, LocationHolder> rackLocations = createLocationsMap(conf);
          for (String location : distinctLocations.keySet()) {
            // this should resolve the rack of the given location
            String rack = emptyLocation;
            if (location != emptyLocation) {
              rack = RackResolver.resolve(location).getNetworkLocation();
            }
            LOG.info("Location: {}, rack: {}", location, rack);
            locToRackMap.put(location, rack);
            if (rackLocations.get(rack) == null) {
              // splits will probably be located in all racks
              rackLocations.put(rack, new LocationHolder(numRemainingSplits));
            }
          }
          distinctLocations.clear();
          HashSet<String> rackSet = new HashSet<String>(rackLocations.size());
          int numRackSplitsToGroup = remainingSplits.size();
          for (SplitContainer split : originalSplits) {
            if (numRackSplitsToGroup == 0) {
              break;
            }
            // Iterate through the original splits in their order and consider them for grouping.
            // This maintains the original ordering in the list and thus subsequent grouping will
            // maintain that order
            if (!remainingSplits.contains(split)) {
              continue;
            }
            numRackSplitsToGroup--;
            rackSet.clear();
            String[] locations = locationProvider.getPreferredLocations(split);
            if (locations == null || locations.length == 0) {
              locations = emptyLocations;
            }
            for (String location : locations ) {
              if (location == null) {
                location = emptyLocation;
              }
              rackSet.add(locToRackMap.get(location));
            }
            for (String rack : rackSet) {
              rackLocations.get(rack).splits.add(split);
            }
          }
          LOG.info("rackSet: {},", Arrays.toString(rackSet.toArray()));
          remainingSplits.clear();
          distinctLocations = rackLocations;
          // adjust split length to be smaller because the data is non local
          float rackSplitReduction = conf.getFloat(
              TEZ_GROUPING_RACK_SPLIT_SIZE_REDUCTION,
              TEZ_GROUPING_RACK_SPLIT_SIZE_REDUCTION_DEFAULT);
          LOG.info("rackSplitReduction: " + rackSplitReduction);
          if (rackSplitReduction > 0) {
            long newLengthPerGroup = (long)(lengthPerGroup*rackSplitReduction);
            int newNumSplitsInGroup = (int) (numSplitsInGroup*rackSplitReduction);
            if (newLengthPerGroup > 0) {
              lengthPerGroup = newLengthPerGroup;
            }
            if (newNumSplitsInGroup > 0) {
              numSplitsInGroup = newNumSplitsInGroup;
            }
          }
          // the new threshold values after switching to rack-local
          LOG.info("Doing rack local after iteration: " + iterations +
              " splitsProcessed: " + splitsProcessed +
              " numFullGroupsInRound: " + numFullGroupsCreated +
              " totalGroups: " + groupedSplits.size() +
              " lengthPerGroup: " + lengthPerGroup +
              " numSplitsInGroup: " + numSplitsInGroup);

          // dont do smallGroups for the first pass
          continue;
        }

        if (!allowSmallGroups && numFullGroupsCreated <= numNodeLocations/10) {
          // a few nodes have a lot of data or data is thinly spread across nodes
          // so allow small groups now
          allowSmallGroups = true;
          LOG.info("Allowing small groups after iteration: " + iterations +
              " splitsProcessed: " + splitsProcessed +
              " numFullGroupsInRound: " + numFullGroupsCreated +
              " totalGroups: " + groupedSplits.size());
        }

        if (LOG.isDebugEnabled()) {
          LOG.debug("Iteration: " + iterations +
              " splitsProcessed: " + splitsProcessed +
              " numFullGroupsInRound: " + numFullGroupsCreated +
              " totalGroups: " + groupedSplits.size());
        }
        LOG.info("Iteration: " + iterations +
            " splitsProcessed: " + splitsProcessed +
            " numFullGroupsInRound: " + numFullGroupsCreated +
            " totalGroups: " + groupedSplits.size());
      }
      LOG.info("Number of splits desired: " + desiredNumSplits +
          " created: " + groupedSplits.size() +
          " splitsProcessed: " + splitsProcessed);
      return groupedSplits;
    }