本文主要是介绍机器学习之逻辑回归实践:购买意向预测与其他预测,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

逻辑回归的主要用途有预测(如预测用户购买意向)、判别(如判别某人是否会患胃癌)等。

今天使用逻辑回归做了个购买意向的预测。

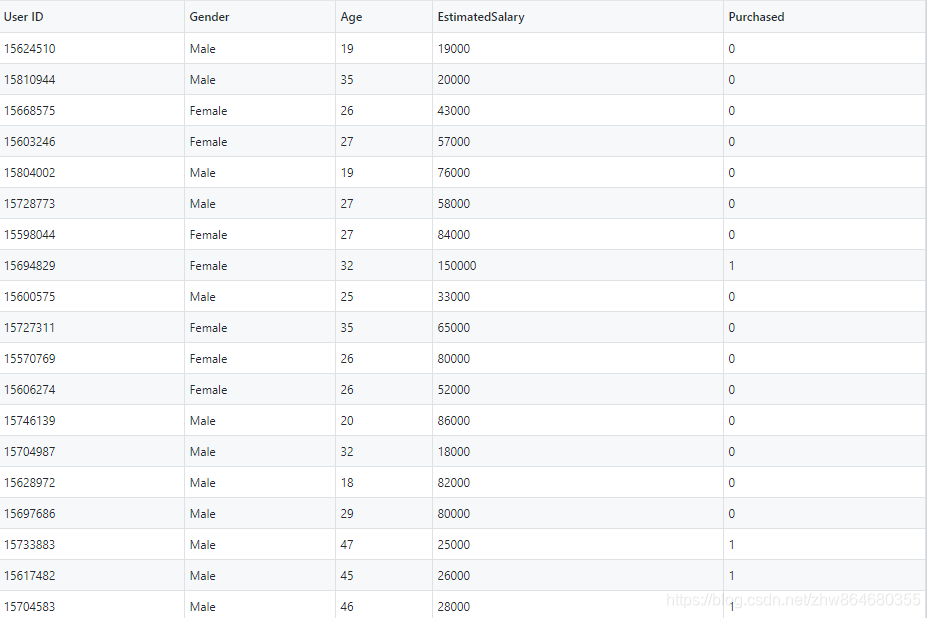

数据集如下(共400条数据,4个特征,这里我们不使用ID和性别,只使用年龄和收入两个特征):

具体实现代码如下:

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

#import matplotlib.pyplot as plt

from sklearn.metrics import accuracy_scoredataset = pd.read_csv('Social_Network_Ads.csv')

X = dataset.iloc[ : ,2:4].values

Y = dataset.iloc[ : ,4].valuesX_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size = 0.2)sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test )classifier = LogisticRegression()

classifier.fit(X_train, Y_train)y_pred = classifier.predict(X_test)acc = accuracy_score(Y_test, y_pred)

print("准确率为:", acc)此外还有一个其他方面的预测代码示例如下:

# encoding: utf-8

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

import numpy as np

import pandas as pd

import matplotlib.pyplot as pltdataset = pd.read_csv('dataset.csv', delimiter=',')

X = np.asarray(dataset.get(['x1', 'x2']))

y = np.asarray(dataset.get('y'))# 划分为训练集和测试集

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5)# 使用 sklearn 的 LogisticRegression 作为模型,其中有 penalty,solver,dual 几个比较重要的参数,不同的参数有不同的准确率,这里为了简便都使用默认的,详细的请参考 sklearn 文档

model = LogisticRegression(solver='liblinear')# 拟合

model.fit(X, y)# 预测测试集

predictions = model.predict(X_test)# 打印准确率

print('测试集准确率:', accuracy_score(y_test, predictions))weights = np.column_stack((model.intercept_, model.coef_)).transpose()n = np.shape(X_train)[0]

xcord1 = []

ycord1 = []

xcord2 = []

ycord2 = []

for i in range(n):if int(y_train[i]) == 1:xcord1.append(X_train[i, 0])ycord1.append(X_train[i, 1])else:xcord2.append(X_train[i, 0])ycord2.append(X_train[i, 1])

fig = plt.figure()

ax = fig.add_subplot(111)

ax.scatter(xcord1, ycord1, s=30, c='red', marker='s')

ax.scatter(xcord2, ycord2, s=30, c='green')

x_ = np.arange(-3.0, 3.0, 0.1)

y_ = (-weights[0] - weights[1] * x_) / weights[2]

ax.plot(x_, y_)

plt.xlabel('x1')

plt.ylabel('x2')

plt.show()

该出原文原文链接:https://blog.csdn.net/qq_24671941/article/details/94767008

癌症预测

示例代码如下:

# -*- coding: utf-8 -*-from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import classification_report# 加载数据

breast = load_breast_cancer()# 数据拆分

X_train, X_test, y_train, y_test = train_test_split(breast.data, breast.target)# 数据标准化

std = StandardScaler()

X_train = std.fit_transform(X_train)

X_test = std.transform(X_test)# 训练预测

lg = LogisticRegression()lg.fit(X_train, y_train)y_predict = lg.predict(X_test)# 查看训练准确度和预测报告

print(lg.score(X_test, y_test))

print(classification_report(y_test, y_predict, labels=[0, 1], target_names=["良性", "恶性"]))"""

0.958041958041958precision recall f1-score support良性 0.98 0.90 0.93 48恶性 0.95 0.99 0.97 95avg / total 0.96 0.96 0.96 143"""

该处原文链接为:https://blog.csdn.net/mouday/article/details/86653227

这篇关于机器学习之逻辑回归实践:购买意向预测与其他预测的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!