This article presents an intelligent agricultural and forestry pest detection system based on YOLOv5, built as a graduation project.

Full project demo video:

[Graduation project] Demo video of the YOLOv5-based intelligent agricultural and forestry pest detection system

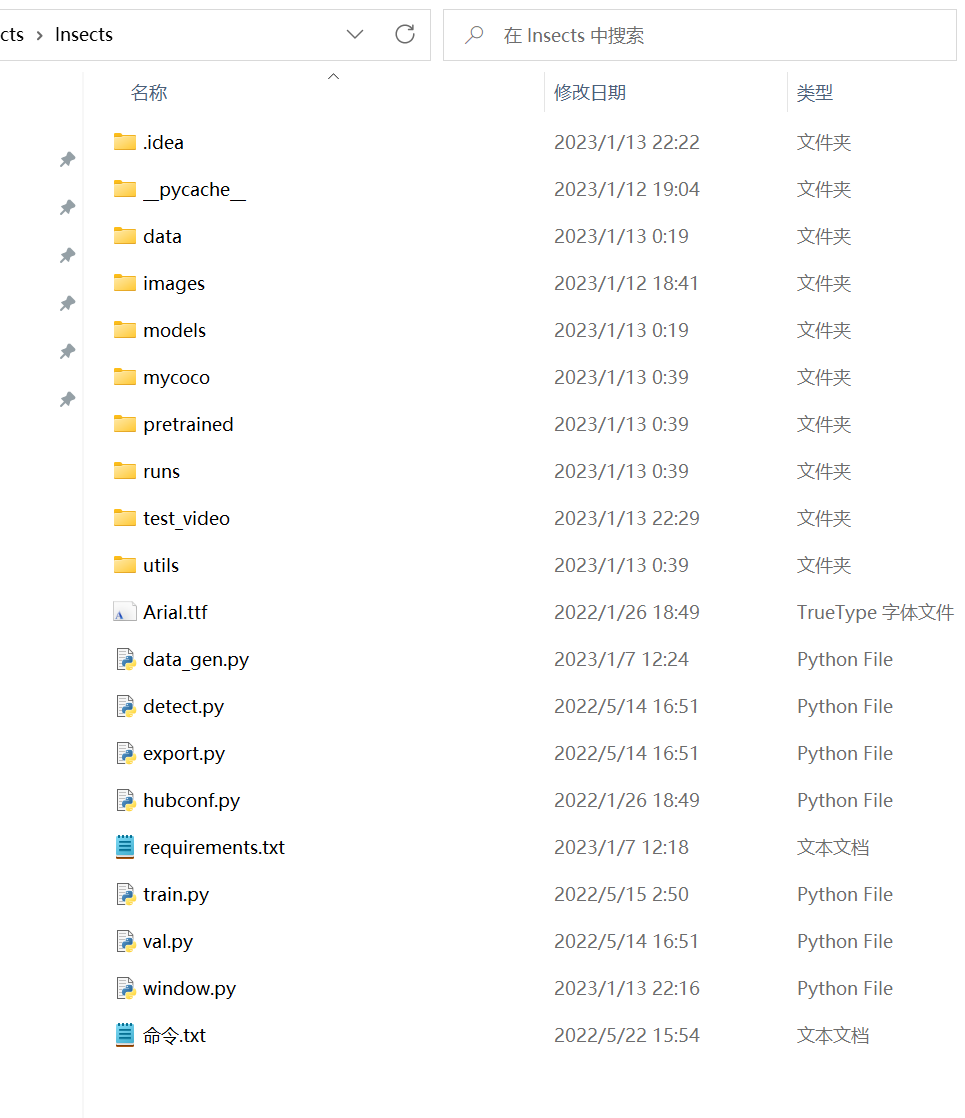

Screenshot of the project's code structure:

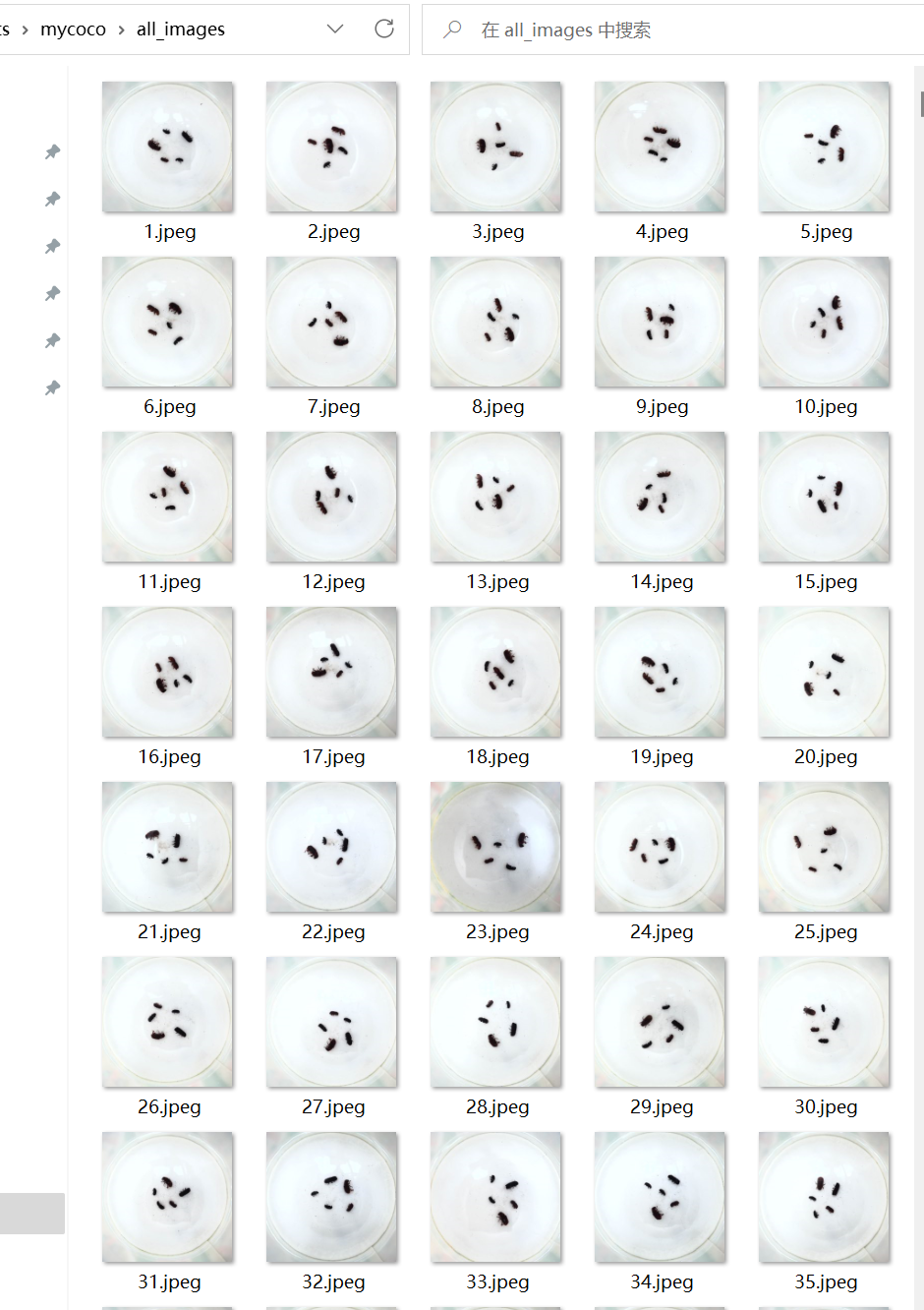

Sample images from the dataset:

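The training script in this article reads the dataset definition from the file passed via `--data` and uses its `train`, `val`, `nc` and `names` keys, asserting that the number of class names equals `nc`. The following is a minimal sketch of that consistency check, useful for validating a dataset file before starting a long run. The function name `resolve_classes`, the paths and the pest class names are hypothetical placeholders, not the project's actual dataset.

```python
def resolve_classes(data_dict, single_cls=False):
    """Derive (nc, names) from a dataset dict, mirroring the check in train()."""
    nc = 1 if single_cls else int(data_dict["nc"])
    if single_cls and len(data_dict["names"]) != 1:
        names = ["item"]  # single-class training collapses all classes into one
    else:
        names = data_dict["names"]
    if len(names) != nc:
        raise ValueError(f"{len(names)} names found for nc={nc}")
    return nc, names


# Hypothetical dataset definition (placeholder paths and class names).
pest_data = {
    "train": "datasets/pests/images/train",
    "val": "datasets/pests/images/val",
    "nc": 3,
    "names": ["aphid", "locust", "moth"],
}

print(resolve_classes(pest_data))
print(resolve_classes(pest_data, single_cls=True))
```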
YOLOv5 training script:

    # YOLOv5 🚀 by Ultralytics, GPL-3.0 license
    """
    Train a YOLOv5 model on a custom dataset

    Usage:
        $ python path/to/train.py --data coco128.yaml --weights best.pt --img 640
    """
    # Example command:
    # python train.py --data mycoco.yaml --cfg my_yolov5s.yaml --weights pretrained/yolov5s.pt --epochs 300 --batch-size 8 --device 0

    import argparse
    import math
    import os
    import random
    import sys
    import time
    from copy import deepcopy
    from datetime import datetime
    from pathlib import Path

    import numpy as np
    import torch
    import torch.distributed as dist
    import torch.nn as nn
    import yaml
    from torch.cuda import amp
    from torch.nn.parallel import DistributedDataParallel as DDP
    from torch.optim import SGD, Adam, lr_scheduler
    from tqdm import tqdm

    FILE = Path(__file__).resolve()
    ROOT = FILE.parents[0]  # YOLOv5 root directory
    if str(ROOT) not in sys.path:
        sys.path.append(str(ROOT))  # add ROOT to PATH
    ROOT = Path(os.path.relpath(ROOT, Path.cwd()))  # relative

    import val  # for end-of-epoch mAP
    from models.experimental import attempt_load
    from models.yolo import Model
    from utils.autoanchor import check_anchors
    from utils.autobatch import check_train_batch_size
    from utils.callbacks import Callbacks
    from utils.datasets import create_dataloader
    from utils.downloads import attempt_download
    from utils.general import (LOGGER, NCOLS, check_dataset, check_file, check_git_status, check_img_size,
                               check_requirements, check_suffix, check_yaml, colorstr, get_latest_run, increment_path,
                               init_seeds, intersect_dicts, labels_to_class_weights, labels_to_image_weights, methods,
                               one_cycle, print_args, print_mutation, strip_optimizer)
    from utils.loggers import Loggers
    from utils.loggers.wandb.wandb_utils import check_wandb_resume
    from utils.loss import ComputeLoss
    from utils.metrics import fitness
    from utils.plots import plot_evolve, plot_labels
    from utils.torch_utils import EarlyStopping, ModelEMA, de_parallel, select_device, torch_distributed_zero_first

    LOCAL_RANK = int(os.getenv('LOCAL_RANK', -1))  # https://pytorch.org/docs/stable/elastic/run.html
    RANK = int(os.getenv('RANK', -1))

    WORLD_SIZE = int(os.getenv('WORLD_SIZE', 1))


    def train(hyp,  # path/to/hyp.yaml or hyp dictionary
              opt,
              device,
              callbacks
              ):
        save_dir, epochs, batch_size, weights, single_cls, evolve, data, cfg, resume, noval, nosave, workers, freeze, = \
            Path(opt.save_dir), opt.epochs, opt.batch_size, opt.weights, opt.single_cls, opt.evolve, opt.data, opt.cfg, \
            opt.resume, opt.noval, opt.nosave, opt.workers, opt.freeze

        # Directories
        w = save_dir / 'weights'  # weights dir
        (w.parent if evolve else w).mkdir(parents=True, exist_ok=True)  # make dir
        last, best = w / 'last.pt', w / 'best.pt'

        # Hyperparameters
        if isinstance(hyp, str):
            with open(hyp, errors='ignore') as f:
                hyp = yaml.safe_load(f)  # load hyps dict
        LOGGER.info(colorstr('hyperparameters: ') + ', '.join(f'{k}={v}' for k, v in hyp.items()))

        # Save run settings
        with open(save_dir / 'hyp.yaml', 'w') as f:
            yaml.safe_dump(hyp, f, sort_keys=False)
        with open(save_dir / 'opt.yaml', 'w') as f:
            yaml.safe_dump(vars(opt), f, sort_keys=False)
        data_dict = None

        # Loggers
        if RANK in [-1, 0]:
            loggers = Loggers(save_dir, weights, opt, hyp, LOGGER)  # loggers instance
            if loggers.wandb:
                data_dict = loggers.wandb.data_dict
                if resume:
                    weights, epochs, hyp = opt.weights, opt.epochs, opt.hyp

            # Register actions
            for k in methods(loggers):
                callbacks.register_action(k, callback=getattr(loggers, k))

        # Config
        plots = not evolve  # create plots
        cuda = device.type != 'cpu'
        init_seeds(1 + RANK)
        with torch_distributed_zero_first(LOCAL_RANK):
            data_dict = data_dict or check_dataset(data)  # check if None
        train_path, val_path = data_dict['train'], data_dict['val']
        nc = 1 if single_cls else int(data_dict['nc'])  # number of classes
        names = ['item'] if single_cls and len(data_dict['names']) != 1 else data_dict['names']  # class names
        assert len(names) == nc, f'{len(names)} names found for nc={nc} dataset in {data}'  # check
        is_coco = isinstance(val_path, str) and val_path.endswith('coco/val2017.txt')  # COCO dataset

        # Model
        check_suffix(weights, '.pt')  # check weights
        pretrained = weights.endswith('.pt')
        if pretrained:
            with torch_distributed_zero_first(LOCAL_RANK):
                weights = attempt_download(weights)  # download if not found locally
            ckpt = torch.load(weights, map_location=device)  # load checkpoint
            model = Model(cfg or ckpt['model'].yaml, ch=3, nc=nc, anchors=hyp.get('anchors')).to(device)  # create
            exclude = ['anchor'] if (cfg or hyp.get('anchors')) and not resume else []  # exclude keys
            csd = ckpt['model'].float().state_dict()  # checkpoint state_dict as FP32
            csd = intersect_dicts(csd, model.state_dict(), exclude=exclude)  # intersect
            model.load_state_dict(csd, strict=False)  # load
            LOGGER.info(f'Transferred {len(csd)}/{len(model.state_dict())} items from {weights}')  # report
        else:
            model = Model(cfg, ch=3, nc=nc, anchors=hyp.get('anchors')).to(device)  # create

        # Freeze
        freeze = [f'model.{x}.' for x in range(freeze)]  # layers to freeze
        for k, v in model.named_parameters():
            v.requires_grad = True  # train all layers
            if any(x in k for x in freeze):
                LOGGER.info(f'freezing {k}')
                v.requires_grad = False

        # Image size
        gs = max(int(model.stride.max()), 32)  # grid size (max stride)
        imgsz = check_img_size(opt.imgsz, gs, floor=gs * 2)  # verify imgsz is gs-multiple

        # Batch size
        if RANK == -1 and batch_size == -1:  # single-GPU only, estimate best batch size
            batch_size = check_train_batch_size(model, imgsz)

        # Optimizer
        nbs = 64  # nominal batch size
        accumulate = max(round(nbs / batch_size), 1)  # accumulate loss before optimizing
        hyp['weight_decay'] *= batch_size * accumulate / nbs  # scale weight_decay
        LOGGER.info(f"Scaled weight_decay = {hyp['weight_decay']}")

        g0, g1, g2 = [], [], []  # optimizer parameter groups
        for v in model.modules():
            if hasattr(v, 'bias') and isinstance(v.bias, nn.Parameter):  # bias
                g2.append(v.bias)
            if isinstance(v, nn.BatchNorm2d):  # weight (no decay)
                g0.append(v.weight)
            elif hasattr(v, 'weight') and isinstance(v.weight, nn.Parameter):  # weight (with decay)
                g1.append(v.weight)

        if opt.adam:
            optimizer = Adam(g0, lr=hyp['lr0'], betas=(hyp['momentum'], 0.999))  # adjust beta1 to momentum
        else:
            optimizer = SGD(g0, lr=hyp['lr0'], momentum=hyp['momentum'], nesterov=True)

        optimizer.add_param_group({'params': g1, 'weight_decay': hyp['weight_decay']})  # add g1 with weight_decay
        optimizer.add_param_group({'params': g2})  # add g2 (biases)
        # g0 holds the BatchNorm weights (no decay), g1 the weights with decay
        LOGGER.info(f"{colorstr('optimizer:')} {type(optimizer).__name__} with parameter groups "
                    f"{len(g0)} weight (no decay), {len(g1)} weight, {len(g2)} bias")
        del g0, g1, g2

        # Scheduler
        if opt.linear_lr:
            lf = lambda x: (1 - x / (epochs - 1)) * (1.0 - hyp['lrf']) + hyp['lrf']  # linear
        else:
            lf = one_cycle(1, hyp['lrf'], epochs)  # cosine 1->hyp['lrf']
        scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)  # plot_lr_scheduler(optimizer, scheduler, epochs)

        # EMA
        ema = ModelEMA(model) if RANK in [-1, 0] else None

        # Resume
        start_epoch, best_fitness = 0, 0.0
        if pretrained:
            # Optimizer
            if ckpt['optimizer'] is not None:
                optimizer.load_state_dict(ckpt['optimizer'])
                best_fitness = ckpt['best_fitness']

            # EMA
            if ema and ckpt.get('ema'):
                ema.ema.load_state_dict(ckpt['ema'].float().state_dict())
                ema.updates = ckpt['updates']

            # Epochs
            start_epoch = ckpt['epoch'] + 1
            if resume:
                assert start_epoch > 0, f'{weights} training to {epochs} epochs is finished, nothing to resume.'
            if epochs < start_epoch:
                LOGGER.info(f"{weights} has been trained for {ckpt['epoch']} epochs. Fine-tuning for {epochs} more epochs.")
                epochs += ckpt['epoch']  # finetune additional epochs

            del ckpt, csd

        # DP mode
        if cuda and RANK == -1 and torch.cuda.device_count() > 1:
            LOGGER.warning('WARNING: DP not recommended, use torch.distributed.run for best DDP Multi-GPU results.\n'
                           'See Multi-GPU Tutorial at https://github.com/ultralytics/yolov5/issues/475 to get started.')
            model = torch.nn.DataParallel(model)

        # SyncBatchNorm
        if opt.sync_bn and cuda and RANK != -1:
            model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(model).to(device)
            LOGGER.info('Using SyncBatchNorm()')

        # Trainloader
        train_loader, dataset = create_dataloader(train_path, imgsz, batch_size // WORLD_SIZE, gs, single_cls,
                                                  hyp=hyp, augment=True, cache=opt.cache, rect=opt.rect, rank=LOCAL_RANK,
                                                  workers=workers, image_weights=opt.image_weights, quad=opt.quad,
                                                  prefix=colorstr('train: '), shuffle=True)
        mlc = int(np.concatenate(dataset.labels, 0)[:, 0].max())  # max label class
        nb = len(train_loader)  # number of batches
        assert mlc < nc, f'Label class {mlc} exceeds nc={nc} in {data}. Possible class labels are 0-{nc - 1}'

        # Process 0
        if RANK in [-1, 0]:
            val_loader = create_dataloader(val_path, imgsz, batch_size // WORLD_SIZE * 2, gs, single_cls,
                                           hyp=hyp, cache=None if noval else opt.cache, rect=True, rank=-1,
                                           workers=workers, pad=0.5,
                                           prefix=colorstr('val: '))[0]

            if not resume:
                labels = np.concatenate(dataset.labels, 0)
                # c = torch.tensor(labels[:, 0])  # classes
                # cf = torch.bincount(c.long(), minlength=nc) + 1.  # frequency
                # model._initialize_biases(cf.to(device))
                if plots:
                    plot_labels(labels, names, save_dir)

                # Anchors
                if not opt.noautoanchor:
                    check_anchors(dataset, model=model, thr=hyp['anchor_t'], imgsz=imgsz)
                model.half().float()  # pre-reduce anchor precision

            callbacks.run('on_pretrain_routine_end')

        # DDP mode
        if cuda and RANK != -1:
            model = DDP(model, device_ids=[LOCAL_RANK], output_device=LOCAL_RANK)

        # Model attributes
        nl = de_parallel(model).model[-1].nl  # number of detection layers (to scale hyps)
        hyp['box'] *= 3 / nl  # scale to layers
        hyp['cls'] *= nc / 80 * 3 / nl  # scale to classes and layers
        hyp['obj'] *= (imgsz / 640) ** 2 * 3 / nl  # scale to image size and layers
        hyp['label_smoothing'] = opt.label_smoothing
        model.nc = nc  # attach number of classes to model
        model.hyp = hyp  # attach hyperparameters to model
        model.class_weights = labels_to_class_weights(dataset.labels, nc).to(device) * nc  # attach class weights
        model.names = names

        # Start training
        t0 = time.time()
        nw = max(round(hyp['warmup_epochs'] * nb), 1000)  # number of warmup iterations, max(3 epochs, 1k iterations)
        # nw = min(nw, (epochs - start_epoch) / 2 * nb)  # limit warmup to < 1/2 of training
        last_opt_step = -1
        maps = np.zeros(nc)  # mAP per class
        results = (0, 0, 0, 0, 0, 0, 0)  # P, R, mAP@.5, mAP@.5-.95, val_loss(box, obj, cls)
        scheduler.last_epoch = start_epoch - 1  # do not move
        scaler = amp.GradScaler(enabled=cuda)
        stopper = EarlyStopping(patience=opt.patience)
        compute_loss = ComputeLoss(model)  # init loss class
        LOGGER.info(f'Image sizes {imgsz} train, {imgsz} val\n'
                    f'Using {train_loader.num_workers * WORLD_SIZE} dataloader workers\n'
                    f"Logging results to {colorstr('bold', save_dir)}\n"
                    f'Starting training for {epochs} epochs...')
        for epoch in range(start_epoch, epochs):  # epoch ------------------------------------------------------------
            model.train()

            # Update image weights (optional, single-GPU only)
            if opt.image_weights:
                cw = model.class_weights.cpu().numpy() * (1 - maps) ** 2 / nc  # class weights
                iw = labels_to_image_weights(dataset.labels, nc=nc, class_weights=cw)  # image weights
                dataset.indices = random.choices(range(dataset.n), weights=iw, k=dataset.n)  # rand weighted idx

            # Update mosaic border (optional)
            # b = int(random.uniform(0.25 * imgsz, 0.75 * imgsz + gs) // gs * gs)
            # dataset.mosaic_border = [b - imgsz, -b]  # height, width borders

            mloss = torch.zeros(3, device=device)  # mean losses
            if RANK != -1:
                train_loader.sampler.set_epoch(epoch)
            pbar = enumerate(train_loader)
            LOGGER.info(('\n' + '%10s' * 7) % ('Epoch', 'gpu_mem', 'box', 'obj', 'cls', 'labels', 'img_size'))
            if RANK in [-1, 0]:
                pbar = tqdm(pbar, total=nb, ncols=NCOLS, bar_format='{l_bar}{bar:10}{r_bar}{bar:-10b}')  # progress bar
            optimizer.zero_grad()
            for i, (imgs, targets, paths, _) in pbar:  # batch -------------------------------------------------------
                ni = i + nb * epoch  # number integrated batches (since train start)
                imgs = imgs.to(device, non_blocking=True).float() / 255  # uint8 to float32, 0-255 to 0.0-1.0

                # Warmup
                if ni <= nw:
                    xi = [0, nw]  # x interp
                    # compute_loss.gr = np.interp(ni, xi, [0.0, 1.0])  # iou loss ratio (obj_loss = 1.0 or iou)
                    accumulate = max(1, np.interp(ni, xi, [1, nbs / batch_size]).round())
                    for j, x in enumerate(optimizer.param_groups):
                        # bias lr falls from 0.1 to lr0, all other lrs rise from 0.0 to lr0
                        x['lr'] = np.interp(ni, xi, [hyp['warmup_bias_lr'] if j == 2 else 0.0, x['initial_lr'] * lf(epoch)])
                        if 'momentum' in x:
                            x['momentum'] = np.interp(ni, xi, [hyp['warmup_momentum'], hyp['momentum']])

                # Multi-scale
                if opt.multi_scale:
                    # int() keeps the bounds integral; newer Python versions reject float arguments to randrange
                    sz = random.randrange(int(imgsz * 0.5), int(imgsz * 1.5) + gs) // gs * gs  # size
                    sf = sz / max(imgs.shape[2:])  # scale factor
                    if sf != 1:
                        ns = [math.ceil(x * sf / gs) * gs for x in imgs.shape[2:]]  # new shape (stretched to gs-multiple)
                        imgs = nn.functional.interpolate(imgs, size=ns, mode='bilinear', align_corners=False)

                # Forward
                with amp.autocast(enabled=cuda):
                    pred = model(imgs)  # forward
                    loss, loss_items = compute_loss(pred, targets.to(device))  # loss scaled by batch_size
                    if RANK != -1:
                        loss *= WORLD_SIZE  # gradient averaged between devices in DDP mode
                    if opt.quad:
                        loss *= 4.

                # Backward
                scaler.scale(loss).backward()

                # Optimize
                if ni - last_opt_step >= accumulate:
                    scaler.step(optimizer)  # optimizer.step
                    scaler.update()
                    optimizer.zero_grad()
                    if ema:
                        ema.update(model)
                    last_opt_step = ni

                # Log
                if RANK in [-1, 0]:
                    mloss = (mloss * i + loss_items) / (i + 1)  # update mean losses
                    mem = f'{torch.cuda.memory_reserved() / 1E9 if torch.cuda.is_available() else 0:.3g}G'  # (GB)
                    pbar.set_description(('%10s' * 2 + '%10.4g' * 5) % (
                        f'{epoch}/{epochs - 1}', mem, *mloss, targets.shape[0], imgs.shape[-1]))
                    callbacks.run('on_train_batch_end', ni, model, imgs, targets, paths, plots, opt.sync_bn)
                # end batch ------------------------------------------------------------------------------------------

            # Scheduler
            lr = [x['lr'] for x in optimizer.param_groups]  # for loggers
            scheduler.step()

            if RANK in [-1, 0]:
                # mAP
                callbacks.run('on_train_epoch_end', epoch=epoch)
                ema.update_attr(model, include=['yaml', 'nc', 'hyp', 'names', 'stride', 'class_weights'])
                final_epoch = (epoch + 1 == epochs) or stopper.possible_stop
                if not noval or final_epoch:  # Calculate mAP
                    results, maps, _ = val.run(data_dict,
                                               batch_size=batch_size // WORLD_SIZE * 2,
                                               imgsz=imgsz,
                                               model=ema.ema,
                                               single_cls=single_cls,
                                               dataloader=val_loader,
                                               save_dir=save_dir,
                                               plots=False,
                                               callbacks=callbacks,
                                               compute_loss=compute_loss)

                # Update best mAP
                fi = fitness(np.array(results).reshape(1, -1))  # weighted combination of [P, R, mAP@.5, mAP@.5-.95]
                if fi > best_fitness:
                    best_fitness = fi
                log_vals = list(mloss) + list(results) + lr
                callbacks.run('on_fit_epoch_end', log_vals, epoch, best_fitness, fi)

                # Save model
                if (not nosave) or (final_epoch and not evolve):  # if save
                    ckpt = {'epoch': epoch,
                            'best_fitness': best_fitness,
                            'model': deepcopy(de_parallel(model)).half(),
                            'ema': deepcopy(ema.ema).half(),
                            'updates': ema.updates,
                            'optimizer': optimizer.state_dict(),
                            'wandb_id': loggers.wandb.wandb_run.id if loggers.wandb else None,
                            'date': datetime.now().isoformat()}

                    # Save last, best and delete
                    torch.save(ckpt, last)
                    if best_fitness == fi:
                        torch.save(ckpt, best)
                    if (epoch > 0) and (opt.save_period > 0) and (epoch % opt.save_period == 0):
                        torch.save(ckpt, w / f'epoch{epoch}.pt')
                    del ckpt
                    callbacks.run('on_model_save', last, epoch, final_epoch, best_fitness, fi)

                # Stop Single-GPU
                if RANK == -1 and stopper(epoch=epoch, fitness=fi):
                    break

                # Stop DDP TODO: known issues https://github.com/ultralytics/yolov5/pull/4576
                # stop = stopper(epoch=epoch, fitness=fi)
                # if RANK == 0:
                #    dist.broadcast_object_list([stop], 0)  # broadcast 'stop' to all ranks

            # Stop DDP
            # with torch_distributed_zero_first(RANK):
            # if stop:
            #    break  # must break all DDP ranks

            # end epoch ----------------------------------------------------------------------------------------------
        # end training -----------------------------------------------------------------------------------------------
        if RANK in [-1, 0]:
            LOGGER.info(f'\n{epoch - start_epoch + 1} epochs completed in {(time.time() - t0) / 3600:.3f} hours.')
            for f in last, best:
                if f.exists():
                    strip_optimizer(f)  # strip optimizers
                    if f is best:
                        LOGGER.info(f'\nValidating {f}...')
                        results, _, _ = val.run(data_dict,
                                                batch_size=batch_size // WORLD_SIZE * 2,
                                                imgsz=imgsz,
                                                model=attempt_load(f, device).half(),
                                                iou_thres=0.65 if is_coco else 0.60,  # best pycocotools results at 0.65
                                                single_cls=single_cls,
                                                dataloader=val_loader,
                                                save_dir=save_dir,
                                                save_json=is_coco,
                                                verbose=True,
                                                plots=True,
                                                callbacks=callbacks,
                                                compute_loss=compute_loss)  # val best model with plots
                        if is_coco:
                            callbacks.run('on_fit_epoch_end', list(mloss) + list(results) + lr, epoch, best_fitness, fi)

            callbacks.run('on_train_end', last, best, plots, epoch, results)
            LOGGER.info(f"Results saved to {colorstr('bold', save_dir)}")

        torch.cuda.empty_cache()
        return results


    # TODO: try out each of these models first, train them one after another, then test on a public dataset.

    def parse_opt(known=False):
        parser = argparse.ArgumentParser()
        parser.add_argument('--weights', type=str, default=ROOT / 'pretrained/best.pt', help='initial weights path')
        parser.add_argument('--cfg', type=str, default=ROOT / 'models/yolov5s.yaml', help='model.yaml path')
        parser.add_argument('--data', type=str, default=ROOT / 'data/data.yaml', help='dataset.yaml path')
        parser.add_argument('--hyp', type=str, default=ROOT / 'data/hyps/hyp.scratch.yaml', help='hyperparameters path')
        parser.add_argument('--epochs', type=int, default=300)
        parser.add_argument('--batch-size', type=int, default=4, help='total batch size for all GPUs, -1 for autobatch')
        parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=640, help='train, val image size (pixels)')
        parser.add_argument('--rect', action='store_true', help='rectangular training')
        parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')
        parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')
        parser.add_argument('--noval', action='store_true', help='only validate final epoch')
        parser.add_argument('--noautoanchor', action='store_true', help='disable autoanchor check')
        parser.add_argument('--evolve', type=int, nargs='?', const=300, help='evolve hyperparameters for x generations')
        parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')
        parser.add_argument('--cache', type=str, nargs='?', const='ram', help='--cache images in "ram" (default) or "disk"')
        parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')
        parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
        # parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')
        # Modified from upstream: multi-scale training is enabled by default
        parser.add_argument('--multi-scale', default=True, help='vary img-size +/- 50%%')
        parser.add_argument('--single-cls', action='store_true', help='train multi-class data as single-class')
        parser.add_argument('--adam', action='store_true', help='use torch.optim.Adam() optimizer')
        parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')
        parser.add_argument('--workers', type=int, default=0, help='max dataloader workers (per RANK in DDP mode)')
        parser.add_argument('--project', default=ROOT / 'runs/train', help='save to project/name')
        parser.add_argument('--name', default='exp', help='save to project/name')
        parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
        parser.add_argument('--quad', action='store_true', help='quad dataloader')
        parser.add_argument('--linear-lr', action='store_true', help='linear LR')
        parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')
        parser.add_argument('--patience', type=int, default=100, help='EarlyStopping patience (epochs without improvement)')
        parser.add_argument('--freeze', type=int, default=0, help='Number of layers to freeze. backbone=10, all=24')
        parser.add_argument('--save-period', type=int, default=-1, help='Save checkpoint every x epochs (disabled if < 1)')
        parser.add_argument('--local_rank', type=int, default=-1, help='DDP parameter, do not modify')

        # Weights & Biases arguments
        parser.add_argument('--entity', default=None, help='W&B: Entity')
        parser.add_argument('--upload_dataset', action='store_true', help='W&B: Upload dataset as artifact table')
        parser.add_argument('--bbox_interval', type=int, default=-1, help='W&B: Set bounding-box image logging interval')
        parser.add_argument('--artifact_alias', type=str, default='latest', help='W&B: Version of dataset artifact to use')

        opt = parser.parse_known_args()[0] if known else parser.parse_args()
        return opt


    def main(opt, callbacks=Callbacks()):
        # Checks
        if RANK in [-1, 0]:
            print_args(FILE.stem, opt)
            check_git_status()
            check_requirements(exclude=['thop'])

        # Resume
        if opt.resume and not check_wandb_resume(opt) and not opt.evolve:  # resume an interrupted run
            ckpt = opt.resume if isinstance(opt.resume, str) else get_latest_run()  # specified or most recent path
            assert os.path.isfile(ckpt), 'ERROR: --resume checkpoint does not exist'
            with open(Path(ckpt).parent.parent / 'opt.yaml', errors='ignore') as f:
                opt = argparse.Namespace(**yaml.safe_load(f))  # replace
            opt.cfg, opt.weights, opt.resume = '', ckpt, True  # reinstate
            LOGGER.info(f'Resuming training from {ckpt}')
        else:
            opt.data, opt.cfg, opt.hyp, opt.weights, opt.project = \
                check_file(opt.data), check_yaml(opt.cfg), check_yaml(opt.hyp), str(opt.weights), str(opt.project)  # checks
            assert len(opt.cfg) or len(opt.weights), 'either --cfg or --weights must be specified'
            if opt.evolve:
                opt.project = str(ROOT / 'runs/evolve')
                opt.exist_ok, opt.resume = opt.resume, False  # pass resume to exist_ok and disable resume
            opt.save_dir = str(increment_path(Path(opt.project) / opt.name, exist_ok=opt.exist_ok))

        # DDP mode
        device = select_device(opt.device, batch_size=opt.batch_size)
        if LOCAL_RANK != -1:
            assert torch.cuda.device_count() > LOCAL_RANK, 'insufficient CUDA devices for DDP command'
            assert opt.batch_size % WORLD_SIZE == 0, '--batch-size must be multiple of CUDA device count'
            assert not opt.image_weights, '--image-weights argument is not compatible with DDP training'
            assert not opt.evolve, '--evolve argument is not compatible with DDP training'
            torch.cuda.set_device(LOCAL_RANK)
            device = torch.device('cuda', LOCAL_RANK)
            dist.init_process_group(backend="nccl" if dist.is_nccl_available() else "gloo")

        # Train
        if not opt.evolve:
            train(opt.hyp, opt, device, callbacks)
            if WORLD_SIZE > 1 and RANK == 0:
                LOGGER.info('Destroying process group... ')
                dist.destroy_process_group()

        # Evolve hyperparameters (optional)
        else:
            # Hyperparameter evolution metadata (mutation scale 0-1, lower_limit, upper_limit)
            meta = {'lr0': (1, 1e-5, 1e-1),  # initial learning rate (SGD=1E-2, Adam=1E-3)
                    'lrf': (1, 0.01, 1.0),  # final OneCycleLR learning rate (lr0 * lrf)
                    'momentum': (0.3, 0.6, 0.98),  # SGD momentum/Adam beta1
                    'weight_decay': (1, 0.0, 0.001),  # optimizer weight decay
                    'warmup_epochs': (1, 0.0, 5.0),  # warmup epochs (fractions ok)
                    'warmup_momentum': (1, 0.0, 0.95),  # warmup initial momentum
                    'warmup_bias_lr': (1, 0.0, 0.2),  # warmup initial bias lr
                    'box': (1, 0.02, 0.2),  # box loss gain
                    'cls': (1, 0.2, 4.0),  # cls loss gain
                    'cls_pw': (1, 0.5, 2.0),  # cls BCELoss positive_weight
                    'obj': (1, 0.2, 4.0),  # obj loss gain (scale with pixels)
                    'obj_pw': (1, 0.5, 2.0),  # obj BCELoss positive_weight
                    'iou_t': (0, 0.1, 0.7),  # IoU training threshold
                    'anchor_t': (1, 2.0, 8.0),  # anchor-multiple threshold
                    'anchors': (2, 2.0, 10.0),  # anchors per output grid (0 to ignore)
                    'fl_gamma': (0, 0.0, 2.0),  # focal loss gamma (efficientDet default gamma=1.5)
                    'hsv_h': (1, 0.0, 0.1),  # image HSV-Hue augmentation (fraction)
                    'hsv_s': (1, 0.0, 0.9),  # image HSV-Saturation augmentation (fraction)
                    'hsv_v': (1, 0.0, 0.9),  # image HSV-Value augmentation (fraction)
                    'degrees': (1, 0.0, 45.0),  # image rotation (+/- deg)
                    'translate': (1, 0.0, 0.9),  # image translation (+/- fraction)
                    'scale': (1, 0.0, 0.9),  # image scale (+/- gain)
                    'shear': (1, 0.0, 10.0),  # image shear (+/- deg)
                    'perspective': (0, 0.0, 0.001),  # image perspective (+/- fraction), range 0-0.001
                    'flipud': (1, 0.0, 1.0),  # image flip up-down (probability)
                    'fliplr': (0, 0.0, 1.0),  # image flip left-right (probability)
                    'mosaic': (1, 0.0, 1.0),  # image mosaic (probability)
                    'mixup': (1, 0.0, 1.0),  # image mixup (probability)
                    'copy_paste': (1, 0.0, 1.0)}  # segment copy-paste (probability)

            with open(opt.hyp, errors='ignore') as f:
                hyp = yaml.safe_load(f)  # load hyps dict
                if 'anchors' not in hyp:  # anchors commented in hyp.yaml
                    hyp['anchors'] = 3
            opt.noval, opt.nosave, save_dir = True, True, Path(opt.save_dir)  # only val/save final epoch
            # ei = [isinstance(x, (int, float)) for x in hyp.values()]  # evolvable indices
            evolve_yaml, evolve_csv = save_dir / 'hyp_evolve.yaml', save_dir / 'evolve.csv'
            if opt.bucket:
                os.system(f'gsutil cp gs://{opt.bucket}/evolve.csv {save_dir}')  # download evolve.csv if exists

            for _ in range(opt.evolve):  # generations to evolve
                if evolve_csv.exists():  # if evolve.csv exists: select best hyps and mutate
                    # Select parent(s)
                    parent = 'single'  # parent selection method: 'single' or 'weighted'
                    x = np.loadtxt(evolve_csv, ndmin=2, delimiter=',', skiprows=1)
                    n = min(5, len(x))  # number of previous results to consider
                    x = x[np.argsort(-fitness(x))][:n]  # top n mutations
                    w = fitness(x) - fitness(x).min() + 1E-6  # weights (sum > 0)
                    if parent == 'single' or len(x) == 1:
                        # x = x[random.randint(0, n - 1)]  # random selection
                        x = x[random.choices(range(n), weights=w)[0]]  # weighted selection
                    elif parent == 'weighted':
                        x = (x * w.reshape(n, 1)).sum(0) / w.sum()  # weighted combination

                    # Mutate
                    mp, s = 0.8, 0.2  # mutation probability, sigma
                    npr = np.random
                    npr.seed(int(time.time()))
                    g = np.array([meta[k][0] for k in hyp.keys()])  # gains 0-1
                    ng = len(meta)
                    v = np.ones(ng)
                    while all(v == 1):  # mutate until a change occurs (prevent duplicates)
                        v = (g * (npr.random(ng) < mp) * npr.randn(ng) * npr.random() * s + 1).clip(0.3, 3.0)
                    for i, k in enumerate(hyp.keys()):  # plt.hist(v.ravel(), 300)
                        hyp[k] = float(x[i + 7] * v[i])  # mutate

                # Constrain to limits
                for k, v in meta.items():
                    hyp[k] = max(hyp[k], v[1])  # lower limit
                    hyp[k] = min(hyp[k], v[2])  # upper limit
                    hyp[k] = round(hyp[k], 5)  # significant digits

                # Train mutation
                results = train(hyp.copy(), opt, device, callbacks)

                # Write mutation results
                print_mutation(results, hyp.copy(), save_dir, opt.bucket)

            # Plot results
            plot_evolve(evolve_csv)
            LOGGER.info(f'Hyperparameter evolution finished\n'
                        f"Results saved to {colorstr('bold', save_dir)}\n"
                        f'Use best hyperparameters example: $ python train.py --hyp {evolve_yaml}')


    def run(**kwargs):
        # Usage: import train; train.run(data='coco128.yaml', imgsz=320, weights='yolov5m.pt')
        opt = parse_opt(True)
        for k, v in kwargs.items():
            setattr(opt, k, v)
        main(opt)


    # python train.py --data mask_data.yaml --cfg mask_yolov5s.yaml --weights pretrained/best.pt --epochs 100 --batch-size 4 --device cpu
    # python train.py --data mask_data.yaml --cfg mask_yolov5l.yaml --weights pretrained/yolov5l.pt --epochs 100 --batch-size 4
    # python train.py --data mask_data.yaml --cfg mask_yolov5m.yaml --weights pretrained/yolov5m.pt --epochs 100 --batch-size 4

    if __name__ == "__main__":
        opt = parse_opt()
        main(opt)
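The optimizer section of the script normalizes training to a nominal batch size of 64: gradients are accumulated over several batches, and `weight_decay` is rescaled by the effective batch size. The arithmetic can be sketched in isolation as below. The helper name `accumulation_plan` is hypothetical, and the base weight decay of `0.0005` is an assumed example value, not one taken from this project's hyperparameter file.

```python
NBS = 64  # nominal batch size, same constant as in the training script


def accumulation_plan(batch_size, weight_decay, nbs=NBS):
    """Return (accumulate, scaled_weight_decay) for a given batch size."""
    # Number of batches whose gradients are summed before one optimizer step.
    accumulate = max(round(nbs / batch_size), 1)
    # Weight decay is scaled by effective batch size relative to nominal.
    scaled = weight_decay * batch_size * accumulate / nbs
    return accumulate, scaled


for bs in (4, 8, 24, 48):
    acc, wd = accumulation_plan(bs, weight_decay=0.0005)  # assumed base value
    print(f"batch_size={bs:>2}  accumulate={acc:>2}  weight_decay={wd:.7f}")
```

With `--batch-size 8` from the example command, gradients are accumulated over 8 batches and the weight decay is left unchanged, since 8 × 8 equals the nominal 64.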

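The scheduler section chooses between a linear decay (`--linear-lr`) and a cosine decay produced by `one_cycle(1, hyp['lrf'], epochs)`; either function is passed to `LambdaLR` as a per-epoch multiplier on the initial learning rate. The sketch below compares the two multipliers without PyTorch. The linear form is copied from the script; the cosine form is an assumption about what the upstream `one_cycle` helper computes, since its body is not shown in this article, and `lrf = 0.1` is an assumed example value.

```python
import math


def linear_lf(epochs, lrf):
    """Linear multiplier from 1.0 down to lrf, as written in the script."""
    return lambda x: (1 - x / (epochs - 1)) * (1.0 - lrf) + lrf


def cosine_lf(epochs, lrf, start=1.0):
    """Cosine ramp from start to lrf over `epochs` steps (assumed one_cycle form)."""
    return lambda x: ((1 - math.cos(x * math.pi / epochs)) / 2) * (lrf - start) + start


epochs, lrf = 300, 0.1  # lrf is an assumed example value
lin, cos_ = linear_lf(epochs, lrf), cosine_lf(epochs, lrf)
for e in (0, 75, 150, 225, 299):
    print(f"epoch {e:>3}: linear x{lin(e):.3f}  cosine x{cos_(e):.3f}")
```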