本文主要是介绍netfilter之conntrack连接跟踪,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

连接跟踪conntrack是状态防火墙和NAT的基础,每个经过conntrack处理的数据包的skb->nfctinfo都会设置如下值之一,后续流程中NAT模块根据此值做不同的处理,filter模块可以在扩展匹配中指定state进行不同的处理。

enum ip_conntrack_info {/* Part of an established connection (either direction). *///收到双向报文,连接已经建立,对original方向报文设置此标志IP_CT_ESTABLISHED,/* Like NEW, but related to an existing connection, or ICMP error(in either direction). */IP_CT_RELATED,/* Started a new connection to track (onlyIP_CT_DIR_ORIGINAL); may be a retransmission. *///收到original方向数据包,连接还未建立IP_CT_NEW,/* >= this indicates reply direction *///收到reply方向数据包,说明连接建立IP_CT_IS_REPLY,IP_CT_ESTABLISHED_REPLY = IP_CT_ESTABLISHED + IP_CT_IS_REPLY,IP_CT_RELATED_REPLY = IP_CT_RELATED + IP_CT_IS_REPLY,IP_CT_NEW_REPLY = IP_CT_NEW + IP_CT_IS_REPLY, /* Number of distinct IP_CT types (no NEW in reply dirn). */IP_CT_NUMBER = IP_CT_IS_REPLY * 2 - 1

};

nf hook函数注册

跟连接跟踪相关的hook函数包含下面两个:重组相关的和conntrack处理相关的。

注册报文重组hook函数。

static struct nf_hook_ops ipv4_defrag_ops[] = {{.hook = ipv4_conntrack_defrag,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_PRE_ROUTING,.priority = NF_IP_PRI_CONNTRACK_DEFRAG,},{.hook = ipv4_conntrack_defrag,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_LOCAL_OUT,.priority = NF_IP_PRI_CONNTRACK_DEFRAG,},

};

static int __init nf_defrag_init(void)

{return nf_register_hooks(ipv4_defrag_ops, ARRAY_SIZE(ipv4_defrag_ops));

}module_init(nf_defrag_init);

注册conntrack hook函数。

/* Connection tracking may drop packets, but never alters them, somake it the first hook. */

static struct nf_hook_ops ipv4_conntrack_ops[] __read_mostly = {{.hook = ipv4_conntrack_in,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_PRE_ROUTING,.priority = NF_IP_PRI_CONNTRACK,},{.hook = ipv4_conntrack_local,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_LOCAL_OUT,.priority = NF_IP_PRI_CONNTRACK,},{.hook = ipv4_helper,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_POST_ROUTING,.priority = NF_IP_PRI_CONNTRACK_HELPER,},{.hook = ipv4_confirm,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_POST_ROUTING,.priority = NF_IP_PRI_CONNTRACK_CONFIRM,},{.hook = ipv4_helper,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_LOCAL_IN,.priority = NF_IP_PRI_CONNTRACK_HELPER,},{.hook = ipv4_confirm,.owner = THIS_MODULE,.pf = NFPROTO_IPV4,.hooknum = NF_INET_LOCAL_IN,.priority = NF_IP_PRI_CONNTRACK_CONFIRM,},

};

static int __init nf_conntrack_l3proto_ipv4_init(void)

{...ret = nf_register_hooks(ipv4_conntrack_ops,ARRAY_SIZE(ipv4_conntrack_ops));...

}

上面的hook函数会注册到二维数组nf_hook中。

enum nf_inet_hooks {NF_INET_PRE_ROUTING,NF_INET_LOCAL_IN,NF_INET_FORWARD,NF_INET_LOCAL_OUT,NF_INET_POST_ROUTING,NF_INET_NUMHOOKS

};

enum {NFPROTO_UNSPEC = 0,NFPROTO_INET = 1,NFPROTO_IPV4 = 2,NFPROTO_ARP = 3,NFPROTO_BRIDGE = 7,NFPROTO_IPV6 = 10,NFPROTO_DECNET = 12,NFPROTO_NUMPROTO,

};

extern struct list_head nf_hooks[NFPROTO_NUMPROTO][NF_MAX_HOOKS];int nf_register_hooks(struct nf_hook_ops *reg, unsigned int n)

{unsigned int i;for (i = 0; i < n; i++) {nf_register_hook(®[i]);}

}int nf_register_hook(struct nf_hook_ops *reg)

{struct nf_hook_ops *elem;mutex_lock(&nf_hook_mutex);list_for_each_entry(elem, &nf_hooks[reg->pf][reg->hooknum], list) {if (reg->priority < elem->priority)break;}list_add_rcu(®->list, elem->list.prev);mutex_unlock(&nf_hook_mutex);

#ifdef HAVE_JUMP_LABELstatic_key_slow_inc(&nf_hooks_needed[reg->pf][reg->hooknum]);

#endifreturn 0;

}

重组和conntrack hook注册成功后,nf_hook内容如下标黄,这也是ipv4的连接跟踪模块用到的hook函数,小括号中的数字是hook函数的优先级。在同一个hook点上,数字越小优先级越高。

发送给本机的数据会经过 NF_INET_PRE_ROUTING 和 NF_INET_LOCAL_IN 两个hook点,所以hook函数调用顺序为:

ipv4_conntrack_defrag -> ipv4_conntrack_in -> ipv4_helper -> ipv4_confirm。

由本机转发的数据会经过 NF_INET_PRE_ROUTING 和 NF_INET_FORWARD 和 NF_INET_POST_ROUTING 三个hook点,所以hook函数调用顺序为:

ipv4_conntrack_defrag -> ipv4_conntrack_in -> ipv4_helper -> ipv4_confirm。

本机发送的数据会经过 NF_INET_LOCAL_OUT 和NF_INET_POST_ROUTING 两个hook点,所以hook函数调用顺序为:

ipv4_conntrack_defrag -> ipv4_conntrack_local -> ipv4_helper -> ipv4_confirm。

可看到不管数据包从哪来到哪去,经过的连接跟踪模块处理基本是一样的,唯一的区别是ipv4_conntrack_in和ipv4_conntrack_local,后者增加了对数据包长度的校验,即只有从本机发出去的报文才需要校验长度。

static unsigned int ipv4_conntrack_in(const struct nf_hook_ops *ops,struct sk_buff *skb,const struct net_device *in,const struct net_device *out,int (*okfn)(struct sk_buff *))

{return nf_conntrack_in(dev_net(in), PF_INET, ops->hooknum, skb);

}static unsigned int ipv4_conntrack_local(const struct nf_hook_ops *ops,struct sk_buff *skb,const struct net_device *in,const struct net_device *out,int (*okfn)(struct sk_buff *))

{/* root is playing with raw sockets. */if (skb->len < sizeof(struct iphdr) ||ip_hdrlen(skb) < sizeof(struct iphdr))return NF_ACCEPT;return nf_conntrack_in(dev_net(out), PF_INET, ops->hooknum, skb);

}

hook函数执行

下面分别分析这四个hook函数。

- ipv4_conntrack_defrag

重组分片报文。重组完整前不让数据包进行下一步

static unsigned int ipv4_conntrack_defrag(const struct nf_hook_ops *ops,struct sk_buff *skb,const struct net_device *in,const struct net_device *out,int (*okfn)(struct sk_buff *))

{struct sock *sk = skb->sk;struct inet_sock *inet = inet_sk(skb->sk);//对于PF_INET类型的socket,并且inet->nodefrag置位了,则//不允许重组,返回NF_ACCEPTif (sk && (sk->sk_family == PF_INET) &&inet->nodefrag)return NF_ACCEPT;#if IS_ENABLED(CONFIG_NF_CONNTRACK)

#if !IS_ENABLED(CONFIG_NF_NAT)/* Previously seen (loopback)? Ignore. Do this beforefragment check. *///nfct不为空,并且没有IPS_TEMPLATE_BIT标志,说明此ct是//在raw表匹配到target为notrack的规则。if (skb->nfct && !nf_ct_is_template((struct nf_conn *)skb->nfct))return NF_ACCEPT;

#endif

#endif/* Gather fragments. *///如果是分片报文,只处理分片报文if (ip_is_fragment(ip_hdr(skb))) {//获取重组的user。user表示谁来执行重组,或者说在哪个//模块哪个阶段重组enum ip_defrag_users user =nf_ct_defrag_user(ops->hooknum, skb);//返回值为非零表示未完成重组(只收到第一片或者某几//片),需要将skb保存到队列,或者重组过程出错,此时//需要释放skb。if (nf_ct_ipv4_gather_frags(skb, user))return NF_STOLEN;}return NF_ACCEPT;

}

- nf_conntrack_in

连接跟踪处理的主函数nf_conntrack_in,其会用到l3proto和l4proto函数,先看一下这两组函数的注册。

注册l3proto Ipv4的处理函数。

struct nf_conntrack_l3proto nf_conntrack_l3proto_ipv4 __read_mostly = {.l3proto = PF_INET,.name = "ipv4",.pkt_to_tuple = ipv4_pkt_to_tuple, //获取源目的ip.invert_tuple = ipv4_invert_tuple, //源目的ip调换.print_tuple = ipv4_print_tuple, //打印出源目的ip.get_l4proto = ipv4_get_l4proto, //获取ip报文总长度和四层协议号

#if IS_ENABLED(CONFIG_NF_CT_NETLINK).tuple_to_nlattr = ipv4_tuple_to_nlattr,.nlattr_tuple_size = ipv4_nlattr_tuple_size,.nlattr_to_tuple = ipv4_nlattr_to_tuple,.nla_policy = ipv4_nla_policy,

#endif

#if defined(CONFIG_SYSCTL) && defined(CONFIG_NF_CONNTRACK_PROC_COMPAT).ctl_table_path = "net/ipv4/netfilter",

#endif.init_net = ipv4_init_net,.me = THIS_MODULE,

};

static int __init nf_conntrack_l3proto_ipv4_init(void)ret = nf_ct_l3proto_register(&nf_conntrack_l3proto_ipv4);int nf_ct_l3proto_register(struct nf_conntrack_l3proto *proto)

{int ret = 0;struct nf_conntrack_l3proto *old;if (proto->l3proto >= AF_MAX)return -EBUSY;if (proto->tuple_to_nlattr && !proto->nlattr_tuple_size)return -EINVAL;mutex_lock(&nf_ct_proto_mutex);old = rcu_dereference_protected(nf_ct_l3protos[proto->l3proto],lockdep_is_held(&nf_ct_proto_mutex));if (proto->nlattr_tuple_size)proto->nla_size = 3 * proto->nlattr_tuple_size();rcu_assign_pointer(nf_ct_l3protos[proto->l3proto], proto);

}

注册l4proto处理函数

int nf_ct_l4proto_register(struct nf_conntrack_l4proto *l4proto)rcu_assign_pointer(nf_ct_protos[l4proto->l3proto][l4proto->l4proto], l4proto);//ipv4的l4注册了tcp,udp和icmp这三种协议

static int __init nf_conntrack_l3proto_ipv4_init(void)ret = nf_ct_l4proto_register(&nf_conntrack_l4proto_tcp4);ret = nf_ct_l4proto_register(&nf_conntrack_l4proto_udp4);ret = nf_ct_l4proto_register(&nf_conntrack_l4proto_icmp);//以udp为例说明

struct nf_conntrack_l4proto nf_conntrack_l4proto_udp4 __read_mostly =

{.l3proto = PF_INET,.l4proto = IPPROTO_UDP,.name = "udp",.pkt_to_tuple = udp_pkt_to_tuple, //获取源目的port.invert_tuple = udp_invert_tuple,//源目的port调换.print_tuple = udp_print_tuple,//打印源目的port.packet = udp_packet, //更新ct中定时器超时时间并更新统计计数.get_timeouts = udp_get_timeouts, //获取ct超时时间.new = udp_new, //创建新ct时调用.error = udp_error,

#if IS_ENABLED(CONFIG_NF_CT_NETLINK).tuple_to_nlattr = nf_ct_port_tuple_to_nlattr,.nlattr_to_tuple = nf_ct_port_nlattr_to_tuple,.nlattr_tuple_size = nf_ct_port_nlattr_tuple_size,.nla_policy = nf_ct_port_nla_policy,

#endif

#if IS_ENABLED(CONFIG_NF_CT_NETLINK_TIMEOUT).ctnl_timeout = {.nlattr_to_obj = udp_timeout_nlattr_to_obj,.obj_to_nlattr = udp_timeout_obj_to_nlattr,.nlattr_max = CTA_TIMEOUT_UDP_MAX,.obj_size = sizeof(unsigned int) * CTA_TIMEOUT_UDP_MAX,.nla_policy = udp_timeout_nla_policy,},

#endif /* CONFIG_NF_CT_NETLINK_TIMEOUT */.init_net = udp_init_net,.get_net_proto = udp_get_net_proto,

};

nf_conntrack_in 会用到上面注册的l3proto和l4proto函数

unsigned int

nf_conntrack_in(struct net *net, u_int8_t pf, unsigned int hooknum,struct sk_buff *skb)

{struct nf_conn *ct, *tmpl = NULL;enum ip_conntrack_info ctinfo;struct nf_conntrack_l3proto *l3proto;struct nf_conntrack_l4proto *l4proto;unsigned int *timeouts;unsigned int dataoff;u_int8_t protonum;int set_reply = 0;int ret;//如果skb已经有ct了,并且有template标志//IPS_TEMPLATE_BIT,说明报文在raw表经过了//notrack处理,不用记录在连接跟踪表,可以直接返回。//如果有template标志IPS_TEMPLATE_BIT,说明是helper相关 //的处理,保存tmpl,将skb->nfct置空,后面重新给它分配ctif (skb->nfct) {/* Previously seen (loopback or untracked)? Ignore. */tmpl = (struct nf_conn *)skb->nfct;if (!nf_ct_is_template(tmpl)) {NF_CT_STAT_INC_ATOMIC(net, ignore);return NF_ACCEPT;}skb->nfct = NULL;}/* rcu_read_lock()ed by nf_hook_slow *///根据pf到nf_ct_l3protos获取l3proto//对于ipv4,l3proto为nf_conntrack_l3proto_ipv4,用于获取三层源目的ip。l3proto = __nf_ct_l3proto_find(pf);ret = l3proto->get_l4proto(skb, skb_network_offset(skb),&dataoff, &protonum);if (ret <= 0) {pr_debug("not prepared to track yet or error occurred\n");NF_CT_STAT_INC_ATOMIC(net, error);NF_CT_STAT_INC_ATOMIC(net, invalid);ret = -ret;goto out;}//根据pf和四层协议号到nf_ct_protos找l4proto。//对于udp协议来说,l4proto就是nf_conntrack_l4proto_udp4,//用于获取四层源目的端口号等信息。l4proto = __nf_ct_l4proto_find(pf, protonum);/* It may be an special packet, error, unclean...* inverse of the return code tells to the netfilter* core what to do with the packet. */if (l4proto->error != NULL) {ret = l4proto->error(net, tmpl, skb, dataoff, &ctinfo,pf, hooknum);if (ret <= 0) {NF_CT_STAT_INC_ATOMIC(net, error);NF_CT_STAT_INC_ATOMIC(net, invalid);ret = -ret;goto out;}/* ICMP[v6] protocol trackers may assign one conntrack. */if (skb->nfct)goto out;}ct = resolve_normal_ct(net, tmpl, skb, dataoff, pf, protonum,l3proto, l4proto, &set_reply, &ctinfo);if (!ct) {/* Not valid part of a connection */NF_CT_STAT_INC_ATOMIC(net, invalid);ret = NF_ACCEPT;goto out;}if (IS_ERR(ct)) {/* Too stressed to deal. */NF_CT_STAT_INC_ATOMIC(net, drop);ret = NF_DROP;goto out;}NF_CT_ASSERT(skb->nfct);/* Decide what timeout policy we want to apply to this flow. *///不同的四层协议根据各自特点提供了不同的超时时间,udp提供如下两种//static unsigned int udp_timeouts[UDP_CT_MAX] = {// [UDP_CT_UNREPLIED] = 30*HZ,// [UDP_CT_REPLIED] = 180*HZ,//};timeouts = nf_ct_timeout_lookup(net, ct, l4proto);//对于udp来说,调用udp_packet->nf_ct_refresh_acct更新ct超//时时间,保证此条数据流不断,ct就不会被删除。并且更新统//计计数到acct中。ret = l4proto->packet(ct, skb, dataoff, ctinfo, pf, hooknum, timeouts);if (ret <= 0) {/* Invalid: inverse of the return code tells* the netfilter core what to do */pr_debug("nf_conntrack_in: Can't track with proto module\n");nf_conntrack_put(skb->nfct);skb->nfct = NULL;NF_CT_STAT_INC_ATOMIC(net, invalid);if (ret == -NF_DROP)NF_CT_STAT_INC_ATOMIC(net, drop);ret = -ret;goto out;}//对于一个新创建的连接跟踪项后,当第一次收到reply方向的数//据包后,则会设置nf_conn->status的IPS_SEEN_REPLY_BIT//位为1,当设置成功且IPS_SEEN_REPLY_BIT位的原来值为0//时,则调用nf_conntrack_event_cache ,由nfnetlink模块处理//状态改变的事件。if (set_reply && !test_and_set_bit(IPS_SEEN_REPLY_BIT, &ct->status))nf_conntrack_event_cache(IPCT_REPLY, ct);

out:if (tmpl) {/* Special case: we have to repeat this hook, assign the* template again to this packet. We assume that this packet* has no conntrack assigned. This is used by nf_ct_tcp. */if (ret == NF_REPEAT)skb->nfct = (struct nf_conntrack *)tmpl;elsenf_ct_put(tmpl);}return ret;

}

/* On success, returns conntrack ptr, sets skb->nfct and ctinfo */

static inline struct nf_conn *

resolve_normal_ct(struct net *net, struct nf_conn *tmpl,struct sk_buff *skb,unsigned int dataoff,u_int16_t l3num,u_int8_t protonum,struct nf_conntrack_l3proto *l3proto,struct nf_conntrack_l4proto *l4proto,int *set_reply,enum ip_conntrack_info *ctinfo)

{struct nf_conntrack_tuple tuple;struct nf_conntrack_tuple_hash *h;struct nf_conn *ct;u16 zone = tmpl ? nf_ct_zone(tmpl) : NF_CT_DEFAULT_ZONE;u32 hash;//获取五元组信息if (!nf_ct_get_tuple(skb, skb_network_offset(skb),dataoff, l3num, protonum, &tuple, l3proto,l4proto)) {pr_debug("resolve_normal_ct: Can't get tuple\n");return NULL;}/* look for tuple match */hash = hash_conntrack_raw(&tuple, zone);//到全局confirm表net->ct.hash中查找是否已经存在此条流h = __nf_conntrack_find_get(net, zone, &tuple, hash);if (!h) {//如果查找不到,需要分配一个ct。根据tuple获取反方向的//reply tuple,将他俩赋值给ct的tuplehash。并将original//的tuplehash挂到 net.ct.pcpu_lists->unconfirmed表中h = init_conntrack(net, tmpl, &tuple, l3proto, l4proto,skb, dataoff, hash);if (!h)return NULL;if (IS_ERR(h))return (void *)h;}ct = nf_ct_tuplehash_to_ctrack(h);//如果是reply方向的数据包,设置 ctinfo = //IP_CT_ESTABLISHED_REPLY,//如果是original方向的数据包,分为如下几种情况://a. original方向第一个数据包,则设置ctinfo = IP_CT_NEW//b. original方向的非第一个数据包,并且已经收到reply的数据//包,则设置ctinfo = IP_CT_ESTABLISHED//c.original方向的数据包,并且是其他连接的期望连接,则设置ctinfo = IP_CT_RELATED//d. original方向的非第一个数据包,但是还没有收到reply包,也设置ctinfo = IP_CT_NEW/* It exists; we have (non-exclusive) reference. */if (NF_CT_DIRECTION(h) == IP_CT_DIR_REPLY) {*ctinfo = IP_CT_ESTABLISHED_REPLY;/* Please set reply bit if this packet OK */*set_reply = 1;} else {/* Once we've had two way comms, always ESTABLISHED. */if (test_bit(IPS_SEEN_REPLY_BIT, &ct->status)) {pr_debug("nf_conntrack_in: normal packet for %p\n", ct);*ctinfo = IP_CT_ESTABLISHED;} else if (test_bit(IPS_EXPECTED_BIT, &ct->status)) {pr_debug("nf_conntrack_in: related packet for %p\n",ct);*ctinfo = IP_CT_RELATED;} else {pr_debug("nf_conntrack_in: new packet for %p\n", ct);*ctinfo = IP_CT_NEW;}*set_reply = 0;}//将ct和ctinfo保存到数据包skb->nfct = &ct->ct_general;skb->nfctinfo = *ctinfo;return ct;

}//只有original方向的报文才会执行此函数

//分配ct

/* Allocate a new conntrack: we return -ENOMEM if classificationfailed due to stress. Otherwise it really is unclassifiable. */

static struct nf_conntrack_tuple_hash *

init_conntrack(struct net *net, struct nf_conn *tmpl,const struct nf_conntrack_tuple *tuple,struct nf_conntrack_l3proto *l3proto,struct nf_conntrack_l4proto *l4proto,struct sk_buff *skb,unsigned int dataoff, u32 hash)

{struct nf_conn *ct;struct nf_conn_help *help;struct nf_conntrack_tuple repl_tuple;struct nf_conntrack_ecache *ecache;struct nf_conntrack_expect *exp = NULL;u16 zone = tmpl ? nf_ct_zone(tmpl) : NF_CT_DEFAULT_ZONE;struct nf_conn_timeout *timeout_ext;unsigned int *timeouts;//由tuple获取reply方向的tupleif (!nf_ct_invert_tuple(&repl_tuple, tuple, l3proto, l4proto)) {pr_debug("Can't invert tuple.\n");return NULL;}ct = __nf_conntrack_alloc(net, zone, tuple, &repl_tuple, GFP_ATOMIC,hash);if (IS_ERR(ct))return (struct nf_conntrack_tuple_hash *)ct;if (tmpl && nfct_synproxy(tmpl)) {nfct_seqadj_ext_add(ct);nfct_synproxy_ext_add(ct);}timeout_ext = tmpl ? nf_ct_timeout_find(tmpl) : NULL;if (timeout_ext)timeouts = NF_CT_TIMEOUT_EXT_DATA(timeout_ext);elsetimeouts = l4proto->get_timeouts(net);if (!l4proto->new(ct, skb, dataoff, timeouts)) {nf_conntrack_free(ct);pr_debug("init conntrack: can't track with proto module\n");return NULL;}if (timeout_ext)nf_ct_timeout_ext_add(ct, timeout_ext->timeout, GFP_ATOMIC);nf_ct_acct_ext_add(ct, GFP_ATOMIC);nf_ct_tstamp_ext_add(ct, GFP_ATOMIC);nf_ct_labels_ext_add(ct);ecache = tmpl ? nf_ct_ecache_find(tmpl) : NULL;nf_ct_ecache_ext_add(ct, ecache ? ecache->ctmask : 0,ecache ? ecache->expmask : 0,GFP_ATOMIC);local_bh_disable();//如果有了期望连接,则需要到net->ct.expect_hash查找自己是//否是期望连接,如果是,需要设置 IPS_EXPECTED_BIT,并//将 ct->master 指向主连接if (net->ct.expect_count) {spin_lock(&nf_conntrack_expect_lock);exp = nf_ct_find_expectation(net, zone, tuple);if (exp) {pr_debug("conntrack: expectation arrives ct=%p exp=%p\n",ct, exp);/* Welcome, Mr. Bond. We've been expecting you... */__set_bit(IPS_EXPECTED_BIT, &ct->status);/* exp->master safe, refcnt bumped in nf_ct_find_expectation */ct->master = exp->master;if (exp->helper) {help = nf_ct_helper_ext_add(ct, exp->helper,GFP_ATOMIC);if (help)rcu_assign_pointer(help->helper, exp->helper);}#ifdef CONFIG_NF_CONNTRACK_MARKct->mark = exp->master->mark;

#endif

#ifdef CONFIG_NF_CONNTRACK_SECMARKct->secmark = exp->master->secmark;

#endifNF_CT_STAT_INC(net, expect_new);}spin_unlock(&nf_conntrack_expect_lock);}if (!exp) {__nf_ct_try_assign_helper(ct, tmpl, GFP_ATOMIC);NF_CT_STAT_INC(net, new);}/* Now it is inserted into the unconfirmed list, bump refcount */nf_conntrack_get(&ct->ct_general);//暂时将ct保存到本cpu的unconfirm链表中nf_ct_add_to_unconfirmed_list(ct);local_bh_enable();if (exp) {if (exp->expectfn)exp->expectfn(ct, exp);nf_ct_expect_put(exp);}return &ct->tuplehash[IP_CT_DIR_ORIGINAL];

}

- ipv4_help

ipv4_help主要是执行匹配到的helper函数,进行一些扩展操作,比如ftp的数据通道建立,nat的转换。可参考ftp提供的helper:help

static unsigned int ipv4_helper(const struct nf_hook_ops *ops,struct sk_buff *skb,const struct net_device *in,const struct net_device *out,int (*okfn)(struct sk_buff *))

{struct nf_conn *ct;enum ip_conntrack_info ctinfo;const struct nf_conn_help *help;const struct nf_conntrack_helper *helper;/* This is where we call the helper: as the packet goes out. */ct = nf_ct_get(skb, &ctinfo);if (!ct || ctinfo == IP_CT_RELATED_REPLY)return NF_ACCEPT;//从ct的扩展区域尝试获取helperhelp = nfct_help(ct);if (!help)return NF_ACCEPT;/* rcu_read_lock()ed by nf_hook_slow */helper = rcu_dereference(help->helper);if (!helper)return NF_ACCEPT;return helper->help(skb, skb_network_offset(skb) + ip_hdrlen(skb),ct, ctinfo);

}

- ipv4_confirm

ipv4_confirm是优先级最低的hook函数,数据包能走到这里就肯定不会被netfilter丢弃,所以可以将它的ct从uncomfirm(per-cpu)转到confirm(全局的)链表上。

static unsigned int ipv4_confirm(const struct nf_hook_ops *ops,struct sk_buff *skb,const struct net_device *in,const struct net_device *out,int (*okfn)(struct sk_buff *))

{struct nf_conn *ct;enum ip_conntrack_info ctinfo;ct = nf_ct_get(skb, &ctinfo);if (!ct || ctinfo == IP_CT_RELATED_REPLY)goto out;/* adjust seqs for loopback traffic only in outgoing direction */if (test_bit(IPS_SEQ_ADJUST_BIT, &ct->status) &&!nf_is_loopback_packet(skb)) {if (!nf_ct_seq_adjust(skb, ct, ctinfo, ip_hdrlen(skb))) {NF_CT_STAT_INC_ATOMIC(nf_ct_net(ct), drop);return NF_DROP;}}

out:/* We've seen it coming out the other side: confirm it */return nf_conntrack_confirm(skb);

}/* Confirm a connection: returns NF_DROP if packet must be dropped. */

static inline int nf_conntrack_confirm(struct sk_buff *skb)

{struct nf_conn *ct = (struct nf_conn *)skb->nfct;int ret = NF_ACCEPT;if (ct && !nf_ct_is_untracked(ct)) {if (!nf_ct_is_confirmed(ct))//将 ct->tuplehash[IP_CT_DIR_ORIGINAL] 从//unconfirm hash链上删除,并将ct-//>tuplehash[IP_CT_DIR_ORIGINAL]//和ct->tuplehash[IP_CT_DIR_REPLY]根据hash同时//添加到全局confirm hash链上ret = __nf_conntrack_confirm(skb);if (likely(ret == NF_ACCEPT))//调用通知链上的函数通知netlink模块nf_ct_deliver_cached_events(ct);}return ret;

}

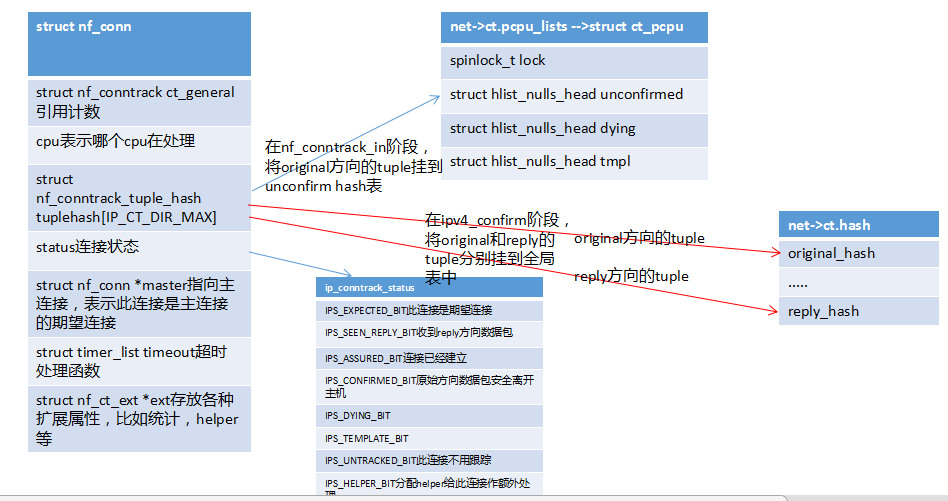

连接跟踪是个基础模块,总结如下图,其他利用它实现功能的模块在其他文章中记录。

也可参考:netfilter之conntrack连接跟踪 - 简书 (jianshu.com)

这篇关于netfilter之conntrack连接跟踪的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!