本文主要是介绍认知智能最新研究成果,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

声明:以下内容仅代表个人对现象和本质探索,不代表对学术成果评价。曾有幸和马文明斯基的学生段老师和方老师一起讨论过人工智能问题。随着自己对问题进一步理解,刚好18年左右开始接触认知智能理论核心认知计算部分。

第一:算法是一种处理问题的逻辑(从认识论看其实解决问题方法有很多种),并且能用计算机指令在有限步骤和时间根据特定输出给出特定输出。

第二:机器学习是通过某种单模态(其实本质是表示客观存在的数据类型单一描述)的数据通过近似计算方法解决大规模问题复杂性和不确定性。

第三:软件和硬件在实现逻辑应该是等效的(计算机组成原理有相关定义理论),离散数学和组合数学在理论上基本奠定了计算机本身的计算能力。

第四:深度学习是随着并行计算和异构计算发展起来的,并非算法本身有什么重大革命性的突破工作。ResNet在最大贡献是通过恒等映射理论实现了残差卷积。AlexNet的突破性成就是通过并行计算实现了多卡浮点运算解决了大规模矩阵在计算机视觉上的工程性突出贡献。

第五:深度学习的局限性并不是数据量多少问题的,而是在理论数据和实际数据中的模态关系,这才是导致过拟合和欠拟合现象存在的本质问题,多模态问题其实可以让问题本身在知识表示上更加准确。预训练解决了模型在不同数据上训练和泛化问题。

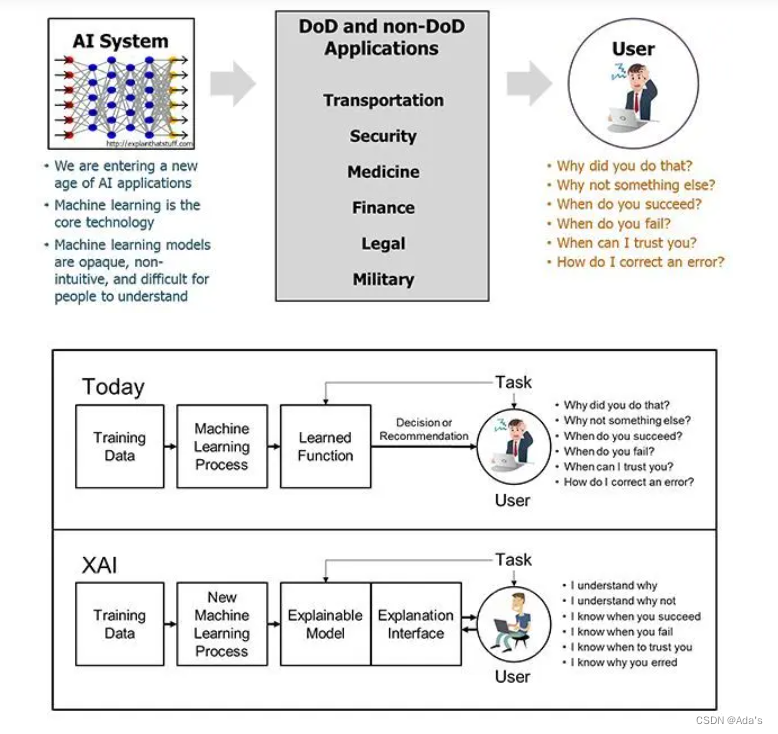

第六:从预训练到生成式大模型最大的特点是并不在编解码,而是基于思维链的近端策略优化强化学习。这个方向个人理解也是将基于深度学习的人工智能带入下一个阶段的认知智能与认知计算的开始。

第七:什么是认知智能和认知计算,个人在研究过程发现,认知智能与人工智能最大区别是多模态的因果结构化知识表示,因为这样可以指数级降低算法对数据依赖和模型复杂性,同时针对硬件结构不再是单一的并行计算,这种计算在数据层特别占用带宽,在计算层特别占用显存。

第八:如果通过科学方法进行下一步研究?按照目前问题统一做法是训练,这种训练本质上在科学里面就是归纳法,针对客观现象进行主观或客观统计归纳分析。那么,想更深入的研究其实就会到下一个阶段演绎法,探索一种通过几何或者代数方式针对深度学习现在的范式研究找到一种可以表示电路逻辑实现深度学习的可解释问题,从而这个问题就能以思维逻辑或者思维链及思维图或思维体,思维空间方式更好的进行发展下去。暴力计算的合理性是阶段性的,自然界的进化都是精密的。

以下是在研究过程发现的美国国防部高等研究计划局

参考文献:

一:累积推理《Cumulative Reasoning with Large Language Models》

Yifan Zhang, Jingqin Yang, Yang Yuan, Andrew Chi-Chih Yao

While language models are powerful and versatile, they often fail to address highly complex problems. This is because solving complex problems requires deliberate thinking, which has been only minimally guided during training. In this paper, we propose a new method called Cumulative Reasoning (CR), which employs language models in a cumulative and iterative manner to emulate human thought processes. By decomposing tasks into smaller components, CR streamlines the problem-solving process, rendering it both more manageable and effective. For logical inference tasks, CR consistently outperforms existing methods with an improvement up to 9.3%, and achieves the astonishing accuracy of 98.04% on the curated FOLIO wiki dataset. In the context of the Game of 24, CR achieves an accuracy of 98%, which signifies a substantial enhancement of 24% over the previous state-of-the-art method. Finally, on the MATH dataset, we establish new state-of-the-art results with 58.0% overall accuracy, surpassing the previous best approach by a margin of 4.2%, and achieving 43% relative improvement on the hardest level 5 problems (22.4% to 32.1%).

二:思维图《Graph of Thoughts: Solving Elaborate Problems with Large Language Models》

Maciej Besta, Nils Blach, Ales Kubicek, Robert Gerstenberger, Lukas Gianinazzi, Joanna Gajda, Tomasz Lehmann, Michal Podstawski, Hubert Niewiadomski, Piotr Nyczyk, Torsten Hoefler

We introduce Graph of Thoughts (GoT): a framework that advances prompting capabilities in large language models (LLMs) beyond those offered by paradigms such as Chain-of-Thought or Tree of Thoughts (ToT). The key idea and primary advantage of GoT is the ability to model the information generated by an LLM as an arbitrary graph, where units of information (“LLM thoughts”) are vertices, and edges correspond to dependencies between these vertices. This approach enables combining arbitrary LLM thoughts into synergistic outcomes, distilling the essence of whole networks of thoughts, or enhancing thoughts using feedback loops. We illustrate that GoT offers advantages over state of the art on different tasks, for example increasing the quality of sorting by 62% over ToT, while simultaneously reducing costs by >31%. We ensure that GoT is extensible with new thought transformations and thus can be used to spearhead new prompting schemes. This work brings the LLM reasoning closer to human thinking or brain mechanisms such as recurrence, both of which form complex networks.

总结,以上两篇论文都非常有价值,个人理解这两篇论文侧重在知识本身的表示,并没有研究知识这种表示的因果逻辑结构本身,如果可以从预训练大模型的结果可逆出输入数据特征编码器的思维链图知识叠加因果会更加令人惊叹!

这篇关于认知智能最新研究成果的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!