This article covers Course2-week3: hyperparameter tuning, Batch Normalization, and deep learning frameworks. Hopefully it is a useful reference for developers working through these topics.

hyperparameter tuning

1 - tuning process

How do we systematically organize the hyperparameter tuning process?

hyperparameters

- learning rate $\alpha$

- $\beta$ in momentum (or keep the default of 0.9)

- mini-batch size

- # hidden units

- # layers

- learning rate decay

- $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$ in Adam

If we are trying to tune some set of the hyperparameters, how do we select a set of values to explore?

In the early days of machine learning, it was common practice to sample points on a grid and explore those values systematically. In deep learning, what we do in practice is choose the points at random, because it is hard to know in advance which hyperparameters will turn out to be the important ones for your application, and some hyperparameters matter much more than others. For example, say hyperparameter 1 is $\alpha$ and hyperparameter 2 is $\epsilon$. On a grid, many points share the same value of $\alpha$ and differ only in $\epsilon$, which barely matters. Sampling at random tries a distinct value of $\alpha$ at every point, so you explore the possible values of the most important hyperparameters much more richly.

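When sampling hyperparameters, another common practice is a coarse-to-fine scheme: sample coarsely over the whole range first, then zoom in on the region where the best points were found and sample more densely there.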
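The random-sampling and coarse-to-fine ideas above can be sketched as follows. This is a minimal illustration, not a tuning recipe: `toy_score` is a hypothetical stand-in for training a model and measuring validation performance, and the ranges, the point counts, and the helper name `sample_points` are all assumptions. The exponents are sampled uniformly, which is the log scale discussed in the next section.

```python
import numpy as np

rng = np.random.default_rng(0)

def toy_score(alpha, epsilon):
    """Hypothetical validation score: sensitive to alpha, nearly flat in epsilon."""
    return -(np.log10(alpha) + 2.0) ** 2 - 1e-3 * np.log10(epsilon) ** 2

def sample_points(n, alpha_exp_range, eps_exp_range):
    """Draw n random (alpha, epsilon) pairs; the exponents are sampled uniformly."""
    a_lo, a_hi = alpha_exp_range
    e_lo, e_hi = eps_exp_range
    alphas = 10.0 ** rng.uniform(a_lo, a_hi, size=n)
    epsilons = 10.0 ** rng.uniform(e_lo, e_hi, size=n)
    return alphas, epsilons

# Coarse pass: 25 random points try 25 distinct values of alpha,
# whereas a 5 x 5 grid would only ever try 5 distinct values of alpha.
alphas, epsilons = sample_points(25, (-4, 0), (-10, -6))
scores = toy_score(alphas, epsilons)
best_alpha = alphas[np.argmax(scores)]

# Fine pass: resample more densely in a narrower window around the best alpha.
center = np.log10(best_alpha)
fine_alphas, fine_eps = sample_points(25, (center - 0.5, center + 0.5), (-10, -6))
fine_scores = toy_score(fine_alphas, fine_eps)
best_fine_alpha = fine_alphas[np.argmax(fine_scores)]

print(len(np.unique(alphas)), best_alpha, best_fine_alpha)
```

In a real search, each call to the scoring function would be a full training run, so the number of points per pass is set by the compute budget.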

2 - using an appropriate scale to pick parameters

Sampling at random doesn’t mean sampling uniformly at random over the range of valid values. Instead, it’s important to pick an appropriate scale.

For the number of layers or the number of units in a certain layer, sampling uniformly at random over the range might be a reasonable thing to do. But this is not true for other hyperparameters.

For $\alpha$ (which determines the size of each update):

Suppose the reasonable range of $\alpha$ is 0.0001 to 1. If we pick values uniformly at random between 0.0001 and 1, about 90% of the values we sample will lie between 0.1 and 1, so we spend only 10% of our resources searching between 0.0001 and 0.1.

What we should do instead:

```python
import numpy as np

r = -4 * np.random.rand()   # r is a random number in (-4, 0]
alpha = 10 ** r             # alpha lies between 0.0001 (1e-4) and 1 (1e0), uniform on a log scale
```

More generally, if we are trying to sample between $10^a$ and $10^b$:

```python
a, b = -4, 0
r = a + (b - a) * np.random.rand()   # r is a random number in [a, b)
alpha = 10 ** r                      # alpha lies between 10**a and 10**b, uniform on a log scale
```

For $\beta_2$ (used for computing exponentially weighted averages):

A plausible range for $\beta_2$ is 0.9 to 0.999, and this is the range we want to search over. Remember that using $\beta_2 = 0.9$ is like averaging over the last 10 values, whereas 0.999 is like averaging over the last 1000 values.
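The text gives the range but not a sampling recipe. One common approach, stated here as an assumption that mirrors the log-scale trick used for $\alpha$, is to sample $1 - \beta$ on a log scale, since $1 - \beta$ runs from 0.1 down to 0.001. A minimal sketch, with `beta` standing for the averaging coefficient and the window size approximated as $1 / (1 - \beta)$:

```python
import numpy as np

rng = np.random.default_rng(0)

# Sample r uniformly in [-3, -1), so 1 - beta = 10**r lies between 0.001 and 0.1.
r = rng.uniform(-3, -1, size=10_000)
beta = 1 - 10.0 ** r          # beta lies between 0.9 and 0.999

# Rough averaging window of an exponentially weighted average: 1 / (1 - beta).
window = 1 / (1 - beta)

# Roughly half of the samples fall below 0.99 and half above it, so the
# sensitive region near 1 gets as much attention as the region near 0.9.
frac_low = np.mean(beta < 0.99)
print(beta.min(), beta.max(), frac_low)
```

Sampling this way matters because a small change in $\beta$ near 1 changes the averaging window a lot (0.999 to 0.9995 doubles it), while the same change near 0.9 hardly matters.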

3 - hyperparameters tuning in practice: pandas vs. caviar

- babysitting one model (panda approach): used when there are not a lot of computational resources

- training many models in parallel (caviar approach)

The choice between these two approaches really depends on how much computational resource we have. If we have enough computers to train a lot of models in parallel, then by all means take the caviar approach: try a lot of different hyperparameter settings and see what works best.

4 - normalizing activations in a network

Batch Norm makes the hyperparameter search much easier, makes the neural network much more robust to the choice of hyperparameters, and also enables us to train very deep networks much more easily.

When training a model such as logistic regression, normalizing the input features can speed up learning.

This can turn the contours of the learning problem from something very elongated into something more round and easier for an optimization algorithm to optimize.

For a neural network, if we want to train the parameters $W^{[l]}, b^{[l]}$, it would be nice to normalize the mean and variance of $a^{[l-1]}$ to make the training of $W^{[l]}, b^{[l]}$ more efficient. So the question is: **for any hidden layer, can we normalize the values of $a^{[l-1]}$ so as to train $W^{[l]}, b^{[l]}$ faster? This is what Batch Norm does, although technically we normalize the values of not $a^{[l-1]}$ but $z^{[l-1]}$.**

Implementing Batch Norm:

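Given some intermediate values of the network, $z^{(1)}, \cdots, z^{(m)}$:

$$\mu = \frac{1}{m} \sum_{i=1}^{m} z^{(i)} \tag{1}$$

$$\sigma^2 = \frac{1}{m} \sum_{i=1}^{m} \left( z^{(i)} - \mu \right)^2 \tag{2}$$

$$z_{norm}^{(i)} = \frac{z^{(i)} - \mu}{\sqrt{\sigma^2 + \epsilon}} \tag{3}$$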

But we don’t want the hidden units to always have mean 0 and variance 1; it may make sense for hidden units to have a different distribution. So what we do is:

$$\tilde{z}^{(i)} = \gamma z_{norm}^{(i)} + \beta \tag{4}$$

$\gamma$ and $\beta$ are learnable parameters of the model, so we use gradient descent to update them just as we update the weights of the neural network. Notice that the effect of $\gamma$ and $\beta$ is that they allow us to set the mean and variance of $\tilde{z}^{(i)}$ to whatever we want by an appropriate setting of $\gamma$ and $\beta$. So what BN really does is normalize the hidden unit values $z^{[l](i)}$ to have some fixed mean and variance controlled by $\gamma$ and $\beta$.
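A minimal NumPy sketch of the computation described above: normalize each hidden unit over the mini-batch, then rescale with $\gamma$ and shift with $\beta$. The shape convention (units by examples), the small constant `eps` added for numerical stability, and the function name `batch_norm_forward` are assumptions made for illustration.

```python
import numpy as np

def batch_norm_forward(z, gamma, beta, eps=1e-8):
    """Normalize z (shape: n_units x m) per unit over the mini-batch, then scale and shift."""
    mu = z.mean(axis=1, keepdims=True)        # per-unit mean over the m examples
    var = z.var(axis=1, keepdims=True)        # per-unit variance over the m examples
    z_norm = (z - mu) / np.sqrt(var + eps)    # mean 0, variance about 1
    z_tilde = gamma * z_norm + beta           # mean beta, standard deviation about gamma
    return z_tilde, z_norm, mu, var

rng = np.random.default_rng(0)
z = rng.normal(loc=3.0, scale=5.0, size=(4, 64))   # 4 hidden units, 64 examples
gamma = np.full((4, 1), 2.0)
beta = np.full((4, 1), -1.0)

z_tilde, z_norm, mu, var = batch_norm_forward(z, gamma, beta)
print(z_norm.mean(axis=1), z_norm.var(axis=1))      # close to 0 and 1
print(z_tilde.mean(axis=1), z_tilde.std(axis=1))    # close to beta and gamma
```

Setting $\gamma = \sqrt{\sigma^2 + \epsilon}$ and $\beta = \mu$ would recover the original $z$, which is why the learnable pair does not restrict what the layer can represent.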

5 - fitting batch norm into a neural network

adding Batch Norm to a network

parameters:

These are the new parameters of your algorithm.

Because Batch Norm zeros out the mean of the $z^{[l]}$ values in the layer, there’s no point in having the parameter $b^{[l]}$, so we get rid of it and let $\beta^{[l]}$ take its place.

How can we implement gradient descent using BN?

```
for t = 1, ..., num_mini_batches:
    compute forward propagation on X^{t}
        in each hidden layer, use BN to replace z^[l] with \tilde{z}^[l]
    use back prop to get dW, (db), dbeta, dgamma
    update parameters using gradient descent:
        W = W - learning_rate * dW
        beta = beta - learning_rate * dbeta
        ...
```

6 - why does batch norm work?

We have seen how normalizing the input features can speed up learning. BN does a similar thing, but for the values in the hidden units and not just for the input.

A second reason why BN works is that it makes the weights in later (deeper) layers of the network more robust to changes in the weights of earlier layers.

Let’s say you have trained a model on a training set containing only images of black cats. If we now try to apply this network to data with colored cats, the classifier might not do very well.

We might not expect a model trained on the data on the left to do very well on the data on the right, even though there might be one function that works well on both. And we wouldn’t expect our learning algorithm to discover the green decision boundary just by looking at the data on the left.

If we learn some mapping from x to y and the distribution of x changes, then we might need to retrain the model.

From the perspective of the third hidden layer, the network has learned the parameters $W^{[3]}, b^{[3]}$. It receives some set of values from the earlier layers and has to find a way to map them to a $\hat{y}$ that is close to $y$. But as the parameters $W^{[2]}, b^{[2]}, W^{[1]}, b^{[1]}$ change, the values $a^{[2]}_1, a^{[2]}_2, a^{[2]}_3, a^{[2]}_4$ change too. So from the perspective of the third hidden layer, its input values are changing all the time. What BN does is reduce the amount that the distribution of these input values shifts around. The input values can change, and indeed they will change whenever the network updates the parameters of the earlier layers, but BN ensures that no matter how they change, their mean and variance remain the same. So even if the exact input values change, their mean and variance at least stay the same.

So it allows each layer of the network to learn by itself, a little more independently of the other layers, and this has the effect of speeding up learning in the whole network.

BN means that, especially from the perspective of one of the later hidden layers, the earlier layers don’t get to shift around as much, because they are constrained to have the same mean and variance. This makes the job of learning in the later layers easier.

Each mini-batch is scaled by the mean and variance computed on just that mini-batch. This adds some noise to the values $z^{[l]}$ within that mini-batch, so, similar to dropout, it adds some noise to each hidden layer’s activations. This has a slight regularization effect.

By adding noise to the hidden units, it forces the downstream hidden units not to rely too much on any one hidden unit. Using a bigger mini-batch size reduces the noise and therefore reduces the regularization effect.

BN handles data one mini-batch at a time: it computes the mean and variance on mini-batches. So at test time we need to do something slightly different to make predictions.

7 - batch norm at test time

Batch norm processes data one mini-batch at a time, but at test time we may need to process one example at a time.

During training, $\mu$ and $\sigma^2$ are computed over the $m$ examples of a mini-batch, where $m$ is the number of examples in the mini-batch.

At test time, we need a different way of computing $\mu$ and $\sigma^2$. In the standard implementation of BN, we estimate $\mu$ and $\sigma^2$ using an exponentially weighted average.

For a given layer $l$, across the mini-batches $X^{\{1\}}, X^{\{2\}}, X^{\{3\}}, \cdots$ we obtain $\mu^{\{1\}[l]}, \mu^{\{2\}[l]}, \mu^{\{3\}[l]}, \cdots$, from which we get $\mu^{[l]}$ as an exponentially weighted average. We get $\sigma^2$ in the same way. Then at test time, we use equation (3) to compute $z_{norm}$ for whatever value $z$ has, using the exponentially weighted averages of $\mu$ and $\sigma^2$.

To summarize: during training, $\mu$ and $\sigma^2$ are computed on an entire mini-batch, but at test time we may need to process a single example at a time. So we estimate $\mu$ and $\sigma^2$ from the training set. There are many ways to do that, but in practice what people usually do is keep an exponentially weighted average of the $\mu$ and $\sigma^2$ values seen during training, which gives a rough estimate of $\mu$ and $\sigma^2$, and use those values at test time to scale $z$.
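A minimal sketch of this bookkeeping, assuming a single layer, synthetic mini-batches, and an averaging weight of 0.9. The names `running_mu`, `running_var`, `momentum`, and `batch_norm_test` are hypothetical, and real frameworks differ in details such as initialization and bias correction.

```python
import numpy as np

rng = np.random.default_rng(0)
momentum = 0.9            # weight of the exponentially weighted average (assumed value)
eps = 1e-8                # small constant for numerical stability (assumed value)
n_units = 3

running_mu = np.zeros((n_units, 1))
running_var = np.ones((n_units, 1))

# Training: update the running estimates with each mini-batch's statistics.
for t in range(200):
    z_batch = rng.normal(loc=2.0, scale=3.0, size=(n_units, 64))
    mu = z_batch.mean(axis=1, keepdims=True)
    var = z_batch.var(axis=1, keepdims=True)
    running_mu = momentum * running_mu + (1 - momentum) * mu
    running_var = momentum * running_var + (1 - momentum) * var

def batch_norm_test(z, gamma, beta):
    """Normalize with the stored running estimates; z may hold a single example."""
    z_norm = (z - running_mu) / np.sqrt(running_var + eps)
    return gamma * z_norm + beta

gamma = np.ones((n_units, 1))
beta = np.zeros((n_units, 1))
z_single = np.full((n_units, 1), 2.0)           # one test example sitting at the true mean
print(batch_norm_test(z_single, gamma, beta))   # entries near 0
```

Because the statistics are stored rather than recomputed, the output for one test example does not depend on which other examples happen to be processed with it.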

8 - softmax regression

There is a generalization of logistic regression called softmax regression, which lets you make predictions when you are trying to recognize one of C classes.

Let’s say that instead of recognizing just two classes, we want to recognize cats, dogs, and baby chicks, with one more class for everything else.

C = # classes

In this case we build a neural network whose output layer has 4 units, and we want the units in the output layer to tell us the probability of each of these four classes. *Because probabilities should sum to one, the 4 numbers in the output $\hat{y}$ should sum to 1. The standard way to get a network to do this is to use what is called a softmax layer.*

Having computed $Z^{[L]}$, we need to apply the softmax activation function:

$$A^{[L]} = g_{softmax}(Z^{[L]})$$
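The activation can be sketched in NumPy, assuming the standard softmax definition (exponentiate each entry of $Z^{[L]}$, then divide by the sum over the C classes). The input values below are hypothetical, and subtracting the column maximum is a common numerical-stability trick that does not change the result.

```python
import numpy as np

def softmax(z):
    """Column-wise softmax for z of shape (C, m)."""
    z_shifted = z - z.max(axis=0, keepdims=True)   # subtracting the max avoids overflow
    t = np.exp(z_shifted)
    return t / t.sum(axis=0, keepdims=True)

z_L = np.array([[5.0], [2.0], [-1.0], [3.0]])   # hypothetical Z^[L] for C = 4 classes
a_L = softmax(z_L)
print(a_L.ravel(), a_L.sum())
```

Unlike sigmoid or ReLU, this activation takes a whole vector in and returns a whole vector, because the normalization couples all C outputs.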

9 - training a softmax classifier

We have learned the softmax activation function. Now we will learn how to train a model with a softmax layer.

Let’s define the loss function we use to train the neural network. Take an example in the training set whose target output, the ground truth label, is

$$y = \begin{bmatrix} 0 \\ 1 \\ 0 \\ 0 \end{bmatrix}$$

which says that this image is a cat. Now let’s say the neural network outputs a $\hat{y}$ that assigns a probability of only 0.2 to the second class.

So the neural network does not do well on this example: the image is a cat, but the network assigned only a 20% chance that it is a cat. In softmax classification, the loss we typically use is:

$$L(y, \hat{y}) = -\sum_{j=1}^{C} y_j \log \hat{y}_j$$

In this example, $L(y, \hat{y}) = -y_2 \log \hat{y}_2 = -\log \hat{y}_2$. So if the learning algorithm is trying to make $L(y, \hat{y})$ small, the only way to do so is to make $-\log \hat{y}_2$ small, and the only way to do that is to make $\hat{y}_2$ as big as possible. Since $\hat{y}_2$ is a probability, it can never be bigger than 1. This kind of makes sense. *More generally, what the loss function does is look at whatever the ground truth class is in your training set and try to make the corresponding probability of that class as high as possible.*
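A small numeric illustration of this loss, assuming the cross-entropy form $-\sum_j y_j \log \hat{y}_j$ with a one-hot label. Only the 0.2 on the true class comes from the text; the other entries of the two prediction vectors are hypothetical values chosen so that each sums to 1.

```python
import numpy as np

def cross_entropy_loss(y, y_hat):
    """L(y, y_hat) = -sum_j y_j * log(y_hat_j) for a one-hot label y."""
    return float(-np.sum(y * np.log(y_hat)))

y = np.array([0.0, 1.0, 0.0, 0.0])                 # the cat is the second class
y_hat_bad = np.array([0.3, 0.2, 0.1, 0.4])         # hypothetical output: 20% on the true class
y_hat_good = np.array([0.05, 0.85, 0.05, 0.05])    # hypothetical output: 85% on the true class

loss_bad = cross_entropy_loss(y, y_hat_bad)        # equals -log(0.2)
loss_good = cross_entropy_loss(y, y_hat_good)      # equals -log(0.85)
print(loss_bad, loss_good)
```

Only the probability assigned to the true class enters the loss, so the loss shrinks toward 0 as that probability approaches 1.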

This is the loss on a single training example. What about the cost function $J$ on the entire training set?

$$J = \frac{1}{m} \sum_{i=1}^{m} L\left(y^{(i)}, \hat{y}^{(i)}\right)$$

Then what we do is use gradient descent to try to minimize this cost.

Finally, let’s look at how to implement gradient descent when we have a softmax output layer.

The key equation we need to initialize backpropagation is the expression for the derivative of the cost with respect to $z^{[L]}$, the output layer:

With this we can start off the backward propagation and compute all the derivatives we need throughout the neural network.
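The expression itself did not survive in the text. Assuming it is the standard result for a softmax output with cross-entropy loss, $dz^{[L]} = \hat{y} - y$, the sketch below checks that formula against finite differences on one hypothetical example (the values of `z` are made up).

```python
import numpy as np

def softmax(z):
    t = np.exp(z - z.max())
    return t / t.sum()

def loss(z, y):
    """Cross-entropy loss of softmax(z) against a one-hot label y."""
    return -np.sum(y * np.log(softmax(z)))

z = np.array([1.0, -0.5, 2.0, 0.3])   # hypothetical z^[L] for one example
y = np.array([0.0, 1.0, 0.0, 0.0])

analytic_dz = softmax(z) - y          # assumed closed form: y_hat - y

# Central finite differences as an independent check.
h = 1e-6
numeric_dz = np.zeros_like(z)
for j in range(z.size):
    step = np.zeros_like(z)
    step[j] = h
    numeric_dz[j] = (loss(z + step, y) - loss(z - step, y)) / (2 * h)

print(np.max(np.abs(analytic_dz - numeric_dz)))   # a very small number
```

The entries of the gradient sum to zero, since both $\hat{y}$ and $y$ sum to 1.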

10 - deep learning frameworks

- caffe/caffe2

- CNTK

- DL4J

- Keras

- Lasagne

- mxnet

- paddlepaddle

- tensorflow

- theano

- torch

Choosing deep learning frameworks:

- ease of programming (development and deployment)

- running speed

- truly open

11 - tensorflow

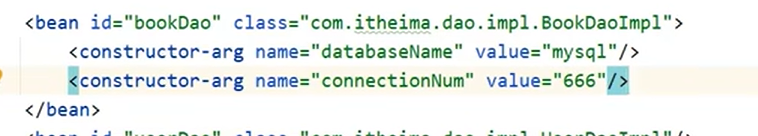

This is the cost function we want to minimize. Let’s see how we can implement something in tensorflow to minimize $J$. A very similar program structure can be used to train a neural network, where we have some complicated cost function depending on all the parameters of the network, and similarly we use tensorflow to automatically find values of $W, b$ that minimize the cost $J$.

```python
import numpy as np
import tensorflow as tf   # TensorFlow 1.x API

w = tf.Variable(0, dtype=tf.float32)   # use tf.Variable to define the parameter; initialize w to 0
cost = w**2 - 10 * w + 25              # define the cost function
train = tf.train.GradientDescentOptimizer(0.01).minimize(cost)   # gradient descent on the cost

init = tf.global_variables_initializer()
session = tf.Session()      # start a session
session.run(init)           # initialize the global variables
print(session.run(w))       # nothing has been trained yet; prints 0.0

session.run(train)          # run one step of gradient descent
print(session.run(w))       # prints 0.099999994

for i in range(5000):
    session.run(train)
print(session.run(w))       # prints 4.9999886
session.close()
```

placeholder: The above is an example of minimizing a fixed function of $w$. What if the function you want to minimize depends on your training set? When we train a neural network, the training data $x$ can change. So how do we get training data into a tensorflow program?

```python
coefficient = np.array([[1.], [-20.], [100.]])

w = tf.Variable(0, dtype=tf.float32)
x = tf.placeholder(tf.float32, [3, 1])   # value is supplied later through feed_dict
cost = x[0][0] * w**2 + x[1][0] * w + x[2][0]
train = tf.train.GradientDescentOptimizer(0.01).minimize(cost)

init = tf.global_variables_initializer()
session = tf.Session()
session.run(init)

for i in range(1000):
    session.run(train, feed_dict={x: coefficient})
print(session.run(w))   # prints 9.999977
```

A placeholder in tensorflow is a variable whose value we assign later, which is a convenient way to get the training data into the cost function. When we are doing mini-batch gradient descent, where each iteration needs a different mini-batch, we can use feed_dict to feed in different subsets of the training set on different iterations.

The heart of a tensorflow program is the computation of the cost; tensorflow then automatically figures out the derivatives and how to minimize the cost. Programming frameworks like tensorflow already have the necessary backward functions built in, which is why they can carry out backward propagation automatically. That is why we don’t need to implement backward propagation explicitly: defining the forward propagation and the cost function is enough.
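To make concrete what the framework automates, here is the same minimization of $J(w) = w^2 - 10w + 25$ written in plain Python, with the derivative $2w - 10$ worked out by hand. This is only an illustration of the idea and uses no tensorflow API; the function names `cost` and `d_cost` are hypothetical.

```python
def cost(w):
    """The cost J(w) = w^2 - 10w + 25, which is (w - 5)^2."""
    return w ** 2 - 10 * w + 25

def d_cost(w):
    """Hand-derived gradient dJ/dw; a framework would derive this for us."""
    return 2 * w - 10

learning_rate = 0.01
w = 0.0
w_after_one_step = w - learning_rate * d_cost(w)

for _ in range(5000):
    w = w - learning_rate * d_cost(w)

print(w_after_one_step, w)
```

With a framework, only `cost` has to be written; `d_cost` is the piece that automatic differentiation supplies, and this matters once the cost depends on all the parameters of a deep network.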
