本文主要是介绍【模块缝合】【NIPS 2021】MLP-Mixer: An all-MLP Architecture for Vision,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

文章目录

- 简介

- 代码,from:https://github.com/huggingface/pytorch-image-models【多看看成熟仓库的代码】

- MixerBlock

paper and code: https://paperswithcode.com/paper/mlp-mixer-an-all-mlp-architecture-for-vision#code

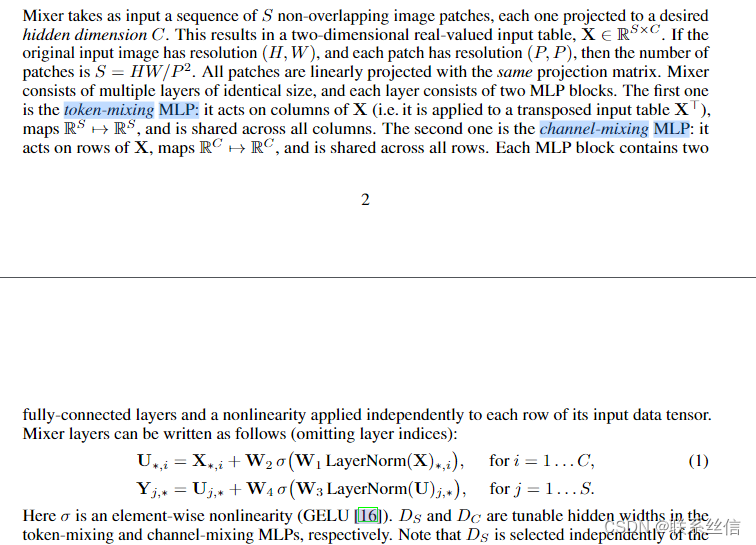

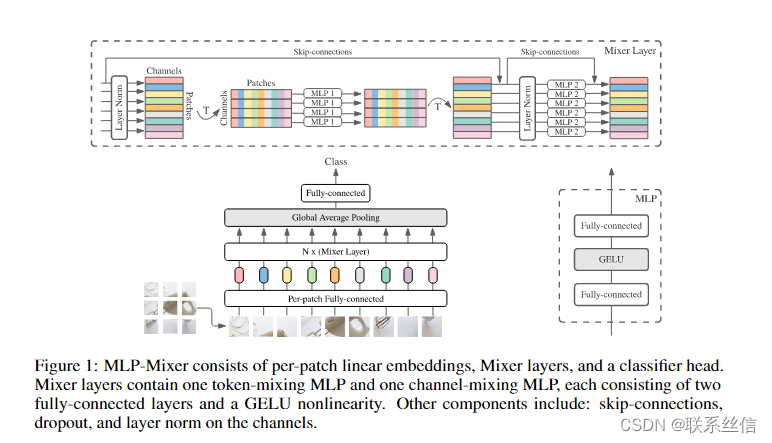

简介

这个转置 是什么操作?

代码,from:https://github.com/huggingface/pytorch-image-models【多看看成熟仓库的代码】

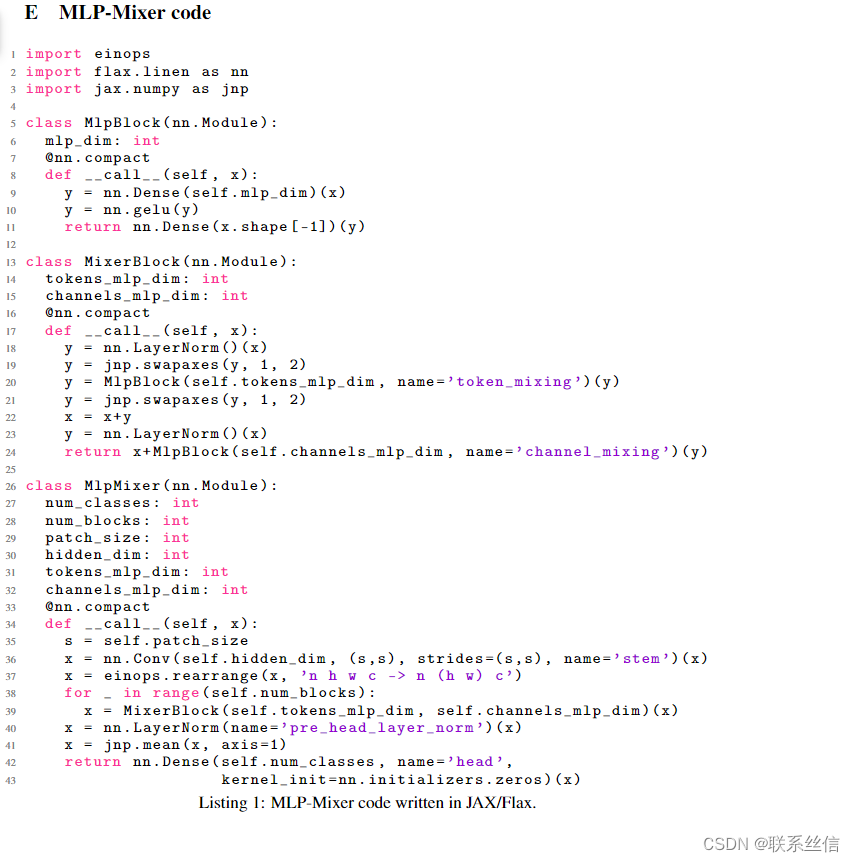

论文附录:

mlp module【一般双层:fc1,act,drop1,norm,fc2,drop2】

# from: https://github.com/huggingface/pytorch-image-models/blob/main/timm/layers/mlp.py#L13class Mlp(nn.Module):""" MLP as used in Vision Transformer, MLP-Mixer and related networks"""def __init__(self,in_features,hidden_features=None,out_features=None,act_layer=nn.GELU,norm_layer=None,bias=True,drop=0.,use_conv=False,):super().__init__()out_features = out_features or in_featureshidden_features = hidden_features or in_featuresbias = to_2tuple(bias)drop_probs = to_2tuple(drop)linear_layer = partial(nn.Conv2d, kernel_size=1) if use_conv else nn.Linearself.fc1 = linear_layer(in_features, hidden_features, bias=bias[0])self.act = act_layer()self.drop1 = nn.Dropout(drop_probs[0])self.norm = norm_layer(hidden_features) if norm_layer is not None else nn.Identity()self.fc2 = linear_layer(hidden_features, out_features, bias=bias[1])self.drop2 = nn.Dropout(drop_probs[1])def forward(self, x):x = self.fc1(x)x = self.act(x)x = self.drop1(x)x = self.norm(x)x = self.fc2(x)x = self.drop2(x)return xMixerBlock

# from: https://github.com/huggingface/pytorch-image-models/blob/main/timm/models/mlp_mixer.pyclass MixerBlock(nn.Module):""" Residual Block w/ token mixing and channel MLPsBased on: 'MLP-Mixer: An all-MLP Architecture for Vision' - https://arxiv.org/abs/2105.01601"""def __init__(self,dim,seq_len,mlp_ratio=(0.5, 4.0),mlp_layer=Mlp,norm_layer=partial(nn.LayerNorm, eps=1e-6),act_layer=nn.GELU,drop=0.,drop_path=0.,):super().__init__()tokens_dim, channels_dim = [int(x * dim) for x in to_2tuple(mlp_ratio)]self.norm1 = norm_layer(dim)self.mlp_tokens = mlp_layer(seq_len, tokens_dim, act_layer=act_layer, drop=drop)self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()self.norm2 = norm_layer(dim)self.mlp_channels = mlp_layer(dim, channels_dim, act_layer=act_layer, drop=drop)def forward(self, x):x = x + self.drop_path(self.mlp_tokens(self.norm1(x).transpose(1, 2)).transpose(1, 2))x = x + self.drop_path(self.mlp_channels(self.norm2(x)))return x

这篇关于【模块缝合】【NIPS 2021】MLP-Mixer: An all-MLP Architecture for Vision的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!