Chapter 5. Hadoop 2.6 Multi Node Cluster Installation Commands

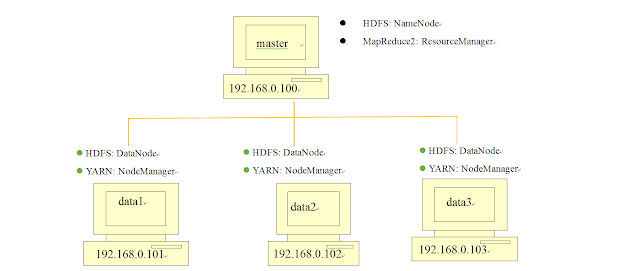

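The Hadoop Multi Node Cluster is planned as shown in the figure. It is made up of several machines:

- One main machine, master, which acts as the NameNode in HDFS and as the ResourceManager in MapReduce2 (YARN).

- Several machines, data1, data2 and data3, which act as DataNodes in HDFS and as NodeManagers in MapReduce2 (YARN).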

The plan for the Hadoop Multi Node Cluster is summarized in the following table:

| Server name | IP | HDFS | YARN |
| --- | --- | --- | --- |
| master | 192.168.0.100 | NameNode | ResourceManager |
| data1 | 192.168.0.101 | DataNode | NodeManager |
| data2 | 192.168.0.102 | DataNode | NodeManager |
| data3 | 192.168.0.103 | DataNode | NodeManager |
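The plan in the table can also be kept in a small script, so that the hostname and IP pairs are typed only once. The following is a minimal sketch, not part of the original instructions; the function names `cluster_plan` and `print_hosts_entries` are made up for illustration. It prints the four lines that map each cluster IP to its hostname in the format used by /etc/hosts.

```shell
#!/bin/sh
# Cluster plan from the table, one "hostname ip" pair per line.
# (cluster_plan and print_hosts_entries are illustrative names.)
cluster_plan() {
  cat <<'EOF'
master 192.168.0.100
data1 192.168.0.101
data2 192.168.0.102
data3 192.168.0.103
EOF
}

# Print one /etc/hosts-style line ("IP hostname") per node.
print_hosts_entries() {
  cluster_plan | while read -r name ip; do
    printf '%s %s\n' "$ip" "$name"
  done
}

print_hosts_entries
```

The script only prints to standard output; it does not modify any system file.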

Installation steps


5.1. Copy the Single Node Cluster to data1

We copy the Single Node Cluster VirtualBox virtual machine created earlier, named hadoop, to data1.
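The copy can be made in the VirtualBox GUI. As a command-line alternative, the sketch below assumes that the `VBoxManage` tool is installed on the host and that the source VM is powered off; the helper name `clone_vm_command` is made up for illustration. It only prints the command (a dry run) so it can be reviewed before being run by hand.

```shell
#!/bin/sh
# Print the VBoxManage command that would clone a VM under a new name.
# $1 is the source VM name, $2 is the name of the clone.
# (clone_vm_command is an illustrative helper; it does not run VBoxManage.)
clone_vm_command() {
  printf 'VBoxManage clonevm %s --name %s --register\n' "$1" "$2"
}

# Dry run: show the command for cloning "hadoop" to "data1".
clone_vm_command hadoop data1
```

Printing instead of executing keeps the sketch harmless on machines where VirtualBox is not installed.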

5.2. Configure the data1 server

Step 2. Edit the network configuration file to set a static IP

Run sudo gedit /etc/network/interfaces and enter the following:

    # interfaces(5) file used by ifup(8) and ifdown(8)
    auto lo
    iface lo inet loopback
    auto eth0
    iface eth0 inet static
    address 192.168.0.101
    netmask 255.255.255.0
    network 192.168.0.0
    gateway 192.168.0.1
    dns-nameservers 192.168.0.1
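This file is entered four times in this chapter (data1, data2, data3 and master), and only the address line changes. A minimal sketch of a template follows; the function name `render_interfaces` is made up for illustration, and the netmask, network, gateway and DNS values are the ones used in this chapter.

```shell
#!/bin/sh
# Print the /etc/network/interfaces content for one node.
# $1 is the static IP; all other values are the ones used in this chapter.
# (render_interfaces is an illustrative name.)
render_interfaces() {
  cat <<EOF
# interfaces(5) file used by ifup(8) and ifdown(8)
auto lo
iface lo inet loopback
auto eth0
iface eth0 inet static
address $1
netmask 255.255.255.0
network 192.168.0.0
gateway 192.168.0.1
dns-nameservers 192.168.0.1
EOF
}

# Preview the file for data1; nothing is written to disk here.
render_interfaces 192.168.0.101
```

To apply the result on a node, the output could be piped into `sudo tee /etc/network/interfaces` after reviewing it.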

Step 3. Set the hostname

Run sudo gedit /etc/hostname and enter the following:

    data1

Step 4. Edit the hosts file

Run sudo gedit /etc/hosts and enter the following:

    127.0.0.1 localhost
    127.0.1.1 hadoop
    192.168.0.100 master
    192.168.0.101 data1
    192.168.0.102 data2
    192.168.0.103 data3
    # The following lines are desirable for IPv6 capable hosts
    ::1 ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters

Step 5. Edit core-site.xml

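Run sudo gedit /usr/local/hadoop/etc/hadoop/core-site.xml and, between the <configuration> and </configuration> tags, enter the following:

    <property>
      <name>fs.default.name</name>
      <value>hdfs://master:9000</value>
    </property>

Step 6. Edit yarn-site.xml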

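Run sudo gedit /usr/local/hadoop/etc/hadoop/yarn-site.xml and, between the <configuration> and </configuration> tags, enter the following:

    <property>
      <name>yarn.resourcemanager.resource-tracker.address</name>
      <value>master:8025</value>
    </property>
    <property>
      <name>yarn.resourcemanager.scheduler.address</name>
      <value>master:8030</value>
    </property>
    <property>
      <name>yarn.resourcemanager.address</name>
      <value>master:8050</value>
    </property>

Step 7. Edit mapred-site.xml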

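Run sudo gedit /usr/local/hadoop/etc/hadoop/mapred-site.xml and, between the <configuration> and </configuration> tags, enter the following:

    <property>
      <name>mapred.job.tracker</name>
      <value>master:54311</value>
    </property>

Step 8. Edit hdfs-site.xml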

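Run sudo gedit /usr/local/hadoop/etc/hadoop/hdfs-site.xml and, between the <configuration> and </configuration> tags, enter the following:

    <property>
      <name>dfs.replication</name>
      <value>3</value>
    </property>
    <property>
      <name>dfs.datanode.data.dir</name>
      <value>file:/usr/local/hadoop/hadoop_data/hdfs/datanode</value>
    </property>

5.3. Copy the data1 server to data2, data3 and master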

5.4. Configure the data2 and data3 servers

Step 2. Set the static IP of data2

Run sudo gedit /etc/network/interfaces and enter the following:

    # interfaces(5) file used by ifup(8) and ifdown(8)
    auto lo
    iface lo inet loopback
    auto eth0
    iface eth0 inet static
    address 192.168.0.102
    netmask 255.255.255.0
    network 192.168.0.0
    gateway 192.168.0.1
    dns-nameservers 192.168.0.1

Step 3. Set the hostname of data2

Run sudo gedit /etc/hostname and enter the following:

    data2

Step 6. Set the static IP of data3

Run sudo gedit /etc/network/interfaces and enter the following:

    # interfaces(5) file used by ifup(8) and ifdown(8)
    auto lo
    iface lo inet loopback
    auto eth0
    iface eth0 inet static
    address 192.168.0.103
    netmask 255.255.255.0
    network 192.168.0.0
    gateway 192.168.0.1
    dns-nameservers 192.168.0.1

Step 7. Set the hostname of data3

Run sudo gedit /etc/hostname and enter the following:

    data3

5.5. Configure the master server

Step 2. Set the static IP of master

Run sudo gedit /etc/network/interfaces and enter the following:

    # interfaces(5) file used by ifup(8) and ifdown(8)
    auto lo
    iface lo inet loopback
    auto eth0
    iface eth0 inet static
    address 192.168.0.100
    netmask 255.255.255.0
    network 192.168.0.0
    gateway 192.168.0.1
    dns-nameservers 192.168.0.1

Step 3. Set the hostname of master

Run sudo gedit /etc/hostname and enter the following:

    master

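Step 4. Edit hdfs-site.xml

Run sudo gedit /usr/local/hadoop/etc/hadoop/hdfs-site.xml and enter the following:

    <property>
      <name>dfs.replication</name>
      <value>3</value>
    </property>
    <property>
      <name>dfs.namenode.name.dir</name>
      <value>file:/usr/local/hadoop/hadoop_data/hdfs/namenode</value>
    </property>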

Step 5. Edit the master file

Run sudo gedit /usr/local/hadoop/etc/hadoop/master and enter the following:

    master

Step 6. Edit the slaves file

Run sudo gedit /usr/local/hadoop/etc/hadoop/slaves and enter the following, one hostname per line:

    data1
    data2
    data3
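The slaves file lists one worker hostname per line. As an alternative to editing it in gedit, the file can be generated from a list of hostnames. The following is a minimal sketch; the function name `write_slaves_file` is made up for illustration, and the demo writes to a temporary file rather than to the real configuration path.

```shell
#!/bin/sh
# Write one worker hostname per line to the given file.
# $1 is the target file; the remaining arguments are hostnames.
# (write_slaves_file is an illustrative name.)
write_slaves_file() {
  target=$1
  shift
  printf '%s\n' "$@" > "$target"
}

# Demo against a temporary file so the real configuration is untouched.
demo_file=$(mktemp)
write_slaves_file "$demo_file" data1 data2 data3
cat "$demo_file"
rm -f "$demo_file"
```

On master, the target would be /usr/local/hadoop/etc/hadoop/slaves, assuming the current user has write permission to that directory.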

5.6. Connect from master to data1, data2 and data3 to create the HDFS directories

From master, connect to data1 over SSH and create the HDFS directory:

    ssh data1
    sudo rm -rf /usr/local/hadoop/hadoop_data/hdfs
    sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/datanode
    sudo chown hduser:hduser -R /usr/local/hadoop
    exit

From master, connect to data2 over SSH and create the HDFS directory:

    ssh data2
    sudo rm -rf /usr/local/hadoop/hadoop_data/hdfs
    sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/datanode
    sudo chown hduser:hduser -R /usr/local/hadoop
    exit

From master, connect to data3 over SSH and create the HDFS directory:

    ssh data3
    sudo rm -rf /usr/local/hadoop/hadoop_data/hdfs
    sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/datanode
    sudo chown hduser:hduser -R /usr/local/hadoop
    exit

5.7. Create and format the NameNode HDFS directory

Step 1. Recreate the NameNode HDFS directory

    sudo rm -rf /usr/local/hadoop/hadoop_data/hdfs
    mkdir -p /usr/local/hadoop/hadoop_data/hdfs/namenode
    sudo chown -R hduser:hduser /usr/local/hadoop

Step 2. Format the NameNode HDFS directory

    hadoop namenode -format

5.8. Start Hadoop

Run start-dfs.sh first, then start-yarn.sh:

    start-dfs.sh
    start-yarn.sh

Or start everything at once:

    start-all.sh

List the processes that are currently running:

    jps

5.9. Open the Hadoop ResourceManager web UI

Hadoop ResourceManager web UI URL:

    http://master:8088/

5.10. Open the HDFS web UI

HDFS web UI URL:

    http://master:50070/
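In addition to opening the web UIs, the jps check from section 5.8 can be scripted to confirm that each node runs the daemons assigned to it in the planning table. The following is a minimal sketch; the function name `missing_daemons` is made up for illustration, and the sample jps output in the demo uses invented process IDs.

```shell
#!/bin/sh
# Print every expected daemon name that is absent from jps output.
# stdin: output of jps ("<pid> <ClassName>" per line)
# args:  daemon names expected on this node
# (missing_daemons is an illustrative name.)
missing_daemons() {
  jps_output=$(cat)
  for daemon in "$@"; do
    # Compare against the whole second field, so that NameNode
    # is not matched by a line such as "SecondaryNameNode".
    if ! printf '%s\n' "$jps_output" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      printf '%s\n' "$daemon"
    fi
  done
}

# Demo with made-up jps output for a data node (PIDs are illustrative).
printf '2101 DataNode\n2350 Jps\n' | missing_daemons DataNode NodeManager
```

On master this could be used as `jps | missing_daemons NameNode ResourceManager`, and on each data node as `jps | missing_daemons DataNode NodeManager`. Empty output means that every listed daemon was found.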
