Topic: mitigating

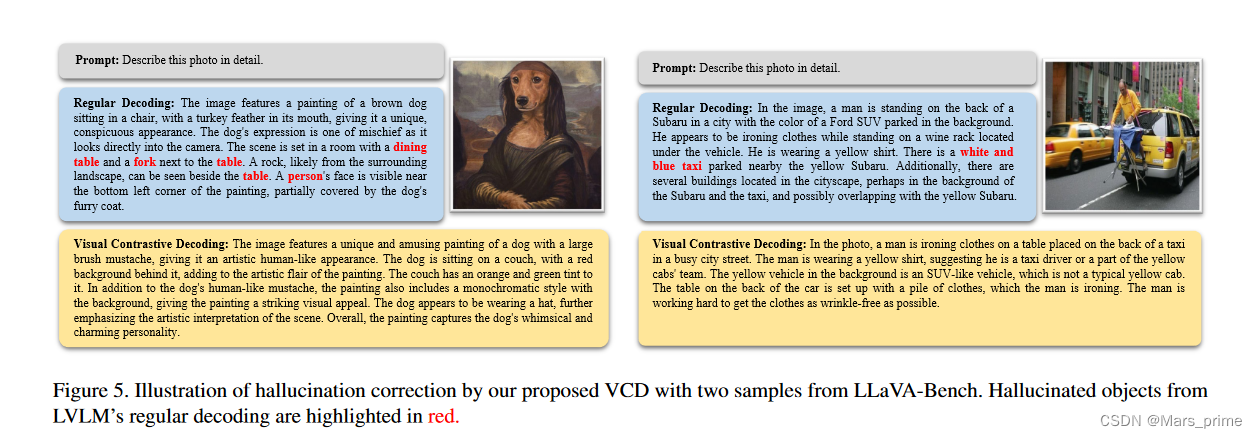

Mitigating Object Hallucinations in Large Vision-Language Models through Visual Contrastive Decoding

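Abstract: Large vision-language models (LVLMs) have advanced considerably, intertwining visual recognition and language understanding to generate content that is not only coherent but also contextually attuned. Despite this success, LVLMs still suffer from object hallucination, in which the model generates plausible but incorrect output that includes objects not present in the image. To mitigate this issue, we introduce Visual Contrastive Decoding (VCD), a simple, training-free method that contrasts outputs derived from the original…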

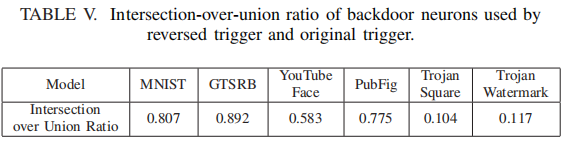

[Translation] Neural Cleanse: Identifying and Mitigating Backdoor Attacks in Neural Networks

Table of contents: ABSTRACT; I. INTRODUCTION; II. BACKGROUND: BACKDOOR INJECTION IN DNNS; III. OVERVIEW OF OUR APPROACH AGAINST BACKDOORS; A. Attack Model; B. Defense Assumptions and Goals; C. Defense Intuition and Overview