This article introduces the MobileNet-v1 network architecture.

I. MobileNet

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

- Paper: https://arxiv.org/abs/1704.04861

- Paper translation: https://blog.csdn.net/qq_31531635/article/details/80508306

- Detailed explanation of the paper: https://blog.csdn.net/hongbin_xu/article/details/82957426

- Code (TensorFlow-Slim): https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet_v1.py

II. The MobileNet Algorithm

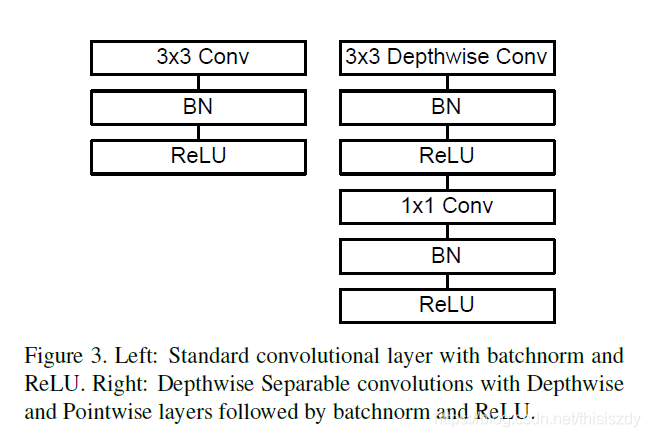

1. Depthwise Separable Convolution

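- A depthwise separable convolution factorizes a standard convolution into two steps, a depthwise convolution followed by a pointwise convolution, while keeping the same input and output shapes as the standard convolution it replaces;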

- Let the input feature map have size $D_F \times D_F \times M$, where $D_F$ is the input width/height and $M$ is the number of input channels;

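- Let the output feature map have size $D_G \times D_G \times N$, where $D_G$ is the output width/height and $N$ is the number of output channels (with stride 1 and "same" padding, $D_G = D_F$, which is why the cost formulas below use $D_F$);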

- Let the kernel size be $D_k \times D_k$, where $D_k$ is the kernel width/height;
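How $D_G$ relates to $D_F$ depends on stride and padding. A minimal sketch using the usual convolution output-size formula $D_G = \lfloor (D_F + 2p - D_k)/s \rfloor + 1$; the helper `conv_output_size` and the padding parameter $p$ are illustrative assumptions, not part of the original text:

```python
def conv_output_size(d_f: int, d_k: int, stride: int = 1, padding: int = 0) -> int:
    """Hypothetical helper: output width/height D_G of a convolution
    with input size D_F, kernel size D_k, stride s and zero padding p."""
    return (d_f + 2 * padding - d_k) // stride + 1


# 3x3 kernel, stride 1, padding 1: the spatial size is preserved (D_G == D_F).
print(conv_output_size(112, 3, stride=1, padding=1))  # 112
# Same kernel with stride 2: the spatial size is halved.
print(conv_output_size(112, 3, stride=2, padding=1))  # 56
```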

2. Standard Convolution

- Kernel parameters: $D_k \times D_k \times M \times N$;

- Computational cost (multiply-adds): $D_k \times D_k \times M \times N \times D_F \times D_F$;
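The two formulas above translate directly into code. A minimal sketch that ignores bias terms; the function names and the example layer (3×3 kernel, 512 input and output channels, 14×14 feature map) are chosen for illustration:

```python
def standard_conv_params(d_k: int, m: int, n: int) -> int:
    """Kernel weights of a standard convolution: D_k * D_k * M * N (no bias)."""
    return d_k * d_k * m * n


def standard_conv_mult_adds(d_k: int, m: int, n: int, d_f: int) -> int:
    """Multiply-adds: D_k * D_k * M * N * D_F * D_F (stride 1, same padding)."""
    return standard_conv_params(d_k, m, n) * d_f * d_f


# Example layer: 3x3 kernel, 512 -> 512 channels on a 14x14 feature map.
print(standard_conv_params(3, 512, 512))         # 2359296
print(standard_conv_mult_adds(3, 512, 512, 14))  # 462422016
```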

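3. Depthwise Separable Convolution

- It consists of two parts: a depthwise convolution and a pointwise convolution;

- Depthwise convolution: each input channel is filtered by its own single kernel;

- Pointwise convolution: a $1 \times 1$ convolution that combines the outputs of the depthwise convolution across channels;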

4. Depthwise Convolution

- Input: $D_F \times D_F \times M$; output: $D_F \times D_F \times M$; kernel: $D_k \times D_k$;

- Kernel parameters: one kernel per channel, i.e. $M$ kernels of size $D_k \times D_k \times 1 \times 1$, for a total of $D_k \times D_k \times M$;

- Computational cost: $D_k \times D_k \times M \times D_F \times D_F$;
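To make "one kernel per input channel" concrete, here is a minimal pure-Python sketch of a stride-1 depthwise convolution with zero padding $\lfloor D_k/2 \rfloor$ (odd $D_k$ assumed). Like deep-learning frameworks it computes cross-correlation. The function name and the nested-list layout (channel, row, column) are illustrative assumptions. It also counts multiply-adds, including those at padded positions, so the count matches $D_k \times D_k \times M \times D_F \times D_F$:

```python
from typing import List, Tuple

Grid = List[List[float]]  # one channel: rows x columns


def depthwise_conv2d(x: List[Grid], kernels: List[Grid]) -> Tuple[List[Grid], int]:
    """Depthwise convolution, stride 1, zero padding k // 2 (k odd).

    x: M input channels, each D_F x D_F; kernels: M filters, each D_k x D_k.
    Returns the M output channels and the number of multiply-adds.
    """
    assert len(x) == len(kernels), "depthwise needs exactly one filter per channel"
    k = len(kernels[0])
    pad = k // 2
    outputs: List[Grid] = []
    mult_adds = 0
    for channel, kernel in zip(x, kernels):  # channel i only ever sees filter i
        h, w = len(channel), len(channel[0])
        out = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for u in range(k):
                    for v in range(k):
                        r, c = i + u - pad, j + v - pad
                        if 0 <= r < h and 0 <= c < w:  # zero padding outside
                            acc += kernel[u][v] * channel[r][c]
                        mult_adds += 1  # padded taps counted, as in the formula
                out[i][j] = acc
        outputs.append(out)
    return outputs, mult_adds


x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],   # channel 0
     [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]   # channel 1
kernels = [[[0, 0, 0], [0, 1, 0], [0, 0, 0]],  # identity filter for channel 0
           [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]  # box filter for channel 1
y, cost = depthwise_conv2d(x, kernels)
print(y[1])  # [[4.0, 6.0, 4.0], [6.0, 9.0, 6.0], [4.0, 6.0, 4.0]]
print(cost)  # 162 = 3 * 3 * 2 * 3 * 3
```

The output still has $M = 2$ channels: the depthwise step filters spatially but never mixes channels.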

5. Pointwise Convolution

- Input: $D_F \times D_F \times M$; output: $D_F \times D_F \times N$; kernel: $1 \times 1$;

- Kernel parameters: $1 \times 1 \times M \times N$;

- Computational cost: $1 \times 1 \times M \times N \times D_F \times D_F$;
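A $1 \times 1$ convolution is a per-pixel matrix multiply: every output channel is a weighted sum of the $M$ input channels at the same position. A minimal pure-Python sketch; the function name, the nested-list layout and the $N \times M$ weight matrix are illustrative assumptions:

```python
from typing import List, Tuple

Grid = List[List[float]]  # one channel: rows x columns


def pointwise_conv2d(x: List[Grid], weights: List[List[float]]) -> Tuple[List[Grid], int]:
    """1x1 convolution: out[n][i][j] = sum_m weights[n][m] * x[m][i][j].

    x: M input channels, each D_F x D_F; weights: N x M matrix.
    Returns the N output channels and the number of multiply-adds.
    """
    m = len(x)
    h, w = len(x[0]), len(x[0][0])
    outputs: List[Grid] = []
    mult_adds = 0
    for row in weights:  # one output channel per row of the N x M matrix
        assert len(row) == m, "each output channel needs one weight per input channel"
        out = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                # mixes channels at a single pixel; no spatial neighbourhood
                out[i][j] = sum(row[c] * x[c][i][j] for c in range(m))
                mult_adds += m
        outputs.append(out)
    return outputs, mult_adds


x = [[[1, 2], [3, 4]],       # channel 0
     [[10, 20], [30, 40]]]   # channel 1
weights = [[1, 0],   # output 0 copies channel 0
           [0, 1],   # output 1 copies channel 1
           [1, 1]]   # output 2 sums both channels
y, cost = pointwise_conv2d(x, weights)
print(y[2])  # [[11, 22], [33, 44]]
print(cost)  # 24 = 1 * 1 * 2 * 3 * 2 * 2
```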

6. Total computational cost of steps 4 and 5 (depthwise plus pointwise):

$$D_k \times D_k \times M \times D_F \times D_F + M \times N \times D_F \times D_F$$
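Splitting the total into its two terms shows where the work goes. A minimal sketch assuming stride 1 and "same" padding; the function name and the example layer (3×3 kernel, 512 → 512 channels, 14×14 feature map) are illustrative:

```python
def separable_cost(d_k: int, m: int, n: int, d_f: int) -> dict:
    """Multiply-adds of a depthwise separable convolution, split by step.

    Assumes stride 1 and 'same' padding, so the output is D_F x D_F.
    """
    depthwise = d_k * d_k * m * d_f * d_f  # step 4: one D_k x D_k filter per channel
    pointwise = m * n * d_f * d_f          # step 5: 1x1 conv, M -> N channels
    return {"depthwise": depthwise, "pointwise": pointwise,
            "total": depthwise + pointwise}


cost = separable_cost(3, 512, 512, 14)
print(cost)  # {'depthwise': 903168, 'pointwise': 51380224, 'total': 52283392}
print(round(cost["pointwise"] / cost["total"], 3))  # 0.983
```

For a layer like this, almost all of the computation sits in the $1 \times 1$ pointwise step.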

7. Standard Convolution vs. Depthwise Separable Convolution
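Dividing the depthwise separable cost (depthwise term plus pointwise term) by the standard-convolution cost gives the reduction factor derived in the MobileNet paper:

$$
\frac{D_k \times D_k \times M \times D_F \times D_F + M \times N \times D_F \times D_F}{D_k \times D_k \times M \times N \times D_F \times D_F} = \frac{1}{N} + \frac{1}{D_k^2}
$$

With $3 \times 3$ kernels ($D_k = 3$) and $N$ in the hundreds, the $1/D_k^2 = 1/9$ term dominates, so a depthwise separable convolution needs roughly 8 to 9 times less computation than a standard one. For example, with $N = 512$ the ratio is $1/512 + 1/9 = 521/4608 \approx 0.113$, about $8.8\times$ fewer multiply-adds.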

8. MobileNet Architecture

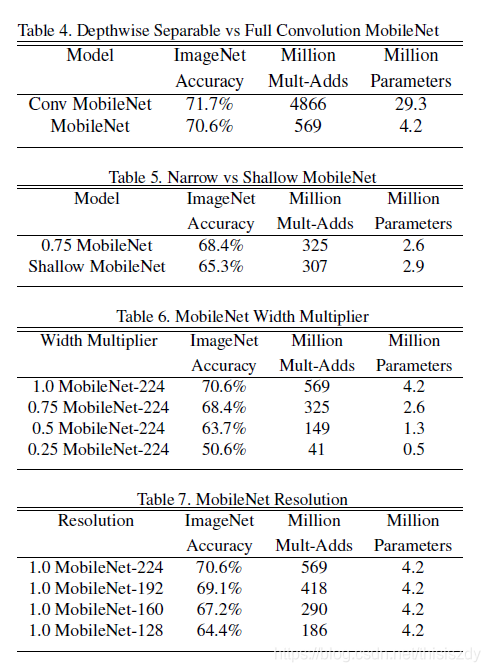
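The totals for this architecture can be checked by counting. A minimal sketch, assuming a 224×224 RGB input, width multiplier α = 1, stride-2 layers that exactly halve the spatial size, and ignoring BatchNorm parameters and pooling; the layer list is copied from the `conv_dw(...)` calls in the PyTorch code at the end of this article, and the helper `mobilenet_cost` is hypothetical:

```python
# (in_channels, out_channels, stride) of the 13 depthwise separable blocks,
# taken from the conv_dw(...) calls in the PyTorch code of this article.
DW_BLOCKS = [
    (32, 64, 1), (64, 128, 2), (128, 128, 1), (128, 256, 2),
    (256, 256, 1), (256, 512, 2),
    (512, 512, 1), (512, 512, 1), (512, 512, 1), (512, 512, 1), (512, 512, 1),
    (512, 1024, 2), (1024, 1024, 1),
]


def mobilenet_cost(input_size: int = 224, num_classes: int = 1000):
    """Return (mult_adds, params) for MobileNet-v1 with alpha = 1.

    BatchNorm parameters and pooling are ignored; the FC bias is counted.
    """
    size = input_size // 2                     # first layer: standard 3x3 conv, stride 2
    mult_adds = 3 * 3 * 3 * 32 * size * size
    params = 3 * 3 * 3 * 32
    for inp, oup, stride in DW_BLOCKS:
        size //= stride                        # stride-2 depthwise convs halve the size
        mult_adds += 3 * 3 * inp * size * size     # depthwise 3x3
        mult_adds += inp * oup * size * size       # pointwise 1x1
        params += 3 * 3 * inp + inp * oup
    mult_adds += 1024 * num_classes            # fully connected layer
    params += 1024 * num_classes + num_classes
    return mult_adds, params


macs, params = mobilenet_cost()
print(f"{macs / 1e6:.0f}M mult-adds, {params / 1e6:.1f}M parameters")
# 569M mult-adds, 4.2M parameters
```

These totals agree with the roughly 569M mult-adds and 4.2M parameters that the MobileNet paper reports for 1.0 MobileNet-224.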

9. Two Hyperparameters That Control MobileNet Model Size

- Width Multiplier: Thinner Models

1. Denoted by $\alpha$, this parameter scales the number of channels (the depth) of every feature map;

2. For a depthwise separable convolution, the computational cost becomes $D_k \times D_k \times \alpha M \times D_F \times D_F + \alpha M \times \alpha N \times D_F \times D_F$;

- Resolution Multiplier: Reduced Representation

1. Denoted by $\rho$, this parameter scales the width/height (the resolution) of the feature maps;

2. With both multipliers applied, the computational cost of a depthwise separable convolution becomes $D_k \times D_k \times \alpha M \times \rho D_F \times \rho D_F + \alpha M \times \alpha N \times \rho D_F \times \rho D_F$;
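Because $\alpha$ scales both $M$ and $N$, the dominant pointwise term shrinks by $\alpha^2$ (the depthwise term only by $\alpha$), while $\rho$ scales both spatial dimensions, so every term shrinks by exactly $\rho^2$. A minimal sketch of the formula above, reusing an illustrative 3×3, 512 → 512, 14×14 layer; the function name is hypothetical, and in the paper $\rho$ is applied implicitly by choosing a smaller input resolution (224, 192, 160 or 128) rather than passed as a number:

```python
def separable_cost_scaled(d_k: int, m: int, n: int, d_f: int,
                          alpha: float = 1.0, rho: float = 1.0) -> float:
    """Multiply-adds of one depthwise separable layer under width multiplier
    alpha and resolution multiplier rho (both in (0, 1])."""
    m_a, n_a = alpha * m, alpha * n  # thinner: fewer input/output channels
    d = rho * d_f                    # lower resolution: smaller feature map
    return d_k * d_k * m_a * d * d + m_a * n_a * d * d


base = separable_cost_scaled(3, 512, 512, 14)
for alpha, rho in [(1.0, 1.0), (0.5, 1.0), (1.0, 0.5), (0.5, 0.5)]:
    cost = separable_cost_scaled(3, 512, 512, 14, alpha, rho)
    print(f"alpha={alpha}, rho={rho}: {cost / base:.3f} of the baseline cost")
```

Halving either multiplier leaves roughly a quarter of the computation; halving both leaves roughly one sixteenth.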

10. Results

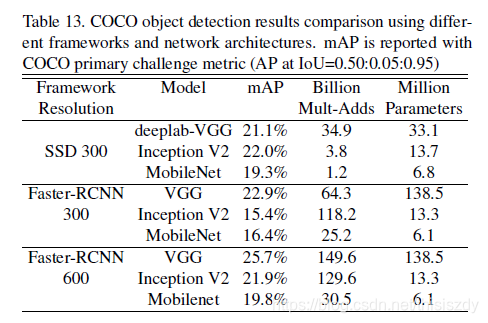

Using MobileNet for object detection:

Appendix: PyTorch code:

```python
import torch.nn as nn


class MobileNet(nn.Module):
    def __init__(self):
        super(MobileNet, self).__init__()

        def conv_bn(inp, oup, stride):
            # First layer: standard convolution, conv3x3 + BN + ReLU
            return nn.Sequential(
                nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
                nn.BatchNorm2d(oup),
                nn.ReLU(inplace=True),
            )

        def conv_dw(inp, oup, stride):
            # Remaining layers: depthwise separable convolution,
            # depthwise conv3x3 (groups=inp, one filter per channel) + BN + ReLU,
            # then pointwise conv1x1 + BN + ReLU
            return nn.Sequential(
                nn.Conv2d(inp, inp, 3, stride, 1, groups=inp, bias=False),
                nn.BatchNorm2d(inp),
                nn.ReLU(inplace=True),
                nn.Conv2d(inp, oup, 1, 1, 0, bias=False),
                nn.BatchNorm2d(oup),
                nn.ReLU(inplace=True),
            )

        self.model = nn.Sequential(
            conv_bn(3, 32, 2),      # first layer: standard convolution
            conv_dw(32, 64, 1),     # remaining layers: depthwise separable convolutions
            conv_dw(64, 128, 2),
            conv_dw(128, 128, 1),
            conv_dw(128, 256, 2),
            conv_dw(256, 256, 1),
            conv_dw(256, 512, 2),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 1024, 2),
            conv_dw(1024, 1024, 1),
            nn.AvgPool2d(7),        # global average pooling; assumes a 224x224 input (7x7 final map)
        )
        self.fc = nn.Linear(1024, 1000)  # fully connected classifier

    def forward(self, x):
        x = self.model(x)
        x = x.view(-1, 1024)  # flatten (batch, 1024, 1, 1) -> (batch, 1024)
        x = self.fc(x)
        return x
```
