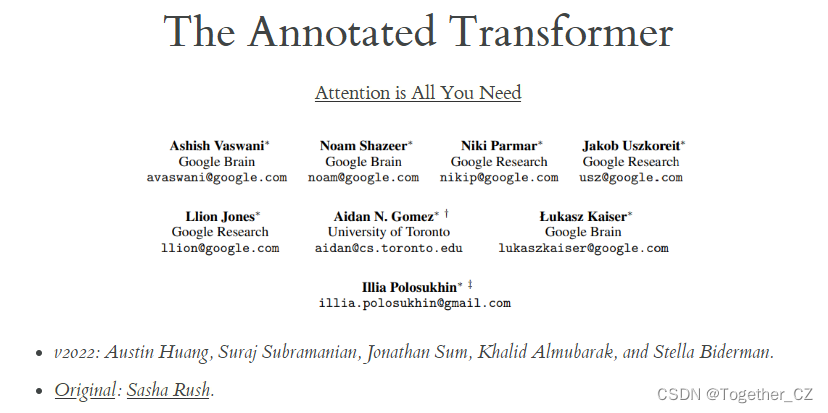


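While looking things up, I came across an article introducing the Transformer that I thought was very well written. I do not have time to study it in depth right now, so this post is a brief reading record. My English is limited, so I relied on machine translation to record what I read, and I will come back to it when I have more time.

The original article is The Annotated Transformer; its content follows:

Over the past five years, the Transformer has been on a lot of people's minds. This post presents an annotated version of the paper in the form of a line-by-line implementation. It reorders and deletes some sections from the original paper and adds comments throughout. This document itself is a working notebook, and should be a completely usable implementation.

Background

The goal of reducing sequential computation also forms the foundation of the Extended Neural GPU, ByteNet and ConvS2S, all of which use convolutional neural networks as the basic building block, computing hidden representations in parallel for all input and output positions. In these models, the number of operations required to relate signals from two arbitrary input or output positions grows with the distance between positions, linearly for ConvS2S and logarithmically for ByteNet. This makes it more difficult to learn dependencies between distant positions. In the Transformer this is reduced to a constant number of operations, albeit at the cost of reduced effective resolution due to averaging attention-weighted positions, an effect we counteract with multi-head attention.

Self-attention, sometimes called intra-attention, is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence. Self-attention has been used successfully in a variety of tasks including reading comprehension, abstractive summarization, textual entailment and learning task-independent sentence representations. End-to-end memory networks are based on a recurrent attention mechanism instead of sequence-aligned recurrence, and have been shown to perform well on simple-language question answering and language modeling tasks.

To the best of our knowledge, however, the Transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output without using sequence-aligned RNNs or convolution.

Part 1: Model Architecture

Model Architecture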

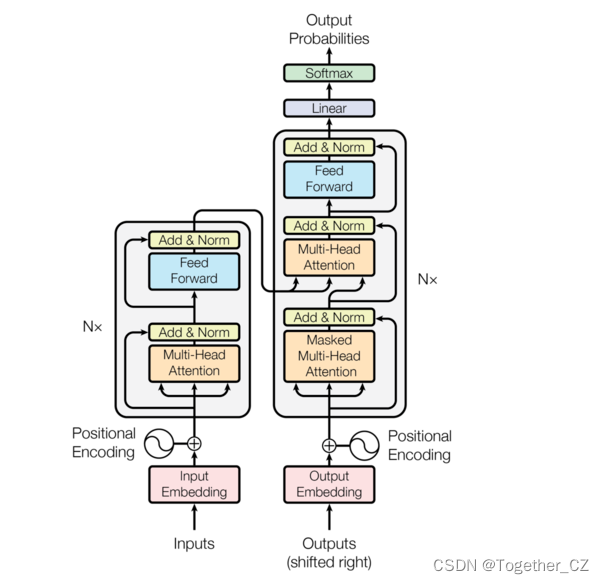

    # Imports used by the code in Parts 1 and 2.
    import copy
    import math
    import time

    import altair as alt
    import pandas as pd
    import torch
    import torch.nn as nn
    from torch.nn.functional import log_softmax, pad
    from torch.optim.lr_scheduler import LambdaLR


    class EncoderDecoder(nn.Module):
        """
        A standard Encoder-Decoder architecture. Base for this and many
        other models.
        """

        def __init__(self, encoder, decoder, src_embed, tgt_embed, generator):
            super(EncoderDecoder, self).__init__()
            self.encoder = encoder
            self.decoder = decoder
            self.src_embed = src_embed
            self.tgt_embed = tgt_embed
            self.generator = generator

        def forward(self, src, tgt, src_mask, tgt_mask):
            "Take in and process masked src and target sequences."
            return self.decode(self.encode(src, src_mask), src_mask, tgt, tgt_mask)

        def encode(self, src, src_mask):
            return self.encoder(self.src_embed(src), src_mask)

        def decode(self, memory, src_mask, tgt, tgt_mask):
            return self.decoder(self.tgt_embed(tgt), memory, src_mask, tgt_mask)


    class Generator(nn.Module):
        "Define standard linear + softmax generation step."

        def __init__(self, d_model, vocab):
            super(Generator, self).__init__()
            self.proj = nn.Linear(d_model, vocab)

        def forward(self, x):
            return log_softmax(self.proj(x), dim=-1)

The Transformer follows this overall architecture using stacked self-attention and point-wise, fully connected layers for both the encoder and decoder, shown in the left and right halves of Figure 1, respectively.

Encoder and Decoder Stacks

Encoder

The encoder is composed of a stack of N=6 identical layers.

    def clones(module, N):
        "Produce N identical layers."
        return nn.ModuleList([copy.deepcopy(module) for _ in range(N)])


    class Encoder(nn.Module):
        "Core encoder is a stack of N layers"

        def __init__(self, layer, N):
            super(Encoder, self).__init__()
            self.layers = clones(layer, N)
            self.norm = LayerNorm(layer.size)

        def forward(self, x, mask):
            "Pass the input (and mask) through each layer in turn."
            for layer in self.layers:
                x = layer(x, mask)
            return self.norm(x)

We employ a residual connection around each of the two sub-layers, followed by layer normalization.

    class LayerNorm(nn.Module):
        "Construct a layernorm module (See citation for details)."

        def __init__(self, features, eps=1e-6):
            super(LayerNorm, self).__init__()
            self.a_2 = nn.Parameter(torch.ones(features))
            self.b_2 = nn.Parameter(torch.zeros(features))
            self.eps = eps

        def forward(self, x):
            mean = x.mean(-1, keepdim=True)
            std = x.std(-1, keepdim=True)
            return self.a_2 * (x - mean) / (std + self.eps) + self.b_2

That is, the output of each sub-layer is LayerNorm(x + Sublayer(x)), where Sublayer(x) is the function implemented by the sub-layer itself. We apply dropout (cite) to the output of each sub-layer, before it is added to the sub-layer input and normalized. To facilitate these residual connections, all sub-layers in the model, as well as the embedding layers, produce outputs of dimension d_model = 512.
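To make the normalization step concrete, here is a minimal pure-Python sketch of what the LayerNorm module computes for a single feature vector. The function name `layer_norm` and the plain-list representation are assumptions made for illustration; the learned gain and bias are left at their initial values (1 and 0), and the standard deviation uses the unbiased estimator, which is the default of `torch.std`.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize one feature vector as (x - mean) / (std + eps)."""
    n = len(x)
    mean = sum(x) / n
    # Unbiased (n - 1) estimator, matching the default of torch.std.
    var = sum((v - mean) ** 2 for v in x) / (n - 1)
    std = math.sqrt(var)
    return [(v - mean) / (std + eps) for v in x]


normed = layer_norm([1.0, 2.0, 3.0, 4.0])
print([round(v, 3) for v in normed])
```

After normalization the values are centered on zero, so the scale of a sub-layer's output no longer depends on the scale of its input.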

    class SublayerConnection(nn.Module):
        """
        A residual connection followed by a layer norm.
        Note for code simplicity the norm is first as opposed to last.
        """

        def __init__(self, size, dropout):
            super(SublayerConnection, self).__init__()
            self.norm = LayerNorm(size)
            self.dropout = nn.Dropout(dropout)

        def forward(self, x, sublayer):
            "Apply residual connection to any sublayer with the same size."
            return x + self.dropout(sublayer(self.norm(x)))

Each layer has two sub-layers. The first is a multi-head self-attention mechanism, and the second is a simple, position-wise fully connected feed-forward network.

    class EncoderLayer(nn.Module):
        "Encoder is made up of self-attn and feed forward (defined below)"

        def __init__(self, size, self_attn, feed_forward, dropout):
            super(EncoderLayer, self).__init__()
            self.self_attn = self_attn
            self.feed_forward = feed_forward
            self.sublayer = clones(SublayerConnection(size, dropout), 2)
            self.size = size

        def forward(self, x, mask):
            "Follow Figure 1 (left) for connections."
            x = self.sublayer[0](x, lambda x: self.self_attn(x, x, x, mask))
            return self.sublayer[1](x, self.feed_forward)

Decoder

The decoder is also composed of a stack of N=6 identical layers.

    class Decoder(nn.Module):
        "Generic N layer decoder with masking."

        def __init__(self, layer, N):
            super(Decoder, self).__init__()
            self.layers = clones(layer, N)
            self.norm = LayerNorm(layer.size)

        def forward(self, x, memory, src_mask, tgt_mask):
            for layer in self.layers:
                x = layer(x, memory, src_mask, tgt_mask)
            return self.norm(x)

In addition to the two sub-layers in each encoder layer, the decoder inserts a third sub-layer, which performs multi-head attention over the output of the encoder stack. Similar to the encoder, we employ residual connections around each of the sub-layers, followed by layer normalization.

    class DecoderLayer(nn.Module):
        "Decoder is made of self-attn, src-attn, and feed forward (defined below)"

        def __init__(self, size, self_attn, src_attn, feed_forward, dropout):
            super(DecoderLayer, self).__init__()
            self.size = size
            self.self_attn = self_attn
            self.src_attn = src_attn
            self.feed_forward = feed_forward
            self.sublayer = clones(SublayerConnection(size, dropout), 3)

        def forward(self, x, memory, src_mask, tgt_mask):
            "Follow Figure 1 (right) for connections."
            m = memory
            x = self.sublayer[0](x, lambda x: self.self_attn(x, x, x, tgt_mask))
            x = self.sublayer[1](x, lambda x: self.src_attn(x, m, m, src_mask))
            return self.sublayer[2](x, self.feed_forward)

We also modify the self-attention sub-layer in the decoder stack to prevent positions from attending to subsequent positions. This masking, combined with the fact that the output embeddings are offset by one position, ensures that the predictions for position i can depend only on the known outputs at positions less than i.

    def subsequent_mask(size):
        "Mask out subsequent positions."
        attn_shape = (1, size, size)
        subsequent_mask = torch.triu(torch.ones(attn_shape), diagonal=1).type(
            torch.uint8
        )
        return subsequent_mask == 0

The attention mask below shows the positions each tgt word (row) is allowed to look at (column). Words are blocked from attending to future words during training.

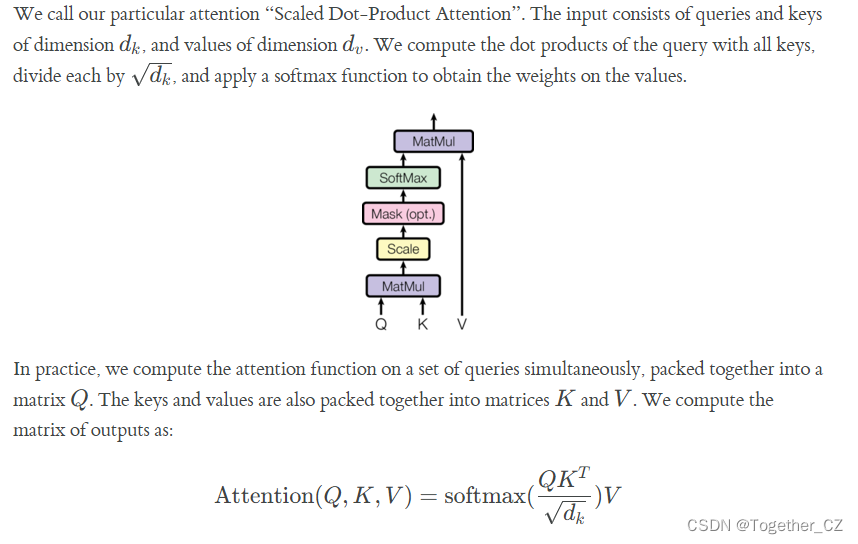

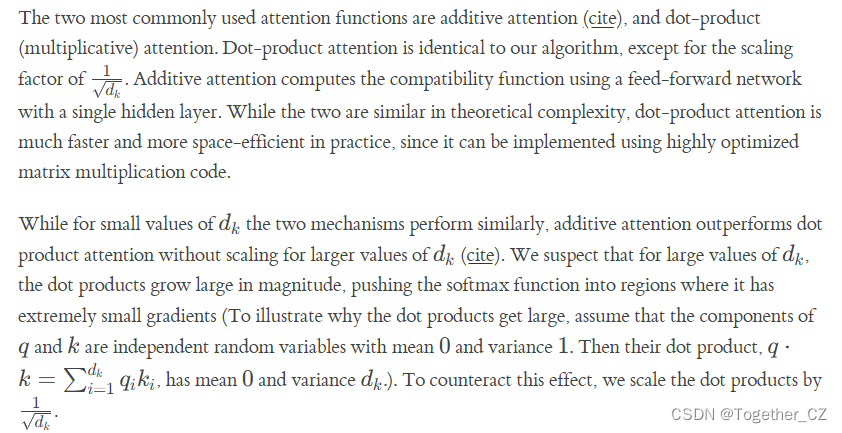
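For readers without the plotting stack, the same lower-triangular pattern can be printed as text. This is a small pure-Python sketch, not part of the original notebook; the helper name `causal_mask` is hypothetical, and it returns the same allowed/blocked pattern as `subsequent_mask(size)[0]`.

```python
def causal_mask(size):
    """Row i marks which positions j the i-th target token may attend to."""
    return [[j <= i for j in range(size)] for i in range(size)]


mask = causal_mask(4)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
# x...
# xx..
# xxx.
# xxxx
```

Each row gains one more visible position than the row above it, which is exactly the "no looking ahead" constraint.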

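    # show_example, like the other notebook helpers used in this post
    # (execute_example, is_interactive_notebook, DummyOptimizer, DummyScheduler),
    # is defined in the original notebook and is not reproduced here.
    def example_mask():
        LS_data = pd.concat(
            [
                pd.DataFrame(
                    {
                        "Subsequent Mask": subsequent_mask(20)[0][x, y].flatten(),
                        "Window": y,
                        "Masking": x,
                    }
                )
                for y in range(20)
                for x in range(20)
            ]
        )

        return (
            alt.Chart(LS_data)
            .mark_rect()
            .properties(height=250, width=250)
            .encode(
                alt.X("Window:O"),
                alt.Y("Masking:O"),
                alt.Color("Subsequent Mask:Q", scale=alt.Scale(scheme="viridis")),
            )
            .interactive()
        )


    show_example(example_mask)

An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.

    def attention(query, key, value, mask=None, dropout=None):
        "Compute 'Scaled Dot Product Attention'"
        d_k = query.size(-1)
        scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)
        if mask is not None:
            scores = scores.masked_fill(mask == 0, -1e9)
        p_attn = scores.softmax(dim=-1)
        if dropout is not None:
            p_attn = dropout(p_attn)
        return torch.matmul(p_attn, value), p_attn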
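As a worked illustration of the same computation on numbers small enough to check by hand, the sketch below re-implements scaled dot-product attention with plain Python lists. The names `softmax` and `toy_attention` are assumptions made for this example; masking and dropout are omitted.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def toy_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Compatibility of this query with every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append(
            [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
out, attn = toy_attention([[5.0, 0.0]], keys, values)
print(attn[0], out[0])
```

The query points in the direction of the first key, so almost all of the attention weight, and therefore almost all of the output, comes from the first value vector.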

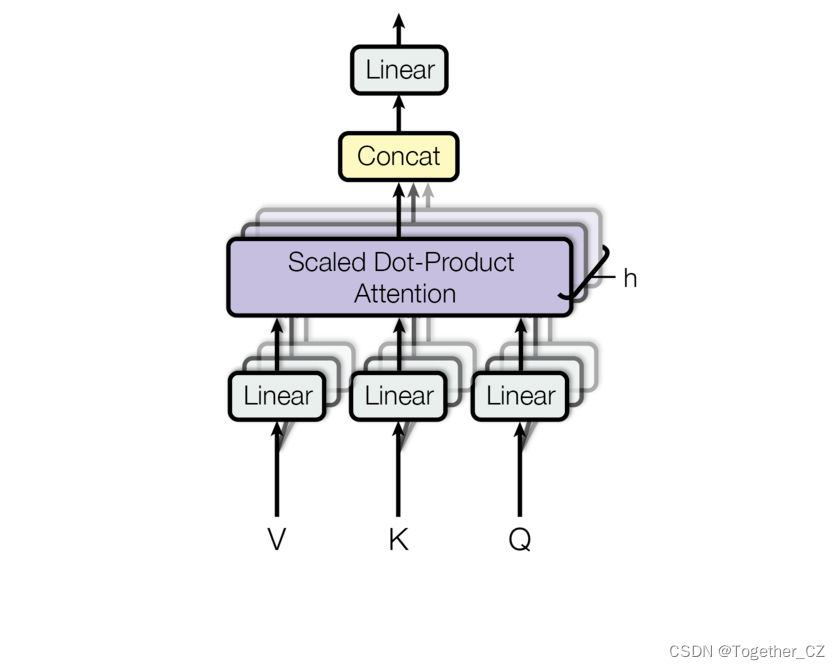

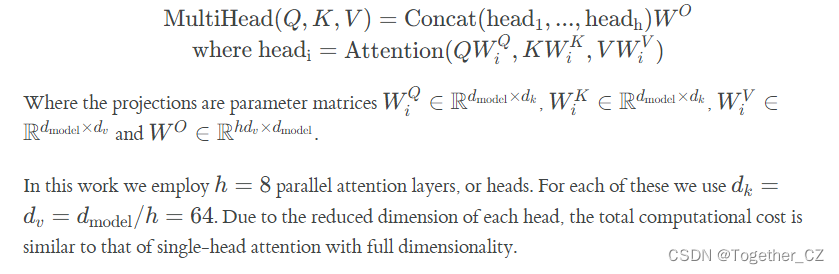

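Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions. With a single attention head, averaging inhibits this.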
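The bookkeeping behind the heads is just a reshape: a vector of size d_model is cut into h chunks of size d_k = d_model / h, each head attends within its own chunk, and the chunks are concatenated again. The sketch below shows only that split and merge on a plain list; the function names are hypothetical, and the learned linear projections applied before the split and after the merge are left out.

```python
def split_heads(vector, h):
    """Cut a d_model-sized vector into h chunks of size d_k = d_model // h."""
    d_model = len(vector)
    assert d_model % h == 0, "d_model must be divisible by the number of heads"
    d_k = d_model // h
    return [vector[i * d_k:(i + 1) * d_k] for i in range(h)]


def merge_heads(heads):
    """Concatenate the per-head chunks back into one vector."""
    return [v for head in heads for v in head]


x = list(range(8))
heads = split_heads(x, h=4)
print(heads)  # [[0, 1], [2, 3], [4, 5], [6, 7]]
```

With the defaults used later in `make_model` (d_model=512, h=8), each head works on d_k = 64 dimensions.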

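    class MultiHeadedAttention(nn.Module):
        def __init__(self, h, d_model, dropout=0.1):
            "Take in model size and number of heads."
            super(MultiHeadedAttention, self).__init__()
            assert d_model % h == 0
            # We assume d_v always equals d_k
            self.d_k = d_model // h
            self.h = h
            self.linears = clones(nn.Linear(d_model, d_model), 4)
            self.attn = None
            self.dropout = nn.Dropout(p=dropout)

        def forward(self, query, key, value, mask=None):
            "Implements Figure 2"
            if mask is not None:
                # Same mask applied to all h heads.
                mask = mask.unsqueeze(1)
            nbatches = query.size(0)

            # 1) Do all the linear projections in batch from d_model => h x d_k
            query, key, value = [
                lin(x).view(nbatches, -1, self.h, self.d_k).transpose(1, 2)
                for lin, x in zip(self.linears, (query, key, value))
            ]

            # 2) Apply attention on all the projected vectors in batch.
            x, self.attn = attention(
                query, key, value, mask=mask, dropout=self.dropout
            )

            # 3) "Concat" using a view and apply a final linear.
            x = (
                x.transpose(1, 2)
                .contiguous()
                .view(nbatches, -1, self.h * self.d_k)
            )
            del query
            del key
            del value
            return self.linears[-1](x)

Applications of Attention in our Model

The Transformer uses multi-head attention in three different ways:

1) In "encoder-decoder attention" layers, the queries come from the previous decoder layer, and the memory keys and values come from the output of the encoder. This allows every position in the decoder to attend over all positions in the input sequence. This mimics the typical encoder-decoder attention mechanisms in sequence-to-sequence models.

2) The encoder contains self-attention layers. In a self-attention layer all of the keys, values and queries come from the same place, in this case the output of the previous layer in the encoder. Each position in the encoder can attend to all positions in the previous layer of the encoder.

3) Similarly, self-attention layers in the decoder allow each position in the decoder to attend to all positions in the decoder up to and including that position. We need to prevent leftward information flow in the decoder to preserve the auto-regressive property. We implement this inside of scaled dot-product attention by masking out (setting to −∞) all values in the input of the softmax which correspond to illegal connections.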

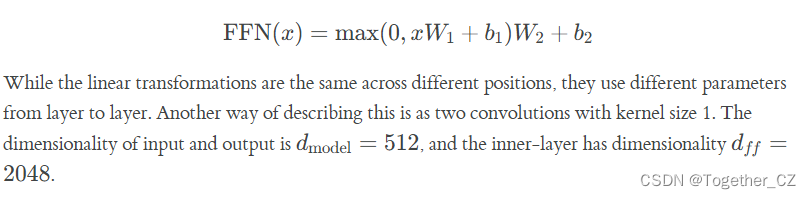

位置前馈网络

除了注意子层之外,我们的编码器和解码器中的每一层都包含一个完全连接的前馈网络,该网络分别且相同地应用于每个位置。这包括两个线性变换,中间有ReLU激活。

class PositionwiseFeedForward(nn.Module):"Implements FFN equation."def __init__(self, d_model, d_ff, dropout=0.1):super(PositionwiseFeedForward, self).__init__()self.w_1 = nn.Linear(d_model, d_ff)self.w_2 = nn.Linear(d_ff, d_model)self.dropout = nn.Dropout(dropout)def forward(self, x):return self.w_2(self.dropout(self.w_1(x).relu()))嵌入和Softmax

与其他序列转换模型类似,我们使用学习嵌入将输入标记和输出标记转换为维度为dmodel的向量。我们还使用通常学习的线性变换和softmax函数将解码器输出转换为预测的下一个令牌概率。在我们的模型中,我们在两个嵌入层和预softmax线性变换之间共享相同的权重矩阵,类似于(cite)。在嵌入层中,我们将这些权重乘以1/sqrt(dmodel)。

class Embeddings(nn.Module):def __init__(self, d_model, vocab):super(Embeddings, self).__init__()self.lut = nn.Embedding(vocab, d_model)self.d_model = d_modeldef forward(self, x):return self.lut(x) * math.sqrt(self.d_model)位置编码

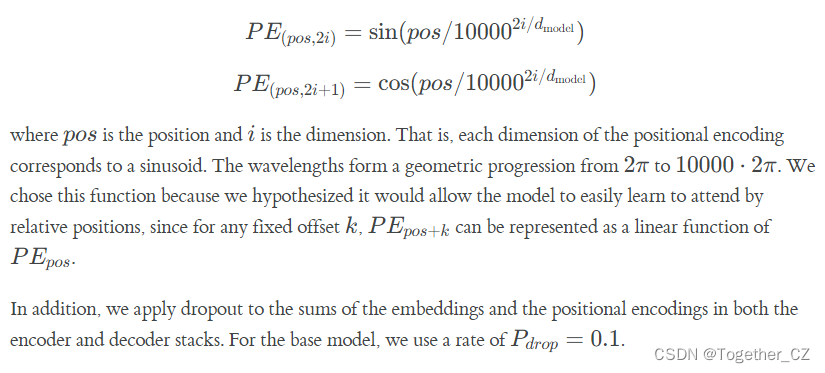

由于我们的模型不包含递归和卷积,为了使模型利用序列的顺序,我们必须在序列中注入一些关于标记的相对或绝对位置的信息。为此,我们将“位置编码”添加到编码器和解码器堆栈底部的输入嵌入中。位置编码具有与嵌入相同的维度dmodel,因此可以将两者相加。有许多位置编码的选择,学习和固定(引用)。

在这项工作中,我们使用不同频率的正弦和余弦函数:

class PositionalEncoding(nn.Module):"Implement the PE function."def __init__(self, d_model, dropout, max_len=5000):super(PositionalEncoding, self).__init__()self.dropout = nn.Dropout(p=dropout)# Compute the positional encodings once in log space.pe = torch.zeros(max_len, d_model)position = torch.arange(0, max_len).unsqueeze(1)div_term = torch.exp(torch.arange(0, d_model, 2) * -(math.log(10000.0) / d_model))pe[:, 0::2] = torch.sin(position * div_term)pe[:, 1::2] = torch.cos(position * div_term)pe = pe.unsqueeze(0)self.register_buffer("pe", pe)def forward(self, x):x = x + self.pe[:, : x.size(1)].requires_grad_(False)return self.dropout(x)下面的位置编码将根据位置添加正弦波。波的频率和偏移对于每个维度都是不同的。

def example_positional():pe = PositionalEncoding(20, 0)y = pe.forward(torch.zeros(1, 100, 20))data = pd.concat([pd.DataFrame({"embedding": y[0, :, dim],"dimension": dim,"position": list(range(100)),})for dim in [4, 5, 6, 7]])return (alt.Chart(data).mark_line().properties(width=800).encode(x="position", y="embedding", color="dimension:N").interactive())show_example(example_positional)我们还尝试使用学习的位置嵌入替代,发现这两个版本产生了几乎相同的结果。我们选择正弦版本,因为它可能允许模型外推序列长度比训练过程中遇到的更长。
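The values stored in the `pe` buffer can also be computed one position at a time, which makes the formula easier to inspect. The following is a pure-Python sketch of the same sinusoidal scheme; the function name `positional_encoding` is an assumption for this example, and dropout is not applied.

```python
import math


def positional_encoding(pos, d_model):
    """Sinusoidal encoding of one position: sine on even dims, cosine on odd."""
    pe = [0.0] * d_model
    for i in range(0, d_model, 2):
        # Each pair of dimensions shares one wavelength, growing with i.
        angle = pos / (10000 ** (i / d_model))
        pe[i] = math.sin(angle)
        if i + 1 < d_model:
            pe[i + 1] = math.cos(angle)
    return pe


pe0 = positional_encoding(0, 4)
pe1 = positional_encoding(1, 4)
print(pe0)  # [0.0, 1.0, 0.0, 1.0]
```

Position 0 always encodes to alternating 0 and 1, and later positions rotate each sine/cosine pair at its own frequency.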

Full Model

Here we define a function from hyperparameters to a full model.

    def make_model(
        src_vocab, tgt_vocab, N=6, d_model=512, d_ff=2048, h=8, dropout=0.1
    ):
        "Helper: Construct a model from hyperparameters."
        c = copy.deepcopy
        attn = MultiHeadedAttention(h, d_model)
        ff = PositionwiseFeedForward(d_model, d_ff, dropout)
        position = PositionalEncoding(d_model, dropout)
        model = EncoderDecoder(
            Encoder(EncoderLayer(d_model, c(attn), c(ff), dropout), N),
            Decoder(DecoderLayer(d_model, c(attn), c(attn), c(ff), dropout), N),
            nn.Sequential(Embeddings(d_model, src_vocab), c(position)),
            nn.Sequential(Embeddings(d_model, tgt_vocab), c(position)),
            Generator(d_model, tgt_vocab),
        )

        # This was important from their code.
        # Initialize parameters with Glorot / fan_avg.
        for p in model.parameters():
            if p.dim() > 1:
                nn.init.xavier_uniform_(p)
        return model

Inference

Here we make a forward step to generate a prediction of the model. We try to use our Transformer to memorize the input. As you will see, the output is randomly generated because the model is not trained yet. In the next tutorial we will build the training function and try to train our model to memorize the numbers from 1 to 10.

    def inference_test():
        test_model = make_model(11, 11, 2)
        test_model.eval()
        src = torch.LongTensor([[1, 2, 3, 4, 5, 6, 7, 8, 9, 10]])
        src_mask = torch.ones(1, 1, 10)

        memory = test_model.encode(src, src_mask)
        ys = torch.zeros(1, 1).type_as(src)

        for i in range(9):
            out = test_model.decode(
                memory, src_mask, ys, subsequent_mask(ys.size(1)).type_as(src.data)
            )
            prob = test_model.generator(out[:, -1])
            _, next_word = torch.max(prob, dim=1)
            next_word = next_word.data[0]
            ys = torch.cat(
                [ys, torch.empty(1, 1).type_as(src.data).fill_(next_word)], dim=1
            )

        print("Example Untrained Model Prediction:", ys)


    def run_tests():
        for _ in range(10):
            inference_test()


    show_example(run_tests)

The output is as follows:

    Example Untrained Model Prediction: tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0]])
    Example Untrained Model Prediction: tensor([[0, 3, 4, 4, 4, 4, 4, 4, 4, 4]])
    Example Untrained Model Prediction: tensor([[ 0, 10, 10, 10, 3, 2, 5, 7, 9, 6]])
    Example Untrained Model Prediction: tensor([[ 0, 4, 3, 6, 10, 10, 2, 6, 2, 2]])
    Example Untrained Model Prediction: tensor([[ 0, 9, 0, 1, 5, 10, 1, 5, 10, 6]])
    Example Untrained Model Prediction: tensor([[ 0, 1, 5, 1, 10, 1, 10, 10, 10, 10]])
    Example Untrained Model Prediction: tensor([[ 0, 1, 10, 9, 9, 9, 9, 9, 1, 5]])
    Example Untrained Model Prediction: tensor([[ 0, 3, 1, 5, 10, 10, 10, 10, 10, 10]])
    Example Untrained Model Prediction: tensor([[ 0, 3, 5, 10, 5, 10, 4, 2, 4, 2]])
    Example Untrained Model Prediction: tensor([[0, 5, 6, 2, 5, 6, 2, 6, 2, 2]])

Part 2: Model Training

Training

We now pause for a quick interlude to introduce some of the tools needed to train a standard encoder-decoder model. First we define a batch object that holds the src and target sentences for training, as well as constructing the masks.
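The key trick in the batch object is that the target sentence is used twice: everything but the last token is fed to the decoder, and everything but the first token is what the decoder must predict. The sketch below shows that shift on a single sentence using plain lists; `shift_target` is a hypothetical helper, and the token ids follow the special-token order used later in this post (0 = `<s>`, 1 = `</s>`, 2 = `<blank>`).

```python
def shift_target(tgt, pad=2):
    """Split one target sentence into decoder input and prediction labels."""
    decoder_input = tgt[:-1]  # what the decoder sees
    labels = tgt[1:]  # what it has to predict at each step
    # Padding positions do not count towards the loss normalization.
    ntokens = sum(1 for tok in labels if tok != pad)
    return decoder_input, labels, ntokens


decoder_input, labels, ntokens = shift_target([0, 7, 8, 9, 1, 2, 2])
print(decoder_input)  # [0, 7, 8, 9, 1, 2]
print(labels)  # [7, 8, 9, 1, 2, 2]
print(ntokens)  # 4
```

At step i the decoder has seen `decoder_input[: i + 1]` and is scored against `labels[i]`, which is the next token of the original sentence.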

Batches and Masking

    class Batch:
        """Object for holding a batch of data with mask during training."""

        def __init__(self, src, tgt=None, pad=2):  # 2 = <blank>
            self.src = src
            self.src_mask = (src != pad).unsqueeze(-2)
            if tgt is not None:
                self.tgt = tgt[:, :-1]
                self.tgt_y = tgt[:, 1:]
                self.tgt_mask = self.make_std_mask(self.tgt, pad)
                self.ntokens = (self.tgt_y != pad).data.sum()

        @staticmethod
        def make_std_mask(tgt, pad):
            "Create a mask to hide padding and future words."
            tgt_mask = (tgt != pad).unsqueeze(-2)
            tgt_mask = tgt_mask & subsequent_mask(tgt.size(-1)).type_as(
                tgt_mask.data
            )
            return tgt_mask

Next we create a generic training and scoring function to keep track of loss. We pass in a generic loss compute function that also handles parameter updates.

Training Loop

    class TrainState:
        """Track number of steps, examples, and tokens processed"""

        step: int = 0  # Steps in the current epoch
        accum_step: int = 0  # Number of gradient accumulation steps
        samples: int = 0  # total # of examples used
        tokens: int = 0  # total # of tokens processed


    def run_epoch(
        data_iter,
        model,
        loss_compute,
        optimizer,
        scheduler,
        mode="train",
        accum_iter=1,
        train_state=TrainState(),
    ):
        """Train a single epoch"""
        start = time.time()
        total_tokens = 0
        total_loss = 0
        tokens = 0
        n_accum = 0
        for i, batch in enumerate(data_iter):
            out = model.forward(
                batch.src, batch.tgt, batch.src_mask, batch.tgt_mask
            )
            loss, loss_node = loss_compute(out, batch.tgt_y, batch.ntokens)
            # loss_node = loss_node / accum_iter
            if mode == "train" or mode == "train+log":
                loss_node.backward()
                train_state.step += 1
                train_state.samples += batch.src.shape[0]
                train_state.tokens += batch.ntokens
                if i % accum_iter == 0:
                    optimizer.step()
                    optimizer.zero_grad(set_to_none=True)
                    n_accum += 1
                    train_state.accum_step += 1
                scheduler.step()

            total_loss += loss
            total_tokens += batch.ntokens
            tokens += batch.ntokens
            if i % 40 == 1 and (mode == "train" or mode == "train+log"):
                lr = optimizer.param_groups[0]["lr"]
                elapsed = time.time() - start
                print(
                    (
                        "Epoch Step: %6d | Accumulation Step: %3d | Loss: %6.2f "
                        + "| Tokens / Sec: %7.1f | Learning Rate: %6.1e"
                    )
                    % (i, n_accum, loss / batch.ntokens, tokens / elapsed, lr)
                )
                start = time.time()
                tokens = 0
            del loss
            del loss_node
        return total_loss / total_tokens, train_state

Training Data and Batching

We trained on the standard WMT 2014 English-German dataset consisting of about 4.5 million sentence pairs. Sentences were encoded using byte-pair encoding, which has a shared source-target vocabulary of about 37000 tokens. For English-French, we used the significantly larger WMT 2014 English-French dataset consisting of 36M sentences and split tokens into a 32000 word-piece vocabulary.

Sentence pairs were batched together by approximate sequence length. Each training batch contained a set of sentence pairs containing approximately 25000 source tokens and 25000 target tokens.
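One common way to batch by approximate length is to sort the sentence pairs by length and close a batch once its padded size would exceed a token budget. The sketch below illustrates that idea only; it is an assumption for illustration, not the pipeline used in the paper, and `batch_by_tokens` and its `max_tokens` parameter are hypothetical names.

```python
def batch_by_tokens(pairs, max_tokens):
    """Group (src, tgt) token lists into batches of similar length.

    A batch is closed once adding another pair would push its padded size
    (number of pairs x longest sequence) past max_tokens.
    """
    batches, current, longest = [], [], 0
    for src, tgt in sorted(pairs, key=lambda p: max(len(p[0]), len(p[1]))):
        new_longest = max(longest, len(src), len(tgt))
        if current and (len(current) + 1) * new_longest > max_tokens:
            batches.append(current)
            current, new_longest = [], max(len(src), len(tgt))
        current.append((src, tgt))
        longest = new_longest
    if current:
        batches.append(current)
    return batches


# Six toy sentence pairs with lengths 2, 9, 3, 8, 2 and 10.
pairs = [(["a"] * n, ["b"] * n) for n in (2, 9, 3, 8, 2, 10)]
batches = batch_by_tokens(pairs, max_tokens=20)
print([len(b) for b in batches])  # [3, 2, 1]
```

Short sentences end up together in large batches and long sentences in small ones, so little of each batch is wasted on padding.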

Hardware and Schedule

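We trained our models on one machine with 8 NVIDIA P100 GPUs. For our base models using the hyperparameters described throughout the paper, each training step took about 0.4 seconds. We trained the base models for a total of 100,000 steps or 12 hours. For our big models, step time was 1.0 seconds. The big models were trained for 300,000 steps (3.5 days).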

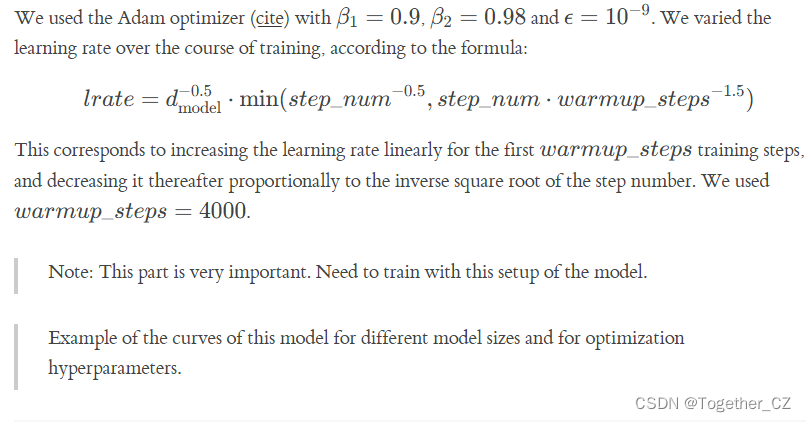

Optimizer
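The `rate` function in this section implements a schedule that raises the learning rate linearly over the first `warmup` steps and then decays it in proportion to the inverse square root of the step number. Written out with the argument names of `rate` (the symbol lrate is just a label chosen here), and evaluated at its peak for the first example setting, it reads:

$$
\text{lrate} = \text{factor} \cdot d_{\text{model}}^{-0.5} \cdot \min\left(\text{step}^{-0.5},\ \text{step} \cdot \text{warmup}^{-1.5}\right)
$$

$$
\text{step} = \text{warmup} \;\Rightarrow\; \text{lrate}_{\max} = \text{factor} \cdot \left(d_{\text{model}} \cdot \text{warmup}\right)^{-0.5} \approx 7.0 \times 10^{-4} \quad (\text{factor} = 1,\ d_{\text{model}} = 512,\ \text{warmup} = 4000)
$$

The two arguments of the minimum are equal exactly at step = warmup, which is where the linear ramp hands over to the decay.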

    def rate(step, model_size, factor, warmup):
        """
        we have to default the step to 1 for LambdaLR function
        to avoid zero raising to negative power.
        """
        if step == 0:
            step = 1
        return factor * (
            model_size ** (-0.5) * min(step ** (-0.5), step * warmup ** (-1.5))
        )


    def example_learning_schedule():
        opts = [
            [512, 1, 4000],  # example 1
            [512, 1, 8000],  # example 2
            [256, 1, 4000],  # example 3
        ]

        dummy_model = torch.nn.Linear(1, 1)
        learning_rates = []

        # we have 3 examples in opts list.
        for idx, example in enumerate(opts):
            # run 20000 epoch for each example
            optimizer = torch.optim.Adam(
                dummy_model.parameters(), lr=1, betas=(0.9, 0.98), eps=1e-9
            )
            lr_scheduler = LambdaLR(
                optimizer=optimizer, lr_lambda=lambda step: rate(step, *example)
            )
            tmp = []
            # take 20K dummy training steps, save the learning rate at each step
            for step in range(20000):
                tmp.append(optimizer.param_groups[0]["lr"])
                optimizer.step()
                lr_scheduler.step()
            learning_rates.append(tmp)

        learning_rates = torch.tensor(learning_rates)

        # Enable altair to handle more than 5000 rows
        alt.data_transformers.disable_max_rows()

        opts_data = pd.concat(
            [
                pd.DataFrame(
                    {
                        "Learning Rate": learning_rates[warmup_idx, :],
                        "model_size:warmup": ["512:4000", "512:8000", "256:4000"][
                            warmup_idx
                        ],
                        "step": range(20000),
                    }
                )
                for warmup_idx in [0, 1, 2]
            ]
        )

        return (
            alt.Chart(opts_data)
            .mark_line()
            .properties(width=600)
            .encode(x="step", y="Learning Rate", color="model_size:warmup:N")
            .interactive()
        )


    example_learning_schedule()

Regularization

Label Smoothing

During training, we employed label smoothing of value ε_ls = 0.1. This hurts perplexity, as the model learns to be more unsure, but improves accuracy and BLEU score.

We implement label smoothing using the KL div loss. Instead of using a one-hot target distribution, we create a distribution that has confidence of the correct word and the rest of the smoothing mass distributed throughout the vocabulary.
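To see what such a target distribution looks like for one token, the sketch below builds it with plain Python lists. The function name `smoothed_target` is an assumption for this example; it follows the same recipe as the `LabelSmoothing` class (the smoothing mass is split over every class except the target and the padding index), but omits the KL-divergence loss itself.

```python
def smoothed_target(size, target, padding_idx=0, smoothing=0.1):
    """Target distribution used by label smoothing for a single token."""
    if target == padding_idx:
        # Padding positions contribute nothing to the loss.
        return [0.0] * size
    # Spread the smoothing mass over all classes except target and padding.
    dist = [smoothing / (size - 2)] * size
    dist[target] = 1.0 - smoothing
    dist[padding_idx] = 0.0
    return dist


dist = smoothed_target(size=5, target=2, padding_idx=0, smoothing=0.4)
print([round(p, 3) for p in dist])  # [0.0, 0.133, 0.6, 0.133, 0.133]
```

The correct class keeps probability 0.6, the three remaining non-padding classes share the other 0.4, and the distribution still sums to 1.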

    class LabelSmoothing(nn.Module):
        "Implement label smoothing."

        def __init__(self, size, padding_idx, smoothing=0.0):
            super(LabelSmoothing, self).__init__()
            self.criterion = nn.KLDivLoss(reduction="sum")
            self.padding_idx = padding_idx
            self.confidence = 1.0 - smoothing
            self.smoothing = smoothing
            self.size = size
            self.true_dist = None

        def forward(self, x, target):
            assert x.size(1) == self.size
            true_dist = x.data.clone()
            true_dist.fill_(self.smoothing / (self.size - 2))
            true_dist.scatter_(1, target.data.unsqueeze(1), self.confidence)
            true_dist[:, self.padding_idx] = 0
            mask = torch.nonzero(target.data == self.padding_idx)
            if mask.dim() > 0:
                true_dist.index_fill_(0, mask.squeeze(), 0.0)
            self.true_dist = true_dist
            return self.criterion(x, true_dist.clone().detach())

Here we can see an example of how the mass is distributed to the words based on confidence.

    # Example of label smoothing.
    def example_label_smoothing():
        crit = LabelSmoothing(5, 0, 0.4)
        predict = torch.FloatTensor(
            [
                [0, 0.2, 0.7, 0.1, 0],
                [0, 0.2, 0.7, 0.1, 0],
                [0, 0.2, 0.7, 0.1, 0],
                [0, 0.2, 0.7, 0.1, 0],
                [0, 0.2, 0.7, 0.1, 0],
            ]
        )
        crit(x=predict.log(), target=torch.LongTensor([2, 1, 0, 3, 3]))
        LS_data = pd.concat(
            [
                pd.DataFrame(
                    {
                        "target distribution": crit.true_dist[x, y].flatten(),
                        "columns": y,
                        "rows": x,
                    }
                )
                for y in range(5)
                for x in range(5)
            ]
        )

        return (
            alt.Chart(LS_data)
            .mark_rect(color="Blue", opacity=1)
            .properties(height=200, width=200)
            .encode(
                alt.X("columns:O", title=None),
                alt.Y("rows:O", title=None),
                alt.Color(
                    "target distribution:Q", scale=alt.Scale(scheme="viridis")
                ),
            )
            .interactive()
        )


    show_example(example_label_smoothing)

Label smoothing actually starts to penalize the model if it gets very confident about a given choice.

    def loss(x, crit):
        d = x + 3 * 1
        predict = torch.FloatTensor([[0, x / d, 1 / d, 1 / d, 1 / d]])
        return crit(predict.log(), torch.LongTensor([1])).data


    def penalization_visualization():
        crit = LabelSmoothing(5, 0, 0.1)
        loss_data = pd.DataFrame(
            {
                "Loss": [loss(x, crit) for x in range(1, 100)],
                "Steps": list(range(99)),
            }
        ).astype("float")

        return (
            alt.Chart(loss_data)
            .mark_line()
            .properties(width=350)
            .encode(
                x="Steps",
                y="Loss",
            )
            .interactive()
        )


    show_example(penalization_visualization)

A First Example

We can begin by trying out a simple copy task. Given a random set of input symbols from a small vocabulary, the goal is to generate back those same symbols.

Synthetic Data

    def data_gen(V, batch_size, nbatches):
        "Generate random data for a src-tgt copy task."
        for i in range(nbatches):
            data = torch.randint(1, V, size=(batch_size, 10))
            data[:, 0] = 1
            src = data.requires_grad_(False).clone().detach()
            tgt = data.requires_grad_(False).clone().detach()
            yield Batch(src, tgt, 0)

Loss Computation

    class SimpleLossCompute:
        "A simple loss compute and train function."

        def __init__(self, generator, criterion):
            self.generator = generator
            self.criterion = criterion

        def __call__(self, x, y, norm):
            x = self.generator(x)
            sloss = (
                self.criterion(
                    x.contiguous().view(-1, x.size(-1)), y.contiguous().view(-1)
                )
                / norm
            )
            return sloss.data * norm, sloss

Greedy Decoding

    def greedy_decode(model, src, src_mask, max_len, start_symbol):
        memory = model.encode(src, src_mask)
        ys = torch.zeros(1, 1).fill_(start_symbol).type_as(src.data)
        for i in range(max_len - 1):
            out = model.decode(
                memory, src_mask, ys, subsequent_mask(ys.size(1)).type_as(src.data)
            )
            prob = model.generator(out[:, -1])
            _, next_word = torch.max(prob, dim=1)
            next_word = next_word.data[0]
            ys = torch.cat(
                [ys, torch.zeros(1, 1).type_as(src.data).fill_(next_word)], dim=1
            )
        return ys


    # Train the simple copy task.
    def example_simple_model():
        V = 11
        criterion = LabelSmoothing(size=V, padding_idx=0, smoothing=0.0)
        model = make_model(V, V, N=2)

        optimizer = torch.optim.Adam(
            model.parameters(), lr=0.5, betas=(0.9, 0.98), eps=1e-9
        )
        lr_scheduler = LambdaLR(
            optimizer=optimizer,
            lr_lambda=lambda step: rate(
                step, model_size=model.src_embed[0].d_model, factor=1.0, warmup=400
            ),
        )

        batch_size = 80
        for epoch in range(20):
            model.train()
            run_epoch(
                data_gen(V, batch_size, 20),
                model,
                SimpleLossCompute(model.generator, criterion),
                optimizer,
                lr_scheduler,
                mode="train",
            )
            model.eval()
            run_epoch(
                data_gen(V, batch_size, 5),
                model,
                SimpleLossCompute(model.generator, criterion),
                DummyOptimizer(),
                DummyScheduler(),
                mode="eval",
            )[0]

        model.eval()
        src = torch.LongTensor([[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]])
        max_len = src.shape[1]
        src_mask = torch.ones(1, 1, max_len)
        print(greedy_decode(model, src, src_mask, max_len=max_len, start_symbol=0))


    # execute_example(example_simple_model)

Part 3: A Real World Example

Now we consider a real-world example using the Multi30k German-English translation task. This task is much smaller than the WMT task considered in the paper, but it illustrates the whole system. We also show how to use multi-GPU processing to make it really fast.

Data Loading

We will load the dataset using torchtext and spacy for tokenization.

    # Additional imports used by the data-loading code.
    import os
    from os.path import exists

    import spacy
    import torchtext.datasets as datasets
    from torchtext.vocab import build_vocab_from_iterator


    # Load spacy tokenizer models, download them if they haven't been
    # downloaded already
    def load_tokenizers():
        try:
            spacy_de = spacy.load("de_core_news_sm")
        except IOError:
            os.system("python -m spacy download de_core_news_sm")
            spacy_de = spacy.load("de_core_news_sm")

        try:
            spacy_en = spacy.load("en_core_web_sm")
        except IOError:
            os.system("python -m spacy download en_core_web_sm")
            spacy_en = spacy.load("en_core_web_sm")

        return spacy_de, spacy_en


    def tokenize(text, tokenizer):
        return [tok.text for tok in tokenizer.tokenizer(text)]


    def yield_tokens(data_iter, tokenizer, index):
        for from_to_tuple in data_iter:
            yield tokenizer(from_to_tuple[index])


    def build_vocabulary(spacy_de, spacy_en):
        def tokenize_de(text):
            return tokenize(text, spacy_de)

        def tokenize_en(text):
            return tokenize(text, spacy_en)

        print("Building German Vocabulary ...")
        train, val, test = datasets.Multi30k(language_pair=("de", "en"))
        vocab_src = build_vocab_from_iterator(
            yield_tokens(train + val + test, tokenize_de, index=0),
            min_freq=2,
            specials=["<s>", "</s>", "<blank>", "<unk>"],
        )

        print("Building English Vocabulary ...")
        train, val, test = datasets.Multi30k(language_pair=("de", "en"))
        vocab_tgt = build_vocab_from_iterator(
            yield_tokens(train + val + test, tokenize_en, index=1),
            min_freq=2,
            specials=["<s>", "</s>", "<blank>", "<unk>"],
        )

        vocab_src.set_default_index(vocab_src["<unk>"])
        vocab_tgt.set_default_index(vocab_tgt["<unk>"])

        return vocab_src, vocab_tgt


    def load_vocab(spacy_de, spacy_en):
        if not exists("vocab.pt"):
            vocab_src, vocab_tgt = build_vocabulary(spacy_de, spacy_en)
            torch.save((vocab_src, vocab_tgt), "vocab.pt")
        else:
            vocab_src, vocab_tgt = torch.load("vocab.pt")
        print("Finished.\nVocabulary sizes:")
        print(len(vocab_src))
        print(len(vocab_tgt))
        return vocab_src, vocab_tgt


    if is_interactive_notebook():
        # global variables used later in the script
        spacy_de, spacy_en = show_example(load_tokenizers)
        vocab_src, vocab_tgt = show_example(load_vocab, args=[spacy_de, spacy_en])

The output is as follows:

    Finished.
    Vocabulary sizes:
    59981
    36745

Batching matters a great deal for speed. We want very evenly divided batches, with absolutely minimal padding. To do this we have to hack a bit around the default torchtext batching. This code patches their default batching to make sure we search over enough sentences to find tight batches.

Iterators

    # Additional imports used by the data loaders and the training code.
    import GPUtil
    import torch.distributed as dist
    import torch.multiprocessing as mp
    from torch.nn.parallel import DistributedDataParallel as DDP
    from torch.utils.data import DataLoader
    from torch.utils.data.distributed import DistributedSampler
    from torchtext.data.functional import to_map_style_dataset


    def collate_batch(
        batch,
        src_pipeline,
        tgt_pipeline,
        src_vocab,
        tgt_vocab,
        device,
        max_padding=128,
        pad_id=2,
    ):
        bs_id = torch.tensor([0], device=device)  # <s> token id
        eos_id = torch.tensor([1], device=device)  # </s> token id
        src_list, tgt_list = [], []
        for (_src, _tgt) in batch:
            processed_src = torch.cat(
                [
                    bs_id,
                    torch.tensor(
                        src_vocab(src_pipeline(_src)),
                        dtype=torch.int64,
                        device=device,
                    ),
                    eos_id,
                ],
                0,
            )
            processed_tgt = torch.cat(
                [
                    bs_id,
                    torch.tensor(
                        tgt_vocab(tgt_pipeline(_tgt)),
                        dtype=torch.int64,
                        device=device,
                    ),
                    eos_id,
                ],
                0,
            )
            src_list.append(
                # warning - overwrites values for negative values of padding - len
                pad(
                    processed_src,
                    (
                        0,
                        max_padding - len(processed_src),
                    ),
                    value=pad_id,
                )
            )
            tgt_list.append(
                pad(
                    processed_tgt,
                    (0, max_padding - len(processed_tgt)),
                    value=pad_id,
                )
            )

        src = torch.stack(src_list)
        tgt = torch.stack(tgt_list)
        return (src, tgt)


    def create_dataloaders(
        device,
        vocab_src,
        vocab_tgt,
        spacy_de,
        spacy_en,
        batch_size=12000,
        max_padding=128,
        is_distributed=True,
    ):
        # def create_dataloaders(batch_size=12000):
        def tokenize_de(text):
            return tokenize(text, spacy_de)

        def tokenize_en(text):
            return tokenize(text, spacy_en)

        def collate_fn(batch):
            return collate_batch(
                batch,
                tokenize_de,
                tokenize_en,
                vocab_src,
                vocab_tgt,
                device,
                max_padding=max_padding,
                pad_id=vocab_src.get_stoi()["<blank>"],
            )

        train_iter, valid_iter, test_iter = datasets.Multi30k(
            language_pair=("de", "en")
        )

        train_iter_map = to_map_style_dataset(
            train_iter
        )  # DistributedSampler needs a dataset len()
        train_sampler = (
            DistributedSampler(train_iter_map) if is_distributed else None
        )
        valid_iter_map = to_map_style_dataset(valid_iter)
        valid_sampler = (
            DistributedSampler(valid_iter_map) if is_distributed else None
        )

        train_dataloader = DataLoader(
            train_iter_map,
            batch_size=batch_size,
            shuffle=(train_sampler is None),
            sampler=train_sampler,
            collate_fn=collate_fn,
        )
        valid_dataloader = DataLoader(
            valid_iter_map,
            batch_size=batch_size,
            shuffle=(valid_sampler is None),
            sampler=valid_sampler,
            collate_fn=collate_fn,
        )
        return train_dataloader, valid_dataloader

Training the System

    def train_worker(
        gpu,
        ngpus_per_node,
        vocab_src,
        vocab_tgt,
        spacy_de,
        spacy_en,
        config,
        is_distributed=False,
    ):
        print(f"Train worker process using GPU: {gpu} for training", flush=True)
        torch.cuda.set_device(gpu)

        pad_idx = vocab_tgt["<blank>"]
        d_model = 512
        model = make_model(len(vocab_src), len(vocab_tgt), N=6)
        model.cuda(gpu)
        module = model
        is_main_process = True
        if is_distributed:
            dist.init_process_group(
                "nccl", init_method="env://", rank=gpu, world_size=ngpus_per_node
            )
            model = DDP(model, device_ids=[gpu])
            module = model.module
            is_main_process = gpu == 0

        criterion = LabelSmoothing(
            size=len(vocab_tgt), padding_idx=pad_idx, smoothing=0.1
        )
        criterion.cuda(gpu)

        train_dataloader, valid_dataloader = create_dataloaders(
            gpu,
            vocab_src,
            vocab_tgt,
            spacy_de,
            spacy_en,
            batch_size=config["batch_size"] // ngpus_per_node,
            max_padding=config["max_padding"],
            is_distributed=is_distributed,
        )

        optimizer = torch.optim.Adam(
            model.parameters(), lr=config["base_lr"], betas=(0.9, 0.98), eps=1e-9
        )
        lr_scheduler = LambdaLR(
            optimizer=optimizer,
            lr_lambda=lambda step: rate(
                step, d_model, factor=1, warmup=config["warmup"]
            ),
        )
        train_state = TrainState()

        for epoch in range(config["num_epochs"]):
            if is_distributed:
                train_dataloader.sampler.set_epoch(epoch)
                valid_dataloader.sampler.set_epoch(epoch)

            model.train()
            print(f"[GPU{gpu}] Epoch {epoch} Training ====", flush=True)
            _, train_state = run_epoch(
                (Batch(b[0], b[1], pad_idx) for b in train_dataloader),
                model,
                SimpleLossCompute(module.generator, criterion),
                optimizer,
                lr_scheduler,
                mode="train+log",
                accum_iter=config["accum_iter"],
                train_state=train_state,
            )

            GPUtil.showUtilization()
            if is_main_process:
                file_path = "%s%.2d.pt" % (config["file_prefix"], epoch)
                torch.save(module.state_dict(), file_path)
            torch.cuda.empty_cache()

            print(f"[GPU{gpu}] Epoch {epoch} Validation ====", flush=True)
            model.eval()
            sloss = run_epoch(
                (Batch(b[0], b[1], pad_idx) for b in valid_dataloader),
                model,
                SimpleLossCompute(module.generator, criterion),
                DummyOptimizer(),
                DummyScheduler(),
                mode="eval",
            )
            print(sloss)
            torch.cuda.empty_cache()

        if is_main_process:
            file_path = "%sfinal.pt" % config["file_prefix"]
            torch.save(module.state_dict(), file_path)


    def train_distributed_model(vocab_src, vocab_tgt, spacy_de, spacy_en, config):
        from the_annotated_transformer import train_worker

        ngpus = torch.cuda.device_count()
        os.environ["MASTER_ADDR"] = "localhost"
        os.environ["MASTER_PORT"] = "12356"
        print(f"Number of GPUs detected: {ngpus}")
        print("Spawning training processes ...")
        mp.spawn(
            train_worker,
            nprocs=ngpus,
            args=(ngpus, vocab_src, vocab_tgt, spacy_de, spacy_en, config, True),
        )


    def train_model(vocab_src, vocab_tgt, spacy_de, spacy_en, config):
        if config["distributed"]:
            train_distributed_model(
                vocab_src, vocab_tgt, spacy_de, spacy_en, config
            )
        else:
            train_worker(
                0, 1, vocab_src, vocab_tgt, spacy_de, spacy_en, config, False
            )


    def load_trained_model():
        config = {
            "batch_size": 32,
            "distributed": False,
            "num_epochs": 8,
            "accum_iter": 10,
            "base_lr": 1.0,
            "max_padding": 72,
            "warmup": 3000,
            "file_prefix": "multi30k_model_",
        }
        model_path = "multi30k_model_final.pt"
        if not exists(model_path):
            train_model(vocab_src, vocab_tgt, spacy_de, spacy_en, config)

        model = make_model(len(vocab_src), len(vocab_tgt), N=6)
        model.load_state_dict(torch.load("multi30k_model_final.pt"))
        return model


    if is_interactive_notebook():
        model = load_trained_model()

Once trained, we can decode the model to produce a set of translations. Here we simply translate the first sentence in the validation set. This dataset is pretty small, so the translations with greedy search are reasonably accurate.

Additional Components: BPE, Search, Averaging

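This mostly covers the Transformer model itself. There are four aspects that we did not cover explicitly. All of these additional features are also implemented in OpenNMT-py.

1) BPE/Word-piece: We can use a library to first preprocess the data into subword units. See Rico Sennrich's subword-nmt implementation. These models will transform the training data to look like this:

▁Die ▁Protokoll datei ▁kann ▁ heimlich ▁per ▁E - Mail ▁oder ▁FTP ▁an ▁einen ▁bestimmte n ▁Empfänger ▁gesendet ▁werden .

2) Shared Embeddings: When using BPE with a shared vocabulary, we can share the same weight vectors between the source / target / generator. See the (cite) for details. To add this to the model simply do this:

    if False:
        # Tie the source embedding and the generator projection to the
        # target embedding (attribute names as defined in Part 1).
        model.src_embed[0].lut.weight = model.tgt_embed[0].lut.weight
        model.generator.proj.weight = model.tgt_embed[0].lut.weight

3) Beam Search: This is a bit too complicated to cover here. See OpenNMT-py for a PyTorch implementation.

4) Model Averaging: The paper averages the last k checkpoints to create an ensembling effect. We can do this after the fact if we have a bunch of models: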

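    def average(model, models):
        "Average models into model"
        with torch.no_grad():
            # Walk the parameters of the output model and the source models
            # in lockstep and overwrite each output parameter with the mean.
            for ps in zip(*[m.parameters() for m in [model] + models]):
                ps[0].copy_(torch.stack(ps[1:]).sum(0) / len(ps[1:]))

Results

On the WMT 2014 English-to-German translation task, the big Transformer model (Transformer (big) in Table 2) outperforms the best previously reported models (including ensembles) by more than 2.0 BLEU, establishing a new state-of-the-art BLEU score of 28.4. The configuration of this model is listed in the bottom line of Table 3. Training took 3.5 days on 8 P100 GPUs. Even our base model surpasses all previously published models and ensembles, at a fraction of the training cost of any of the competitive models.

On the WMT 2014 English-to-French translation task, our big model achieves a BLEU score of 41.0, outperforming all of the previously published single models, at less than 1/4 the training cost of the previous state-of-the-art model. The Transformer (big) model trained for English-to-French used dropout rate Pdrop = 0.1, instead of 0.3.

With the additional extensions in the last section, the OpenNMT-py replication gets to 26.9 on EN-DE WMT. Here I have loaded those parameters into our reimplementation.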

    def check_outputs(
        valid_dataloader,
        model,
        vocab_src,
        vocab_tgt,
        n_examples=15,
        pad_idx=2,
        eos_string="</s>",
    ):
        results = [()] * n_examples
        for idx in range(n_examples):
            print("\nExample %d ========\n" % idx)
            b = next(iter(valid_dataloader))
            rb = Batch(b[0], b[1], pad_idx)
            greedy_decode(model, rb.src, rb.src_mask, 64, 0)[0]

            src_tokens = [
                vocab_src.get_itos()[x] for x in rb.src[0] if x != pad_idx
            ]
            tgt_tokens = [
                vocab_tgt.get_itos()[x] for x in rb.tgt[0] if x != pad_idx
            ]

            print(
                "Source Text (Input) : "
                + " ".join(src_tokens).replace("\n", "")
            )
            print(
                "Target Text (Ground Truth) : "
                + " ".join(tgt_tokens).replace("\n", "")
            )
            model_out = greedy_decode(model, rb.src, rb.src_mask, 72, 0)[0]
            model_txt = (
                " ".join(
                    [vocab_tgt.get_itos()[x] for x in model_out if x != pad_idx]
                ).split(eos_string, 1)[0]
                + eos_string
            )
            print("Model Output : " + model_txt.replace("\n", ""))
            results[idx] = (rb, src_tokens, tgt_tokens, model_out, model_txt)
        return results


    def run_model_example(n_examples=5):
        global vocab_src, vocab_tgt, spacy_de, spacy_en

        print("Preparing Data ...")
        _, valid_dataloader = create_dataloaders(
            torch.device("cpu"),
            vocab_src,
            vocab_tgt,
            spacy_de,
            spacy_en,
            batch_size=1,
            is_distributed=False,
        )

        print("Loading Trained Model ...")

        model = make_model(len(vocab_src), len(vocab_tgt), N=6)
        model.load_state_dict(
            torch.load("multi30k_model_final.pt", map_location=torch.device("cpu"))
        )

        print("Checking Model Outputs:")
        example_data = check_outputs(
            valid_dataloader, model, vocab_src, vocab_tgt, n_examples=n_examples
        )
        return model, example_data


    # execute_example(run_model_example)

Attention Visualization

Even with a greedy decoder the translation looks pretty good. We can further visualize it to see what is happening at each layer of the attention.

    def mtx2df(m, max_row, max_col, row_tokens, col_tokens):
        "convert a dense matrix to a data frame with row and column indices"
        return pd.DataFrame(
            [
                (
                    r,
                    c,
                    float(m[r, c]),
                    "%.3d %s"
                    % (r, row_tokens[r] if len(row_tokens) > r else "<blank>"),
                    "%.3d %s"
                    % (c, col_tokens[c] if len(col_tokens) > c else "<blank>"),
                )
                for r in range(m.shape[0])
                for c in range(m.shape[1])
                if r < max_row and c < max_col
            ],
            # if float(m[r,c]) != 0 and r < max_row and c < max_col],
            columns=["row", "column", "value", "row_token", "col_token"],
        )


    def attn_map(attn, layer, head, row_tokens, col_tokens, max_dim=30):
        df = mtx2df(
            attn[0, head].data,
            max_dim,
            max_dim,
            row_tokens,
            col_tokens,
        )
        return (
            alt.Chart(data=df)
            .mark_rect()
            .encode(
                x=alt.X("col_token", axis=alt.Axis(title="")),
                y=alt.Y("row_token", axis=alt.Axis(title="")),
                color="value",
                tooltip=["row", "column", "value", "row_token", "col_token"],
            )
            .properties(height=400, width=400)
            .interactive()
        )


    def get_encoder(model, layer):
        return model.encoder.layers[layer].self_attn.attn


    def get_decoder_self(model, layer):
        return model.decoder.layers[layer].self_attn.attn


    def get_decoder_src(model, layer):
        return model.decoder.layers[layer].src_attn.attn


    def visualize_layer(model, layer, getter_fn, ntokens, row_tokens, col_tokens):
        # ntokens = last_example[0].ntokens
        attn = getter_fn(model, layer)
        n_heads = attn.shape[1]
        charts = [
            attn_map(
                attn,
                0,
                h,
                row_tokens=row_tokens,
                col_tokens=col_tokens,
                max_dim=ntokens,
            )
            for h in range(n_heads)
        ]
        assert n_heads == 8
        return alt.vconcat(
            charts[0]
            # | charts[1]
            | charts[2]
            # | charts[3]
            | charts[4]
            # | charts[5]
            | charts[6]
            # | charts[7]
            # layer + 1 due to 0-indexing
        ).properties(title="Layer %d" % (layer + 1))

Encoder Self Attention

    def viz_encoder_self():
        model, example_data = run_model_example(n_examples=1)
        example = example_data[
            len(example_data) - 1
        ]  # batch object for the final example

        layer_viz = [
            visualize_layer(
                model, layer, get_encoder, len(example[1]), example[1], example[1]
            )
            for layer in range(6)
        ]
        return alt.hconcat(
            layer_viz[0]
            # & layer_viz[1]
            & layer_viz[2]
            # & layer_viz[3]
            & layer_viz[4]
            # & layer_viz[5]
        )


    show_example(viz_encoder_self)

The output is as follows:

    Preparing Data ...
    Loading Trained Model ...
    Checking Model Outputs:

    Example 0 ========

    Source Text (Input) : <s> Zwei Frauen in pinkfarbenen T-Shirts und <unk> unterhalten sich vor einem <unk> . </s>
    Target Text (Ground Truth) : <s> Two women wearing pink T - shirts and blue jeans converse outside clothing store . </s>
    Model Output : <s> Two women in pink shirts and face are talking in front of a <unk> . </s>

Decoder Self Attention

    def viz_decoder_self():
        model, example_data = run_model_example(n_examples=1)
        example = example_data[len(example_data) - 1]

        layer_viz = [
            visualize_layer(
                model,
                layer,
                get_decoder_self,
                len(example[1]),
                example[1],
                example[1],
            )
            for layer in range(6)
        ]
        return alt.hconcat(
            layer_viz[0]
            & layer_viz[1]
            & layer_viz[2]
            & layer_viz[3]
            & layer_viz[4]
            & layer_viz[5]
        )


    show_example(viz_decoder_self)

The output is as follows:

    Preparing Data ...
    Loading Trained Model ...
    Checking Model Outputs:

    Example 0 ========

    Source Text (Input) : <s> Eine Gruppe von Männern in Kostümen spielt Musik . </s>
    Target Text (Ground Truth) : <s> A group of men in costume play music . </s>
    Model Output : <s> A group of men in costumes playing music . </s>

Decoder Src Attention

    def viz_decoder_src():
        model, example_data = run_model_example(n_examples=1)
        example = example_data[len(example_data) - 1]

        layer_viz = [
            visualize_layer(
                model,
                layer,
                get_decoder_src,
                max(len(example[1]), len(example[2])),
                example[1],
                example[2],
            )
            for layer in range(6)
        ]
        return alt.hconcat(
            layer_viz[0]
            & layer_viz[1]
            & layer_viz[2]
            & layer_viz[3]
            & layer_viz[4]
            & layer_viz[5]
        )


    show_example(viz_decoder_src)

The output is as follows:

    Preparing Data ...
    Loading Trained Model ...
    Checking Model Outputs:

    Example 0 ========

    Source Text (Input) : <s> Ein kleiner Junge verwendet einen Bohrer , um ein Loch in ein Holzstück zu machen . </s>
    Target Text (Ground Truth) : <s> A little boy using a drill to make a hole in a piece of wood . </s>
    Model Output : <s> A little boy uses a machine to be working in a hole in a log . </s>