This article covers Microservice Wheel Project (13): Unified Log Center Explained (Docker Installation and Deployment).

Contents

- 1. Overview

- 1.1 Related links

- 1.2 Component roles

- 2. Docker installation and deployment

1. Overview

1.1 Related links

- Official website

- ELK image repository

1.2 Component roles

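- filebeat: deployed on every application server, database and middleware host; collects the logs and aggregates them before shipping

- Log aggregation (done by filebeat): merging a multi-line log entry, such as an exception stack trace, into a single event

- logstash: structures the log messages with various filters and converts fields to the appropriate types

- elasticsearch: stores and queries the log data

- kibana: displays the logs in a web UI and can also build pie charts, bar charts and other visualizations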
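The Logstash filter and output in steps 8 and 9 branch on `[fields][docType]`. That value, and the multi-line aggregation described above, both come from Filebeat.

The sketch below generates a minimal Filebeat 6.x `filebeat.yml`. It assumes the hypothetical log path `/var/log/myapp/*.log` and sys-log lines that always start with `[`, as the grok pattern in step 8 expects. `write_filebeat_config` is a hypothetical helper name.

```shell
#!/bin/sh
# Write a minimal Filebeat 6.x config (hypothetical log path) that:
#  - tags every event with fields.docType, which Logstash sees as [fields][docType]
#  - appends lines that do NOT start with "[" to the previous event
#    (e.g. the lines of a Java exception stack trace)
#  - ships events to the Logstash beats input on port 5044
write_filebeat_config() {
  out="$1"            # where to write filebeat.yml
  logstash_host="$2"  # host running the elk container
  cat > "$out" <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/myapp/*.log
  fields:
    docType: sys-log
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: after

output.logstash:
  hosts: ["${logstash_host}:5044"]
EOF
}

# Example: write_filebeat_config /etc/filebeat/filebeat.yml 192.168.28.130
```

With `fields_under_root` left at its default (false), the custom field arrives in Logstash as `[fields][docType]`. Point logs would need a second `- type: log` input with `docType: point-log`.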

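2. Docker installation and deployment

step1: on the Docker host, set the mmap count limit (vm.max_map_count) to at least 262144, the minimum Elasticsearch requires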

# Append this line to the end of /etc/sysctl.conf

vm.max_map_count=655360

# Then apply it

sysctl -p
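Running the append step twice leaves a duplicate line in /etc/sysctl.conf. The sketch below makes step 1 idempotent: it checks the value against the 262144 minimum and replaces an existing entry instead of appending.

`map_count_ok` and `set_map_count` are hypothetical helper names. The code assumes GNU sed for `-i`.

```shell
#!/bin/sh
# Minimum that Elasticsearch's bootstrap check asks for
REQUIRED_MAP_COUNT=262144

# Succeeds (exit 0) when the given value meets the minimum
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Set vm.max_map_count in a sysctl file: replace an existing entry,
# otherwise append one (needs GNU sed for -i)
set_map_count() {
  file="$1"
  value="$2"
  if grep -q '^vm\.max_map_count[[:space:]]*=' "$file" 2>/dev/null; then
    sed -i "s/^vm\.max_map_count[[:space:]]*=.*/vm.max_map_count=${value}/" "$file"
  else
    echo "vm.max_map_count=${value}" >> "$file"
  fi
}
```

On the Docker host, as root, run:

`map_count_ok "$(cat /proc/sys/vm/max_map_count)" || { set_map_count /etc/sysctl.conf 655360; sysctl -p; }`

This only touches the file when the running value is too low.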

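step2: pull and run the image (this first container is only used to copy the default configuration files out in steps 3 and 5; the script in step10 removes it and starts a new one)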

docker run -p 5601:5601 -p 9200:9200 -p 9300:9300 -p 5044:5044 --name elk -d sebp/elk:651

step3: prepare the Elasticsearch configuration file

mkdir -p /opt/elk/elasticsearch/conf

# Copy the Elasticsearch configuration out of the container

docker cp elk:/etc/elasticsearch/elasticsearch.yml /opt/elk/elasticsearch/conf

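step4: edit /opt/elk/elasticsearch/conf/elasticsearch.yml (the copy made in step3)

Change the cluster.name parameter:

cluster.name: my-es

Append the following three parameters at the end: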

thread_pool.bulk.queue_size: 1000

http.cors.enabled: true

http.cors.allow-origin: "*"
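The four edits above can also be scripted, so that re-running the setup does not leave duplicate keys.

`set_yml_key` is a hypothetical helper. It only understands flat `key: value` lines, and values containing `|` or `&` would break its sed expression. It assumes GNU sed for `-i`.

```shell
#!/bin/sh
# Set "key: value" in a flat YAML file such as elasticsearch.yml:
# an existing line for the key (commented out or not) is replaced,
# otherwise the pair is appended. Nested YAML is not handled.
set_yml_key() {
  file="$1"
  key="$2"
  value="$3"
  # Escape the dots in the key so they match literally
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  pattern="^#*[[:space:]]*${key_re}:"
  if grep -q "$pattern" "$file"; then
    sed -i "s|${pattern}.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage for step4:
# conf=/opt/elk/elasticsearch/conf/elasticsearch.yml
# set_yml_key "$conf" cluster.name my-es
# set_yml_key "$conf" thread_pool.bulk.queue_size 1000
# set_yml_key "$conf" http.cors.enabled true
# set_yml_key "$conf" http.cors.allow-origin '"*"'
```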

step5: prepare the Logstash configuration files

mkdir -p /opt/elk/logstash/conf

# Copy the Logstash configuration out of the container

docker cp elk:/etc/logstash/conf.d/. /opt/elk/logstash/conf/

step6: prepare the Logstash patterns file

Create a patterns file named java:

mkdir -p /opt/elk/logstash/patterns

vim /opt/elk/logstash/patterns/java

Content:

# user-center

MYAPPNAME ([0-9a-zA-Z_-]*)

# RMI TCP Connection(2)-127.0.0.1

MYTHREADNAME ([0-9a-zA-Z._-]|\(|\)|\s)*

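The file is simply named java, with no file extension. The comment above each pattern shows a sample value it should match: MYAPPNAME for names such as user-center, MYTHREADNAME for thread names such as RMI TCP Connection(2)-127.0.0.1.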
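A quick way to check that the two custom patterns accept the sample values in their comments is to try them with `grep -E`.

This is only an approximation. Grok itself uses Oniguruma regexes, so `\s` is rewritten here as the POSIX class `[[:space:]]`. `full_match` is a hypothetical helper name.

```shell
#!/bin/sh
# ERE equivalents of the custom grok patterns in the "java" patterns file
MYAPPNAME='[0-9a-zA-Z_-]*'
MYTHREADNAME='([0-9a-zA-Z._-]|\(|\)|[[:space:]])*'

# Succeeds when the whole string (arg 2) matches the pattern (arg 1)
full_match() {
  printf '%s\n' "$2" | grep -Eqx "$1"
}

# full_match "$MYAPPNAME" user-center && echo ok
# full_match "$MYTHREADNAME" 'RMI TCP Connection(2)-127.0.0.1' && echo ok
```

Because MYAPPNAME ends in `*`, it also matches an empty string. This lets the sys-log grok pattern in step8 accept lines whose trace id is empty (`[]`).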

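step7: remove the last three settings of 02-beats-input.conf (commenting them out, as below, works too) so that Beats clients are no longer forced to use SSL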

vim /opt/elk/logstash/conf/02-beats-input.conf

#ssl => true

#ssl_certificate => "/pki/tls/certs/logstash.crt"

#ssl_key => "/pki/tls/private/logstash.key"
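The same change can be made without an editor.

The sketch below assumes GNU sed. It comments out only settings named exactly `ssl`, `ssl_certificate` or `ssl_key`, and running it twice changes nothing further. `disable_beats_ssl` is a hypothetical helper name.

```shell
#!/bin/sh
# Comment out the ssl, ssl_certificate and ssl_key settings of a beats input,
# keeping the original indentation (needs GNU sed for -i and -E)
disable_beats_ssl() {
  sed -i -E 's/^([[:space:]]*)(ssl(_certificate|_key)?[[:space:]]*=>)/\1#\2/' "$1"
}

# disable_beats_ssl /opt/elk/logstash/conf/02-beats-input.conf
```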

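step8: replace the contents of 10-syslog.conf with the following

- Note: the Logstash parsing rules below are written for this project's own log format and are not general-purpose.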

filter {
  if [type] == "syslog" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
      add_field => [ "received_at", "%{@timestamp}" ]
      add_field => [ "received_from", "%{host}" ]
    }
    syslog_pri { }
    date {
      match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
  if [fields][docType] == "sys-log" {
    grok {
      # Path inside the container: start.sh mounts /opt/elk/logstash/patterns at /opt/logstash/patterns
      patterns_dir => ["/opt/logstash/patterns"]
      match => { "message" => "\[%{NOTSPACE:appName}:%{NOTSPACE:serverIp}:%{NOTSPACE:serverPort}\] %{TIMESTAMP_ISO8601:logTime} %{LOGLEVEL:logLevel} %{WORD:pid} \[%{MYAPPNAME:traceId}\] \[%{MYTHREADNAME:threadName}\] %{NOTSPACE:classname} %{GREEDYDATA:message}" }
      overwrite => ["message"]
    }
    date {
      match => ["logTime", "yyyy-MM-dd HH:mm:ss.SSS Z"]
    }
    date {
      match => ["logTime", "yyyy-MM-dd HH:mm:ss.SSS"]
      target => "timestamp"
      locale => "en"
      timezone => "+08:00"
    }
    mutate {
      remove_field => ["logTime", "@version", "host", "offset"]
    }
  }
  if [fields][docType] == "point-log" {
    grok {
      patterns_dir => ["/opt/logstash/patterns"]
      match => { "message" => "%{TIMESTAMP_ISO8601:logTime}\|%{MYAPPNAME:appName}\|%{WORD:resouceid}\|%{MYAPPNAME:type}\|%{GREEDYDATA:object}" }
    }
    kv {
      source => "object"
      field_split => "&"
      value_split => "="
    }
    date {
      match => ["logTime", "yyyy-MM-dd HH:mm:ss.SSS Z"]
    }
    date {
      match => ["logTime", "yyyy-MM-dd HH:mm:ss.SSS"]
      target => "timestamp"
      locale => "en"
      timezone => "+08:00"
    }
    mutate {
      remove_field => ["logTime", "@version", "host", "offset"]
    }
  }
}
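Before shipping logs, it helps to confirm that the application's lines have the shape the sys-log grok pattern expects.

The sketch below approximates that pattern with a POSIX extended regex in sed. It makes several assumptions:
- TIMESTAMP_ISO8601, LOGLEVEL and WORD are simplified, and the real grok definitions are broader.
- The serverIp and serverPort parts are matched but not printed.
- The sample lines in the test are made up.

`parse_sys_log` is a hypothetical helper name.

```shell
#!/bin/sh
# Approximate the sys-log grok pattern with an ERE and print the captured
# fields separated by "|". Prints nothing for lines that do not match.
parse_sys_log() {
  printf '%s\n' "$1" | sed -n -E 's/^\[([^[:space:]]+):[^[:space:]]+:[^[:space:]]+] ([0-9]{4}-[0-9]{2}-[0-9]{2}[ T][0-9:.,]+) ([A-Z]+) ([[:alnum:]_]+) \[([0-9a-zA-Z_-]*)] \[([0-9a-zA-Z._()[:space:]-]*)] ([^[:space:]]+) (.*)$/appName=\1|logTime=\2|logLevel=\3|pid=\4|traceId=\5|threadName=\6|classname=\7|message=\8/p'
}

# parse_sys_log '[user-center:192.168.28.130:7000] 2019-06-12 14:58:01.123 INFO 12345 [abc123] [main] com.central.UserService user logged in'
```

A line this check rejects is worth looking at before blaming Logstash. However, only Logstash's own grok filter is authoritative: its `_grokparsefailure` tag marks events it could not parse.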

step9: replace the contents of 30-output.conf with the following

output {
  if [fields][docType] == "sys-log" {
    elasticsearch {
      hosts => ["localhost"]
      manage_template => false
      index => "sys-log-%{+YYYY.MM.dd}"
      document_type => "%{[@metadata][type]}"
    }
  }
  if [fields][docType] == "point-log" {
    elasticsearch {
      hosts => ["localhost"]
      manage_template => false
      index => "point-log-%{+YYYY.MM.dd}"
      document_type => "%{[@metadata][type]}"
      routing => "%{type}"
    }
  }
}
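The `%{+YYYY.MM.dd}` part of the index name is formatted from the event's `@timestamp`, which Logstash keeps in UTC. As a result, daily indices roll over at 00:00 UTC, which is 08:00 in the +08:00 timezone used in step8.

The sketch below computes which index an event at a given local time lands in. It assumes GNU `date` for `-d`, and `index_for` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the daily index name (PREFIX-YYYY.MM.dd, in UTC) for an event
# whose @timestamp is DATETIME (any format GNU date -d understands)
index_for() {
  date -u -d "$2" +"$1-%Y.%m.%d"
}

# index_for sys-log '2019-06-12 07:30:00 +0800'   # 23:30 UTC on the previous day
```

So a search for "today's" sys-log index made before 08:00 local time should also look at the previous day's index.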

step10: create the startup script

vim /opt/elk/start.sh

#!/bin/sh
# The -v paths below are relative to $PWD, so always run from this script's directory (/opt/elk)
cd "$(dirname "$0")" || exit 1

# Remove the container from step2 (or from a previous run)
docker stop elk
docker rm elk

docker run -p 5601:5601 -p 9200:9200 -p 9300:9300 -p 5044:5044 \
  -e LS_HEAP_SIZE="1g" -e ES_JAVA_OPTS="-Xms2g -Xmx2g" \
  -v "$PWD/elasticsearch/data:/var/lib/elasticsearch" \
  -v "$PWD/elasticsearch/plugins:/opt/elasticsearch/plugins" \
  -v "$PWD/logstash/conf:/etc/logstash/conf.d" \
  -v "$PWD/logstash/patterns:/opt/logstash/patterns" \
  -v "$PWD/elasticsearch/conf/elasticsearch.yml:/etc/elasticsearch/elasticsearch.yml" \
  -v "$PWD/elasticsearch/log:/var/log/elasticsearch" \
  -v "$PWD/logstash/log:/var/log/logstash" \
  --name elk \
  -d sebp/elk:651

step11: start the container

sh start.sh
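Elasticsearch and Kibana take a while to come up after `sh start.sh` returns, and requests sent too early simply fail. The sketch below polls an HTTP endpoint until it answers.

It assumes `curl` is installed. With `-f`, curl treats HTTP 400 and above as failure, so an error page returned while Kibana is still starting also counts as "not ready". `wait_for_http` is a hypothetical helper name.

```shell
#!/bin/sh
# Poll URL until it answers with an HTTP status below 400, trying at most
# ATTEMPTS times with DELAY seconds in between. Returns 0 on success.
wait_for_http() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-5}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example with this article's host: Elasticsearch first, then Kibana
# wait_for_http http://192.168.28.130:9200 60 5 && echo "Elasticsearch is up"
# wait_for_http http://192.168.28.130:5601 60 5 && echo "Kibana is up"
```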

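step12: add index templates (optional)

On a single-node Elasticsearch cluster, set the replica count to 0. A replica is never allocated on the same node as its primary shard, so on a single node the replicas stay unassigned (unassigned_shards) and the cluster health stays yellow.

1. Update existing indices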

curl -X PUT "http://192.168.28.130:9200/sys-log-*/_settings" -H 'Content-Type: application/json' -d'

{"index" : {"number_of_replicas" : 0}

}

'

curl -X PUT "http://192.168.28.130:9200/mysql-slowlog-*/_settings" -H 'Content-Type: application/json' -d'

{"index" : {"number_of_replicas" : 0}

}'

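2. Set index templates

Templates only apply to indices created after them, which is why existing indices are updated separately above. The sys-log and slow-SQL templates use the ik_max_word analyzer, which is provided by the IK plugin, so install IK (step13) before creating them.

System logs: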

curl -XPUT http://192.168.28.130:9200/_template/template_sys_log -H 'Content-Type: application/json' -d '

{
  "index_patterns": ["sys-log-*"],
  "order": 0,
  "settings": {
    "number_of_replicas": 0
  },
  "mappings": {
    "doc": {
      "properties": {
        "message": {
          "type": "text",
          "fields": {
            "keyword": { "type": "keyword", "ignore_above": 256 }
          },
          "analyzer": "ik_max_word"
        },
        "pid": { "type": "text" },
        "serverPort": { "type": "text" },
        "logLevel": { "type": "text" },
        "traceId": { "type": "text" }
      }
    }
  }
}'

Slow SQL logs:

curl -XPUT http://192.168.28.130:9200/_template/template_sql_slowlog -H 'Content-Type: application/json' -d '

{
  "index_patterns": ["mysql-slowlog-*"],
  "order": 0,
  "settings": {
    "number_of_replicas": 0
  },
  "mappings": {
    "doc": {
      "properties": {
        "query_str": {
          "type": "text",
          "fields": {
            "keyword": { "type": "keyword", "ignore_above": 256 }
          },
          "analyzer": "ik_max_word"
        }
      }
    }
  }
}'

Event-tracking (point) logs:

curl -XPUT http://192.168.28.130:9200/_template/template_point_log -H 'Content-Type: application/json' -d '

{"index_patterns" : ["point-log-*"],"order" : 0,"settings" : {"number_of_shards" : 2,"number_of_replicas" : 0}

}'
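After the replicas are set to 0, `GET /_cluster/health` should show `unassigned_shards` dropping and the status moving from yellow towards green, as long as no other index still asks for replicas.

The sketch below is a sed-only field reader for that single-line JSON response, for hosts without `jq` (with `jq`, `jq -r .status` does the same). `json_field` is a hypothetical helper. It handles only top-level, non-empty scalar fields.

```shell
#!/bin/sh
# Print one top-level scalar field from a single-line JSON object on stdin,
# e.g. the output of GET /_cluster/health. Nested objects are not supported.
json_field() {
  sed -n -E "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"?([^\",}]+)\"?.*/\1/p"
}

# curl -s http://192.168.28.130:9200/_cluster/health | json_field status
# curl -s http://192.168.28.130:9200/_cluster/health | json_field unassigned_shards
```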

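step13: install the IK analyzer

By default, queries use Elasticsearch's standard analyzer, which handles Chinese poorly. It splits Chinese text fields into individual characters and splits the search phrase the same way at query time. A smarter Chinese analyzer, IK, is therefore needed.

1. Download

- Download page: https://github.com/medcl/elasticsearch-analysis-ik/releases

- Download the IK release whose version matches your Elasticsearch version

2. Unzip

Unzip the archive into plugins/ik under the Elasticsearch installation directory. With the volume mapping in start.sh, that is /opt/elk/elasticsearch/plugins/ik on the host. The result looks like this: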

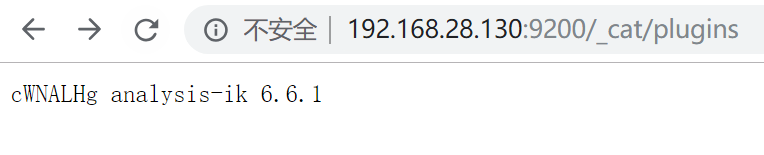

3. Restart the container (sh start.sh) and check that the plugin is installed:

http://192.168.28.130:9200/_cat/plugins
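The download and the check can be scripted as well. `ik_release_url` builds the archive URL following the `releases/download/v<version>/elasticsearch-analysis-ik-<version>.zip` pattern shown in the IK project's README. It assumes tag 651 of sebp/elk means Elasticsearch 6.5.1, so verify both against the releases page.

`has_plugin` reads the plain `_cat/plugins` output, which has one line per node and plugin: node name, component, version. Both helper names are hypothetical.

```shell
#!/bin/sh
# Release archive URL for the IK plugin matching an Elasticsearch version
ik_release_url() {
  echo "https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v$1/elasticsearch-analysis-ik-$1.zip"
}

# Succeeds when _cat/plugins output on stdin lists the named component
has_plugin() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Example (assumes Elasticsearch 6.5.1 and the start.sh volume layout):
# wget "$(ik_release_url 6.5.1)"
# unzip elasticsearch-analysis-ik-6.5.1.zip -d /opt/elk/elasticsearch/plugins/ik
# cd /opt/elk && sh start.sh
# curl -s http://192.168.28.130:9200/_cat/plugins | has_plugin analysis-ik && echo "IK installed"
```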

step14: sample configuration files

Link: https://pan.baidu.com/s/1Qq3ywAbXMMRYyYxBAViMag

Extraction code: aubp
