This article introduces Redis 6 cluster mode.

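Redis Cluster scales Redis horizontally: you start N Redis nodes and the whole dataset is distributed across them, so each master holds roughly 1/N of the data.

Redis Cluster provides a degree of availability through partitioning: even if some nodes fail or cannot communicate, the cluster can keep processing commands.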
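The distribution is done with hash slots: the key space is divided into 16384 slots and every master owns a range of them (the slot ranges appear in the redis-cli output later in this article). The sketch below follows the mapping described in the Redis Cluster specification, CRC16 (XMODEM variant) of the key modulo 16384, including the {...} hash-tag rule. The class and method names are hypothetical; this is an illustration, not Redis source code.

```java
import java.nio.charset.StandardCharsets;

// Sketch of the Redis Cluster key -> hash slot mapping (CRC16/XMODEM mod 16384).
class HashSlot {
    static final int SLOT_COUNT = 16384;

    // Bitwise CRC16, XMODEM variant: polynomial 0x1021, initial value 0, no reflection.
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;
            for (int i = 0; i < 8; i++) {
                crc = ((crc & 0x8000) != 0) ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF; // keep only 16 bits
            }
        }
        return crc;
    }

    // If the key contains a non-empty {...} hash tag, only the tag is hashed,
    // so {user1000}.following and {user1000}.followers land in the same slot.
    static int keySlot(String key) {
        String hashed = key;
        int open = key.indexOf('{');
        if (open >= 0) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) {
                hashed = key.substring(open + 1, close);
            }
        }
        return crc16(hashed.getBytes(StandardCharsets.UTF_8)) & (SLOT_COUNT - 1);
    }

    public static void main(String[] args) {
        for (String key : new String[] {"foo", "hello", "{user1000}.following", "{user1000}.followers"}) {
            System.out.println(key + " -> slot " + keySlot(key));
        }
    }
}
```

The result can be cross-checked against a running cluster with the CLUSTER KEYSLOT command in redis-cli.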

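Configuring cluster mode

cluster-enabled yes: enables cluster mode.

cluster-config-file nodes-6379.conf: sets the name of the node configuration file (Redis creates and updates this file itself).

cluster-node-timeout 15000: the node timeout in milliseconds; if a master stays unreachable for longer than this, the cluster automatically fails over to one of its replicas.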
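The failover is driven by failure detection. The sketch below is a deliberately simplified model of the two thresholds the Redis Cluster specification describes: a node is flagged PFAIL (possible failure) when a ping has gone unanswered for longer than cluster-node-timeout, and PFAIL becomes FAIL once a majority of masters report it. The names are hypothetical, and real Redis additionally gossips these reports and expires stale ones.

```java
import java.util.Set;

// Simplified, hypothetical model of how cluster-node-timeout feeds failure detection.
class FailureDetector {
    // Locally suspected failure: a ping has been outstanding for longer than the node timeout.
    static boolean isPFail(long pingSentMillis, long nowMillis, long nodeTimeoutMillis) {
        // pingSentMillis == 0 means no ping is currently waiting for a reply
        return pingSentMillis > 0 && nowMillis - pingSentMillis > nodeTimeoutMillis;
    }

    // Cluster-wide failure: a majority of masters report the node as failing.
    // Only after this can a replica of the failed master be promoted.
    static boolean isFail(Set<String> mastersReportingPFail, int totalMasters) {
        int quorum = totalMasters / 2 + 1;
        return mastersReportingPFail.size() >= quorum;
    }

    public static void main(String[] args) {
        long timeout = 15_000; // cluster-node-timeout 15000
        long pingSent = 1_000;
        System.out.println("PFAIL after 10s: " + isPFail(pingSent, pingSent + 10_000, timeout));
        System.out.println("PFAIL after 16s: " + isPFail(pingSent, pingSent + 16_000, timeout));
        System.out.println("FAIL with 2 of 3 masters reporting: " + isFail(Set.of("6380", "6381"), 3));
    }
}
```

This is also why a cluster needs at least three masters: with only one or two, no majority survives the loss of a master.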

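Node setup: a cluster needs at least three masters, so six instances are used here, three masters and three replicas (in this walkthrough all six run on one machine, on ports 6379 to 6384).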

include /usr/local/redis/bin/redis.conf

port 6379

pidfile "/var/run/redis_6379.pid"

dbfilename "dump6379.rdb"

dir "/r"

logfile ".log"

cluster-enabled yes

cluster-config-file nodes-6379.conf

cluster-node-timeout 15000

After editing redis6379.conf, make a copy of the configuration for each of the other instances:

```text
# 6379.conf
include ok.conf
port 6379
pidfile "/var/run/redis_6379.pid"
logfile "6379.log"
dbfilename "6379.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6379.conf

# 6380.conf
include ok.conf
port 6380
pidfile "/var/run/redis_6380.pid"
logfile "6380.log"
dbfilename "6380.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6380.conf

# 6381.conf
include ok.conf
port 6381
pidfile "/var/run/redis_6381.pid"
logfile "6381.log"
dbfilename "6381.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6381.conf

# 6382.conf
include ok.conf
port 6382
pidfile "/var/run/redis_6382.pid"
logfile "6382.log"
dbfilename "6382.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6382.conf

# 6383.conf
include ok.conf
port 6383
pidfile "/var/run/redis_6383.pid"
logfile "6383.log"
dbfilename "6383.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6383.conf

# 6384.conf
include ok.conf
port 6384
pidfile "/var/run/redis_6384.pid"
logfile "6384.log"
dbfilename "6384.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6384.conf

# Create the cluster: 3 masters with 1 replica each; -a passes the requirepass password

sudo ./redis-cli --cluster create 192.168.16.20:6379 192.168.16.20:6380 192.168.16.20:6381 192.168.16.20:6382 192.168.16.20:6383 192.168.16.20:6384 --cluster-replicas 1 -a root

```
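Since the six files differ only in the port number, they can also be generated rather than copied and edited one by one in vim as in the session that follows. The helper below is a hypothetical sketch that renders exactly the directives shown above for a given port; the class name and the choice of output directory are assumptions.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical generator for the per-port cluster configs shown above.
class ClusterConfGenerator {
    static String render(int port, String password) {
        return String.join("\n",
                "include ok.conf",
                "port " + port,
                "pidfile \"/var/run/redis_" + port + ".pid\"",
                "logfile \"" + port + ".log\"",
                "dbfilename \"" + port + ".rdb\"",
                "requirepass " + password,
                "masterauth " + password,
                "cluster-enabled yes",
                "cluster-node-timeout 15000",
                "cluster-config-file node_" + port + ".conf") + "\n";
    }

    public static void main(String[] args) throws IOException {
        for (int port = 6379; port <= 6384; port++) {
            String conf = render(port, "root");
            if (args.length > 0) {
                // Write <port>.conf into the directory given as the first argument
                Files.writeString(Path.of(args[0], port + ".conf"), conf);
            } else {
                // No directory given: just print the files
                System.out.print("# " + port + ".conf\n" + conf + "\n");
            }
        }
    }
}
```

Printing by default avoids silently overwriting configs that already exist in the Redis directory.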

webrx@us:/usr/local/redis/bin$ ps -ef | grep redis

root 1773 1 0 10:55 ? 00:00:01 ./redis-server 0.0.0.0:6379 [cluster]

root 1782 1 0 10:55 ? 00:00:01 ./redis-server 0.0.0.0:6380 [cluster]

root 1791 1 0 10:55 ? 00:00:00 ./redis-server 0.0.0.0:6381 [cluster]

root 1798 1 0 10:55 ? 00:00:01 ./redis-server 0.0.0.0:6382 [cluster]

root 1809 1 0 10:55 ? 00:00:00 ./redis-server 0.0.0.0:6383 [cluster]

root 1816 1 0 10:55 ? 00:00:01 ./redis-server 0.0.0.0:6384 [cluster]

webrx 2042 1178 0 11:03 pts/0 00:00:00 grep --color=auto redis

webrx@us:/usr/local/redis/bin$ sudo kill -9 1773 1782 1791 1798 1809 1816

webrx@us:/usr/local/redis/bin$ ps -ef | grep redis

webrx 2054 1178 0 11:03 pts/0 00:00:00 grep --color=auto redis

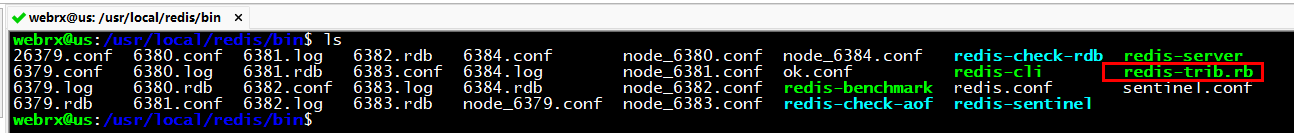

webrx@us:/usr/local/redis/bin$ ls

26379.conf 6379.rdb 6380.rdb 6381.rdb 6382.rdb 6383.rdb 6384.rdb redis-check-aof redis.conf redis-trib.rb

6379.conf 6380.conf 6381.conf 6382.conf 6383.conf 6384.conf ok.conf redis-check-rdb redis-sentinel sentinel.conf

6379.log 6380.log 6381.log 6382.log 6383.log 6384.log redis-benchmark redis-cli redis-server

webrx@us:/usr/local/redis/bin$ sudo rm *.log *.rdb

webrx@us:/usr/local/redis/bin$ ls

26379.conf 6380.conf 6382.conf 6384.conf redis-benchmark redis-check-rdb redis.conf redis-server sentinel.conf

6379.conf 6381.conf 6383.conf ok.conf redis-check-aof redis-cli redis-sentinel redis-trib.rb

webrx@us:/usr/local/redis/bin$ sudo vim 6379.conf

webrx@us:/usr/local/redis/bin$ sudo vim 6380.conf

webrx@us:/usr/local/redis/bin$ sudo vim 6381.conf

webrx@us:/usr/local/redis/bin$ sudo vim 6382.conf

webrx@us:/usr/local/redis/bin$ sudo vim 6383.conf

webrx@us:/usr/local/redis/bin$ sudo vim 6384.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-server 6379.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-server 6380.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-server 6381.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-server 6382.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-server 6383.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-server 6384.conf

webrx@us:/usr/local/redis/bin$ sudo ./redis-cli --cluster create 192.168.16.20:6379 192.168.16.20:6380 192.168.16.20:6381 192.168.16.20:6382 192.168.16.20:6383 192.168.16.20:6384 --cluster-replicas 1 -a root

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.16.20:6383 to 192.168.16.20:6379

Adding replica 192.168.16.20:6384 to 192.168.16.20:6380

Adding replica 192.168.16.20:6382 to 192.168.16.20:6381

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: fdf680da97343309b5a15ea9670b05588183335b 192.168.16.20:6379
   slots:[0-5460] (5461 slots) master

M: 28a954d92d149dd70d015bb15de5516adf44d2a7 192.168.16.20:6380
   slots:[5461-10922] (5462 slots) master

M: 56a3c2dc96417d5b520f7b00ca23b9df800cde12 192.168.16.20:6381
   slots:[10923-16383] (5461 slots) master

S: d41efd6f63e6d733e6913f5b39babe94060811ae 192.168.16.20:6382
   replicates fdf680da97343309b5a15ea9670b05588183335b

S: 7cac8e1376814bbc4184914880917e590c676f7d 192.168.16.20:6383
   replicates 28a954d92d149dd70d015bb15de5516adf44d2a7

S: 22e8f9ec968b3be100b59d407c74b9238740154a 192.168.16.20:6384
   replicates 56a3c2dc96417d5b520f7b00ca23b9df800cde12

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

......

>>> Performing Cluster Check (using node 192.168.16.20:6379)

M: fdf680da97343309b5a15ea9670b05588183335b 192.168.16.20:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

M: 28a954d92d149dd70d015bb15de5516adf44d2a7 192.168.16.20:6380
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: 22e8f9ec968b3be100b59d407c74b9238740154a 192.168.16.20:6384
   slots: (0 slots) slave
   replicates 56a3c2dc96417d5b520f7b00ca23b9df800cde12

S: 7cac8e1376814bbc4184914880917e590c676f7d 192.168.16.20:6383
   slots: (0 slots) slave
   replicates 28a954d92d149dd70d015bb15de5516adf44d2a7

S: d41efd6f63e6d733e6913f5b39babe94060811ae 192.168.16.20:6382
   slots: (0 slots) slave
   replicates fdf680da97343309b5a15ea9670b05588183335b

M: 56a3c2dc96417d5b520f7b00ca23b9df800cde12 192.168.16.20:6381
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

webrx@us:/usr/local/redis/bin$
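The "Master[0] -> Slots 0 - 5460" lines above come from splitting the 16384 slots evenly across the masters. The sketch below reproduces those three ranges by rounding a running cursor and letting the last master absorb the remainder; it is modeled on the printed output, and the assumption that redis-cli rounds exactly this way is ours. Class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of an even split of the 16384 hash slots among N masters.
class SlotAllocator {
    static final int SLOTS = 16384;

    // Returns one {first, last} slot range per master.
    static List<int[]> split(int masters) {
        List<int[]> ranges = new ArrayList<>();
        double perNode = (double) SLOTS / masters;
        double cursor = 0.0;
        int first = 0;
        for (int i = 0; i < masters; i++) {
            int last = (int) Math.round(cursor + perNode - 1);
            if (last > SLOTS - 1 || i == masters - 1) {
                last = SLOTS - 1; // the last master takes whatever rounding left over
            }
            if (last < first) {
                last = first;
            }
            ranges.add(new int[] {first, last});
            first = last + 1;
            cursor += perNode;
        }
        return ranges;
    }

    public static void main(String[] args) {
        List<int[]> ranges = split(3);
        for (int i = 0; i < ranges.size(); i++) {
            int[] r = ranges.get(i);
            System.out.printf("Master[%d] -> Slots %d - %d%n", i, r[0], r[1]);
        }
    }
}
```

Rounding the cursor instead of truncating keeps the ranges within one slot of each other, which is why the middle master gets 5462 slots and the other two 5461.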

Connecting to the cluster

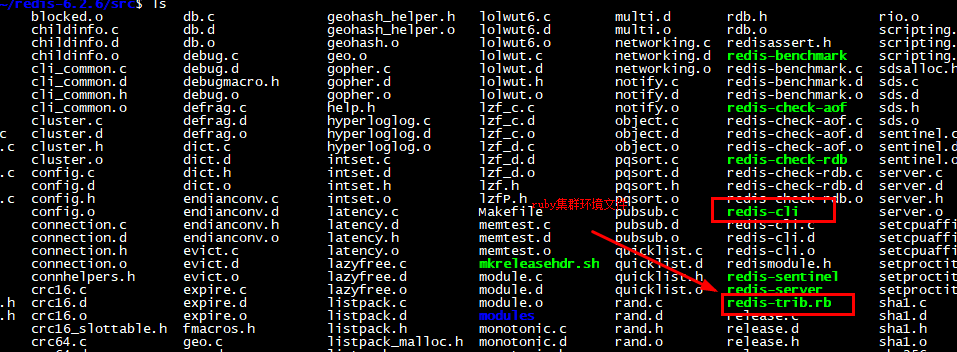

/home/webrx/redis6.2.65/src/redis-cli --cluster create 192.168.0.68:6379 192.168.0.68:6380 192.168.0.68:6381 192.168.0.68:6382 192.168.0.68:6383 192.168.0.68:6384 --cluster-replicas 1

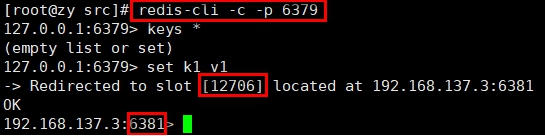

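Logging in in cluster mode

Log in with redis-cli -c -p 6379. The -c flag enables cluster mode, in which redis-cli follows redirections to whichever node owns a key, so you can connect through any node. Because the nodes set requirepass, authenticate with auth root (or pass -a root).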

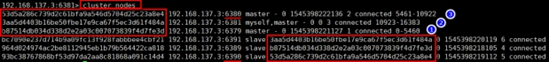
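Without -c, a node that does not own a key's slot simply returns an error naming the right node: MOVED slot host:port, or ASK slot host:port while a slot is being migrated. The hypothetical record below parses such a reply, which is essentially what a cluster-aware client does before reconnecting; the type and method names are assumptions.

```java
// Hypothetical parser for cluster redirection errors such as "MOVED 3999 127.0.0.1:6381".
record Redirect(String type, int slot, String host, int port) {

    // Returns null when the error is not a MOVED/ASK redirection.
    static Redirect parse(String error) {
        String msg = error.trim();
        if (msg.startsWith("-")) {
            msg = msg.substring(1); // RESP error replies start with '-'
        }
        String[] parts = msg.split(" ");
        if (parts.length != 3 || !(parts[0].equals("MOVED") || parts[0].equals("ASK"))) {
            return null;
        }
        int colon = parts[2].lastIndexOf(':');
        if (colon < 0) {
            return null;
        }
        return new Redirect(parts[0], Integer.parseInt(parts[1]),
                parts[2].substring(0, colon), Integer.parseInt(parts[2].substring(colon + 1)));
    }

    // MOVED: the slot has a new owner, so the client should update its slot map.
    // ASK: a one-off redirect during resharding, retried on the target after ASKING.
    boolean permanent() {
        return type.equals("MOVED");
    }

    public static void main(String[] args) {
        Redirect r = parse("MOVED 12182 192.168.16.20:6381");
        System.out.println(r + " permanent=" + r.permanent());
    }
}
```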

Run cluster nodes to view every node, its role, and the slots it owns.

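A cluster needs at least three master nodes.

The option --cluster-replicas 1 means we want one replica for every master in the cluster.

Allocation principle: redis-cli tries to put each master on a different IP address and to keep every replica off its master's IP address. In this walkthrough all six instances share one IP, which is why redis-cli printed [WARNING] Some slaves are in the same host as their master.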
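The two rules can be made concrete. With N nodes and R replicas per master, redis-cli creates N / (R + 1) masters and refuses to create a cluster with fewer than three; a replica on the same host as its master offers no protection if that host dies. The helpers below are a hypothetical sketch of these checks, fed with the replica/master pairs from the output above.

```java
import java.util.Map;

// Hypothetical helpers illustrating the master count and anti-affinity rules.
class AntiAffinity {
    // Number of masters for a given node count and --cluster-replicas value.
    static int masterCount(int nodes, int replicasPerMaster) {
        int masters = nodes / (replicasPerMaster + 1);
        if (masters < 3) {
            throw new IllegalArgumentException("Redis Cluster requires at least 3 master nodes");
        }
        return masters;
    }

    static String host(String hostPort) {
        return hostPort.substring(0, hostPort.lastIndexOf(':'));
    }

    // Counts replicas placed on the same host as their master (replica "host:port" -> master "host:port").
    static long sameHostPairs(Map<String, String> replicaToMaster) {
        return replicaToMaster.entrySet().stream()
                .filter(e -> host(e.getKey()).equals(host(e.getValue())))
                .count();
    }

    public static void main(String[] args) {
        // Replica -> master pairs as reported by redis-cli in this walkthrough
        Map<String, String> pairs = Map.of(
                "192.168.16.20:6382", "192.168.16.20:6379",
                "192.168.16.20:6383", "192.168.16.20:6380",
                "192.168.16.20:6384", "192.168.16.20:6381");
        System.out.println("masters: " + masterCount(6, 1));
        System.out.println("replicas sharing a host with their master: " + sameHostPairs(pairs));
    }
}
```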

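Even if the client is connected to a node that does not own the key, the cluster redirects the command to the master responsible for the key's slot. Writes always go to masters; by default reads are served by masters as well, and a replica only answers reads on a connection that has issued READONLY.

Redis Cluster is decentralized, with no proxy or central coordinator: data written through any node can be read through any other node.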

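import java.util.HashSet;
import java.util.Set;
import redis.clients.jedis.DefaultJedisClientConfig;
import redis.clients.jedis.HostAndPort;
import redis.clients.jedis.JedisCluster;

// Assumes Jedis 4.x. The nodes use requirepass root, so the client must authenticate.
public class JedisClusterTest {
    public static void main(String[] args) {
        // One reachable node is enough: the client discovers the rest of the cluster from it
        Set<HostAndPort> set = new HashSet<>();
        set.add(new HostAndPort("192.168.31.211", 6379));
        try (JedisCluster jedisCluster = new JedisCluster(set,
                DefaultJedisClientConfig.builder().password("root").build())) {
            jedisCluster.set("k1", "v1");
            System.out.println(jedisCluster.get("k1"));
        }
    }
}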

Adding nodes to the cluster

Windows node configuration

#windows 6381.conf
include redis.service.conf
port 6381
logfile "6381.log"
dbfilename "6381.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6381.conf

#windows 6380.conf
include redis.service.conf
port 6380
logfile "6380.log"
dbfilename "6380.rdb"
requirepass root
masterauth root
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file node_6380.conf

Then add a node with redis-cli --cluster add-node: the first address is the new node, the second is any node already in the cluster.

webrx@us:/usr/local/redis/bin$ sudo ./redis-cli --cluster add-node 192.168.16.20:6391 192.168.16.20:6383 -a root

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 192.168.16.20:6391 to cluster 192.168.16.20:6383

>>> Performing Cluster Check (using node 192.168.16.20:6383)

S: 7cac8e1376814bbc4184914880917e590c676f7d 192.168.16.20:6383
   slots: (0 slots) slave
   replicates 28a954d92d149dd70d015bb15de5516adf44d2a7

S: 22e8f9ec968b3be100b59d407c74b9238740154a 192.168.16.20:6384
   slots: (0 slots) slave
   replicates 56a3c2dc96417d5b520f7b00ca23b9df800cde12

M: 28a954d92d149dd70d015bb15de5516adf44d2a7 192.168.16.20:6380
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

M: 2292f60072185d493afcc021bbec68846bd27c67 192.168.16.9:6381
   slots: (0 slots) master

M: 072585bc70e49b085af73f10b6103fe2fb2a23f3 192.168.16.20:6390
   slots: (0 slots) master

M: d41efd6f63e6d733e6913f5b39babe94060811ae 192.168.16.20:6382
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

S: fdf680da97343309b5a15ea9670b05588183335b 192.168.16.20:6379
   slots: (0 slots) slave
   replicates d41efd6f63e6d733e6913f5b39babe94060811ae

M: eed969d8c4ca6e2fe03b2d0c7198e3adb126cdb7 192.168.16.9:6380
   slots: (0 slots) master

M: 56a3c2dc96417d5b520f7b00ca23b9df800cde12 192.168.16.20:6381
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.16.20:6391 to make it join the cluster.

[OK] New node added correctly.

webrx@us:/usr/local/redis/bin$ ./redis-cli -c

127.0.0.1:6379> cluster nodes

NOAUTH Authentication required.

127.0.0.1:6379> auth root

OK

127.0.0.1:6379> clear

127.0.0.1:6379> cluster nodes

072585bc70e49b085af73f10b6103fe2fb2a23f3 192.168.16.20:6390@16390 master - 0 1639713710483 9 connected

7cac8e1376814bbc4184914880917e590c676f7d 192.168.16.20:6383@16383 slave 28a954d92d149dd70d015bb15de5516adf44d2a7 0 1639713709000 2 connected

22e8f9ec968b3be100b59d407c74b9238740154a 192.168.16.20:6384@16384 slave 56a3c2dc96417d5b520f7b00ca23b9df800cde12 0 1639713708000 3 connected

eed969d8c4ca6e2fe03b2d0c7198e3adb126cdb7 192.168.16.9:6380@16380 master - 0 1639713711560 0 connected

fdf680da97343309b5a15ea9670b05588183335b 192.168.16.20:6379@16379 myself,slave d41efd6f63e6d733e6913f5b39babe94060811ae 0 1639713707000 7 connected

28a954d92d149dd70d015bb15de5516adf44d2a7 192.168.16.20:6380@16380 master - 0 1639713709000 2 connected 5461-10922

56a3c2dc96417d5b520f7b00ca23b9df800cde12 192.168.16.20:6381@16381 master - 0 1639713707000 3 connected 10923-16383

5624e318d76606e473e6f8c927c31570613d6d00 192.168.16.20:6391@16391 master - 0 1639713710000 10 connected

d41efd6f63e6d733e6913f5b39babe94060811ae 192.168.16.20:6382@16382 master - 0 1639713707000 7 connected 0-5460

2292f60072185d493afcc021bbec68846bd27c67 192.168.16.9:6381@16381 master - 0 1639713710000 8 connected

127.0.0.1:6379>
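In the cluster nodes output above, the newly added nodes (6390, 6391 and the two Windows nodes) are masters with no slot ranges at the end of their lines, so they hold no data until slots are moved to them, for example with redis-cli --cluster reshard. The hypothetical helper below counts the slots each master owns, assuming the usual CLUSTER NODES line layout (id, address@bus-port, flags, master id, ping-sent, pong-recv, config-epoch, link-state, then slots).

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: slots owned by each master in CLUSTER NODES output.
class ClusterNodesParser {
    static Map<String, Integer> slotsPerMaster(String clusterNodesOutput) {
        Map<String, Integer> result = new LinkedHashMap<>();
        for (String line : clusterNodesOutput.split("\n")) {
            String[] f = line.trim().split("\\s+");
            if (f.length < 8 || !f[2].contains("master")) {
                continue; // skip replicas, prompts and blank lines
            }
            String addr = f[1].split("@")[0]; // drop the cluster bus port
            int slots = 0;
            for (int i = 8; i < f.length; i++) {
                if (f[i].startsWith("[")) {
                    continue; // migrating/importing markers are not owned slots
                }
                String[] range = f[i].split("-");
                int start = Integer.parseInt(range[0]);
                int end = range.length > 1 ? Integer.parseInt(range[1]) : start;
                slots += end - start + 1;
            }
            result.put(addr, slots);
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        // Pipe in the output of: redis-cli -a root cluster nodes
        String input = new String(System.in.readAllBytes(), StandardCharsets.UTF_8);
        slotsPerMaster(input).forEach((addr, n) -> System.out.println(addr + " " + n + " slots"));
    }
}
```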

Spring Boot Redis cluster configuration

# Using the Lettuce connection pool

1 application.yml

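spring:
  redis:
    #timeout: 6000ms
    password: root
    cluster:
      max-redirects: 3 # maximum number of redirects to follow when a key is served by another node
      nodes:
        - 192.168.16.20:6379
        - 192.168.16.20:6380
        - 192.168.16.20:6381
        - 192.168.16.20:6382
        - 192.168.16.20:6383
        - 192.168.16.20:6384
    lettuce:
      pool:
        # the pool settings require commons-pool2 on the classpath
        max-active: 1000 # maximum number of connections in the pool (negative means no limit)
        max-idle: 10 # maximum number of idle connections in the pool
        min-idle: 5 # minimum number of idle connections in the pool
        max-wait: -1 # maximum time to block waiting for a connection (negative means no limit)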

2 Dependencies (pom.xml) and the connection configuration class RedisConfig.java

<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <version>1.18.22</version>
    <scope>provided</scope>
</dependency>

<!-- jackson-databind -->
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.13.0</version>
</dependency>

<!-- jackson-datatype-jsr310 -->
<dependency>
    <groupId>com.fasterxml.jackson.datatype</groupId>
    <artifactId>jackson-datatype-jsr310</artifactId>
    <version>2.13.0</version>
</dependency>

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
    <version>2.6.1</version>
</dependency>

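import com.fasterxml.jackson.databind.ObjectMapper;
import com.fasterxml.jackson.databind.SerializationFeature;
import com.fasterxml.jackson.databind.module.SimpleModule;
import com.fasterxml.jackson.datatype.jsr310.deser.LocalDateDeserializer;
import com.fasterxml.jackson.datatype.jsr310.deser.LocalDateTimeDeserializer;
import com.fasterxml.jackson.datatype.jsr310.deser.LocalTimeDeserializer;
import com.fasterxml.jackson.datatype.jsr310.ser.LocalDateSerializer;
import com.fasterxml.jackson.datatype.jsr310.ser.LocalDateTimeSerializer;
import com.fasterxml.jackson.datatype.jsr310.ser.LocalTimeSerializer;
import org.springframework.boot.autoconfigure.AutoConfigureAfter;
import org.springframework.boot.autoconfigure.data.redis.RedisAutoConfiguration;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.text.SimpleDateFormat;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.LocalTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;
import java.util.TimeZone;

@Configuration
@AutoConfigureAfter(RedisAutoConfiguration.class)
public class RedisConfig {

    @Bean
    public RedisTemplate<String, Object> redisTemplate(LettuceConnectionFactory f) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(f);
        // keys and hash keys are stored as plain strings
        var srs = new StringRedisSerializer();
        template.setKeySerializer(srs);
        template.setHashKeySerializer(srs);
        // values and hash values use JSON serialization
        var js = new Jackson2JsonRedisSerializer<>(Object.class);
        ObjectMapper om = new ObjectMapper();
        // fix Jackson being unable to (de)serialize LocalDateTime: write java.time values as formatted strings
        om.disable(SerializationFeature.WRITE_DATES_AS_TIMESTAMPS);
        SimpleModule sm = new SimpleModule();
        sm.addSerializer(LocalDateTime.class, new LocalDateTimeSerializer(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")));
        sm.addSerializer(LocalDate.class, new LocalDateSerializer(DateTimeFormatter.ofPattern("yyyy-MM-dd")));
        sm.addSerializer(LocalTime.class, new LocalTimeSerializer(DateTimeFormatter.ofPattern("HH:mm:ss")));
        sm.addDeserializer(LocalDateTime.class, new LocalDateTimeDeserializer(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")));
        sm.addDeserializer(LocalDate.class, new LocalDateDeserializer(DateTimeFormatter.ofPattern("yyyy-MM-dd")));
        sm.addDeserializer(LocalTime.class, new LocalTimeDeserializer(DateTimeFormatter.ofPattern("HH:mm:ss")));
        om.registerModule(sm);
        om.setTimeZone(TimeZone.getTimeZone("GMT+8"));
        om.setLocale(Locale.CHINA);
        // java.util.Date serialization
        om.setDateFormat(new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"));
        js.setObjectMapper(om);
        template.setValueSerializer(js);
        template.setHashValueSerializer(js);
        return template;
    }
}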
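The custom serializers above store LocalDateTime values as "yyyy-MM-dd HH:mm:ss" text instead of Jackson's default form. The stdlib-only sketch below shows what that pattern produces compared with LocalDateTime.toString(), and that a round trip through it drops the sub-second part; the class name is hypothetical and no Jackson or Spring classes are involved.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Shows the text form produced by the "yyyy-MM-dd HH:mm:ss" pattern used in RedisConfig.
class DatePatternDemo {
    static final DateTimeFormatter DATE_TIME = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    static String format(LocalDateTime t) {
        return t.format(DATE_TIME);
    }

    static LocalDateTime parse(String s) {
        return LocalDateTime.parse(s, DATE_TIME);
    }

    public static void main(String[] args) {
        LocalDateTime t = LocalDateTime.of(2021, 12, 17, 17, 48, 2, 500_000_000);
        System.out.println("ISO form:     " + t);
        System.out.println("pattern form: " + format(t));
        // Fractional seconds are not part of the pattern, so they are lost on the way back
        System.out.println("round trip:   " + parse(format(t)));
    }
}
```

If millisecond precision matters for cached values, a pattern such as "yyyy-MM-dd HH:mm:ss.SSS" would be needed on both the serializer and the deserializer.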

3 Usage:

@Autowired

private RedisTemplate<String, Object> redisTemplate;

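An alternative RedisConfig that registers JavaTimeModule and stores type information in the JSON:

import com.fasterxml.jackson.annotation.JsonAutoDetect;
import com.fasterxml.jackson.annotation.JsonTypeInfo;
import com.fasterxml.jackson.annotation.PropertyAccessor;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.fasterxml.jackson.databind.SerializationFeature;
import com.fasterxml.jackson.databind.jsontype.impl.LaissezFaireSubTypeValidator;
import com.fasterxml.jackson.datatype.jsr310.JavaTimeModule;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

@Configuration
public class RedisConfig {

    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
        // <String, Object> is used for convenience; values read back must be cast to the expected type
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(redisConnectionFactory);
        setRedisTemplate(template);
        template.afterPropertiesSet();
        return template;
    }

    private void setRedisTemplate(RedisTemplate<String, Object> template) {
        // JSON serializer for values
        Jackson2JsonRedisSerializer<Object> jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer<>(Object.class);
        ObjectMapper objectMapper = new ObjectMapper();
        objectMapper.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
        // fix Jackson being unable to (de)serialize LocalDateTime: ISO strings plus JavaTimeModule
        objectMapper.disable(SerializationFeature.WRITE_DATES_AS_TIMESTAMPS);
        objectMapper.registerModule(new JavaTimeModule());
        // enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL) is deprecated; activateDefaultTyping replaces it.
        // The class name is written into the JSON so values deserialize to their original type.
        // LaissezFaireSubTypeValidator accepts any type, so only use it for trusted data.
        objectMapper.activateDefaultTyping(LaissezFaireSubTypeValidator.instance,
                ObjectMapper.DefaultTyping.NON_FINAL, JsonTypeInfo.As.PROPERTY);
        jackson2JsonRedisSerializer.setObjectMapper(objectMapper);

        StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();
        // keys and hash keys use String serialization
        template.setKeySerializer(stringRedisSerializer);
        template.setHashKeySerializer(stringRedisSerializer);
        // values and hash values use Jackson
        template.setValueSerializer(jackson2JsonRedisSerializer);
        template.setHashValueSerializer(jackson2JsonRedisSerializer);
    }
}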

JacksonConfig.java

/** Copyright (c) 2006, 2021, webrx.cn All rights reserved.**/

package cn.webrx.config;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.module.SimpleModule;

import com.fasterxml.jackson.datatype.jsr310.JavaTimeModule;

import com.fasterxml.jackson.datatype.jsr310.deser.LocalDateDeserializer;

import com.fasterxml.jackson.datatype.jsr310.deser.LocalDateTimeDeserializer;

import com.fasterxml.jackson.datatype.jsr310.deser.LocalTimeDeserializer;

import com.fasterxml.jackson.datatype.jsr310.ser.LocalDateSerializer;

import com.fasterxml.jackson.datatype.jsr310.ser.LocalDateTimeSerializer;

import com.fasterxml.jackson.datatype.jsr310.ser.LocalTimeSerializer;

import org.springframework.boot.autoconfigure.AutoConfigureBefore;

import org.springframework.boot.autoconfigure.condition.ConditionalOnClass;

import org.springframework.boot.autoconfigure.jackson.Jackson2ObjectMapperBuilderCustomizer;

import org.springframework.boot.autoconfigure.jackson.JacksonAutoConfiguration;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.time.LocalDate;

import java.time.LocalDateTime;

import java.time.LocalTime;

import java.time.ZoneId;

import java.time.format.DateTimeFormatter;

import java.util.Locale;

import java.util.TimeZone;

/**
 * <p>Project: redis2021 - JacksonConfig
 * <p>Powered by webrx On 2021-12-17 17:48:02
 *
 * @author webrx [webrx@126.com]
 * @version 1.0
 * @since 17
 */

@Configuration

@ConditionalOnClass(ObjectMapper.class)

@AutoConfigureBefore(JacksonAutoConfiguration.class)

public class JacksonConfig {

    @Bean
    public JavaTimeModule sm() {
        // JavaTimeModule extends SimpleModule, so the custom formats are registered on the module that is returned
        JavaTimeModule jtm = new JavaTimeModule();
        jtm.addSerializer(LocalDateTime.class, new LocalDateTimeSerializer(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")));
        jtm.addSerializer(LocalDate.class, new LocalDateSerializer(DateTimeFormatter.ofPattern("yyyy-MM-dd")));
        jtm.addSerializer(LocalTime.class, new LocalTimeSerializer(DateTimeFormatter.ofPattern("HH:mm:ss")));
        jtm.addDeserializer(LocalDateTime.class, new LocalDateTimeDeserializer(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")));
        jtm.addDeserializer(LocalDate.class, new LocalDateDeserializer(DateTimeFormatter.ofPattern("yyyy-MM-dd")));
        jtm.addDeserializer(LocalTime.class, new LocalTimeDeserializer(DateTimeFormatter.ofPattern("HH:mm:ss")));
        return jtm;
    }

    @Bean
    public Jackson2ObjectMapperBuilderCustomizer customizer() {
        return builder -> {
            // java.util.Date formatting
            builder.locale(Locale.CHINA);
            builder.timeZone(TimeZone.getTimeZone(ZoneId.systemDefault()));
            builder.simpleDateFormat("yyyy-MM-dd HH:mm:ss");
            // Java 8 date/time (java.time) formatting
            builder.modules(sm());
        };
    }

}