
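Overview

The MobileNet family (V1, V2 and V3) targets lightweight neural networks. MobileNetV1 is built on depthwise separable convolutions. MobileNetV2 adds inverted residual blocks with linear bottlenecks, which improves accuracy. MobileNetV3 combines further design elements, namely Squeeze-and-Excitation (SE) modules, the hard-swish activation and a block configuration found with neural architecture search, to balance computational cost against accuracy. All three generations have been widely adopted on mobile and embedded devices, where they provide efficient deep learning models for resource-constrained environments.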

- MobileNetV1 paper: https://arxiv.org/pdf/1704.04861.pdf

- MobileNetV2 paper: https://arxiv.org/pdf/1801.04381.pdf

- MobileNetV3 paper: https://arxiv.org/pdf/1905.02244.pdf

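MobileNetV1

There has been growing interest in building small, efficient neural networks. Existing approaches fall roughly into two groups: compressing a pretrained network, or training a small network directly. MobileNet focuses primarily on optimizing latency, but it also yields small networks.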

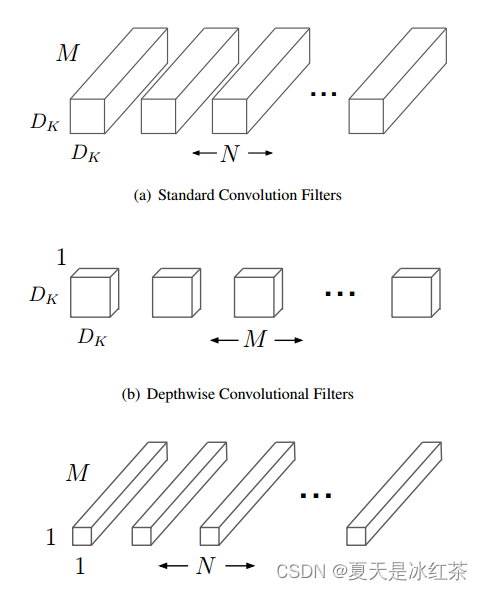

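Depthwise separable convolution

A standard convolution extracts features from its input with learned kernels, filtering and combining the input channels in a single step. A depthwise separable convolution factorizes that step into two layers: a depthwise convolution and a pointwise convolution.

- Depthwise convolution (DwConv): each input channel is first filtered by its own single kernel. In other words, the convolution is applied to every channel separately, whereas in a standard convolution every filter spans all input channels. This reduces the number of parameters, because one K x K kernel is needed per channel rather than one per pair of input and output channels.

- Pointwise convolution (PwConv): after the depthwise convolution, a pointwise (1x1) convolution linearly combines the depthwise outputs across channels to produce the final feature map. A 1x1 kernel is equivalent to applying the same fully connected layer over the channels at every spatial position. The pointwise convolution is what mixes information between channels; the non-linearity comes from the BatchNorm and ReLU6 that follow each of the two layers.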
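To make the two steps concrete, here is a minimal pure-Python sketch on nested lists (stride 1, no padding, no bias). The function names `depthwise_conv` and `pointwise_conv` and all the numbers are made up for illustration; it is not how PyTorch implements the operation, only the same arithmetic on a toy input.

```python
def depthwise_conv(x, kernels):
    """Filter each channel with its own k x k kernel (stride 1, no padding).

    x: list of C channels, each an H x W list of lists.
    kernels: list of C kernels, each a k x k list of lists.
    """
    out = []
    for channel, kernel in zip(x, kernels):
        k = len(kernel)
        h_out = len(channel) - k + 1
        w_out = len(channel[0]) - k + 1
        out.append([
            [
                sum(channel[i + di][j + dj] * kernel[di][dj]
                    for di in range(k) for dj in range(k))
                for j in range(w_out)
            ]
            for i in range(h_out)
        ])
    return out


def pointwise_conv(x, weights):
    """1x1 convolution: mix the channels at every spatial position.

    weights: list of N rows, each holding C per-channel weights.
    """
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wc * x[c][i][j] for c, wc in enumerate(row)) for j in range(w)]
         for i in range(h)]
        for row in weights
    ]


# Toy input: 2 channels of 3x3. All weights are hypothetical.
x = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[1, 0, 1], [0, 1, 0], [1, 0, 1]],
]
dw_kernels = [
    [[1, 0], [0, 1]],  # channel 0: main diagonal of each 2x2 window
    [[1, 1], [1, 1]],  # channel 1: sum of each 2x2 window
]
pw_weights = [[1, 1], [1, -1], [0, 2]]  # 2 input channels -> 3 output channels

dw_out = depthwise_conv(x, dw_kernels)
pw_out = pointwise_conv(dw_out, pw_weights)
print(dw_out)  # still 2 channels: the depthwise step never mixes channels
print(pw_out)  # 3 channels: the pointwise step sets the output width
```

The depthwise step keeps the channel count fixed and only looks at spatial neighbours, while the pointwise step only looks across channels, which is exactly the factorization described above.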

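    import torch.nn as nn


    class DepthSepConv(nn.Module):
        """Depthwise separable convolution: depthwise (DW) conv + pointwise (PW) conv.

        DW conv: with groups == in_channels every input channel gets its own
        filter, so the output also has in_channels channels.
        PW conv: an ordinary convolution with a 1x1 kernel.
        """

        def __init__(self, in_channels, out_channels, stride):
            super(DepthSepConv, self).__init__()
            self.depthwise = nn.Conv2d(in_channels, in_channels, kernel_size=3, stride=stride,
                                       groups=in_channels, padding=1)
            self.pointwise = nn.Conv2d(in_channels, out_channels, kernel_size=1)
            self.batch_norm1 = nn.BatchNorm2d(in_channels)
            self.batch_norm2 = nn.BatchNorm2d(out_channels)
            self.relu6 = nn.ReLU6(inplace=True)

        def forward(self, x):
            # depthwise: 3x3 conv per channel -> BN -> ReLU6
            x = self.depthwise(x)
            x = self.batch_norm1(x)
            x = self.relu6(x)
            # pointwise: 1x1 conv across channels -> BN -> ReLU6
            x = self.pointwise(x)
            x = self.batch_norm2(x)
            x = self.relu6(x)
            return x

Replacing standard convolutions with depthwise separable ones greatly reduces the number of parameters, which lowers the risk of overfitting, and also cuts the computational cost.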

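For a standard convolution with a $D_K \times D_K$ kernel, $M$ input channels, $N$ output channels and a $D_F \times D_F$ feature map, the cost in multiply-adds is:

$$D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F$$

For a depthwise separable convolution it is the sum of the depthwise and the pointwise cost:

$$D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F$$

So the ratio of the two is:

$$\frac{D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F}{D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F} = \frac{1}{N} + \frac{1}{D_K^2}$$

With 3x3 kernels, MobileNet therefore uses 8 to 9 times less computation than standard convolutions, with only a small reduction in accuracy.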
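As a quick worked example of the 8 to 9 times figure, the sketch below counts multiply-adds for a single layer. The layer shape (3x3 kernel, 512 input and output channels, 14x14 feature map) is chosen to match the repeated 512-channel blocks of MobileNetV1; the function names are hypothetical helpers, not part of any library.

```python
def standard_mult_adds(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution (m -> n channels, df x df map)."""
    return dk * dk * m * n * df * df


def separable_mult_adds(dk, m, n, df):
    """Multiply-adds of the depthwise plus the pointwise convolution."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


dk, m, n, df = 3, 512, 512, 14
standard = standard_mult_adds(dk, m, n, df)
separable = separable_mult_adds(dk, m, n, df)
ratio = separable / standard

print(standard, separable)
print(ratio, 1 / n + 1 / dk ** 2)  # the two values agree
print(standard / separable)        # reduction factor, between 8 and 9
```

Because $1/N$ is tiny for wide layers, the saving is dominated by $1/D_K^2$, which is why a 3x3 kernel caps the reduction at a little under 9 times.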

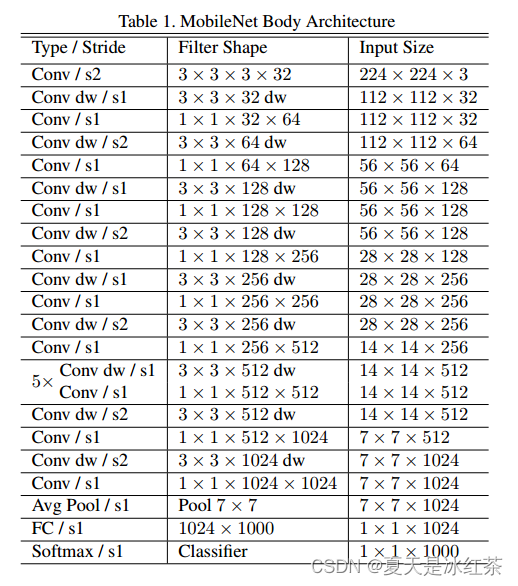

MobileNetV1 model implementation

I reproduced this part from the tables in the paper, following the output shape of each layer.

    import torch.nn as nn

    # DepthSepConv is the module defined in the previous section.


    class MobileNetV1(nn.Module):
        def __init__(self, num_classes=1000, drop_rate=0.2):
            super(MobileNetV1, self).__init__()
            # input: torch.Size([1, 3, 224, 224])
            self.conv_bn = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=2, padding=1, bias=False),
                nn.BatchNorm2d(32),
                nn.ReLU(inplace=True),
            )  # torch.Size([1, 32, 112, 112])
            self.dwmodule = nn.Sequential(
                # See MobileNetV1, https://arxiv.org/pdf/1704.04861.pdf, Table 1
                DepthSepConv(32, 64, 1),     # torch.Size([1, 64, 112, 112])
                DepthSepConv(64, 128, 2),    # torch.Size([1, 128, 56, 56])
                DepthSepConv(128, 128, 1),   # torch.Size([1, 128, 56, 56])
                DepthSepConv(128, 256, 2),   # torch.Size([1, 256, 28, 28])
                DepthSepConv(256, 256, 1),   # torch.Size([1, 256, 28, 28])
                DepthSepConv(256, 512, 2),   # torch.Size([1, 512, 14, 14])
                # 5 x DepthSepConv(512, 512, 1)
                DepthSepConv(512, 512, 1),   # torch.Size([1, 512, 14, 14])
                DepthSepConv(512, 512, 1),
                DepthSepConv(512, 512, 1),
                DepthSepConv(512, 512, 1),
                DepthSepConv(512, 512, 1),
                DepthSepConv(512, 1024, 2),  # torch.Size([1, 1024, 7, 7])
                DepthSepConv(1024, 1024, 1),
                nn.AvgPool2d(7, stride=1),   # torch.Size([1, 1024, 1, 1])
            )
            self.fc = nn.Linear(in_features=1024, out_features=num_classes)
            self.dropout = nn.Dropout(p=drop_rate)
            self.softmax = nn.Softmax(dim=1)

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight)
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.constant_(m.bias, 0)

        def forward(self, x):
            x = self.conv_bn(x)
            x = self.dwmodule(x)
            x = x.view(x.size(0), -1)
            # Dropout is applied to the pooled features, before the classifier,
            # rather than to the logits.
            x = self.dropout(x)
            x = self.fc(x)
            # Returns probabilities. When training with nn.CrossEntropyLoss, return
            # the logits instead, because that loss applies log-softmax itself.
            x = self.softmax(x)
            return x

The first layer is a standard convolution. The following 26 layers are the core of the network: 13 depthwise separable units, each counted as one depthwise and one pointwise layer. Next comes an average pooling layer with a 7x7 kernel, which collapses the spatial dimensions so that each channel is reduced to a single value. A fully connected layer followed by softmax produces the output.
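The shape comments in the model can be checked with the usual convolution output-size formula, floor((n + 2p - k) / s) + 1. The sketch below assumes a 224x224 input and reads the strides off the thirteen DepthSepConv entries above; `conv_out_size` is a hypothetical helper name.

```python
def conv_out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# Strides of the 13 depthwise 3x3 convolutions (padding 1), in order.
block_strides = [1, 2, 1, 2, 1, 2, 1, 1, 1, 1, 1, 2, 1]

size = conv_out_size(224, kernel=3, stride=2, padding=1)  # first standard conv
trace = [size]
for stride in block_strides:
    # The pointwise 1x1 convolution never changes the spatial size,
    # so only the depthwise stride matters.
    size = conv_out_size(size, kernel=3, stride=stride, padding=1)
    trace.append(size)

# 1 standard conv + 13 x (depthwise + pointwise) + 1 fully connected layer
num_layers = 1 + 2 * len(block_strides) + 1

print(trace)
print(num_layers)
```

The trace ends at 7, which is why a 7x7 average pool reduces each of the 1024 channels to one value, and the layer count comes out at the 28 layers reported for MobileNetV1.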

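MobileNetV2

MobileNetV2 builds on MobileNetV1. It keeps the simplicity of its predecessor and requires no special operators, while significantly improving accuracy and achieving state-of-the-art results for mobile applications across multiple image classification and detection tasks.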

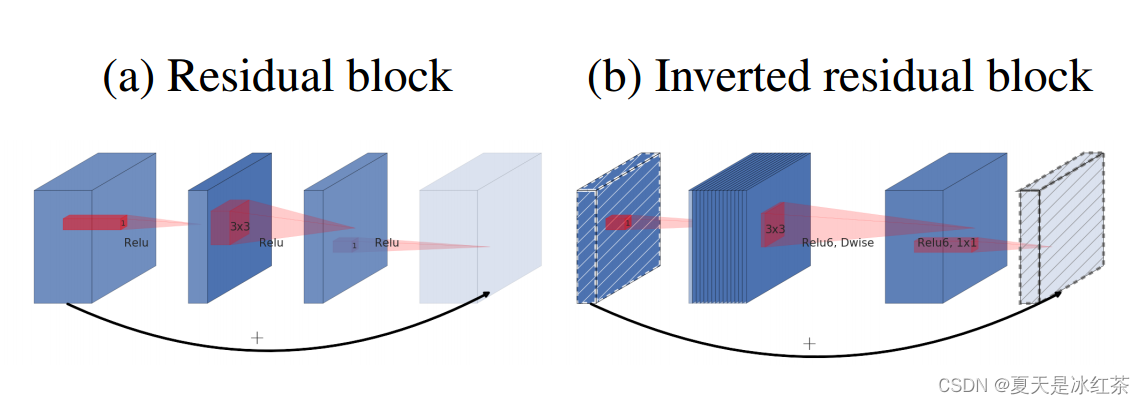

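MobileNetV2 is based on an inverted residual structure. An ordinary residual block first uses a 1x1 convolution to reduce the number of feature-map channels, then applies a 3x3 convolution, and finally uses another 1x1 convolution to expand the channels back: compress first, then expand. The inverted residual block in MobileNetV2 does the opposite: expand first, then compress. In addition, the paper finds it important to remove the non-linearity in the narrow layers, so the final 1x1 projection uses a linear activation.

Inverted Residual Block

The right-hand side of the figure above shows the inverted residual block. It consists of the following steps:

- 1x1 convolution to expand the channels

- 3x3 depthwise (DW) convolution

- 1x1 convolution to reduce the channels

Read the rest of this section together with the code below. expand_ratio is the expansion factor applied to the incoming feature map. If it is 1, no expansion is needed and the block is simply: depthwise convolution, batch normalization, activation, 1x1 convolution, batch normalization. Otherwise a 1x1 convolution first raises the number of channels, a 3x3 depthwise convolution then extracts features across spatial positions, and a final 1x1 convolution lowers the number of channels.

Expansion gives the network more representational power; reduction keeps the computational cost low. After this feature extraction, if the residual connection is used (stride 1 and equal input and output channels), the result is added to the input; otherwise the convolution output is returned directly.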
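The steps above can be sketched purely in terms of channel counts, without any tensors. `inverted_residual_plan` is a hypothetical helper written for this illustration; it mirrors the branching on expand_ratio and the residual condition described in the text.

```python
def inverted_residual_plan(in_ch, out_ch, stride, expand_ratio):
    """List the layers of one inverted residual block as (name, in, out) tuples."""
    hidden = int(round(in_ch * expand_ratio))
    steps = []
    if expand_ratio != 1:
        steps.append(("1x1 expand + BN + ReLU6", in_ch, hidden))
    steps.append((f"3x3 depthwise, stride {stride} + BN + ReLU6", hidden, hidden))
    steps.append(("1x1 project + BN (linear, no activation)", hidden, out_ch))
    # The shortcut is only possible when the input and output shapes match.
    use_residual = stride == 1 and in_ch == out_ch
    return steps, use_residual


steps, use_residual = inverted_residual_plan(in_ch=24, out_ch=24, stride=1, expand_ratio=6)
for name, c_in, c_out in steps:
    print(f"{name}: {c_in} -> {c_out}")
print("residual connection:", use_residual)
```

For a 24-channel input with expansion factor 6, the block widens to 144 channels for the depthwise convolution and narrows back to 24, so the expensive spatial filtering happens in the wide space while the tensors passed between blocks stay narrow.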

import torch

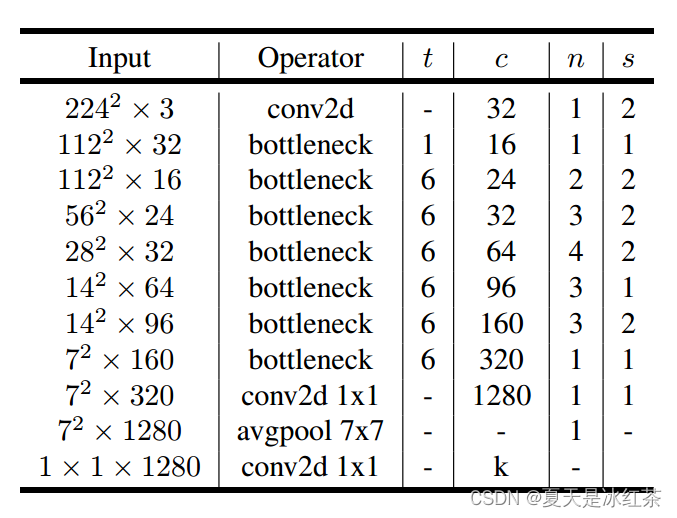

import torch.nn as nndef _make_divisible(v, divisor, min_value=None):if min_value is None:min_value = divisornew_v = max(min_value, int(v + divisor / 2) // divisor * divisor)# Make sure that round down does not go down by more than 10%.if new_v < 0.9 * v:new_v += divisorreturn new_vclass ConvBNReLU6(nn.Module):def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1, dilation=1,):super(ConvBNReLU6, self).__init__()padding = (kernel_size - 1) // 2 * dilationself.convbnrelu6 = nn.Sequential(nn.Conv2d(in_planes, out_planes, kernel_size, stride, padding, dilation=dilation,groups=groups, bias=False),nn.BatchNorm2d(out_planes),nn.ReLU6(inplace=True))def forward(self, x):return self.convbnrelu6(x)class InvertedResidual(nn.Module):def __init__(self, in_planes, out_planes, stride, expand_ratio):super(InvertedResidual, self).__init__()self.stride = strideassert stride in [1, 2]hidden_dim = int(round(in_planes * expand_ratio))self.use_res_connect = self.stride == 1 and in_planes == out_planeslayers = []if expand_ratio != 1:# pw 利用1x1卷积进行通道数的上升layers.append(ConvBNReLU6(in_planes, hidden_dim, kernel_size=1))layers.extend([# dw 进行3x3的逐层卷积,进行跨特征点的特征提取ConvBNReLU6(hidden_dim, hidden_dim, kernel_size=3, stride=stride, groups=hidden_dim),# pw-linear 利用1x1卷积进行通道数的下降nn.Conv2d(hidden_dim, out_planes, kernel_size=1, stride=1, padding=0),nn.BatchNorm2d(out_planes),])self.conv = nn.Sequential(*layers)self.out_channels = out_planesdef forward(self, x):if self.use_res_connect:return x + self.conv(x)else:return self.conv(x)if __name__ == "__main__":inverted_residual_setting = [# t, c, n, s[1, 16, 1, 1],[6, 24, 2, 2],[6, 32, 3, 2],[6, 64, 4, 2],[6, 96, 3, 1],[6, 160, 3, 2],[6, 320, 1, 1],]class Invertedmodels(nn.Module):def __init__(self, input_channel=32, round_nearest=8):super(Invertedmodels, self).__init__()input_channel = _make_divisible(input_channel, round_nearest)self.conv1 = ConvBNReLU6(3, input_channel, stride=2)self.inverted_residuals = nn.ModuleList()for t, c, n, 
s in inverted_residual_setting:output_channel = _make_divisible(c, round_nearest)inverted_residual_list = []for i in range(n):stride = s if i == 0 else 1inverted_residual = InvertedResidual(input_channel, output_channel, stride, expand_ratio=t)inverted_residual_list.append(inverted_residual)input_channel = output_channel# 将InvertedResidual的实例添加到模型中setattr(self, f'inverted_residual_{t}_{c}_{n}', nn.Sequential(*inverted_residual_list))self.inverted_residuals.extend(inverted_residual_list)def forward(self, x):x = self.conv1(x)print(x.shape)for i, inverted_residual in enumerate(self.inverted_residuals):x = inverted_residual(x)print(i, x.shape)return xinput_tensor = torch.randn((1, 3, 224, 224))model = Invertedmodels()output = model(input_tensor)mobilenetv2模型实现

This part is best read alongside the tables and figures in the paper.
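The implementation below scales every channel count by a width multiplier and then rounds it with `_make_divisible`. To see what that rounding does, here is a small standalone sketch: the function body restates the same arithmetic under the name `make_divisible`, and the width multiplier 0.75 is only an illustrative choice.

```python
def make_divisible(v, divisor, min_value=None):
    """Round v to the nearest multiple of divisor, never below min_value
    and never more than 10% below v."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


# Output channels of the seven stages (the "c" column of the settings table).
base_channels = [16, 24, 32, 64, 96, 160, 320]
width_mult = 0.75  # illustrative value, any positive float works

scaled = [make_divisible(c * width_mult, 8) for c in base_channels]
print(scaled)

# The 10% rule in action: rounding 10 to the nearest multiple of 8 gives 8,
# which is more than 10% below 10, so the result is bumped up to 16.
print(make_divisible(10, 8))
```

Note the second stage: 24 x 0.75 = 18 would round down to 16, which loses more than 10%, so it is bumped back up to 24. Keeping channel counts at multiples of 8 is a hardware-friendliness choice inherited from the original TensorFlow code.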

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    def _make_divisible(v, divisor, min_value=None):
        """
        This function is taken from the original tf repo.
        It can be seen here:
        https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py

        Args:
            v: The number of channels to round.
            divisor: The number of channels should be a multiple of this value.
            min_value: The minimum number of channels, which defaults to the divisor.

        Returns:
            A channel number that is divisible by the divisor (8 in this model).
        """
        if min_value is None:
            min_value = divisor
        new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
        # Make sure that round down does not go down by more than 10%.
        if new_v < 0.9 * v:
            new_v += divisor
        return new_v


    class ConvBNReLU6(nn.Module):
        def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1, dilation=1):
            super(ConvBNReLU6, self).__init__()
            padding = (kernel_size - 1) // 2 * dilation
            self.convbnrelu6 = nn.Sequential(
                nn.Conv2d(in_planes, out_planes, kernel_size, stride, padding, dilation=dilation,
                          groups=groups, bias=False),
                nn.BatchNorm2d(out_planes),
                nn.ReLU6(inplace=True),
            )

        def forward(self, x):
            return self.convbnrelu6(x)


    class InvertedResidual(nn.Module):
        def __init__(self, in_planes, out_planes, stride, expand_ratio):
            super(InvertedResidual, self).__init__()
            self.stride = stride
            assert stride in [1, 2]
            hidden_dim = int(round(in_planes * expand_ratio))
            self.use_res_connect = self.stride == 1 and in_planes == out_planes

            layers = []
            if expand_ratio != 1:
                # pw: 1x1 convolution that raises the number of channels
                layers.append(ConvBNReLU6(in_planes, hidden_dim, kernel_size=1))
            layers.extend([
                # dw: 3x3 depthwise convolution, extracts features across spatial positions
                ConvBNReLU6(hidden_dim, hidden_dim, stride=stride, groups=hidden_dim),
                # pw-linear: 1x1 convolution that lowers the number of channels
                # (no bias needed, BatchNorm follows; no activation)
                nn.Conv2d(hidden_dim, out_planes, kernel_size=1, stride=1, padding=0, bias=False),
                nn.BatchNorm2d(out_planes),
            ])
            self.conv = nn.Sequential(*layers)
            self.out_channels = out_planes

        def forward(self, x):
            if self.use_res_connect:
                return x + self.conv(x)
            else:
                return self.conv(x)


    class MobileNetV2(nn.Module):
        def __init__(self, num_classes=1000, drop_rate=0.2, width_mult=1.0, round_nearest=8):
            """
            MobileNet V2 main class

            Args:
                num_classes (int): Number of classes
                drop_rate (float): Dropout layer drop rate
                width_mult (float): Width multiplier - adjusts number of channels in each layer by this amount
                round_nearest (int): Round the number of channels in each layer to be a multiple of this number.
                    Set to 1 to turn off rounding
            """
            super(MobileNetV2, self).__init__()
            input_channel = 32
            last_channel = 1280
            # t: expansion factor (t == 1 skips the 1x1 expansion)
            # c: number of output channels
            # n: number of times the block is repeated
            # s: stride of the first block in the group (2 halves height and width)
            inverted_residual_setting = [
                # t, c, n, s
                [1, 16, 1, 1],
                [6, 24, 2, 2],
                [6, 32, 3, 2],
                [6, 64, 4, 2],
                [6, 96, 3, 1],
                [6, 160, 3, 2],
                [6, 320, 1, 1],
            ]

            # building first layer
            input_channel = _make_divisible(input_channel * width_mult, round_nearest)
            self.last_channel = _make_divisible(last_channel * max(1.0, width_mult), round_nearest)
            features = [ConvBNReLU6(3, input_channel, stride=2)]
            # building inverted residual blocks
            for t, c, n, s in inverted_residual_setting:
                output_channel = _make_divisible(c * width_mult, round_nearest)
                for i in range(n):
                    stride = s if i == 0 else 1
                    features.append(InvertedResidual(input_channel, output_channel, stride, expand_ratio=t))
                    input_channel = output_channel
            # building last several layers
            features.append(ConvBNReLU6(input_channel, self.last_channel, kernel_size=1))
            # make it nn.Sequential
            self.features = nn.Sequential(*features)

            self.classifier = nn.Sequential(
                nn.Dropout(drop_rate),
                nn.Linear(self.last_channel, num_classes),
            )

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out')
                    if m.bias is not None:
                        nn.init.zeros_(m.bias)
                elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                    nn.init.ones_(m.weight)
                    nn.init.zeros_(m.bias)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.zeros_(m.bias)

        def forward(self, x):
            x = self.features(x)
            # Cannot use "squeeze" as batch-size can be 1 => must use reshape with x.shape[0]
            x = F.adaptive_avg_pool2d(x, (1, 1)).reshape(x.shape[0], -1)
            x = self.classifier(x)
            return x


    if __name__ == "__main__":
        import torchsummary

        device = 'cuda' if torch.cuda.is_available() else 'cpu'
        inputs = torch.ones(2, 3, 224, 224).to(device)
        net = MobileNetV2(num_classes=4)
        net = net.to(device)
        out = net(inputs)
        print(out)
        print(out.shape)
        torchsummary.summary(net, input_size=(3, 224, 224))

MobileNetV3

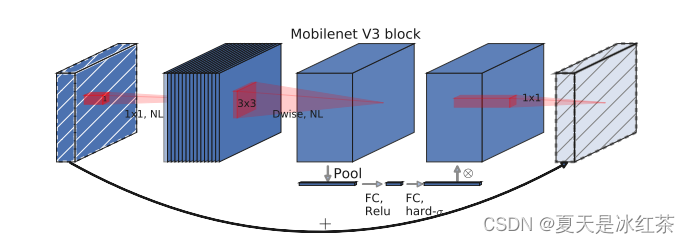

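Blocks in MobileNetV3

As the block diagram above shows, MobileNetV3 adds an SE module to the block and changes the activation function.

For more on the SE module, see: SE Channel Attention Module (CSDN blog)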
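For readers who do not follow the link, the core of an SE module fits in a few lines. The sketch below is a simplified pure-Python version on nested lists: squeeze (global average pooling), excitation (reduce, ReLU, expand, hard sigmoid), then per-channel scaling. Biases and the BatchNorm used in the SeModule class later in this article are left out, and the weights are made-up numbers chosen so the result is easy to follow.

```python
def relu(v):
    return max(0.0, v)


def hard_sigmoid(v):
    # ReLU6(v + 3) / 6, a piecewise-linear stand-in for the sigmoid
    return min(max(v + 3.0, 0.0), 6.0) / 6.0


def squeeze_excite(x, w_reduce, w_expand):
    """x: list of C channels, each an H x W list of lists.
    w_reduce: R x C weights, w_expand: C x R weights (biases omitted)."""
    # Squeeze: global average pooling gives one number per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: reduce -> ReLU -> expand -> hard sigmoid, one gate per channel.
    hidden = [relu(sum(w * p for w, p in zip(row, pooled))) for row in w_reduce]
    gates = [hard_sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_expand]
    # Scale: multiply every value of a channel by that channel's gate.
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(x, gates)]
    return scaled, gates


x = [
    [[1.0, 3.0], [5.0, 7.0]],  # channel 0, mean 4.0
    [[2.0, 2.0], [2.0, 2.0]],  # channel 1, mean 2.0
]
w_reduce = [[0.5, 0.5]]      # hypothetical: 2 channels -> 1 hidden unit
w_expand = [[1.0], [-1.0]]   # hypothetical: 1 hidden unit -> 2 gates

scaled, gates = squeeze_excite(x, w_reduce, w_expand)
print(gates)
print(scaled)
```

With these weights the first channel gets a gate of 1 and passes through unchanged, while the second gets a gate of 0 and is suppressed, which is the "channel attention" effect: the network learns which channels to emphasize for a given input.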

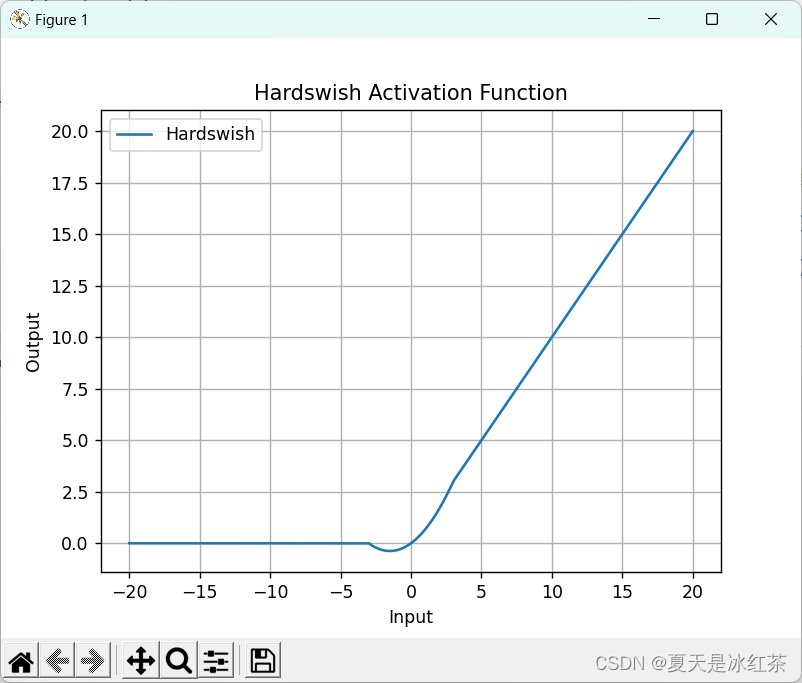

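Two different activation functions are used here: hardswish and ReLU. ReLU should already be familiar.

HardSwish is defined as:

$$\text{h-swish}(x) = x \cdot \frac{\text{ReLU6}(x + 3)}{6}$$

I wrote a hand-rolled version of hardswish to make it easier to understand, and checked it against the official implementation: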

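    import torch.nn as nn
    import torch.nn.functional as F


    class Hardswish(nn.Module):
        def __init__(self, inplace=False):
            super(Hardswish, self).__init__()
            self.inplace = inplace

        def _hardswish(self, x):
            # relu6(x + 3) / 6 is the hard-sigmoid gate. x + 3. creates a new
            # tensor, so the in-place div_ does not modify x.
            inner = F.relu6(x + 3.).div_(6.)
            return x.mul_(inner) if self.inplace else x.mul(inner)

        def forward(self, x):
            return self._hardswish(x)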

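The advantage of this design is that HardSwish keeps the non-linear shape of swish while replacing the sigmoid with the piecewise-linear ReLU6(x + 3) / 6. The function is exactly 0 for x ≤ -3 and exactly x for x ≥ 3, with a smooth quadratic transition in between, and it needs no exponential, which makes it cheaper to compute and friendlier to quantization on mobile hardware.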
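To see how closely the hard version tracks the original, the following scalar sketch evaluates both at a few points. It uses only the standard library; `swish` here means x · sigmoid(x), and the sample points are arbitrary.

```python
import math


def relu6(x):
    return min(max(x, 0.0), 6.0)


def hard_swish(x):
    return x * relu6(x + 3.0) / 6.0


def swish(x):
    return x / (1.0 + math.exp(-x))  # x * sigmoid(x)


points = (-4.0, -3.0, -1.0, 0.0, 1.0, 3.0, 4.0)
for x in points:
    print(f"{x:5.1f}  hard_swish={hard_swish(x):8.4f}  swish={swish(x):8.4f}")

max_gap = max(abs(hard_swish(x) - swish(x)) for x in points)
print("largest gap on these points:", max_gap)
```

Outside [-3, 3] the hard version is exactly 0 or exactly x, and inside that range it stays close to swish, so it can serve as a drop-in replacement while avoiding the exponential.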

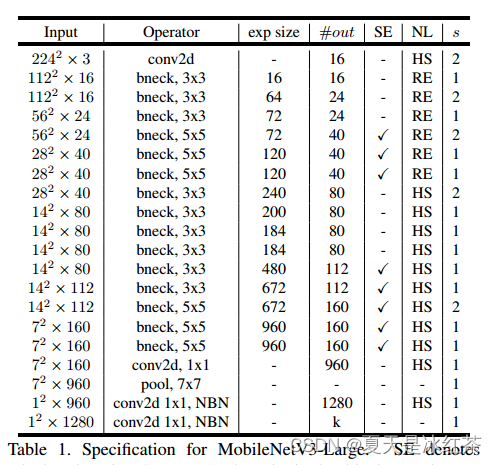

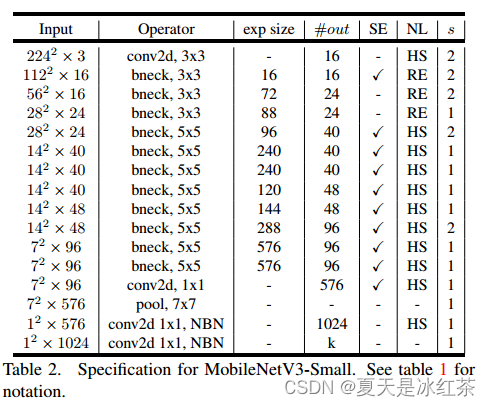

MobileNetV3 model implementation

The paper provides two variants, Large and Small.
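Both variants are described by a table of bottleneck rows, and a typo in such a table is easy to miss. The sketch below is a small consistency check on the Small configuration used in this article: every row's input channels must equal the previous row's output channels, and the strides must bring a 224x224 input down to 7x7. The helper names are hypothetical.

```python
# (kernel, in_chs, exp_chs, out_chs, act, use_se, stride)
small_cfg = [
    (3, 16, 16, 16, "RE", True, 2),
    (3, 16, 72, 24, "RE", False, 2),
    (3, 24, 88, 24, "RE", False, 1),
    (5, 24, 96, 40, "HS", True, 2),
    (5, 40, 240, 40, "HS", True, 1),
    (5, 40, 240, 40, "HS", True, 1),
    (5, 40, 120, 48, "HS", True, 1),
    (5, 48, 144, 48, "HS", True, 1),
    (5, 48, 288, 96, "HS", True, 2),
    (5, 96, 576, 96, "HS", True, 1),
    (5, 96, 576, 96, "HS", True, 1),
]


def check_channel_chain(cfg, stem_out):
    """Return the final channel count, or raise if two rows do not line up."""
    prev = stem_out
    for i, (_, in_chs, _, out_chs, _, _, _) in enumerate(cfg):
        if in_chs != prev:
            raise ValueError(f"row {i}: expects {in_chs} channels, previous row gives {prev}")
        prev = out_chs
    return prev


def final_resolution(cfg, input_size=224):
    """Spatial size after the stride-2 3x3 stem and all blocks (padding = (k - 1) // 2)."""
    def out_size(size, kernel, stride):
        return (size + 2 * ((kernel - 1) // 2) - kernel) // stride + 1

    size = out_size(input_size, 3, 2)
    for kernel, _, _, _, _, _, stride in cfg:
        size = out_size(size, kernel, stride)
    return size


last_channels = check_channel_chain(small_cfg, stem_out=16)
print(last_channels, final_resolution(small_cfg))
print(6 * last_channels)  # width of the final 1x1 convolution
```

The same check can be run on the Large table by pasting its rows into a second list.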

    import torch
    import torch.nn as nn
    from functools import partial


    def _make_divisible(v, divisor, min_value=None):
        """
        This function is taken from the original tf repo.
        It can be seen here:
        https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py

        Args:
            v: The number of channels to round.
            divisor: The number of channels should be a multiple of this value.
            min_value: The minimum number of channels, which defaults to the divisor.

        Returns:
            A channel number that is divisible by the divisor (8 in this model).
        """
        if min_value is None:
            min_value = divisor
        new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
        # Make sure that round down does not go down by more than 10%.
        if new_v < 0.9 * v:
            new_v += divisor
        return new_v


    class ConvBNActivation(nn.Module):
        def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1,
                     norm_layer=None, activation_layer=None, dilation=1):
            super(ConvBNActivation, self).__init__()
            padding = (kernel_size - 1) // 2 * dilation
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            if activation_layer is None:
                activation_layer = nn.ReLU6
            self.convbnact = nn.Sequential(
                nn.Conv2d(in_planes, out_planes, kernel_size, stride, padding, dilation=dilation,
                          groups=groups, bias=False),
                norm_layer(out_planes),
                # nn.Identity ignores the inplace argument, so it can be passed here too
                activation_layer(inplace=True),
            )
            self.out_channels = out_planes

        def forward(self, x):
            return self.convbnact(x)


    class SeModule(nn.Module):
        def __init__(self, input_channels, reduction=4):
            super(SeModule, self).__init__()
            expand_size = _make_divisible(input_channels // reduction, 8)
            self.se = nn.Sequential(
                nn.AdaptiveAvgPool2d(1),
                nn.Conv2d(input_channels, expand_size, kernel_size=1, bias=False),
                # BatchNorm on a 1x1 map needs a batch size > 1 in training mode
                nn.BatchNorm2d(expand_size),
                nn.ReLU(inplace=True),
                nn.Conv2d(expand_size, input_channels, kernel_size=1, bias=False),
                nn.Hardsigmoid(),
            )

        def forward(self, x):
            return x * self.se(x)


    class InvertedResidualv3(nn.Module):
        '''expand + depthwise + pointwise'''

        def __init__(self, kernel_size, input_channels, expanded_channels, out_channels,
                     activation, use_se, stride):
            super(InvertedResidualv3, self).__init__()
            self.stride = stride
            norm_layer = partial(nn.BatchNorm2d, eps=0.001, momentum=0.01)
            self.use_res_connect = stride == 1 and input_channels == out_channels
            activation_layer = nn.ReLU if activation == "RE" else nn.Hardswish

            layers = []
            # expand (skipped when the block does not widen the input)
            if expanded_channels != input_channels:
                layers.append(ConvBNActivation(input_channels, expanded_channels, kernel_size=1,
                                               norm_layer=norm_layer, activation_layer=activation_layer))
            # depthwise
            layers.append(ConvBNActivation(expanded_channels, expanded_channels, kernel_size=kernel_size,
                                           stride=stride, groups=expanded_channels,
                                           norm_layer=norm_layer, activation_layer=activation_layer))
            if use_se:
                layers.append(SeModule(expanded_channels))
            # pointwise projection, linear (no activation)
            layers.append(ConvBNActivation(expanded_channels, out_channels, kernel_size=1,
                                           norm_layer=norm_layer, activation_layer=nn.Identity))
            self.block = nn.Sequential(*layers)
            self.out_channels = out_channels

        def forward(self, x):
            result = self.block(x)
            if self.use_res_connect:
                result += x
            return result


    # The settings are stored as plain tuples and turned into modules inside
    # MobileNetV3.__init__, so that two model instances never share weights.
    _mobilenetv3_cfg = {
        "large": [
            [
                # kernel, in_chs, exp_chs, out_chs, act, use_se, stride
                (3, 16, 16, 16, "RE", False, 1),
                (3, 16, 64, 24, "RE", False, 2),
                (3, 24, 72, 24, "RE", False, 1),
                (5, 24, 72, 40, "RE", True, 2),
                (5, 40, 120, 40, "RE", True, 1),
                (5, 40, 120, 40, "RE", True, 1),
                (3, 40, 240, 80, "HS", False, 2),
                (3, 80, 200, 80, "HS", False, 1),
                (3, 80, 184, 80, "HS", False, 1),
                (3, 80, 184, 80, "HS", False, 1),
                (3, 80, 480, 112, "HS", True, 1),
                (3, 112, 672, 112, "HS", True, 1),
                # Last three rows follow Table 1 of the paper: the stride-2 block is
                # the 112 -> 160 one, and both 160 -> 160 blocks expand to 960.
                (5, 112, 672, 160, "HS", True, 2),
                (5, 160, 960, 160, "HS", True, 1),
                (5, 160, 960, 160, "HS", True, 1),
            ],
            _make_divisible(1280, 8),
        ],
        "small": [
            [
                # kernel, in_chs, exp_chs, out_chs, act, use_se, stride
                (3, 16, 16, 16, "RE", True, 2),
                (3, 16, 72, 24, "RE", False, 2),
                (3, 24, 88, 24, "RE", False, 1),
                (5, 24, 96, 40, "HS", True, 2),
                (5, 40, 240, 40, "HS", True, 1),
                (5, 40, 240, 40, "HS", True, 1),
                (5, 40, 120, 48, "HS", True, 1),
                (5, 48, 144, 48, "HS", True, 1),
                (5, 48, 288, 96, "HS", True, 2),
                (5, 96, 576, 96, "HS", True, 1),
                (5, 96, 576, 96, "HS", True, 1),
            ],
            _make_divisible(1024, 8),
        ],
    }


    class MobileNetV3(nn.Module):
        """
        MobileNet V3 main class

        Args:
            num_classes: Number of classes
            mode: "large" or "small"
            drop_rate: Dropout layer drop rate
        """

        def __init__(self, num_classes=1000, mode="large", drop_rate=0.2):
            super().__init__()
            if mode not in _mobilenetv3_cfg:
                raise ValueError(f'mode must be "large" or "small", got {mode!r}')
            norm_layer = partial(nn.BatchNorm2d, eps=0.001, momentum=0.01)
            layers = []
            block_settings, last_channel = _mobilenetv3_cfg[mode]

            # building first layer
            firstconv_output_channels = 16
            layers.append(ConvBNActivation(3, firstconv_output_channels, kernel_size=3, stride=2,
                                           norm_layer=norm_layer, activation_layer=nn.Hardswish))
            # building inverted residual blocks
            layers.append(nn.Sequential(*[InvertedResidualv3(*cfg) for cfg in block_settings]))
            # building last several layers
            lastconv_input_channels = 96 if mode == "small" else 160
            lastconv_output_channels = 6 * lastconv_input_channels
            layers.append(ConvBNActivation(lastconv_input_channels, lastconv_output_channels, kernel_size=1,
                                           norm_layer=norm_layer, activation_layer=nn.Hardswish))

            self.features = nn.Sequential(*layers)
            self.avgpool = nn.AdaptiveAvgPool2d(1)
            self.classifier = nn.Sequential(
                nn.Linear(lastconv_output_channels, last_channel),
                nn.Hardswish(inplace=True),
                nn.Dropout(p=drop_rate, inplace=True),
                nn.Linear(last_channel, num_classes),
            )

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out')
                    if m.bias is not None:
                        nn.init.zeros_(m.bias)
                elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                    nn.init.ones_(m.weight)
                    nn.init.zeros_(m.bias)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.zeros_(m.bias)

        def forward(self, x):
            x = self.features(x)
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.classifier(x)
            return x


    def MobileNetV3_Large(num_classes):
        """Large version of mobilenet_v3"""
        return MobileNetV3(num_classes=num_classes, mode="large")


    def MobileNetV3_Small(num_classes):
        """Small version of mobilenet_v3"""
        return MobileNetV3(num_classes=num_classes, mode="small")


    if __name__ == "__main__":
        import torchsummary

        device = 'cuda' if torch.cuda.is_available() else 'cpu'
        inputs = torch.ones(2, 3, 224, 224).to(device)
        net = MobileNetV3_Large(num_classes=4)
        net = net.to(device)
        out = net(inputs)
        print(out)
        print(out.shape)
        torchsummary.summary(net, input_size=(3, 224, 224))

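Other notes

As usual, once a model is implemented I test its classification performance. One thing puzzled me: for MobileNetV3 the validation loss kept rising and became increasingly unreasonable, reaching roughly 10 to 20. For a while I suspected that my training script, which I was still revising at the time, was computing something incorrectly. I then reran the earlier networks, and both MobileNetV1 and MobileNetV2 behaved normally. I also ran the same experiment with the official implementation (MobileNetV3 from torchvision) and saw the same problem, with a validation loss above ten, so I am still puzzled by this.

The issue is unresolved for now, so I am leaving it here.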

