This article shows how to use Python to scrape the summary of a Baidu Baike entry, together with the summaries of the entries it links to internally.

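Note that the initial Chinese entry name must be percent-encoded before it is placed in the URL. Without the conversion, the request fails with UnicodeEncodeError: 'ascii' codec can't encode characters in position 10-12: ordinal not in range(128).

For example: 爱奇艺 ---> urllib.parse.quote("爱奇艺") ---> %E7%88%B1%E5%A5%87%E8%89%BA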
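The conversion can be tried on its own before any request is made. The following is a minimal sketch using only the standard library; the variable names `entry`, `encoded`, and `path` are chosen here for illustration and do not come from the original program.

```python
from urllib.parse import quote, unquote

entry = "爱奇艺"

# quote() encodes the text as UTF-8 and writes each byte as %XX
encoded = quote(entry)
print(encoded)  # %E7%88%B1%E5%A5%87%E8%89%BA

# "/" is left unescaped by default (safe="/"), so a whole path can be quoted at once
path = quote("/item/" + entry)
print("https://baike.baidu.com" + path)

# unquote() reverses the encoding
assert unquote(encoded) == entry
```

Because `/` is in the default `safe` set, quoting the full path `/item/爱奇艺` gives the same result as quoting only the entry name and prepending `/item/`.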

Source code

from urllib.request import urlopen

import urllib

from bs4 import BeautifulSouppages = set()

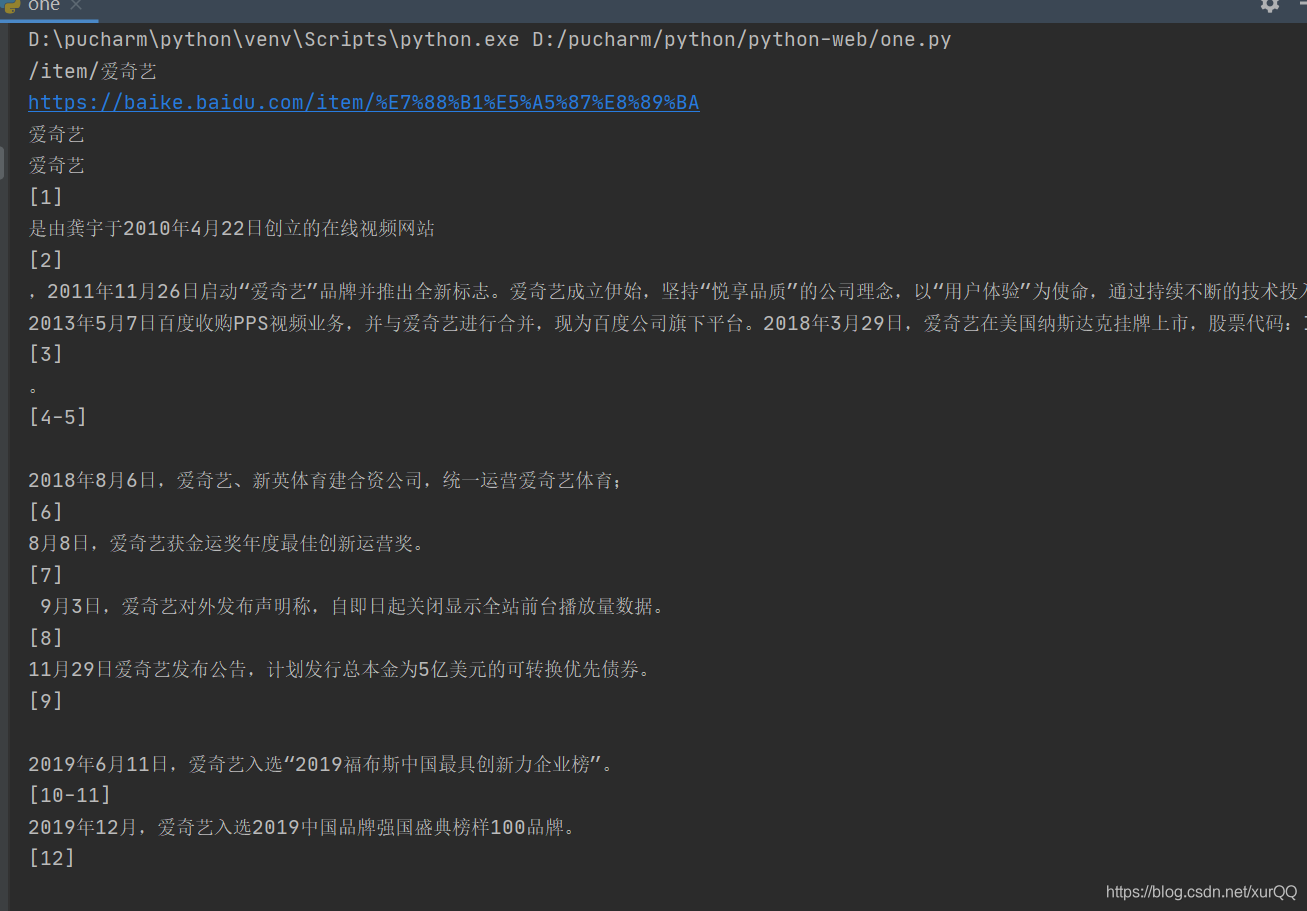

def getLink(pageurl):global pagesheaders = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3100.0 Safari/537.36"}print('https://baike.baidu.com{}'.format(pageurl))html = urlopen(urllib.request.Request('https://baike.baidu.com{}'.format(pageurl), headers=headers))bs = BeautifulSoup(html, 'html.parser')try:print(bs.h1.get_text())for text in bs.find('div', {'class': 'lemma-summary'}).find_all('div', {'class': 'para'}):print(text.get_text())for link in bs.find('div', {'class': 'para'}).find_all('a'):if 'href' in link.attrs:print(link.attrs['href'])else:print('-----非链接-----')print(link)print('-----非链接-----')except AttributeError:print("yemianqieshaoshuxing")for link in bs.find('div', {'class': 'para'}).find_all('a', href=re.compile('^(/item/)')):if 'href' in link.attrs:if link.attrs['href'] not in pages:newpage = link.attrs['href']print('-'*20)print(newpage)pages.add(newpage)getLink(newpage)get_ = '/item/爱奇艺'

print(get_)

getLink(urllib.parse.quote(get_))
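The `getLink` function above calls itself for every new link and never stops on its own, so a long chain of entries can run into Python's recursion limit. One possible alternative, sketched below, replaces the recursion with a queue and a page cap. This is a different traversal order (breadth-first rather than the depth-first order of the original), and `crawl`, `get_links`, `max_pages`, and the `fake_links` graph are hypothetical names introduced here for illustration; in real use `get_links` would fetch a page and return its `/item/` hrefs.

```python
from collections import deque


def crawl(start, get_links, max_pages=50):
    """Visit pages breadth-first, stopping after max_pages; return the visit order."""
    seen = {start}          # plays the role of the global `pages` set
    queue = deque([start])
    order = []
    while queue and len(order) < max_pages:
        page = queue.popleft()
        order.append(page)
        for link in get_links(page):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


# Stand-in link graph so the sketch runs without network access
fake_links = {
    "/item/A": ["/item/B", "/item/C"],
    "/item/B": ["/item/A", "/item/D"],
    "/item/C": [],
    "/item/D": ["/item/A"],
}

visited = crawl("/item/A", lambda page: fake_links.get(page, []), max_pages=3)
print(visited)  # ['/item/A', '/item/B', '/item/C']
```

Passing the link extractor in as a function keeps the traversal separate from the HTML parsing, so the stopping condition can be checked offline.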
