本文主要是介绍旋律生成学习日记(二)歌词生成旋律LSTM+GAN,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

前几天做了一个LSTM生成旋律的程序,虽然失败了,但也不能说是全失败对吧。于是为了证明自己,我又去关注了一下前沿的文章,我发现个更妙的东西,别说这个领域虽然小众,但是各个都是狠人啊。简而言之就是,由歌词生成旋律。离谱对吧。

文章链接:https://arxiv.org/pdf/1908.05551.pdf

原文代码链接:GitHub - yy1lab/Lyrics-Conditioned-Neural-Melody-Generation

因为原作者是tensorflow1写的,我是tensorflow2,所以我把自己更改过的,以及完整程序链接上传,需要自取吧。

链接:https://pan.baidu.com/s/1k7N4nRzyUNSPmoaGQnSgvg?pwd=0312

提取码:0312

话不多说!!!进入正题!

作者用的数据集是一个他们自己做的一个由12197首歌词和旋律对齐的MIDI歌曲组成的大型数据集。需要的可以去gitup上的链接下载。

先补课补课!!不能继续瞎玩下去了,要走理论路线。

旋律

旋律和歌词为理解一首丰富的人类情感、文化和活动的歌曲提供了补充信息。旋律作为一个包含音符的时间序列,在其中扮演者重要的角色。一个音符包含两个音乐属性:音高和音长。音高是声音的感知属性,它在与频率相关的尺度上通过高低来组织音乐,可以按照模式演奏来创造旋律。在音乐中,持续时间表示音高或音符响起的时间长度,休止符是乐曲中停顿的时间间隔,用符号表示停顿的长度。这篇文章的思想是把休息时间作为一个三连音序列所包含的音乐属性之一。

歌词

歌词作为自然语言,代表音乐主题和故事,是创造音乐有意义印象的非常重要的元素。一个英语音节是一个声音单位,它可能是一个词,也可能是一个词的一部分。根据时间戳信息在音乐曲目的MIDI文件中,旋律和歌词被同步在一起,以解析数据并提取对齐信息。

旋律——歌词对齐例子

歌词和旋律之间的对齐可以是一个音节对一个音符或一个音节到多个音符的成对序列。

文章所有的训练是一对单音节对一个音符来训练LSTM-GAN模型从歌词生成旋律。

数据集

如果想训练自己的数据,那就需要自己先做一步预处理,它不像我前一篇LSTM那样直接用MIDI文件训练。

(1)如果初始文件是MIDI(.mid)文件,MIDI文件里是包括音符、音高、持续时间和乐器等数据的。在数据处理过程中,需要将 MIDI 文件转换为 NumPy 数组,保存成一个单独的.npy 文件。

如果你不会写转化代码,你可以参考下:MusicCritique/create_database.py at master · josephding23/MusicCritique · GitHub

好吧我贴出来

转换后应该是这样的文件。

当然到了这一步还不算构成正儿八经的数据集哈。你还得再次构成一个数据集矩阵。

#!/usr/bin/env python

# coding: utf-8# # Creating matrices from dataset# In[4]:from gensim.models import Word2Vec

import os

import numpy as np

import pandas as pd

import pickle# In[5]:seqlength = 20

num_midi_features = 3

num_sequences_per_song = 2

training_rate = 0.8

validation_rate = 0.1

test_rate = 0.1syll_model_path = './enc_models/syllEncoding_20190419.bin'

word_model_path = './enc_models/wordLevelEncoder_20190419.bin'

songs_path = './data/songs_word_level'print('Creating a dataset with sequences of length', seqlength, 'with', num_sequences_per_song, 'sequences per song')syllModel = Word2Vec.load(syll_model_path)

wordModel = Word2Vec.load(word_model_path)

syll2Vec = syllModel.wv['Hello']

word2Vec = wordModel.wv['world']

num_syll_features = len(syll2Vec) + len(word2Vec)

print('Syllable embedding length :', num_syll_features)# In[6]:files = os.listdir(songs_path)

num_songs = len(files)

print("Total number of songs : ", num_songs)# In[7]:print(files)# In[8]:num_syll_features, num_midi_features, seqlength# In[9]:# inspect content of sample file

sample_file = files[0]

sample_features = np.load(os.path.join(songs_path, sample_file), allow_pickle=True) # load midi files to feature

len(sample_features), len(sample_features[0])# In[10]:print(len(sample_features[0][0]))

print(sample_features[0][0])# In[11]:print(len(sample_features[0][1]))

print(sample_features[0][1])# In[12]:print(len(sample_features[0][2]))

print(sample_features[0][2]) # In[13]:print(len(sample_features[0][3]))

print(sample_features[0][3])# In[14]:# for a file, we will work with features[0][1] & features[0][3] representing

# list of midi_list & list of syll_list# In[15]:data_matrix = np.zeros(shape=(num_sequences_per_song*num_songs, (num_syll_features + num_midi_features) * seqlength))

data_matrix.shape# In[16]:seq_filename_list = [] # to keep track of filename from which a sequence is extracted

small_file_cntr = 0 # to keep track of files with less than 20 syllable-note pairs# In[15]:# load all the songs, cut to 20 note-sequence, convert to song embeddindsi = 0

j = 0for file in files:features = np.load(os.path.join(songs_path, file), allow_pickle=True) # load midi files to featureif len(features[0][1]) >= seqlength: # seqlength = 20, if length of song > 20 notej = 0for midiList, syllList in zip(features[0][1], features[0][3]):word = ''for syll in syllList:#print(syll)word = word + syllif word in list(wordModel.wv.vocab):word2Vec = wordModel.wv[word]for midi, syll in zip(midiList, syllList):if syll in list(syllModel.wv.vocab):syll2Vec = syllModel.wv[syll]syllWordVec = np.concatenate((syll2Vec,word2Vec)) # joint embedding = syllabus + wordsif j < seqlength:data_matrix[i][num_midi_features * j:num_midi_features * j + num_midi_features] = midi # append mididata_matrix[i][num_midi_features * seqlength + num_syll_features * j:num_midi_features * seqlength + num_syll_features * (j + 1)] = syllWordVec # append joint embeddingj += 1else:breaki += 1seq_filename_list.append(file)#print(syllWordVec)if i%100 == 0:print("sequence ", i)else: # seqlength < 20small_file_cntr += 1if len(features[0][1]) >= 2*seqlength: # if length of song > 40 notej = 0for midiList, syllList in zip(features[0][1][seqlength:], features[0][3][seqlength:]): # cut one more from note 21word = ''for syll in syllList:word = word + syllif word in list(wordModel.wv.vocab):word2Vec = wordModel.wv[word]for midi, syll in zip(midiList, syllList):if syll in list(syllModel.wv.vocab):syll2Vec = syllModel.wv[syll]syllWordVec = np.concatenate((syll2Vec,word2Vec))if j < seqlength:data_matrix[i][num_midi_features * j:num_midi_features * j + num_midi_features] = mididata_matrix[i][num_midi_features * seqlength + num_syll_features * j:num_midi_features * seqlength + num_syll_features * (j + 1)] = syllWordVecj += 1else:breaki += 1seq_filename_list.append(file)if i%100 == 0:print("sequence number ", i)# In[95]:print('There are {} files out of {} with less than 20 syllable-note pairs hence not considered.'.format(small_file_cntr, len(files)))# In[18]:data_matrix = data_matrix[0:i, :]

data_matrix.shape# In[16]:# dump sequence filename list & data matrixpickle.dump(seq_filename_list, open('./data/dataset_filenames/full_filename_list.pkl', 'wb'))

np.save('./data/dataset_matrices/full_data_matrix.npy', data_matrix)# In[18]:# end of the data matrix generation# In[26]:# creating train, valid & test matrix from data matrix # In[27]:data_matrix.shape# In[28]:len(data_matrix), 0.80 * len(data_matrix), 0.20 * len(data_matrix)# In[17]:# we split the data into 80% train with 11149 sequences and 10% validation & test with 1394 sequences each.# we want all the sequences extracted from a file to be present exclusively in one set (train/validation/test) only.# this allows us to bind files to specific sets (train/validation/test)# this strategy gives us freedom to reconstruct data matrices with different sequence lengths, lyrics encoders etc.# In[31]:print('Out of {} sequence {} sequences are unique'.format(len(data_matrix), len(np.unique(data_matrix, axis=0))))# In[33]:# assign the first 11149 sequences in the data matrix to the training settrain_data_matrix = data_matrix[:11149]

train_filename_list = seq_filename_list[:11149]

train_data_matrix.shape, len(train_filename_list), train_filename_list[-2:]# In[34]:# remove duplicates and create test & valildation set# In[35]:data_matrix_df = pd.DataFrame(data_matrix)

is_duplicated_df = data_matrix_df.duplicated()

is_duplicated_df.values.sum() # 13937 - 11824# In[36]:clean_data_matrix_df = data_matrix_df.drop_duplicates() # clean_data_matrix_df contains no duplicate sequences

clean_data_matrix_df.shape# In[37]:clean_data_matrix_df_index = clean_data_matrix_df.index

print(len(clean_data_matrix_df_index), list(clean_data_matrix_df_index))# In[38]:# find indices from clean_data_matrix_df_index which are not part of training set

clean_data_matrix_df_non_train_index = clean_data_matrix_df_index[clean_data_matrix_df_index >= 11149]

print(len(clean_data_matrix_df_non_train_index), list(clean_data_matrix_df_non_train_index))# In[39]:valid_test_data_matrix = data_matrix[clean_data_matrix_df_non_train_index]

valid_test_filename_list = np.asarray(seq_filename_list)[clean_data_matrix_df_non_train_index].tolist()# In[40]:valid_test_data_matrix.shape, len(valid_test_filename_list)# In[41]:# out of 2102 sequences present in valid_test_matrix, first 50% i.e. 1051 sequences will form validation matrix

# and remaining 1051 sequences will form the test matrix# In[42]:valid_data_matrix = valid_test_data_matrix[:1051]

valid_filename_list = valid_test_filename_list[:1051]test_data_matrix = valid_test_data_matrix[1051:]

test_filename_list = valid_test_filename_list[1051:]valid_data_matrix.shape, len(valid_filename_list), test_data_matrix.shape, len(test_filename_list)# In[43]:# end of data matrix creation and post processing# In[56]:# save matrix and file list for different sets# In[57]:np.save('./data/dataset_matrices/train_data_matrix.npy', train_data_matrix)

np.save('./data/dataset_matrices/valid_data_matrix.npy', valid_data_matrix)

np.save('./data/dataset_matrices/test_data_matrix.npy', test_data_matrix)# In[58]:pickle.dump(train_filename_list, open('./data/dataset_filenames/train_filename_list.pkl', 'wb'))

pickle.dump(valid_filename_list, open('./data/dataset_filenames/valid_filename_list.pkl', 'wb'))

pickle.dump(test_filename_list, open('./data/dataset_filenames/test_filename_list.pkl', 'wb'))# In[ ]:# end of the notebookLSTM-GAN模型

以歌词作为输入,我们的目标是预测一个与歌词顺序对齐的旋律,其中MIDI数字、音符持续时间和休息时间时长与歌词合成,生成歌曲。

由于歌词数据的词汇量很大,没有任何标签,需要事先训练一个无监督模型,它可以学习任何单词或音节的上下文。具体来说就是利用歌词数据来训练一个skip-gram模型,这使我们能够获得可以在给定歌词的上下文中编码语言规律和模式的向量表示。文章提出的方法在两个不同的语义级别上对歌词信息进行编码:音节级和单词级,并且分别训练了两个skip-gram模型,其目的是将向量表示与英语音节关联起来。具体来说,每首歌的歌词都被划分为句子,每个句子都被划分为单词,每个单词又被进一步划分为音节。单词作为符号训练词级嵌入模型,音节作为训练音节级嵌入模型的标记。

LSTM-GAN

LSTM被训练来学习歌词和旋律序列之间的语义和关系。GAN在考虑歌词和旋律之间的音乐对齐关系的基础上,被训练为在给定歌词作为输入时预测旋律。

emmm。。整体就这样,还有些细节感兴趣的可以自己看,我主要是对代码比较感兴趣,理论对我而言点到为止即可。

代码实在太长了我就不贴了,反正自己下,代码基本没有啥问题,自己改的时候也是因为实在是懒得换tensorflow的版本,下次我还是找找torch的吧。

训练生成模型,还是得试试具体效果怎么样。

从歌词生成旋律

#!/usr/bin/env python

# coding: utf-8# In[14]:import numpy as np

#import tensorflow as tf

import tensorflow.compat.v1 as tfimport midi_statistics

import utils

import os

from gensim.models import Word2Vec# In[15]:syll_model_path = './enc_models/syllEncoding_20190419.bin'

word_model_path = './enc_models/wordLevelEncoder_20190419.bin'# In[16]:syllModel = Word2Vec.load(syll_model_path)

wordModel = Word2Vec.load(word_model_path)'''

lyrics = [['Must','Must'],['have','have'],['been','been'],['love','love'],['but','but'],['its','its'],['o','over'],['ver','over'],['now','now'],['lay','lay'],['a','a'],['whis','whisper'],['per','whisper'],['on','on'],['my','my'],['pil','pillow'],['low','pillow']]

lyrics = [['Then','Then'],['the','the'],['rain','rainstorm'],['storm','rainstorm'],['came','came'],['ov','over'],['er','over'],['me','me'],['and','and'],['i','i'],['felt','felt'],['my','my'],['spi','spirit'],['rit','spirit'],['break','break']]

lyrics = [['E','Everywhere'],['very','Everywhere'],['where','Everywhere'],['I','I'],['look','look'],['I','I'],['found','found'],['you','you'],['look','looking'],['king','looking'],['back','back']]

'''

lyrics = [['baby','baby'],['oh','my'],['big','baby'],['big','love'],['yes','baby'],['go','oh'],['yes','go'],['beautiful','number'],['one','one'],['come','go'],['yeah','beautiful'],['wo','shi'],['da','da'],['ge','ge']]length_song = len(lyrics)

cond = []for i in range(20):if i < length_song:syll2Vec = syllModel.wv[lyrics[i][0]]word2Vec = wordModel.wv[lyrics[i][1]]cond.append(np.concatenate((syll2Vec,word2Vec)))else:cond.append(np.concatenate((syll2Vec,word2Vec)))flattened_cond = []

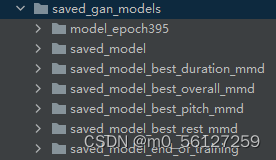

for x in cond:for y in x:flattened_cond.append(y)# In[13]:model_path = './saved_gan_models/saved_model_best_overall_mmd'

# model_path = './saved_gan_models/saved_model_end_of_training'x_list = []

y_list = []with tf.Session(graph=tf.Graph()) as sess:tf.saved_model.loader.load(sess, [], model_path)graph = tf.get_default_graph()keep_prob = graph.get_tensor_by_name("model/keep_prob:0")input_metadata = graph.get_tensor_by_name("model/input_metadata:0")input_songdata = graph.get_tensor_by_name("model/input_data:0")output_midi = graph.get_tensor_by_name("output_midi:0")feed_dict = {}feed_dict[keep_prob.name] = 1.0condition = []feed_dict[input_metadata.name] = conditionfeed_dict[input_songdata.name] = np.random.uniform(size=(1, 20, 3))condition.append(np.split(np.asarray(flattened_cond), 20))feed_dict[input_metadata.name] = conditiongenerated_features = sess.run(output_midi, feed_dict)sample = [x[0, :] for x in generated_features]sample = midi_statistics.tune_song(utils.discretize(sample))midi_pattern = utils.create_midi_pattern_from_discretized_data(sample[0:length_song])destination = "test.mid"midi_pattern.write(destination)print('done')直接输入单词,千万别用中文,不然会报错,我自己试了下跑了下效果,和我自己跑的LSTM比起来,效果强了很多,能明显感觉到旋律的变化,但是离我想象中的效果还差了很多,跟AI生成图像的效果比起来,AI生成音乐着实有些拉跨了。

这篇关于旋律生成学习日记(二)歌词生成旋律LSTM+GAN的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!