Image Capture: Stereo 360 Image and Video Capture

We are proud to announce that in 2018.1 creators can now capture stereoscopic 360 images and video in Unity. Whether you’re a VR developer who wants to make a 360 trailer to show off your experience or a director who wants to make an engaging cinematic short film, Unity’s new capture technology empowers you to share your immersive experience with an audience of millions on platforms such as YouTube, Within, Jaunt, Facebook 360, or Steam 360 Video. Download the beta version of Unity 2018.1 today to start capturing.

How to Use This Feature

Our device-independent stereo 360 capture technique is based on Google’s Omni-directional Stereo (ODS) technology and uses stereo cubemap rendering. We support rendering to stereo cubemaps natively in Unity’s graphics pipeline, in both the Editor and the PC standalone player. After the stereo cubemaps are generated, we can convert them to stereo equirectangular maps, the projection format used by 360 video players.
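
To make the equirectangular format concrete, here is a minimal sketch of how a view direction maps to a pixel coordinate in such an image. It is plain C# and not part of Unity's API: the class and method names are hypothetical, and the conventions (+Z forward at the image center, +Y up, v = 0 at the bottom edge) are assumptions chosen to match Unity's usual axes. The `StackedV` helper illustrates an over-under stereo layout; which eye lands in which half depends on the player or converter, so that is left as a parameter.

```csharp
using System;

// Hypothetical helper, for illustration only (not a Unity API).
public static class EquirectMapping
{
    // Maps a unit direction to equirect coordinates (u, v), each in [0, 1].
    // Assumed convention: +Z forward maps to the image center, +Y is up,
    // u grows to the right and v grows upward.
    public static (double u, double v) DirectionToUv(double x, double y, double z)
    {
        double longitude = Math.Atan2(x, z);                        // -pi .. pi
        double latitude = Math.Asin(Math.Max(-1.0, Math.Min(1.0, y))); // -pi/2 .. pi/2
        double u = longitude / (2.0 * Math.PI) + 0.5;
        double v = latitude / Math.PI + 0.5;
        return (u, v);
    }

    // Over-under stereo packing: squeezes one eye's v range into the top
    // or bottom half of a shared image.
    public static double StackedV(double v, bool topHalf)
    {
        return topHalf ? 0.5 + 0.5 * v : 0.5 * v;
    }
}
```

Under these assumptions, looking straight ahead lands at the center of the image, and the full 360 degrees of longitude spans the image width, which is why equirect frames are typically twice as wide as one eye's image is tall.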

Capturing a scene in the Editor or the standalone player is as simple as calling Camera.RenderToCubemap() once per eye:

```csharp
// Eye separation (IPD) of 64 mm; the property is a float, so the literal needs the f suffix.
camera.stereoSeparation = 0.064f;
// A face mask of 63 (binary 111111) renders all six cubemap faces.
camera.RenderToCubemap(cubemapLeftEye, 63, Camera.MonoOrStereoscopicEye.Left);
camera.RenderToCubemap(cubemapRightEye, 63, Camera.MonoOrStereoscopicEye.Right);
```

During the capture of each eye, we enable a shader keyword that warps each vertex position in the scene using the shader function ODSOffset(), which performs the per-eye projection and offset.
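
The geometry behind that per-eye offset can be sketched on the CPU. In omni-directional stereo, both eyes sit on a circle whose diameter is the eye separation, and each viewing ray leaves the circle along a tangent. The code below is an illustration of that ray model only, not Unity's ODSOffset() shader function (which works on vertices rather than rays); the class and method names are hypothetical, and it assumes Unity-style axes (+Y up, +Z forward at azimuth 0, +X to the right).

```csharp
using System;
using System.Numerics;

// Hypothetical helper illustrating the ODS ray model (not Unity's shader code).
public static class OdsGeometry
{
    // Unit view direction for a given azimuth (around +Y) and elevation.
    public static Vector3 ViewDirection(float azimuth, float elevation)
    {
        float cosEl = (float)Math.Cos(elevation);
        return new Vector3(
            (float)Math.Sin(azimuth) * cosEl,
            (float)Math.Sin(elevation),
            (float)Math.Cos(azimuth) * cosEl);
    }

    // Ray origin for one eye, relative to the camera center. The eye sits
    // half the IPD to the side of the viewing direction, so it moves around
    // a circle as the azimuth changes.
    public static Vector3 EyeOrigin(float azimuth, float ipd, bool rightEye)
    {
        var right = new Vector3((float)Math.Cos(azimuth), 0f, -(float)Math.Sin(azimuth));
        float radius = 0.5f * ipd;
        return right * (rightEye ? radius : -radius);
    }
}
```

For example, with an IPD of 0.064 and azimuth 0, the right eye's rays start 0.032 units along +X and the left eye's rays start 0.032 units along -X, and both origins stay perpendicular to the horizontal viewing direction at every azimuth.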

Stereo 360 capture works in forward and deferred lighting pipelines, with screen space and cubemap shadows, skybox, MSAA, HDR and the new post processing stack. For more info, see our new stereo 360 capture API.

To convert cubemaps to stereo equirectangular maps, call RenderTexture.ConvertToEquirect():

```csharp
// Both eyes are written into the same equirect render texture.
cubemapLeftEye.ConvertToEquirect(equirect, Camera.MonoOrStereoscopicEye.Left);
cubemapRightEye.ConvertToEquirect(equirect, Camera.MonoOrStereoscopicEye.Right);
```
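
The two snippets above can be combined into one component. The following is a minimal sketch rather than an official sample: the class name, texture sizes, output file name, and the context-menu trigger are all assumptions made for illustration, and it has to run inside Unity 2018.1 or later. It allocates the cube render textures, captures both eyes, converts them to a single equirect texture, and writes that texture to a PNG.

```csharp
using System.IO;
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical component: attach it to the Camera you want to capture.
[RequireComponent(typeof(Camera))]
public class Stereo360CaptureSketch : MonoBehaviour
{
    public int cubemapSize = 1024;      // assumed per-face resolution
    public int equirectWidth = 2048;    // assumed output size
    public int equirectHeight = 2048;
    public float stereoSeparation = 0.064f;

    RenderTexture cubemapLeftEye, cubemapRightEye, equirect;

    RenderTexture CreateCubemap()
    {
        var rt = new RenderTexture(cubemapSize, cubemapSize, 24, RenderTextureFormat.ARGB32);
        rt.dimension = TextureDimension.Cube; // render target must be a cubemap
        return rt;
    }

    void EnsureTargets()
    {
        if (cubemapLeftEye == null) cubemapLeftEye = CreateCubemap();
        if (cubemapRightEye == null) cubemapRightEye = CreateCubemap();
        if (equirect == null)
            equirect = new RenderTexture(equirectWidth, equirectHeight, 0, RenderTextureFormat.ARGB32);
    }

    [ContextMenu("Capture Stereo 360 Frame")]
    public void CaptureFrame()
    {
        EnsureTargets();
        var cam = GetComponent<Camera>();
        cam.stereoSeparation = stereoSeparation;
        cam.RenderToCubemap(cubemapLeftEye, 63, Camera.MonoOrStereoscopicEye.Left);
        cam.RenderToCubemap(cubemapRightEye, 63, Camera.MonoOrStereoscopicEye.Right);
        cubemapLeftEye.ConvertToEquirect(equirect, Camera.MonoOrStereoscopicEye.Left);
        cubemapRightEye.ConvertToEquirect(equirect, Camera.MonoOrStereoscopicEye.Right);
        SavePng(equirect, Path.Combine(Application.persistentDataPath, "stereo360.png"));
    }

    // Reads the render texture back to the CPU and writes it as a PNG.
    static void SavePng(RenderTexture source, string path)
    {
        var previous = RenderTexture.active;
        RenderTexture.active = source;
        var tex = new Texture2D(source.width, source.height, TextureFormat.RGBA32, false);
        tex.ReadPixels(new Rect(0, 0, source.width, source.height), 0, 0);
        tex.Apply();
        RenderTexture.active = previous;
        File.WriteAllBytes(path, tex.EncodeToPNG());
        Destroy(tex);
    }

    void OnDestroy()
    {
        if (cubemapLeftEye != null) cubemapLeftEye.Release();
        if (cubemapRightEye != null) cubemapRightEye.Release();
        if (equirect != null) equirect.Release();
    }
}
```

Calling `CaptureFrame()` once per rendered frame and numbering the output files would give an image sequence, although the synchronous read-back in `SavePng` is slow and is only meant to show the data flow.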

Using Unity’s frame recorder, a sequence of these equirect images can be captured as the frames of a stereo 360 video. The video can then be posted on video websites that support 360 playback, or played inside your app using Unity’s 360 video playback, introduced in 2017.3.

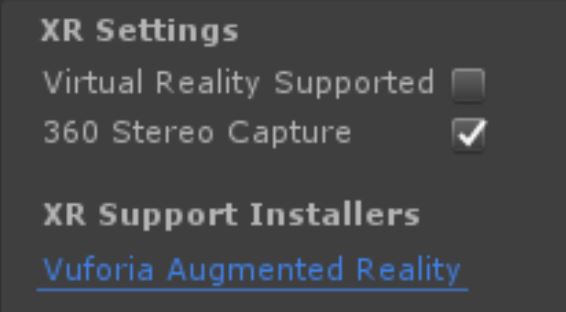

For the PC standalone player, you need to enable the “360 Stereo Capture” option in your build (see below) so that Unity generates 360 capture enabled shader variants which are disabled by default in normal player builds.

In practice, most 360 capture work can be done on the PC in the Editor’s Play mode.

For VR applications, we recommend disabling VR in the Editor when capturing 360 stereo cubemaps (our stereo 360 capture method doesn’t require VR hardware). This will speed up capture without affecting the captured results.

Technical Notes on Stereo 360 Capture

For those of you using your own shaders or implementing your own shadowing algorithms, here are some additional notes to help you integrate with Unity stereo 360 capture.

We added an optional shader keyword: STEREO_CUBEMAP_RENDER. When enabled, this keyword modifies UnityObjectToClipPos() to include additional shader code that transforms positions with the ODSOffset() function (see the UnityShaderUtilities file in 2018.1). The keyword also lets the engine set up the proper stereo 360 capture rendering.

If you are implementing screen space shadows, there is an additional issue: the world space position reconstructed from the depth map (which already has the ODS offset applied) and the view ray is not the original world space position. This affects the shadow lookup in light space, which expects the true world position. The view ray is also based on the original camera rather than on ODS space.

One way to solve this is to render the scene so that world positions map one-to-one to the screen space shadow map, writing the world positions (unmodified by the ODS offset) into a float texture. This texture then supplies the true world positions used to look up shadows in light space. You can also use a 16-bit float texture if you know the scene fits within 16-bit float precision, given the scene center and world bounds.
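
Whether a scene "fits" in 16-bit floats can be estimated from the format itself. An IEEE half float has a 10-bit mantissa and a largest finite value of 65504, so the gap between adjacent representable values doubles every time the magnitude doubles. The sketch below is plain C# with hypothetical names; it assumes positions are stored relative to the scene center and that you pick an acceptable position error (`tolerance`) yourself.

```csharp
using System;

// Hypothetical helper for deciding between 16-bit and 32-bit float textures.
public static class HalfPrecisionCheck
{
    const double HalfMax = 65504.0; // largest finite IEEE half value

    // Spacing between adjacent half-float values near the given magnitude.
    public static double HalfUlp(double value)
    {
        double magnitude = Math.Abs(value);
        double binadeStart = Math.Pow(2.0, -14); // smallest normal half value
        double ulp = Math.Pow(2.0, -24);         // spacing below 2^-13
        while (binadeStart * 2.0 <= magnitude)
        {
            binadeStart *= 2.0;
            ulp *= 2.0;
        }
        return ulp;
    }

    // True if positions up to maxExtentFromCenter (measured from the scene
    // center) can be stored in half floats with spacing no worse than tolerance.
    public static bool FitsInHalf(double maxExtentFromCenter, double tolerance)
    {
        if (maxExtentFromCenter > HalfMax) return false;
        return HalfUlp(maxExtentFromCenter) <= tolerance;
    }
}
```

With these assumptions, a scene that extends 8 units from its center has a worst-case spacing of about 0.0078 units, while one that extends 1000 units has a spacing of 0.5 units, which would likely produce visible shadow artifacts and calls for a 32-bit float texture instead.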

We’d love to see what you’re creating. Share links to your 360 videos on Unity Connect or tweet with #madewithunity. Also, remember this feature is experimental. Please give us your feedback and engage with us on our 2018.1 beta forum.

Translated from: https://blogs.unity3d.com/2018/01/26/stereo-360-image-and-video-capture/
