This article covers deploying K8S v1.17.17 with KubeEdge v1.7, followed by the kubeedge-counter-demo example.

Overview

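KubeEdge is an open-source system that extends containerized application orchestration to hosts at the edge. It is built on Kubernetes and provides infrastructure support for networking, application deployment, and metadata synchronization between the cloud and the edge. KubeEdge is licensed under Apache 2.0 and is free for personal or commercial use. Its goal is to create an open platform for edge computing that extends containerized application orchestration to edge nodes and devices. This article builds and deploys KubeEdge on CentOS 7.6 and finally runs the official KubeEdge Counter Demo.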

I. System Configuration

1.1 Cluster environment

Cloud: 192.168.17.137

Edge: 192.168.17.138

| Hostname | IP | Software |
|---|---|---|
| k8s-master | 192.168.17.137 | k8s, docker, cloudcore |
| node1 | 192.168.17.138 | docker, edgecore |

1.2 Disable the firewall at boot

systemctl disable firewalld

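1.3 Disable SELinux permanently

Edit /etc/selinux/config and set SELINUX to disabled. Edit this file directly. On CentOS 7, /etc/sysconfig/selinux is a symlink to it, and sed -i would replace the symlink with a regular file instead of changing the real configuration.

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

Afterwards the file should contain:

SELINUX=disabled

The change takes effect after the reboot in section 1.6. Running setenforce 0 switches SELinux to permissive mode immediately.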

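1.4 Disable swap (optional)

Starting with Kubernetes 1.8, swap must be disabled; otherwise kubelet fails to start under the default configuration. Turn swap off on the running system, and comment out the swap entry in /etc/fstab so it stays off after a reboot:

swapoff -a
sed -i 's/.*swap.*/#&/' /etc/fstab

The swap line in /etc/fstab then looks like this:

#/dev/mapper/centos-swap swap swap defaults 0 0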

1.5 Install Docker on all machines

a. Prerequisites

Switch to the Aliyun yum mirror:

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Clear the existing yum cache:

yum clean all

Rebuild the cache:

yum makecache

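Install the dependencies first. yum-utils provides yum-config-manager, which the next command needs.

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Aliyun docker-ce repository:

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo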

b. Install Docker

Install Docker:

yum install docker-ce -y

Start Docker and enable it at boot:

systemctl enable docker && systemctl start docker

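Adjust some Docker parameters:

Point registry-mirrors to an Aliyun registry mirror: "registry-mirrors": ["https://cheg08k8.mirror.aliyuncs.com"]

Docker's native.cgroupdriver defaults to cgroupfs. Kubernetes recommends systemd, and kubeadm init prints a warning otherwise.

vi /etc/docker/daemon.json

Enter the following content (plain ASCII quotes, so the file is valid JSON):

{
  "registry-mirrors": ["https://cheg08k8.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}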
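dockerd refuses to start when daemon.json is not valid JSON, for example when the file was typed with curly quotes. It can therefore help to check the file before restarting Docker. The Go program below is a minimal sketch. It assumes the three keys shown above are the only ones that matter, and `daemonConfig` and `cgroupDriver` are illustrative names, not part of Docker.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io/ioutil"
	"os"
	"strings"
)

// daemonConfig holds only the daemon.json keys used in this guide (an assumption).
type daemonConfig struct {
	RegistryMirrors []string `json:"registry-mirrors"`
	ExecOpts        []string `json:"exec-opts"`
	LiveRestore     bool     `json:"live-restore"`
}

// cgroupDriver parses daemon.json content and returns the value of the
// native.cgroupdriver exec-opt ("" if it is not set). Invalid JSON, such as
// a file typed with curly quotes, is returned as an error.
func cgroupDriver(data []byte) (string, error) {
	var cfg daemonConfig
	if err := json.Unmarshal(data, &cfg); err != nil {
		return "", fmt.Errorf("daemon.json is not valid JSON: %w", err)
	}
	const prefix = "native.cgroupdriver="
	for _, opt := range cfg.ExecOpts {
		if strings.HasPrefix(opt, prefix) {
			return strings.TrimPrefix(opt, prefix), nil
		}
	}
	return "", nil
}

func main() {
	data, err := ioutil.ReadFile("/etc/docker/daemon.json")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	driver, err := cgroupDriver(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	if driver != "systemd" {
		fmt.Printf("cgroup driver is %q, expected \"systemd\"\n", driver)
		os.Exit(1)
	}
	fmt.Println("daemon.json OK: native.cgroupdriver=systemd")
}
```

The program uses io/ioutil instead of os.ReadFile so that it also builds with the Go 1.14 toolchain installed later in this guide.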

systemctl daemon-reload

systemctl restart docker

Confirm Docker's cgroup driver:

docker info |grep Cgroup

Cgroup Driver: systemd

1.6 Reboot the system

# reboot

II. Deploying K8s on the Cloud Node

2.1 Configure the yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.2 Install kubelet, kubeadm, and kubectl

Install a specific version (recommended):

yum install kubelet-1.17.0-0.x86_64 kubeadm-1.17.0-0.x86_64 kubectl-1.17.0-0.x86_64

Or install the latest version:

yum install -y kubelet kubeadm kubectl

2.3 Configure kernel parameters (optional)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

sysctl --system

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

2.4 Pull images

List the component images that the installed kubeadm requires:

kubeadm config images list

I0201 01:11:35.698583 15417 version.go:251] remote version is much newer: v1.20.2; falling back to: stable-1.17

W0201 01:11:39.538445 15417 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0201 01:11:39.538486 15417 validation.go:28] Cannot validate kubelet config - no validator is available

k8s.gcr.io/kube-apiserver:v1.17.17

k8s.gcr.io/kube-controller-manager:v1.17.17

k8s.gcr.io/kube-scheduler:v1.17.17

k8s.gcr.io/kube-proxy:v1.17.17

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

These images are blocked in mainland China, so they must be downloaded in advance before the cluster can be initialized.
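The download script below rewrites each k8s.gcr.io/<name>:<tag> reference to the registry.cn-hangzhou.aliyuncs.com/google_containers mirror. As a sketch, the same pull/tag/rmi commands can be derived from the `kubeadm config images list` output instead of hard-coding versions. This assumes the mirror uses the same image names and tags as k8s.gcr.io. `mirrorCommands` is an illustrative name.

```go
package main

import (
	"bufio"
	"fmt"
	"io/ioutil"
	"os"
	"strings"
)

const (
	gcrPrefix    = "k8s.gcr.io/"
	aliyunMirror = "registry.cn-hangzhou.aliyuncs.com/google_containers/"
)

// mirrorCommands turns `kubeadm config images list` output into the
// docker pull / tag / rmi commands the download script runs.
// Lines that are not k8s.gcr.io image references (e.g. klog lines) are skipped.
func mirrorCommands(listOutput string) []string {
	var cmds []string
	sc := bufio.NewScanner(strings.NewReader(listOutput))
	for sc.Scan() {
		ref := strings.TrimSpace(sc.Text())
		if !strings.HasPrefix(ref, gcrPrefix) {
			continue
		}
		mirror := aliyunMirror + strings.TrimPrefix(ref, gcrPrefix)
		cmds = append(cmds,
			"docker pull "+mirror,
			"docker tag "+mirror+" "+ref,
			"docker rmi "+mirror)
	}
	return cmds
}

func main() {
	// Usage: kubeadm config images list 2>/dev/null | go run main.go
	input, err := ioutil.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	for _, cmd := range mirrorCommands(string(input)) {
		fmt.Println(cmd)
	}
}
```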

Download script:

#!/bin/bash
set -e

KUBE_VERSION=v1.17.17
KUBE_PAUSE_VERSION=3.1
ETCD_VERSION=3.4.3-0
CORE_DNS_VERSION=1.6.5
GCR_URL=k8s.gcr.io
ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/google_containers

images=(kube-proxy:${KUBE_VERSION}
  kube-scheduler:${KUBE_VERSION}
  kube-controller-manager:${KUBE_VERSION}
  kube-apiserver:${KUBE_VERSION}
  pause:${KUBE_PAUSE_VERSION}
  etcd:${ETCD_VERSION}
  coredns:${CORE_DNS_VERSION})

# Pull each image from the Aliyun mirror, retag it under k8s.gcr.io, then drop the mirror tag.
for imageName in "${images[@]}"; do
  docker pull "$ALIYUN_URL/$imageName"
  docker tag "$ALIYUN_URL/$imageName" "$GCR_URL/$imageName"
  docker rmi "$ALIYUN_URL/$imageName"
done

After running the script, list the downloaded images:

docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.17.17 3ef67d180564 2 weeks ago 117MB

k8s.gcr.io/kube-apiserver v1.17.17 38db32e0f351 2 weeks ago 171MB

k8s.gcr.io/kube-controller-manager v1.17.17 0ddd96ecb9e5 2 weeks ago 161MB

k8s.gcr.io/kube-scheduler v1.17.17 d415ebbf09db 2 weeks ago 94.4MB

quay.io/coreos/flannel v0.13.1-rc1 f03a23d55e57 2 months ago 64.6MB

k8s.gcr.io/coredns 1.6.5 70f311871ae1 15 months ago 41.6MB

k8s.gcr.io/etcd 3.4.3-0 303ce5db0e90 15 months ago 288MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 3 years ago 742kB

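2.5 Configure kubelet

KubeEdge itself does not need kubelet on the cloud node. Here it mainly serves to verify that the K8s cluster is deployed correctly: kubeadm runs the control-plane components as static pods through kubelet. It also lets the cloud node host applications such as the Dashboard.

Get Docker's cgroup driver: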

DOCKER_CGROUPS=$(docker info | grep 'Cgroup Driver' | cut -d' ' -f4)

echo $DOCKER_CGROUPS
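`cut -d' ' -f4` works because, in the Docker CE releases of that period, `docker info` indents the line by one space (` Cgroup Driver: systemd`). Splitting on single spaces therefore leaves an empty first field, and the driver name is the fourth field. Below is a whitespace-insensitive sketch of the same extraction in Go. `parseCgroupDriver` is a hypothetical helper, and the `Cgroup Driver: <value>` line format is assumed to match the output in section 1.5.

```go
package main

import (
	"bufio"
	"fmt"
	"os/exec"
	"strings"
)

// parseCgroupDriver scans `docker info` output for the "Cgroup Driver:" line
// and returns its value, ignoring any leading indentation.
func parseCgroupDriver(info string) string {
	sc := bufio.NewScanner(strings.NewReader(info))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if strings.HasPrefix(line, "Cgroup Driver:") {
			return strings.TrimSpace(strings.TrimPrefix(line, "Cgroup Driver:"))
		}
	}
	return ""
}

func main() {
	out, err := exec.Command("docker", "info").Output()
	if err != nil {
		fmt.Println("docker info failed:", err)
		return
	}
	driver := parseCgroupDriver(string(out))
	// Print the same KUBELET_EXTRA_ARGS line that this section writes to /etc/sysconfig/kubelet.
	fmt.Printf("KUBELET_EXTRA_ARGS=\"--cgroup-driver=%s --pod-infra-container-image=k8s.gcr.io/pause:3.1\"\n", driver)
}
```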

Configure kubelet's cgroup driver (and its pause image):

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=k8s.gcr.io/pause:3.1"

EOF

Start kubelet:

systemctl daemon-reload

systemctl enable kubelet && systemctl start kubelet

2.6 Initialize the K8s cluster

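kubeadm init --kubernetes-version=v1.17.17 \
  --pod-network-cidr=10.244.0.0/16 \
  --apiserver-advertise-address=192.168.17.137 \
  --ignore-preflight-errors=Swap

Set --apiserver-advertise-address to the master's IP (192.168.17.137 in this environment). The sample output below was captured on a host at 192.168.2.133.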

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.133:6443 --token befwji.rmuh07racybsqik5 \
    --discovery-token-ca-cert-hash sha256:74d996a22680090540f06c1f7732e329e518d0147dc2e27895b0b770c1c74d84

Then configure kubectl:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status:

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 4d19h v1.17.0

NotReady is expected at this point because no network plugin has been deployed yet. The node becomes Ready once the plugin is configured.

2.7 Configure the network plugin

Download the flannel manifest:

cd ~ && mkdir flannel && cd flannel

curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Apply it:

kubectl apply -f ~/flannel/kube-flannel.yml

If the manifest cannot be downloaded, copy the content below instead:

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.14.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
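The Network value in flannel's net-conf.json should match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here). If you change one, change the other. Below is a small Go check, assuming net-conf.json has the shape shown in the ConfigMap above. `sameCIDR` is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net"
)

// flannelNetConf mirrors the net-conf.json entry of the kube-flannel-cfg ConfigMap.
type flannelNetConf struct {
	Network string `json:"Network"`
	Backend struct {
		Type string `json:"Type"`
	} `json:"Backend"`
}

// sameCIDR reports whether flannel's Network equals the pod CIDR given to kubeadm init.
// Both values are normalized by net.ParseCIDR before comparison.
func sameCIDR(netConfJSON, podCIDR string) (bool, error) {
	var conf flannelNetConf
	if err := json.Unmarshal([]byte(netConfJSON), &conf); err != nil {
		return false, err
	}
	_, flannelNet, err := net.ParseCIDR(conf.Network)
	if err != nil {
		return false, fmt.Errorf("flannel Network: %w", err)
	}
	_, podNet, err := net.ParseCIDR(podCIDR)
	if err != nil {
		return false, fmt.Errorf("pod CIDR: %w", err)
	}
	return flannelNet.String() == podNet.String(), nil
}

func main() {
	netConf := `{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}`
	ok, err := sameCIDR(netConf, "10.244.0.0/16")
	fmt.Println(ok, err) // true <nil>
}
```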

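Pulling quay.io/coreos/flannel:v0.14.0 is likely to fail. In that case, pull the image from a mirror and retag it:

docker pull lizhenliang/flannel:v0.14.0
docker tag lizhenliang/flannel:v0.14.0 quay.io/coreos/flannel:v0.14.0
docker rmi lizhenliang/flannel:v0.14.0

Once the image is available, check the cluster status again: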

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 4d19h v1.17.0

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6955765f44-f7s8b 1/1 Running 2 26h

coredns-6955765f44-q69lg 1/1 Running 2 26h

etcd-k8s-master 1/1 Running 2 26h

kube-apiserver-k8s-master 1/1 Running 10 26h

kube-controller-manager-k8s-master 1/1 Running 4 26h

kube-flannel-ds-75btq 0/1 Pending 0 3h40m

kube-flannel-ds-7w6zp 1/1 Running 2 25h

kube-proxy-qrj4g 1/1 Running 2 26h

kube-scheduler-k8s-master 1/1 Running 4 26h

III. Installing and Configuring KubeEdge

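3.1 Cloud-side configuration

The cloud node builds the KubeEdge components and runs cloudcore.

3.1.1 Preparation

Download Go and extract it to /usr/local:

wget https://golang.google.cn/dl/go1.14.4.linux-amd64.tar.gz
tar -zxvf go1.14.4.linux-amd64.tar.gz -C /usr/local

Configure the Go environment variables:

vim /etc/profile

Append the following to the end of the file: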

# golang env

export GOROOT=/usr/local/go

export GOPATH=/data/gopath

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

source /etc/profile

mkdir -p /data/gopath && cd /data/gopath

mkdir -p src pkg bin

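Download the KubeEdge source code

This requires git. Install it first if needed (yum install -y git).

git clone https://github.com/kubeedge/kubeedge $GOPATH/src/github.com/kubeedge/kubeedge

The clone may fail because access to GitHub from mainland China is unstable. In that case, clone from the Gitee mirror instead:

git clone https://gitee.com/iot-kubeedge/kubeedge.git $GOPATH/src/github.com/kubeedge/kubeedge

My git reported the following error:

fatal: Unable to find remote helper for 'https'

Cloning over the git:// protocol instead of https may work around it. Skip this step if you did not hit the error.

git clone --recursive git://gitee.com/iot-kubeedge/kubeedge.git $GOPATH/src/github.com/kubeedge/kubeedge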

3.1.2 Deploy cloudcore

Build keadm, cloudcore, and edgecore:

cd $GOPATH/src/github.com/kubeedge/kubeedge

make all WHAT=keadm

make all WHAT=cloudcore

make all WHAT=edgecore

When the build finishes, change to the output bin directory:

cd ./_output/local/bin/

Initialize the cloud node:

./keadm init --advertise-address=192.168.17.137 --kubeedge-version=1.7.0

If you see the following output, initialization succeeded:

Kubernetes version verification passed, KubeEdge installation will start...

KubeEdge cloudcore is running, For logs visit: /var/log/kubeedge/cloudcore.log

CloudCore started

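In practice, keadm downloads these files from GitHub, so most users in mainland China will not see this output without a proxy. In that case, download the following files manually: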

mkdir -p /etc/kubeedge/crds/devices && cd /etc/kubeedge/crds/devices/

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/crds/devices/devices_v1alpha2_devicemodel.yaml

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/crds/devices/devices_v1alpha2_device.yaml

mkdir -p /etc/kubeedge/crds/reliablesyncs && cd /etc/kubeedge/crds/reliablesyncs/

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/crds/reliablesyncs/cluster_objectsync_v1alpha1.yaml

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/crds/reliablesyncs/objectsync_v1alpha1.yaml

mkdir -p /etc/kubeedge/crds/router && cd /etc/kubeedge/crds/router/

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/crds/router/router_v1_rule.yaml

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/crds/router/router_v1_ruleEndpoint.yaml

mkdir -p /etc/kubeedge && cd /etc/kubeedge/

wget https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/tools/cloudcore.service

wget https://github.com/kubeedge/kubeedge/releases/download/v1.7.0/kubeedge-v1.7.0-linux-amd64.tar.gz

Run the initialization again:

./keadm init --advertise-address=192.168.17.137 --kubeedge-version=1.7.0

Kubernetes version verification passed, KubeEdge installation will start...

Expected or Default KubeEdge version 1.7.0 is already downloaded and will checksum for it.

kubeedge-v1.7.0-linux-amd64.tar.gz checksum:

checksum_kubeedge-v1.7.0-linux-amd64.tar.gz.txt content:

kubeedge-v1.7.0-linux-amd64.tar.gz in your path checksum failed and do you want to delete this file and try to download again?

[y/N]:

Enter N here. The checksum failure does not affect the setup.

cloudcore then starts successfully:

kubeedge-v1.7.0-linux-amd64/

kubeedge-v1.7.0-linux-amd64/edge/

kubeedge-v1.7.0-linux-amd64/edge/edgecore

kubeedge-v1.7.0-linux-amd64/cloud/

kubeedge-v1.7.0-linux-amd64/cloud/csidriver/

kubeedge-v1.7.0-linux-amd64/cloud/csidriver/csidriver

kubeedge-v1.7.0-linux-amd64/cloud/admission/

kubeedge-v1.7.0-linux-amd64/cloud/admission/admission

kubeedge-v1.7.0-linux-amd64/cloud/cloudcore/

kubeedge-v1.7.0-linux-amd64/cloud/cloudcore/cloudcore

kubeedge-v1.7.0-linux-amd64/version

KubeEdge cloudcore is running, For logs visit: /var/log/kubeedge/cloudcore.log

CloudCore started

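3.2 Edge-side configuration

The binaries built on the cloud node can be copied to the edge node with scp. For example, from the _output/local/bin directory run scp keadm root@192.168.17.138:/root/.

3.2.1 Get a token from the cloud

Run the following on the cloud node. It prints a token that is used when the edge node joins: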

./keadm gettoken

934ea2c26ecfbb57dd8fb391e8906d79496c59598e3657bd09c6ec08068b931e.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2Mjg5MTEyMTl9.osN_3o8roDfCg6kufPv7Qkf-sZJk7CwGNs9rZJcSets

3.2.2 Join the edge node

keadm join installs edgecore and mqtt. Its --kubeedge-version flag selects a specific version:

./keadm join --cloudcore-ipport=192.168.17.137:10000 --edgenode-name=node1 --kubeedge-version=1.7.0 --token=934ea2c26ecfbb57dd8fb391e8906d79496c59598e3657bd09c6ec08068b931e.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2Mjg5MTEyMTl9.osN_3o8roDfCg6kufPv7Qkf-sZJk7CwGNs9rZJcSets

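Important notes:

* --cloudcore-ipport is mandatory.
* --token is required if certificates are to be applied for the edge node automatically.
* The cloud and edge sides must use the same KubeEdge version.
* --edgenode-name is the edge host's hostname; make sure it is spelled correctly.

Error message: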

F0817 16:58:45.223586 31693 keadm.go:27] fail to download service file,error:{failed to exec 'bash -c cd /etc/kubeedge/ && sudo -E wget -t 5 -k --no-check-certificate https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/tools/edgecore.service', err: --2021-08-17 16:58:23-- https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/tools/edgecore.service

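As with cloudcore, place the following files in the configuration directory /etc/kubeedge on the edge node. For example, the tarball can be copied from /etc/kubeedge on the cloud node.

https://github.com/kubeedge/kubeedge/releases/download/v1.7.0/kubeedge-v1.7.0-linux-amd64.tar.gz

https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.7/build/tools/edgecore.service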

3.3 Verification

After edgecore starts on the edge node, it communicates with cloudcore on the cloud node, and K8s then manages the edge node as one of its nodes:

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 27h v1.17.0

node1 Ready agent,edge 4h25m v1.19.3-kubeedge-v1.7.0

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6955765f44-f7s8b 1/1 Running 2 27h

coredns-6955765f44-q69lg 1/1 Running 2 27h

etcd-k8s-master 1/1 Running 2 27h

kube-apiserver-k8s-master 1/1 Running 10 27h

kube-controller-manager-k8s-master 1/1 Running 4 27h

kube-flannel-ds-75btq 0/1 Pending 0 4h26m

kube-flannel-ds-7w6zp 1/1 Running 2 26h

kube-proxy-qrj4g 1/1 Running 2 27h

kube-scheduler-k8s-master 1/1 Running 4 27h

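Note: the edge node does not run kubelet, so the flannel pod scheduled onto it (the Pending kube-flannel-ds pod above) cannot be created. This does not affect KubeEdge and can be ignored for now.

IV. Running the KubeEdge Example

We test with one of the official examples, which is described as follows:

KubeEdge Counter Demo is a pseudo-device, so users can run the demo without any additional physical device. The counter runs on the edge side. From the cloud side, users can control it through a web page and read the counter value from the same page.

The schematic is shown below:

4.1 Preparation (on the cloud node)

Download the example code:

Cloning the official examples repository is slow, so a Gitee mirror is used here: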

git clone https://gitee.com/iot-kubeedge/kubeedge-examples.git $GOPATH/src/github.com/kubeedge/examples

4.2 Create the device model and device

Create the device model:

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

kubectl create -f kubeedge-counter-model.yaml

Create the device instance. First edit the YAML to match your environment, in particular the node name under matchExpressions:

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

vim kubeedge-counter-instance.yaml

apiVersion: devices.kubeedge.io/v1alpha1
kind: Device
metadata:
  name: counter
  labels:
    description: 'counter'
    manufacturer: 'test'
spec:
  deviceModelRef:
    name: counter-model
  nodeSelector:
    nodeSelectorTerms:
    - matchExpressions:
      - key: 'kubernetes.io/hostname'
        operator: In
        values:
        - node1  # my edge node's hostname is node1
status:
  twins:
  - propertyName: status
    desired:
      metadata:
        type: string
      value: 'OFF'
    reported:
      metadata:
        type: string
      value: '0'

Create the device:

kubectl create -f kubeedge-counter-instance.yaml
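The counter's state lives in the Device's status.twins. desired holds the value set from the cloud ('OFF' above), and reported holds the value reported back ('0' above). Assuming `kubectl get device counter -o json` returns the same field names as the YAML, the Go sketch below extracts both values. The struct types and `twinState` are illustrative, not the KubeEdge API types.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// twinValue mirrors a desired/reported entry in the Device YAML above.
type twinValue struct {
	Metadata struct {
		Type string `json:"type"`
	} `json:"metadata"`
	Value string `json:"value"`
}

// device keeps only the status.twins part of a Device object.
type device struct {
	Status struct {
		Twins []struct {
			PropertyName string    `json:"propertyName"`
			Desired      twinValue `json:"desired"`
			Reported     twinValue `json:"reported"`
		} `json:"twins"`
	} `json:"status"`
}

// twinState returns the desired and reported values of the named twin property.
func twinState(deviceJSON []byte, property string) (desired, reported string, err error) {
	var d device
	if err = json.Unmarshal(deviceJSON, &d); err != nil {
		return "", "", err
	}
	for _, t := range d.Status.Twins {
		if t.PropertyName == property {
			return t.Desired.Value, t.Reported.Value, nil
		}
	}
	return "", "", fmt.Errorf("twin %q not found", property)
}

func main() {
	// Usage: kubectl get device counter -o json | go run main.go
	var raw json.RawMessage
	if err := json.NewDecoder(os.Stdin).Decode(&raw); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	desired, reported, err := twinState(raw, "status")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Printf("desired=%s reported=%s\n", desired, reported)
}
```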

4.3 Deploy the cloud application

The cloud application web-controller-app controls the pi-counter-app on the edge. It listens on port 80 by default; here it is changed to 8090:

# cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/web-controller-app

# vim main.go

package main

import (
	"github.com/astaxie/beego"
	"github.com/kubeedge/examples/kubeedge-counter-demo/web-controller-app/controller"
)

func main() {
	beego.Router("/", new(controllers.TrackController), "get:Index")
	beego.Router("/track/control/:trackId", new(controllers.TrackController), "get,post:ControlTrack")
	beego.Run(":8090")
}

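The page loads jQuery from code.jquery.com, which may be unreachable and break the page later. Edit the template and load jQuery from the cdn.staticfile.org CDN instead: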

# cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/web-controller-app/views/

vi layout.html

<!DOCTYPE html>

<html lang="en">
<head>
  <meta charset="utf-8">
  <meta content="IE=edge" http-equiv="X-UA-Compatible">
  <meta content="width=device-width, initial-scale=1.0" name="viewport">
  <title>KubeEdge Web Demo {{.Title}}</title>
  <meta content="" name="description">
  <meta content="" name="author">
  <!-- Replaced: <script src="https://code.jquery.com/jquery-1.10.2.min.js"></script> -->
  <script src="https://cdn.staticfile.org/jquery/1.10.2/jquery.min.js"></script>
  <script src="/static/js/bootstrap.min.js"></script>
  <script src="/static/js/bootstrap-select.js"></script>
  <link rel="stylesheet" href="/static/css/bootstrap.min.css">
  <link rel="stylesheet" href="/static/css/main.css">
  <link rel="stylesheet" href="/static/css/bootstrap-select.css">
  <link href='http://fonts.googleapis.com/css?family=Rambla' rel='stylesheet' type='text/css'>
  {{.PageHead}}
  <!-- Just for debugging purposes. Don't actually copy this line! -->
  <!--[if lt IE 9]><script src="../../docs-assets/js/ie8-responsive-file-warning.js"></script><![endif]-->
  <!-- HTML5 shim and Respond.js IE8 support of HTML5 elements and media queries -->
  <!--[if lt IE 9]>
    <script src="https://oss.maxcdn.com/libs/html5shiv/3.7.0/html5shiv.js"></script>
    <script src="https://oss.maxcdn.com/libs/respond.js/1.3.0/respond.min.js"></script>
  <![endif]-->
</head>
<body>
{{.LayoutContent}}
{{.Modal}}

</body>

</html>

Build the image:

make all

make docker

Deploy web-controller-app:

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

kubectl apply -f kubeedge-web-controller-app.yaml

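Once it is deployed, open http://192.168.17.137:8090 in a browser. The pod runs on the master node's host network, and the app listens on port 8090 as changed above.

4.4 Deploy the edge application

Build the counter-mapper image:

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/counter-mapper
make all
make docker

Deploy the Pi Counter App: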

# cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

# kubectl apply -f kubeedge-pi-counter-app.yaml

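Note: the image is built locally on the cloud node, so the edge node cannot pull it and the Pod would get stuck in ContainerCreating. To avoid this, ship the image to the edge node directly with docker save, scp, and docker load:

docker save -o kubeedge-pi-counter.tar kubeedge/kubeedge-pi-counter:v1.0.0  # on the cloud node
scp kubeedge-pi-counter.tar root@192.168.17.138:/root  # on the cloud node
docker load -i kubeedge-pi-counter.tar  # on the edge node

Try the demo. Both the cloud and edge parts of the KubeEdge demo are now deployed: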

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kubeedge-counter-app-758b9b4ffd-h4kcl 1/1 Running 0 147m 192.168.17.137 k8s-master <none> <none>

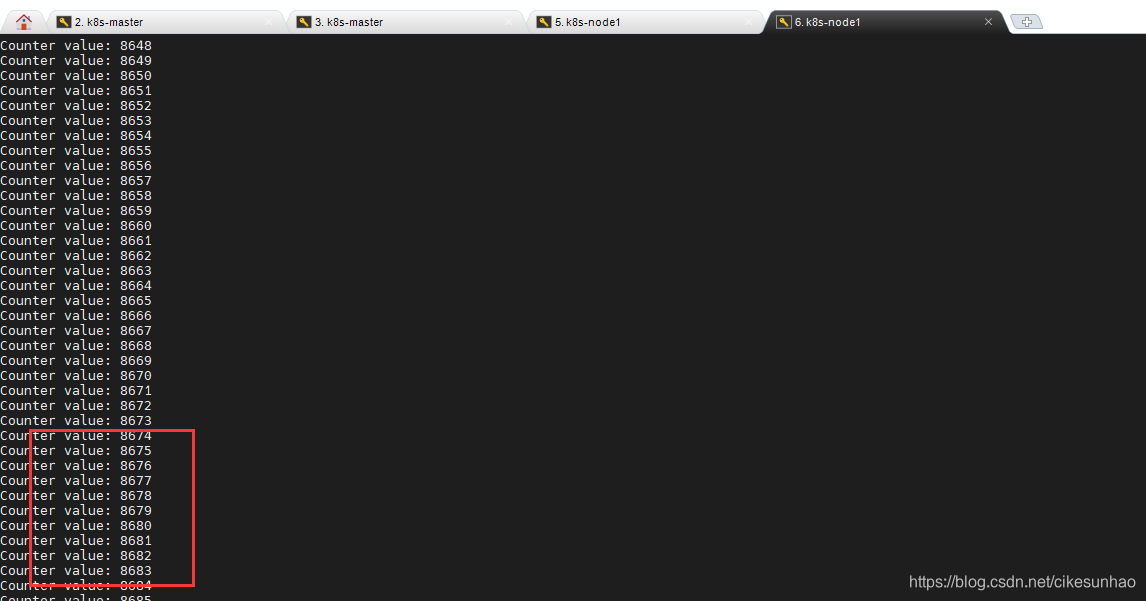

kubeedge-pi-counter-c69698d6-vxnbh 1/1 Running 0 156m 192.168.17.138 node1 <none> <none>选择ON点击Execute

去边缘节点使用docker logs -f counter-container-id

docker logs -f 16029a18aeed
