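This article shows how to deploy L2CS-Net for face orientation (gaze) estimation in C# with the OpenCvSharp DNN module. A YOLOv8 face detector locates each face, and L2CS-Net then estimates the gaze angles for each cropped face.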

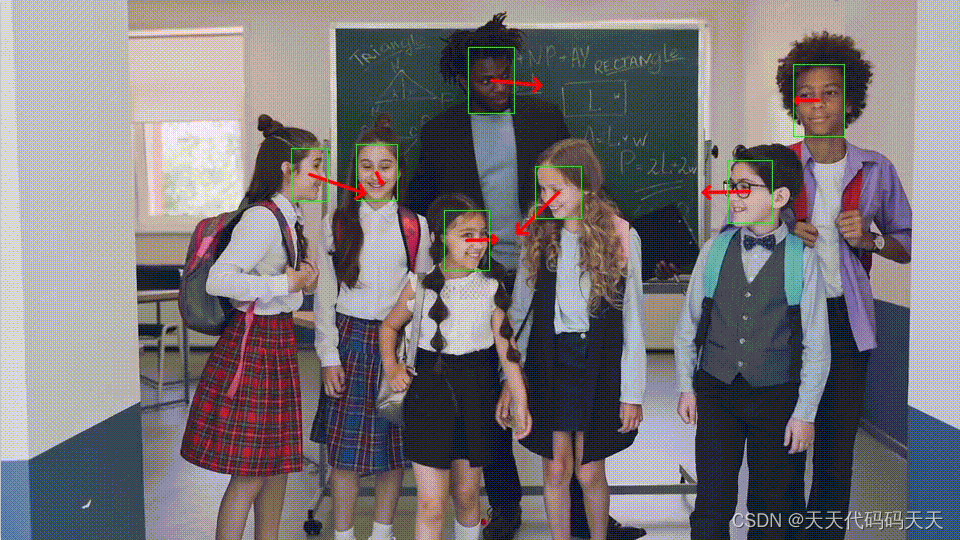

Results

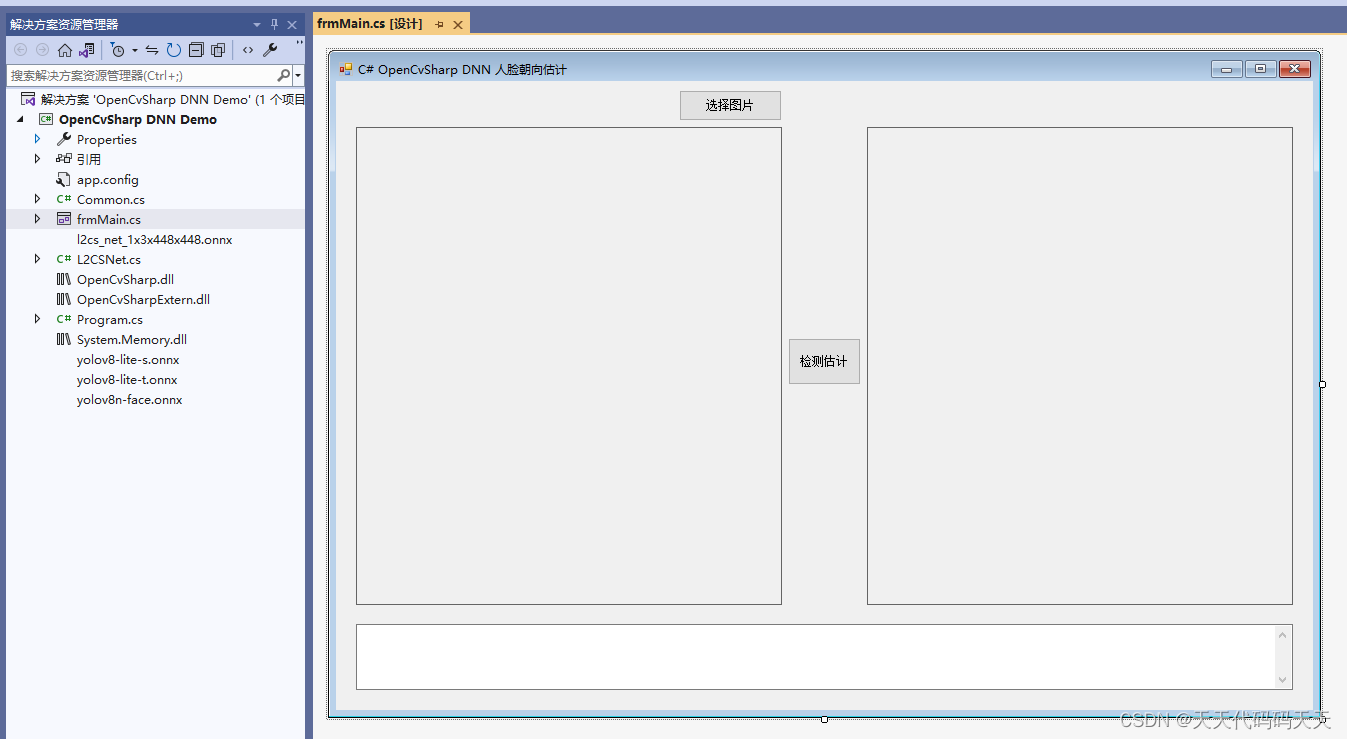

Project

Code

    using OpenCvSharp;
    using OpenCvSharp.Dnn;
    using System;
    using System.Collections.Generic;
    using System.Drawing;
    using System.Drawing.Drawing2D;
    using System.Linq;
    using System.Text;
    using System.Windows.Forms;

    namespace OpenCvSharp_DNN_Demo
    {
        public partial class frmMain : Form
        {
            public frmMain()
            {
                InitializeComponent();
            }

            string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";
            string image_path = "";
            string startupPath;
            DateTime dt1 = DateTime.Now;
            DateTime dt2 = DateTime.Now;
            string model_path;
            Mat image;
            Mat result_image;
            Net opencv_net;
            Mat BN_image;
            StringBuilder sb = new StringBuilder();

            // YOLOv8-face detector settings
            int reg_max = 16;
            int num_class = 1;
            int inpWidth = 640;
            int inpHeight = 640;
            float score_threshold = 0.25f;
            float nms_threshold = 0.5f;

            L2CSNet gaze_predictor;

            private void Form1_Load(object sender, EventArgs e)
            {
                startupPath = System.Windows.Forms.Application.StartupPath;
                model_path = startupPath + "\\yolov8n-face.onnx";

                // Initialize the network by loading the local ONNX model
                opencv_net = CvDnn.ReadNetFromOnnx(model_path);
                gaze_predictor = new L2CSNet("l2cs_net_1x3x448x448.onnx");
            }

            private void button1_Click(object sender, EventArgs e)
            {
                OpenFileDialog ofd = new OpenFileDialog();
                ofd.Filter = fileFilter;
                if (ofd.ShowDialog() != DialogResult.OK) return;

                pictureBox1.Image = null;
                image_path = ofd.FileName;
                pictureBox1.Image = new Bitmap(image_path);
                textBox1.Text = "";
                image = new Mat(image_path);
                pictureBox2.Image = null;
            }

            private void button2_Click(object sender, EventArgs e)
            {
                if (image_path == "")
                {
                    return;
                }

                // Letterbox the image to the detector input size
                int newh = 0, neww = 0, padh = 0, padw = 0;
                Mat resize_img = Common.ResizeImage(image, inpHeight, inpWidth, ref newh, ref neww, ref padh, ref padw);
                float ratioh = (float)image.Rows / newh, ratiow = (float)image.Cols / neww;

                dt1 = DateTime.Now;

                // Normalize pixel values to [0, 1] and swap R and B channels
                BN_image = CvDnn.BlobFromImage(resize_img, 1 / 255.0, new OpenCvSharp.Size(inpWidth, inpHeight), new Scalar(0, 0, 0), true, false);

                // Set the network input
                opencv_net.SetInput(BN_image);

                // Run inference and read the three output layers
                Mat[] outs = new Mat[3] { new Mat(), new Mat(), new Mat() };
                string[] outBlobNames = opencv_net.GetUnconnectedOutLayersNames().ToArray();
                opencv_net.Forward(outs, outBlobNames);

                List<Rect> position_boxes = new List<Rect>();
                List<float> confidences = new List<float>();
                List<List<OpenCvSharp.Point>> landmarks = new List<List<OpenCvSharp.Point>>();

                // Decode proposals from each output scale (40x40, 20x20, 80x80 grids)
                Common.GenerateProposal(inpHeight, inpWidth, reg_max, num_class, score_threshold, 40, 40, outs[0], position_boxes, confidences, landmarks, image.Rows, image.Cols, ratioh, ratiow, padh, padw);
                Common.GenerateProposal(inpHeight, inpWidth, reg_max, num_class, score_threshold, 20, 20, outs[1], position_boxes, confidences, landmarks, image.Rows, image.Cols, ratioh, ratiow, padh, padw);
                Common.GenerateProposal(inpHeight, inpWidth, reg_max, num_class, score_threshold, 80, 80, outs[2], position_boxes, confidences, landmarks, image.Rows, image.Cols, ratioh, ratiow, padh, padw);

                // Non-maximum suppression (NMS)
                int[] indexes = new int[position_boxes.Count];
                CvDnn.NMSBoxes(position_boxes, confidences, score_threshold, nms_threshold, out indexes);

                List<Rect> re_result = new List<Rect>();
                List<List<OpenCvSharp.Point>> re_landmarks = new List<List<OpenCvSharp.Point>>();
                List<float> re_confidences = new List<float>();

                for (int i = 0; i < indexes.Length; i++)
                {
                    int index = indexes[i];
                    re_result.Add(position_boxes[index]);
                    re_landmarks.Add(landmarks[index]);
                    re_confidences.Add(confidences[index]);
                }

                float[] gaze_yaw_pitch = new float[2];
                float length = (float)(image.Cols / 1.5);

                result_image = image.Clone();

                if (re_result.Count > 0)
                {
                    sb.Clear();
                    for (int i = 0; i < re_result.Count; i++)
                    {
                        // Crop from the untouched source image so that arrows and boxes
                        // already drawn for earlier faces do not leak into this crop
                        Mat crop_img = new Mat(image, re_result[i]);
                        gaze_predictor.Detect(crop_img, gaze_yaw_pitch);

                        // Draw the gaze arrow from the center of the face box
                        float pos_x = (float)(re_result[i].X + 0.5 * re_result[i].Width);
                        float pos_y = (float)(re_result[i].Y + 0.5 * re_result[i].Height);
                        float dy = (float)(-length * Math.Sin(gaze_yaw_pitch[0]) * Math.Cos(gaze_yaw_pitch[1]));
                        float dx = (float)(-length * Math.Sin(gaze_yaw_pitch[1]));
                        OpenCvSharp.Point from = new OpenCvSharp.Point((int)pos_x, (int)pos_y);
                        OpenCvSharp.Point to = new OpenCvSharp.Point((int)(pos_x + dx), (int)(pos_y + dy));
                        Cv2.ArrowedLine(result_image, from, to, new Scalar(255, 0, 0), 2, 0, 0, 0.18);

                        Cv2.Rectangle(result_image, re_result[i], new Scalar(0, 0, 255), 2, LineTypes.Link8);
                        //Cv2.Rectangle(result_image, new OpenCvSharp.Point(re_result[i].X, re_result[i].Y),
                        //    new OpenCvSharp.Point(re_result[i].X + re_result[i].Width, re_result[i].Y + re_result[i].Height),
                        //    new Scalar(0, 255, 0), 2);

                        Cv2.PutText(result_image, "face-" + re_confidences[i].ToString("0.00"),
                            new OpenCvSharp.Point(re_result[i].X, re_result[i].Y - 10),
                            HersheyFonts.HersheySimplex, 1, new Scalar(0, 0, 255), 2);

                        // Draw the facial landmarks
                        foreach (var item in re_landmarks[i])
                        {
                            Cv2.Circle(result_image, item, 2, new Scalar(0, 255, 0), -1);
                        }

                        sb.AppendLine(string.Format("{0}:{1},({2},{3},{4},{5})",
                            "face",
                            re_confidences[i].ToString("0.00"),
                            re_result[i].TopLeft.X,
                            re_result[i].TopLeft.Y,
                            re_result[i].BottomRight.X,
                            re_result[i].BottomRight.Y));
                    }

                    dt2 = DateTime.Now;

                    sb.AppendLine("--------------------------");
                    sb.AppendLine("Elapsed: " + (dt2 - dt1).TotalMilliseconds + "ms");

                    pictureBox2.Image = new Bitmap(result_image.ToMemoryStream());
                    textBox1.Text = sb.ToString();
                }
                else
                {
                    textBox1.Text = "No faces detected";
                }
            }
        }
    }
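The gaze arrow in `button2_Click` is easier to follow when the arithmetic is pulled out on its own. The sketch below is a minimal, dependency-free version of that step. It assumes the two values filled in by `L2CSNet.Detect` are angles in radians and uses them in the same order as the listing (`gaze_yaw_pitch[0]`, then `gaze_yaw_pitch[1]`). The `GazeArrow` class and `EndPoint` method are hypothetical names introduced for illustration and are not part of the original project.

```csharp
using System;

public static class GazeArrow
{
    // Mirrors the arrow arithmetic from button2_Click.
    // angle0 and angle1 stand in for gaze_yaw_pitch[0] and gaze_yaw_pitch[1];
    // treating them as radians is an assumption, since L2CSNet.Detect is not shown.
    public static (int X, int Y) EndPoint(
        float centerX, float centerY, float length, float angle0, float angle1)
    {
        float dy = (float)(-length * Math.Sin(angle0) * Math.Cos(angle1));
        float dx = (float)(-length * Math.Sin(angle1));
        return ((int)(centerX + dx), (int)(centerY + dy));
    }

    public static void Main()
    {
        // Hypothetical 640-pixel-wide image with a face box centered at (320, 240).
        float length = (float)(640 / 1.5);

        // With both angles at zero the arrow has no length in the image plane.
        var tip = EndPoint(320f, 240f, length, 0f, 0f);
        Console.WriteLine($"{tip.X}, {tip.Y}"); // prints "320, 240"
    }
}
```

Because both offsets are negated sines, a positive angle moves the arrow tip toward smaller pixel coordinates, that is, left or up in the image. The arrow length scales with the image width (`image.Cols / 1.5` in the listing), so arrows can extend well beyond the face box.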

References

GitHub - Ahmednull/L2CS-Net: The official PyTorch implementation of L2CS-Net for gaze estimation and tracking

Downloads

Executable (exe) package: free download (0 points)

Source code download
