本文主要是介绍【flink】Chunk splitting has encountered exception,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

执行任务报错: Chunk splitting has encountered exception

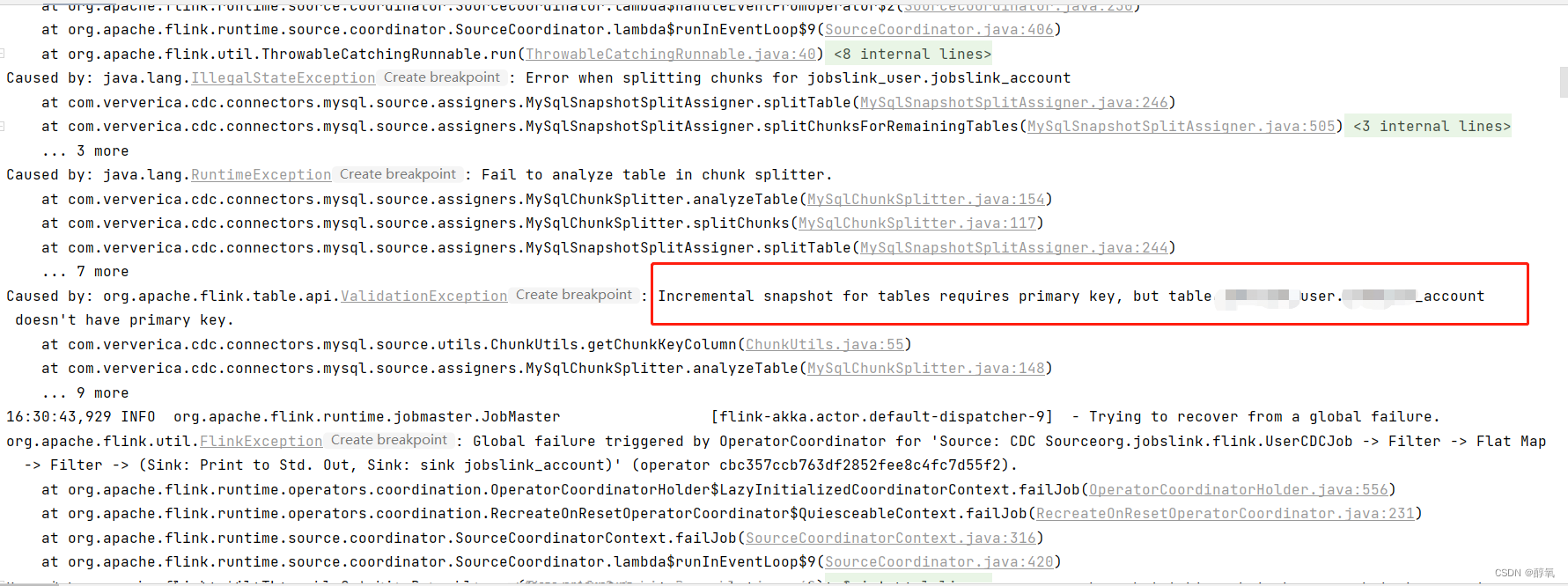

错误信息截图:

完整的错误信息:

16:30:43,911 ERROR org.apache.flink.runtime.source.coordinator.SourceCoordinator [SourceCoordinator-Source: CDC Sourceorg.jobslink.flink.UserCDCJob] - Uncaught exception in the SplitEnumerator for Source Source: CDC Sourceorg.flink.UserCDCJob while handling operator event RequestSplitEvent (host='') from subtask 0. Triggering job failover.

org.apache.flink.util.FlinkRuntimeException: Chunk splitting has encountered exceptionat com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.checkSplitterErrors(MySqlSnapshotSplitAssigner.java:522)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.getNext(MySqlSnapshotSplitAssigner.java:278)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.getNext(MySqlSnapshotSplitAssigner.java:298)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlHybridSplitAssigner.getNext(MySqlHybridSplitAssigner.java:123)at com.ververica.cdc.connectors.mysql.source.enumerator.MySqlSourceEnumerator.assignSplits(MySqlSourceEnumerator.java:201)at com.ververica.cdc.connectors.mysql.source.enumerator.MySqlSourceEnumerator.handleSplitRequest(MySqlSourceEnumerator.java:117)at org.apache.flink.runtime.source.coordinator.SourceCoordinator.lambda$handleEventFromOperator$2(SourceCoordinator.java:230)at org.apache.flink.runtime.source.coordinator.SourceCoordinator.lambda$runInEventLoop$9(SourceCoordinator.java:406)at org.apache.flink.util.ThrowableCatchingRunnable.run(ThrowableCatchingRunnable.java:40)at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)at java.util.concurrent.FutureTask.run$$$capture(FutureTask.java:266)at java.util.concurrent.FutureTask.run(FutureTask.java)at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.IllegalStateException: Error when splitting chunks for jobslink_user.jobslink_accountat com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.splitTable(MySqlSnapshotSplitAssigner.java:246)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.splitChunksForRemainingTables(MySqlSnapshotSplitAssigner.java:505)at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)at java.util.concurrent.FutureTask.run$$$capture(FutureTask.java:266)at java.util.concurrent.FutureTask.run(FutureTask.java)... 3 more

Caused by: java.lang.RuntimeException: Fail to analyze table in chunk splitter.at com.ververica.cdc.connectors.mysql.source.assigners.MySqlChunkSplitter.analyzeTable(MySqlChunkSplitter.java:154)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlChunkSplitter.splitChunks(MySqlChunkSplitter.java:117)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.splitTable(MySqlSnapshotSplitAssigner.java:244)... 7 more

Caused by: org.apache.flink.table.api.ValidationException: Incremental snapshot for tables requires primary key, but table user.account doesn't have primary key.at com.ververica.cdc.connectors.mysql.source.utils.ChunkUtils.getChunkKeyColumn(ChunkUtils.java:55)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlChunkSplitter.analyzeTable(MySqlChunkSplitter.java:148)... 9 more

16:30:43,929 INFO org.apache.flink.runtime.jobmaster.JobMaster [flink-akka.actor.default-dispatcher-9] - Trying to recover from a global failure.

org.apache.flink.util.FlinkException: Global failure triggered by OperatorCoordinator for 'Source: CDC Sourceorg.jobslink.flink.UserCDCJob -> Filter -> Flat Map -> Filter -> (Sink: Print to Std. Out, Sink: sink jobslink_account)' (operator cbc357ccb763df2852fee8c4fc7d55f2).at org.apache.flink.runtime.operators.coordination.OperatorCoordinatorHolder$LazyInitializedCoordinatorContext.failJob(OperatorCoordinatorHolder.java:556)at org.apache.flink.runtime.operators.coordination.RecreateOnResetOperatorCoordinator$QuiesceableContext.failJob(RecreateOnResetOperatorCoordinator.java:231)at org.apache.flink.runtime.source.coordinator.SourceCoordinatorContext.failJob(SourceCoordinatorContext.java:316)at org.apache.flink.runtime.source.coordinator.SourceCoordinator.lambda$runInEventLoop$9(SourceCoordinator.java:420)at org.apache.flink.util.ThrowableCatchingRunnable.run(ThrowableCatchingRunnable.java:40)at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)at java.util.concurrent.FutureTask.run$$$capture(FutureTask.java:266)at java.util.concurrent.FutureTask.run(FutureTask.java)at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.flink.util.FlinkRuntimeException: Chunk splitting has encountered exceptionat com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.checkSplitterErrors(MySqlSnapshotSplitAssigner.java:522)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.getNext(MySqlSnapshotSplitAssigner.java:278)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.getNext(MySqlSnapshotSplitAssigner.java:298)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlHybridSplitAssigner.getNext(MySqlHybridSplitAssigner.java:123)at com.ververica.cdc.connectors.mysql.source.enumerator.MySqlSourceEnumerator.assignSplits(MySqlSourceEnumerator.java:201)at com.ververica.cdc.connectors.mysql.source.enumerator.MySqlSourceEnumerator.handleSplitRequest(MySqlSourceEnumerator.java:117)at org.apache.flink.runtime.source.coordinator.SourceCoordinator.lambda$handleEventFromOperator$2(SourceCoordinator.java:230)at org.apache.flink.runtime.source.coordinator.SourceCoordinator.lambda$runInEventLoop$9(SourceCoordinator.java:406)... 9 more

Caused by: java.lang.IllegalStateException: Error when splitting chunks for jobslink_user.jobslink_accountat com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.splitTable(MySqlSnapshotSplitAssigner.java:246)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.splitChunksForRemainingTables(MySqlSnapshotSplitAssigner.java:505)at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)at java.util.concurrent.FutureTask.run$$$capture(FutureTask.java:266)at java.util.concurrent.FutureTask.run(FutureTask.java)... 3 more

Caused by: java.lang.RuntimeException: Fail to analyze table in chunk splitter.at com.ververica.cdc.connectors.mysql.source.assigners.MySqlChunkSplitter.analyzeTable(MySqlChunkSplitter.java:154)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlChunkSplitter.splitChunks(MySqlChunkSplitter.java:117)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlSnapshotSplitAssigner.splitTable(MySqlSnapshotSplitAssigner.java:244)... 7 more

Caused by: org.apache.flink.table.api.ValidationException: Incremental snapshot for tables requires primary key, but table user.account doesn't have primary key.at com.ververica.cdc.connectors.mysql.source.utils.ChunkUtils.getChunkKeyColumn(ChunkUtils.java:55)at com.ververica.cdc.connectors.mysql.source.assigners.MySqlChunkSplitter.analyzeTable(MySqlChunkSplitter.java:148)... 9 more

16:30:43,933 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [flink-akka.actor.default-dispatcher-9] - Job org.flink.UserCDCJob (35780b6f0490a368fc256b228e99f7cb) switched from state RUNNING to RESTARTING.

16:30:43,936 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [flink-akka.actor.default-dispatcher-9] - Source: CDC Sourceorg.flink.UserCDCJob -> Filter -> Flat Map -> Filter -> (Sink: Print to Std. Out, Sink: sink account) (1/1) (45028a2ced14e3bbe2d7cf3349defba0) switched from RUNNING to CANCELING.Incremental snapshot for tables requires primary key, but table user.account doesn't have primary key.

表的增量快照需要主键,但表user.account没有主键。

去user库account表增加主键

添加上主键以后就可以正常执行了!!!

这篇关于【flink】Chunk splitting has encountered exception的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!