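This article walks through a Kaggle competition project, "Recommender Systems: Convenience Store Sales Forecasting", and is meant as a practical reference for developers tackling similar problems.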

Video: https://www.bilibili.com/video/av53701517

Source code: https://pan.baidu.com/s/1r-ncwIDU92ZFMQaBY5sBaQ (extraction code: peeq)

Forecast sales using store, promotion, and competitor data

Rossmann operates over 3,000 drug stores in 7 European countries. Currently, Rossmann store managers are tasked with predicting their daily sales for up to six weeks in advance. Store sales are influenced by many factors, including promotions, competition, school and state holidays, seasonality, and locality. With thousands of individual managers predicting sales based on their unique circumstances, the accuracy of results can be quite varied.

In their first Kaggle competition, Rossmann is challenging you to predict 6 weeks of daily sales for 1,115 stores located across Germany. Reliable sales forecasts enable store managers to create effective staff schedules that increase productivity and motivation. By helping Rossmann create a robust prediction model, you will help store managers stay focused on what’s most important to them: their customers and their teams!

If you are interested in joining Rossmann at their headquarters near Hanover, Germany, please contact Mr. Frank König (Frank.Koenig {at} rossmann.de). Rossmann is currently recruiting data scientists at senior and entry-level positions.

Data

You are provided with historical sales data for 1,115 Rossmann stores. The task is to forecast the "Sales" column for the test set. Note that some stores in the dataset were temporarily closed for refurbishment.

Files

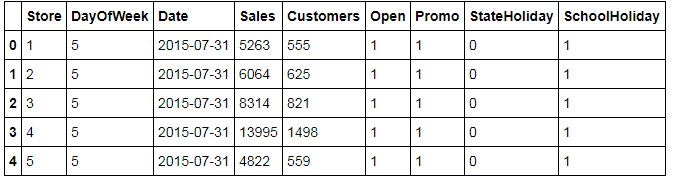

- train.csv - historical data including Sales

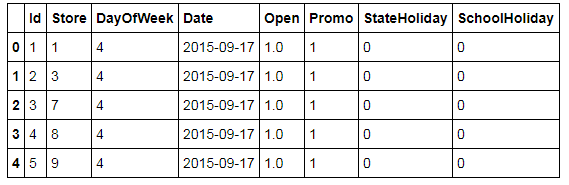

- test.csv - historical data excluding Sales

- sample_submission.csv - a sample submission file in the correct format

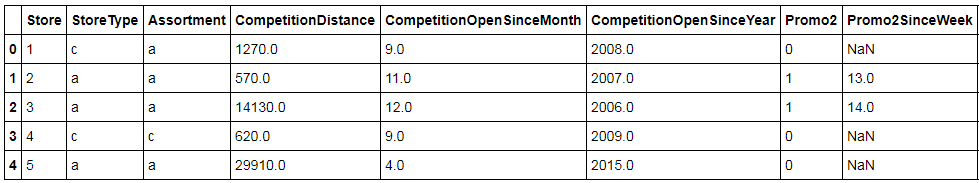

- store.csv - supplemental information about the stores
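Because store.csv holds one row per store while train.csv and test.csv hold one row per store and day, the store attributes are usually attached with a left join on `Store`. The following is a minimal sketch with made-up rows; the values are illustrative and not taken from the real files.

```python
import pandas as pd

# Toy stand-ins for train.csv and store.csv; all values are invented.
train = pd.DataFrame({
    "Store": [1, 1, 2],
    "Date": ["2015-07-30", "2015-07-31", "2015-07-31"],
    "Sales": [5020, 5263, 6064],
})
store = pd.DataFrame({
    "Store": [1, 2],
    "StoreType": ["c", "a"],
    "CompetitionDistance": [1270.0, 570.0],
})

# A left join keeps every daily row and repeats the store attributes on each one.
merged = pd.merge(train, store, on="Store", how="left")
print(merged)
```

With a left join, a store that is missing from the store table would still keep its daily rows, only with empty store attributes.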

Data fields

Most of the fields are self-explanatory. The following are descriptions for those that aren't.

- Id - an Id that represents a (Store, Date) duple within the test set

- Store - a unique Id for each store

- Sales - the turnover for any given day (this is what you are predicting)

- Customers - the number of customers on a given day

- Open - an indicator for whether the store was open: 0 = closed, 1 = open

- StateHoliday - indicates a state holiday. Normally all stores, with few exceptions, are closed on state holidays. Note that all schools are closed on public holidays and weekends. a = public holiday, b = Easter holiday, c = Christmas, 0 = None

- SchoolHoliday - indicates if the (Store, Date) was affected by the closure of public schools

- StoreType - differentiates between 4 different store models: a, b, c, d

- Assortment - describes an assortment level: a = basic, b = extra, c = extended

- CompetitionDistance - distance in meters to the nearest competitor store

- CompetitionOpenSince[Month/Year] - gives the approximate year and month of the time the nearest competitor was opened

- Promo - indicates whether a store is running a promo on that day

- Promo2 - Promo2 is a continuing and consecutive promotion for some stores: 0 = store is not participating, 1 = store is participating

- Promo2Since[Year/Week] - describes the year and calendar week when the store started participating in Promo2

- PromoInterval - describes the consecutive intervals Promo2 is started, naming the months the promotion is started anew. E.g. "Feb,May,Aug,Nov" means each round starts in February, May, August, November of any given year for that store
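The PromoInterval encoding can be checked for a particular date with a small helper. This is only a sketch: the function name `promo2_round_starts` is hypothetical, and matching on the first three letters is an assumption made so that a longer spelling such as "Sept" would still be recognized.

```python
import datetime

MONTH_ABBR = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
              "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]

def promo2_round_starts(promo_interval, date):
    """Return True if a new Promo2 round starts in the month of `date`.

    `promo_interval` is a string such as "Feb,May,Aug,Nov", or a missing
    value (None / NaN) when the store does not take part in Promo2.
    """
    if not isinstance(promo_interval, str):
        return False  # missing value: no Promo2 rounds for this store
    # Compare on the first three letters of each listed month.
    months = {m.strip()[:3] for m in promo_interval.split(",")}
    return MONTH_ABBR[date.month - 1] in months

print(promo2_round_starts("Feb,May,Aug,Nov", datetime.date(2015, 8, 1)))  # True
print(promo2_round_starts("Feb,May,Aug,Nov", datetime.date(2015, 9, 1)))  # False
```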

Import the required libraries

import pandas as pd

import datetime

import csv

import numpy as np

import os

import scipy as sp

import xgboost as xgb

import itertools

import operator

import warnings

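warnings.filterwarnings("ignore")

from sklearn.preprocessing import StandardScaler, LabelEncoder

from sklearn.base import TransformerMixin

# sklearn.cross_validation was removed in scikit-learn 0.20; model_selection provides the same train_test_split
from sklearn import model_selection as cross_validation

from matplotlib import pylab as plt

plot = True

goal = 'Sales'

myid = 'Id'

Define the transforms and the evaluation metric

Pay particular attention to this part when switching to a different loss function: the model is trained on log(Sales + 1), so the metric has to undo that transform before computing RMSPE.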

def ToWeight(y):
    w = np.zeros(y.shape, dtype=float)
    ind = y != 0
    w[ind] = 1./(y[ind]**2)
    return w

def rmspe(yhat, y):
    w = ToWeight(y)
    rmspe = np.sqrt(np.mean( w * (y - yhat)**2 ))
    return rmspe

def rmspe_xg(yhat, y):
    # y = y.values
    y = y.get_label()
    y = np.exp(y) - 1
    yhat = np.exp(yhat) - 1
    w = ToWeight(y)
    rmspe = np.sqrt(np.mean(w * (y - yhat)**2))
    return "rmspe", rmspe

store = pd.read_csv('./data/store.csv')

store.head()

train_df = pd.read_csv('./data/train.csv')

train_df.head()

test_df = pd.read_csv('./data/test.csv')

test_df.head()
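As a quick sanity check of the RMSPE metric defined earlier in this section, the sketch below recomputes it on three made-up values. Days with zero sales get weight 0, so they add nothing to the error but still count in the mean, which is the behaviour of the `ToWeight`/`rmspe` pair. The lower-case function names and the sample numbers are assumptions for illustration.

```python
import numpy as np

def to_weight(y):
    # 1 / y^2 for non-zero targets, 0 otherwise (avoids dividing by zero).
    w = np.zeros(y.shape, dtype=float)
    ind = y != 0
    w[ind] = 1.0 / (y[ind] ** 2)
    return w

def rmspe(yhat, y):
    return np.sqrt(np.mean(to_weight(y) * (y - yhat) ** 2))

y = np.array([100.0, 200.0, 0.0])      # true sales; the store is closed on the last day
yhat = np.array([110.0, 180.0, 50.0])  # predictions

# Squared percentage errors are 0.01, 0.01 and 0 (ignored), so the mean is 0.02 / 3.
score = rmspe(yhat, y)
print(score)  # about 0.0816
```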

Load the data

def load_data():
    """Load the data and split the columns into numeric and non-numeric features."""
    store = pd.read_csv('./data/store.csv')
    # Read StateHoliday as str because the column mixes 0 with 'a', 'b', 'c'.
    # Newer pandas releases no longer expose pd.np, so str replaces the former pd.np.string_.
    train_org = pd.read_csv('./data/train.csv', dtype={'StateHoliday': str})
    test_org = pd.read_csv('./data/test.csv', dtype={'StateHoliday': str})
    train = pd.merge(train_org, store, on='Store', how='left')
    test = pd.merge(test_org, store, on='Store', how='left')
    features = test.columns.tolist()
    numerics = ['int16', 'int32', 'int64', 'float16', 'float32', 'float64']
    features_numeric = test.select_dtypes(include=numerics).columns.tolist()
    features_non_numeric = [f for f in features if f not in features_numeric]
    return (train, test, features, features_non_numeric)

Data and feature processing
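The feature-processing code that follows fills missing values with the most frequent value for text columns and with the mean for numeric columns. Here is a minimal sketch of that rule on a toy frame. The values are invented, and `pd.api.types.is_numeric_dtype` is used instead of an object-dtype check, an assumption made so the sketch also behaves with newer string dtypes.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "StoreType": ["a", "a", None, "c"],                     # text column
    "CompetitionDistance": [100.0, np.nan, 300.0, 200.0],   # numeric column
})

# One fill value per column: mean for numeric columns, most frequent value otherwise.
fill = {
    c: df[c].mean() if pd.api.types.is_numeric_dtype(df[c])
    else df[c].value_counts().index[0]
    for c in df.columns
}
filled = df.fillna(value=fill)
print(filled)
```

Mean imputation is a simple default; for a column such as CompetitionDistance, a missing value may carry meaning of its own, so other fill strategies are worth comparing.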

def process_data(train, test, features, features_non_numeric):
    """Feature engineering and selection."""
    # # FEATURE ENGINEERING
    # Keep only days with sales; copy so the new columns go into an independent frame.
    train = train[train['Sales'] > 0].copy()
    for data in [train, test]:
        # year month day
        data['year'] = data.Date.apply(lambda x: x.split('-')[0])
        data['year'] = data['year'].astype(float)
        data['month'] = data.Date.apply(lambda x: x.split('-')[1])
        data['month'] = data['month'].astype(float)
        data['day'] = data.Date.apply(lambda x: x.split('-')[2])
        data['day'] = data['day'].astype(float)
        # promo interval "Jan,Apr,Jul,Oct": one 0/1 column per month (promojan ... promodec)
        for mon in ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun',
                    'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec']:
            data['promo' + mon.lower()] = data.PromoInterval.apply(
                lambda x: 0 if isinstance(x, float) else 1 if mon in x else 0)
    # # Features set.
    noisy_features = [myid, 'Date']
    features = [c for c in features if c not in noisy_features]
    features_non_numeric = [c for c in features_non_numeric if c not in noisy_features]
    # Note: only year/month/day are appended; the promo* columns above are not added to the feature list.
    features.extend(['year', 'month', 'day'])
    # Fill NA
    class DataFrameImputer(TransformerMixin):
        # http://stackoverflow.com/questions/25239958/impute-categorical-missing-values-in-scikit-learn
        def __init__(self):
            """Impute missing values.

            Columns of dtype object are imputed with the most frequent value in column.
            Columns of other types are imputed with mean of column.
            """

        def fit(self, X, y=None):
            self.fill = pd.Series(
                [X[c].value_counts().index[0]  # mode
                 if X[c].dtype == np.dtype('O') else X[c].mean()  # mean
                 for c in X],
                index=X.columns)
            return self

        def transform(self, X, y=None):
            return X.fillna(self.fill)

    train = DataFrameImputer().fit_transform(train)
    test = DataFrameImputer().fit_transform(test)
    # Pre-processing non-numeric values
    le = LabelEncoder()
    for col in features_non_numeric:
        le.fit(list(train[col]) + list(test[col]))
        train[col] = le.transform(train[col])
        test[col] = le.transform(test[col])
    # Models such as LR and neural networks are extremely sensitive to input scale, so standardize first
    scaler = StandardScaler()
    for col in set(features) - set(features_non_numeric) - \
            set([]):  # TODO: add what not to scale
        # StandardScaler expects 2-D input, so each column is passed as a one-column frame.
        scaler.fit(pd.concat([train[[col]], test[[col]]]))
        train[col] = scaler.transform(train[[col]]).ravel()
        test[col] = scaler.transform(test[[col]]).ravel()
    return (train, test, features, features_non_numeric)

Training and analysis

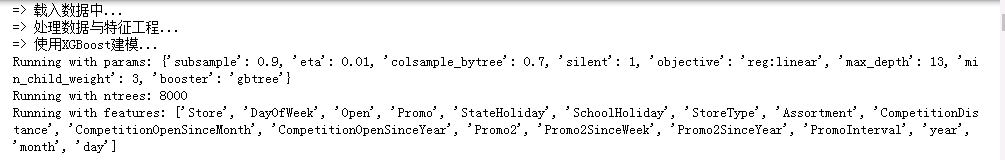

def XGB_native(train, test, features, features_non_numeric):
    depth = 13
    eta = 0.01
    ntrees = 8000
    mcw = 3
    params = {"objective": "reg:squarederror",  # current name of the former "reg:linear"
              "booster": "gbtree",
              "eta": eta,
              "max_depth": depth,
              "min_child_weight": mcw,
              "subsample": 0.9,
              "colsample_bytree": 0.7,
              "verbosity": 0}  # replaces the former "silent": 1
    print("Running with params: " + str(params))
    print("Running with ntrees: " + str(ntrees))
    print("Running with features: " + str(features))
    # Train model with local split
    tsize = 0.05
    X_train, X_test = cross_validation.train_test_split(train, test_size=tsize)
    dtrain = xgb.DMatrix(X_train[features], np.log(X_train[goal] + 1))
    dvalid = xgb.DMatrix(X_test[features], np.log(X_test[goal] + 1))
    # Early stopping monitors the last entry of the watchlist, which here is the training set.
    watchlist = [(dvalid, 'eval'), (dtrain, 'train')]
    # custom_metric was called feval in XGBoost releases before 1.6.
    gbm = xgb.train(params, dtrain, ntrees, evals=watchlist, early_stopping_rounds=100,
                    custom_metric=rmspe_xg, verbose_eval=True)
    train_probs = gbm.predict(xgb.DMatrix(X_test[features]))
    indices = train_probs < 0
    train_probs[indices] = 0
    error = rmspe(np.exp(train_probs) - 1, X_test[goal].values)
    print(error)
    # Predict and Export
    test_probs = gbm.predict(xgb.DMatrix(test[features]))
    indices = test_probs < 0
    test_probs[indices] = 0
    submission = pd.DataFrame({myid: test[myid], goal: np.exp(test_probs) - 1})
    if not os.path.exists('result/'):
        os.makedirs('result/')
    submission.to_csv("./result/dat-xgb_d%s_eta%s_ntree%s_mcw%s_tsize%s.csv" % (str(depth), str(eta), str(ntrees), str(mcw), str(tsize)), index=False)
    # Feature importance
    if plot:
        outfile = open('xgb.fmap', 'w')
        i = 0
        for feat in features:
            outfile.write('{0}\t{1}\tq\n'.format(i, feat))
            i = i + 1
        outfile.close()
        importance = gbm.get_fscore(fmap='xgb.fmap')
        importance = sorted(importance.items(), key=operator.itemgetter(1))
        df = pd.DataFrame(importance, columns=['feature', 'fscore'])
        df['fscore'] = df['fscore'] / df['fscore'].sum()
        # Plotitup
        plt.figure()
        df.plot()
        df.plot(kind='barh', x='feature', y='fscore', legend=False, figsize=(25, 15))
        plt.title('XGBoost Feature Importance')
        plt.xlabel('relative importance')
        plt.gcf().savefig('Feature_Importance_xgb_d%s_eta%s_ntree%s_mcw%s_tsize%s.png' % (str(depth), str(eta), str(ntrees), str(mcw), str(tsize)))

print("=> Loading data...")

train,test,features,features_non_numeric = load_data()

print("=> Processing data and engineering features...")

train,test,features,features_non_numeric = process_data(train,test,features,features_non_numeric)

print("=> Building the model with XGBoost...")

XGB_native(train,test,features,features_non_numeric)
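The model is trained on log(Sales + 1) and its predictions are mapped back with exp(x) - 1, after negative outputs have been clipped to zero. The sketch below shows that round trip with NumPy's `log1p` and `expm1`, which compute the same two transforms. The sample values and the pretend model outputs are made up.

```python
import numpy as np

sales = np.array([0.0, 5263.0, 6064.0])

# Forward transform used for the training target: log(y + 1).
target = np.log1p(sales)

# Pretend these are model outputs in log space; the first is slightly negative.
pred_log = np.array([-0.05, 8.5, 8.7])

# Clip negatives to 0 first so the inverse transform never yields negative sales.
pred_log = np.clip(pred_log, 0, None)
pred_sales = np.expm1(pred_log)

print(np.allclose(np.expm1(target), sales))  # the round trip recovers the input
print(pred_sales)
```

Working in log space makes a squared-error objective behave more like a relative error, which is closer to what RMSPE measures.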

Will train until train error hasn't decreased in 100 rounds.

[0] eval-rmspe:0.999864 train-rmspe:0.999864

[1] eval-rmspe:0.999838 train-rmspe:0.999838

[2] eval-rmspe:0.999810 train-rmspe:0.999810

[3] eval-rmspe:0.999780 train-rmspe:0.999780

[4] eval-rmspe:0.999747 train-rmspe:0.999748

[5] eval-rmspe:0.999713 train-rmspe:0.999713

[6] eval-rmspe:0.999676 train-rmspe:0.999676

[7] eval-rmspe:0.999637 train-rmspe:0.999637

[8] eval-rmspe:0.999594 train-rmspe:0.999595

[9] eval-rmspe:0.999550 train-rmspe:0.999550

[10] eval-rmspe:0.999502 train-rmspe:0.999502

[11] eval-rmspe:0.999451 train-rmspe:0.999451

[12] eval-rmspe:0.999397 train-rmspe:0.999397

[13] eval-rmspe:0.999339 train-rmspe:0.999339

[14] eval-rmspe:0.999277 train-rmspe:0.999278

[15] eval-rmspe:0.999212 train-rmspe:0.999213

[16] eval-rmspe:0.999143 train-rmspe:0.999143

[17] eval-rmspe:0.999069 train-rmspe:0.999070

[18] eval-rmspe:0.998991 train-rmspe:0.998992

[19] eval-rmspe:0.998908 train-rmspe:0.998909

[20] eval-rmspe:0.998821 train-rmspe:0.998822

[21] eval-rmspe:0.998728 train-rmspe:0.998729

[22] eval-rmspe:0.998630 train-rmspe:0.998631

[23] eval-rmspe:0.998527 train-rmspe:0.998528

[24] eval-rmspe:0.998418 train-rmspe:0.998418

[25] eval-rmspe:0.998303 train-rmspe:0.998303

[26] eval-rmspe:0.998181 train-rmspe:0.998182

[27] eval-rmspe:0.998053 train-rmspe:0.998053

[28] eval-rmspe:0.997917 train-rmspe:0.997918

[29] eval-rmspe:0.997775 train-rmspe:0.997776

[30] eval-rmspe:0.997626 train-rmspe:0.997627

[31] eval-rmspe:0.997469 train-rmspe:0.997470

[32] eval-rmspe:0.997303 train-rmspe:0.997304

[33] eval-rmspe:0.997130 train-rmspe:0.997130

[34] eval-rmspe:0.996947 train-rmspe:0.996948

[35] eval-rmspe:0.996756 train-rmspe:0.996757

[36] eval-rmspe:0.996556 train-rmspe:0.996557

[37] eval-rmspe:0.996347 train-rmspe:0.996348

[38] eval-rmspe:0.996127 train-rmspe:0.996128

[39] eval-rmspe:0.995897 train-rmspe:0.995898

[40] eval-rmspe:0.995657 train-rmspe:0.995658

[41] eval-rmspe:0.995405 train-rmspe:0.995406

[42] eval-rmspe:0.995143 train-rmspe:0.995143

[43] eval-rmspe:0.994869 train-rmspe:0.994870

[44] eval-rmspe:0.994582 train-rmspe:0.994583

[45] eval-rmspe:0.994284 train-rmspe:0.994285

[46] eval-rmspe:0.993972 train-rmspe:0.993973

[47] eval-rmspe:0.993647 train-rmspe:0.993648

[48] eval-rmspe:0.993310 train-rmspe:0.993310

[49] eval-rmspe:0.992957 train-rmspe:0.992958

[50] eval-rmspe:0.992592 train-rmspe:0.992592

[51] eval-rmspe:0.992212 train-rmspe:0.992212

[52] eval-rmspe:0.991815 train-rmspe:0.991815

[53] eval-rmspe:0.991404 train-rmspe:0.991404

[54] eval-rmspe:0.990975 train-rmspe:0.990975

[55] eval-rmspe:0.990532 train-rmspe:0.990532

[56] eval-rmspe:0.990072 train-rmspe:0.990072

[57] eval-rmspe:0.989596 train-rmspe:0.989595

[58] eval-rmspe:0.989102 train-rmspe:0.989101

[59] eval-rmspe:0.988589 train-rmspe:0.988588

[60] eval-rmspe:0.988058 train-rmspe:0.988058

[61] eval-rmspe:0.987509 train-rmspe:0.987508

[62] eval-rmspe:0.986942 train-rmspe:0.986941

[63] eval-rmspe:0.986356 train-rmspe:0.986355

[64] eval-rmspe:0.985748 train-rmspe:0.985747

[65] eval-rmspe:0.985122 train-rmspe:0.985121

[66] eval-rmspe:0.984474 train-rmspe:0.984473

[67] eval-rmspe:0.983806 train-rmspe:0.983804

[68] eval-rmspe:0.983117 train-rmspe:0.983115

[69] eval-rmspe:0.982407 train-rmspe:0.982406

[70] eval-rmspe:0.981673 train-rmspe:0.981671

[71] eval-rmspe:0.980916 train-rmspe:0.980914

[72] eval-rmspe:0.980138 train-rmspe:0.980136

[73] eval-rmspe:0.979336 train-rmspe:0.979333

[74] eval-rmspe:0.978509 train-rmspe:0.978506

[75] eval-rmspe:0.977662 train-rmspe:0.977660

[76] eval-rmspe:0.976788 train-rmspe:0.976786

[77] eval-rmspe:0.975891 train-rmspe:0.975887

[78] eval-rmspe:0.974967 train-rmspe:0.974963

[79] eval-rmspe:0.974015 train-rmspe:0.974011

[80] eval-rmspe:0.973041 train-rmspe:0.973037

[81] eval-rmspe:0.972039 train-rmspe:0.972036

[82] eval-rmspe:0.971013 train-rmspe:0.971010

[83] eval-rmspe:0.969961 train-rmspe:0.969957

[84] eval-rmspe:0.968882 train-rmspe:0.968878

[85] eval-rmspe:0.967775 train-rmspe:0.967770

[86] eval-rmspe:0.966639 train-rmspe:0.966635

[87] eval-rmspe:0.965480 train-rmspe:0.965475

[88] eval-rmspe:0.964287 train-rmspe:0.964283

[89] eval-rmspe:0.963068 train-rmspe:0.963063

[90] eval-rmspe:0.961818 train-rmspe:0.961814

[91] eval-rmspe:0.960541 train-rmspe:0.960536

[92] eval-rmspe:0.959236 train-rmspe:0.959231

[93] eval-rmspe:0.957903 train-rmspe:0.957898

[94] eval-rmspe:0.956536 train-rmspe:0.956532

[95] eval-rmspe:0.955146 train-rmspe:0.955141

[96] eval-rmspe:0.953722 train-rmspe:0.953717

[97] eval-rmspe:0.952264 train-rmspe:0.952260

[98] eval-rmspe:0.950781 train-rmspe:0.950777

[99] eval-rmspe:0.949263 train-rmspe:0.949260

[100] eval-rmspe:0.947724 train-rmspe:0.947721

[101] eval-rmspe:0.946153 train-rmspe:0.946150

[102] eval-rmspe:0.944546 train-rmspe:0.944544

[103] eval-rmspe:0.942909 train-rmspe:0.942907

[104] eval-rmspe:0.941239 train-rmspe:0.941238

[105] eval-rmspe:0.939537 train-rmspe:0.939537

[106] eval-rmspe:0.937813 train-rmspe:0.937813

[107] eval-rmspe:0.936048 train-rmspe:0.936050

[108] eval-rmspe:0.934255 train-rmspe:0.934258

[109] eval-rmspe:0.932432 train-rmspe:0.932436

[110] eval-rmspe:0.930573 train-rmspe:0.930578

[111] eval-rmspe:0.928691 train-rmspe:0.928697

[112] eval-rmspe:0.926783 train-rmspe:0.926790

[113] eval-rmspe:0.924841 train-rmspe:0.924849

[114] eval-rmspe:0.922866 train-rmspe:0.922875

[115] eval-rmspe:0.920861 train-rmspe:0.920872

[116] eval-rmspe:0.918817 train-rmspe:0.918830

[117] eval-rmspe:0.916740 train-rmspe:0.916756

[118] eval-rmspe:0.914641 train-rmspe:0.914659

[119] eval-rmspe:0.912504 train-rmspe:0.912524

[120] eval-rmspe:0.910340 train-rmspe:0.910363

[121] eval-rmspe:0.908146 train-rmspe:0.908172

[122] eval-rmspe:0.905924 train-rmspe:0.905952

[123] eval-rmspe:0.903665 train-rmspe:0.903696

[124] eval-rmspe:0.901385 train-rmspe:0.901419

[125] eval-rmspe:0.899064 train-rmspe:0.899100

[126] eval-rmspe:0.896714 train-rmspe:0.896753

[127] eval-rmspe:0.894339 train-rmspe:0.894382

[128] eval-rmspe:0.891930 train-rmspe:0.891975

[129] eval-rmspe:0.889488 train-rmspe:0.889537

[130] eval-rmspe:0.887016 train-rmspe:0.887070

[131] eval-rmspe:0.884516 train-rmspe:0.884575

[132] eval-rmspe:0.881991 train-rmspe:0.882054

[133] eval-rmspe:0.879438 train-rmspe:0.879505

[134] eval-rmspe:0.876871 train-rmspe:0.876942

[135] eval-rmspe:0.874260 train-rmspe:0.874337

[136] eval-rmspe:0.871616 train-rmspe:0.871698

[137] eval-rmspe:0.868961 train-rmspe:0.869046

[138] eval-rmspe:0.866260 train-rmspe:0.866351

[139] eval-rmspe:0.863534 train-rmspe:0.863632

[140] eval-rmspe:0.860796 train-rmspe:0.860899

[141] eval-rmspe:0.858021 train-rmspe:0.858131

[142] eval-rmspe:0.855213 train-rmspe:0.855331

[143] eval-rmspe:0.852387 train-rmspe:0.852512

[144] eval-rmspe:0.849545 train-rmspe:0.849678

[145] eval-rmspe:0.846674 train-rmspe:0.846814

[146] eval-rmspe:0.843768 train-rmspe:0.843917

[147] eval-rmspe:0.840848 train-rmspe:0.841005

[148] eval-rmspe:0.837907 train-rmspe:0.838073

[149] eval-rmspe:0.834927 train-rmspe:0.835103

[150] eval-rmspe:0.831933 train-rmspe:0.832115

[151] eval-rmspe:0.828913 train-rmspe:0.829105

[152] eval-rmspe:0.825874 train-rmspe:0.826077

[153] eval-rmspe:0.822809 train-rmspe:0.823022

[154] eval-rmspe:0.819724 train-rmspe:0.819949

[155] eval-rmspe:0.816622 train-rmspe:0.816859

[156] eval-rmspe:0.813492 train-rmspe:0.813740

[157] eval-rmspe:0.810347 train-rmspe:0.810608

[158] eval-rmspe:0.807189 train-rmspe:0.807463

[159] eval-rmspe:0.804018 train-rmspe:0.804305

[160] eval-rmspe:0.800810 train-rmspe:0.801112

[161] eval-rmspe:0.797586 train-rmspe:0.797902

[162] eval-rmspe:0.794338 train-rmspe:0.794668

[163] eval-rmspe:0.791072 train-rmspe:0.791419

[164] eval-rmspe:0.787807 train-rmspe:0.788168

[165] eval-rmspe:0.784528 train-rmspe:0.784904

[166] eval-rmspe:0.781223 train-rmspe:0.781614

[167] eval-rmspe:0.777907 train-rmspe:0.778315

[168] eval-rmspe:0.774574 train-rmspe:0.774998

[169] eval-rmspe:0.771232 train-rmspe:0.771673

[170] eval-rmspe:0.767850 train-rmspe:0.768309

[171] eval-rmspe:0.764459 train-rmspe:0.764921

[172] eval-rmspe:0.761063 train-rmspe:0.761544

[173] eval-rmspe:0.757638 train-rmspe:0.758139

[174] eval-rmspe:0.754222 train-rmspe:0.754743

[175] eval-rmspe:0.750783 train-rmspe:0.751324

[176] eval-rmspe:0.747327 train-rmspe:0.747891

[177] eval-rmspe:0.743874 train-rmspe:0.744462

[178] eval-rmspe:0.740393 train-rmspe:0.741004

[179] eval-rmspe:0.736912 train-rmspe:0.737547

[180] eval-rmspe:0.733413 train-rmspe:0.734073

[181] eval-rmspe:0.729911 train-rmspe:0.730592

[182] eval-rmspe:0.726400 train-rmspe:0.727108

[183] eval-rmspe:0.722867 train-rmspe:0.723602

[184] eval-rmspe:0.719336 train-rmspe:0.720100

[185] eval-rmspe:0.715804 train-rmspe:0.716595

[186] eval-rmspe:0.712266 train-rmspe:0.713081

[187] eval-rmspe:0.708727 train-rmspe:0.709572

[188] eval-rmspe:0.705180 train-rmspe:0.706057

[189] eval-rmspe:0.701624 train-rmspe:0.702531

[190] eval-rmspe:0.698065 train-rmspe:0.699002

[191] eval-rmspe:0.694486 train-rmspe:0.695456

[192] eval-rmspe:0.690903 train-rmspe:0.691906

[193] eval-rmspe:0.687327 train-rmspe:0.688363

[194] eval-rmspe:0.683744 train-rmspe:0.684816

[195] eval-rmspe:0.680163 train-rmspe:0.681267

[196] eval-rmspe:0.676581 train-rmspe:0.677718

[197] eval-rmspe:0.672995 train-rmspe:0.674167

[198] eval-rmspe:0.669403 train-rmspe:0.670615

[199] eval-rmspe:0.665808 train-rmspe:0.667054

[200] eval-rmspe:0.662204 train-rmspe:0.663490

[201] eval-rmspe:0.658612 train-rmspe:0.659936

[202] eval-rmspe:0.655009 train-rmspe:0.656374

[203] eval-rmspe:0.651414 train-rmspe:0.652821

[204] eval-rmspe:0.647823 train-rmspe:0.649273

[205] eval-rmspe:0.644224 train-rmspe:0.645712

[206] eval-rmspe:0.640633 train-rmspe:0.642163

[207] eval-rmspe:0.637032 train-rmspe:0.638610

[208] eval-rmspe:0.633434 train-rmspe:0.635059

[209] eval-rmspe:0.629856 train-rmspe:0.631529

[210] eval-rmspe:0.626272 train-rmspe:0.627993

[211] eval-rmspe:0.622688 train-rmspe:0.624438

[212] eval-rmspe:0.619104 train-rmspe:0.620901

[213] eval-rmspe:0.615533 train-rmspe:0.617381

[214] eval-rmspe:0.611960 train-rmspe:0.613804

[215] eval-rmspe:0.608390 train-rmspe:0.610288

[216] eval-rmspe:0.604828 train-rmspe:0.606736

[217] eval-rmspe:0.601281 train-rmspe:0.603231

[218] eval-rmspe:0.597736 train-rmspe:0.599731

[219] eval-rmspe:0.594199 train-rmspe:0.596253

[220] eval-rmspe:0.590670 train-rmspe:0.592772

[221] eval-rmspe:0.587147 train-rmspe:0.589304

[222] eval-rmspe:0.583627 train-rmspe:0.585833

[223] eval-rmspe:0.580118 train-rmspe:0.582381

[224] eval-rmspe:0.576615 train-rmspe:0.578939

[225] eval-rmspe:0.573117 train-rmspe:0.575501

[226] eval-rmspe:0.569634 train-rmspe:0.572083

[227] eval-rmspe:0.566160 train-rmspe:0.568666

[228] eval-rmspe:0.562700 train-rmspe:0.565268

[229] eval-rmspe:0.559248 train-rmspe:0.561880

[230] eval-rmspe:0.555806 train-rmspe:0.558472

[231] eval-rmspe:0.552370 train-rmspe:0.555089

[232] eval-rmspe:0.548946 train-rmspe:0.551725

[233] eval-rmspe:0.545530 train-rmspe:0.548373

[234] eval-rmspe:0.542128 train-rmspe:0.544979

[235] eval-rmspe:0.538738 train-rmspe:0.541658

[236] eval-rmspe:0.535359 train-rmspe:0.538285

[237] eval-rmspe:0.531994 train-rmspe:0.534954

[238] eval-rmspe:0.528642 train-rmspe:0.531675

[239] eval-rmspe:0.525300 train-rmspe:0.528395

[240] eval-rmspe:0.521970 train-rmspe:0.525119

[241] eval-rmspe:0.518657 train-rmspe:0.521875

[242] eval-rmspe:0.515360 train-rmspe:0.518650

[243] eval-rmspe:0.512069 train-rmspe:0.515436

[244] eval-rmspe:0.508801 train-rmspe:0.512158

[245] eval-rmspe:0.505542 train-rmspe:0.508967

[246] eval-rmspe:0.502301 train-rmspe:0.505802

[247] eval-rmspe:0.499072 train-rmspe:0.502568

[248] eval-rmspe:0.495861 train-rmspe:0.499409

[249] eval-rmspe:0.492660 train-rmspe:0.496207

[250] eval-rmspe:0.489473 train-rmspe:0.493101

[251] eval-rmspe:0.486301 train-rmspe:0.489997

[252] eval-rmspe:0.483143 train-rmspe:0.486908

[253] eval-rmspe:0.480005 train-rmspe:0.483849

[254] eval-rmspe:0.476886 train-rmspe:0.480812

[255] eval-rmspe:0.473782 train-rmspe:0.477789

[256] eval-rmspe:0.470686 train-rmspe:0.474778

[257] eval-rmspe:0.467605 train-rmspe:0.471782

[258] eval-rmspe:0.464537 train-rmspe:0.468798

[259] eval-rmspe:0.461504 train-rmspe:0.465849

[260] eval-rmspe:0.458486 train-rmspe:0.462883

[261] eval-rmspe:0.455457 train-rmspe:0.459945

[262] eval-rmspe:0.452470 train-rmspe:0.457031

[263] eval-rmspe:0.449508 train-rmspe:0.454157

[264] eval-rmspe:0.446550 train-rmspe:0.451285

[265] eval-rmspe:0.443623 train-rmspe:0.448448

[266] eval-rmspe:0.440713 train-rmspe:0.445626

[267] eval-rmspe:0.437818 train-rmspe:0.442742

[268] eval-rmspe:0.434943 train-rmspe:0.439899

[269] eval-rmspe:0.432090 train-rmspe:0.437131

[270] eval-rmspe:0.429257 train-rmspe:0.434280

[271] eval-rmspe:0.426442 train-rmspe:0.431552

[272] eval-rmspe:0.423644 train-rmspe:0.428818

[273] eval-rmspe:0.420857 train-rmspe:0.426133

[274] eval-rmspe:0.418090 train-rmspe:0.423427

[275] eval-rmspe:0.415340 train-rmspe:0.420774

[276] eval-rmspe:0.412621 train-rmspe:0.418084

[277] eval-rmspe:0.409919 train-rmspe:0.415451

[278] eval-rmspe:0.407238 train-rmspe:0.412865

[279] eval-rmspe:0.404579 train-rmspe:0.410286

[280] eval-rmspe:0.401938 train-rmspe:0.407717

[281] eval-rmspe:0.399287 train-rmspe:0.405173

[282] eval-rmspe:0.396676 train-rmspe:0.402659

[283] eval-rmspe:0.394083 train-rmspe:0.400166

[284] eval-rmspe:0.391519 train-rmspe:0.397708

[285] eval-rmspe:0.388986 train-rmspe:0.395199

[286] eval-rmspe:0.386444 train-rmspe:0.392762

[287] eval-rmspe:0.383928 train-rmspe:0.390350

[288] eval-rmspe:0.381446 train-rmspe:0.387937

[289] eval-rmspe:0.378978 train-rmspe:0.385567

[290] eval-rmspe:0.376485 train-rmspe:0.383176

[291] eval-rmspe:0.374064 train-rmspe:0.380707

[292] eval-rmspe:0.371648 train-rmspe:0.378380

[293] eval-rmspe:0.369268 train-rmspe:0.375973

[294] eval-rmspe:0.366876 train-rmspe:0.373680

[295] eval-rmspe:0.364530 train-rmspe:0.371400

[296] eval-rmspe:0.362199 train-rmspe:0.369171

[297] eval-rmspe:0.359884 train-rmspe:0.366944

[298] eval-rmspe:0.357610 train-rmspe:0.364654

[299] eval-rmspe:0.355357 train-rmspe:0.362500

[300] eval-rmspe:0.353066 train-rmspe:0.360315

[301] eval-rmspe:0.350850 train-rmspe:0.358120

[302] eval-rmspe:0.348654 train-rmspe:0.355849

[303] eval-rmspe:0.346417 train-rmspe:0.353714

[304] eval-rmspe:0.344271 train-rmspe:0.351512

[305] eval-rmspe:0.342142 train-rmspe:0.349361

[306] eval-rmspe:0.339941 train-rmspe:0.347270

[307] eval-rmspe:0.337833 train-rmspe:0.345234

[308] eval-rmspe:0.335749 train-rmspe:0.343239

[309] eval-rmspe:0.333685 train-rmspe:0.341267

[310] eval-rmspe:0.331629 train-rmspe:0.339320

[311] eval-rmspe:0.329614 train-rmspe:0.337300

[312] eval-rmspe:0.327619 train-rmspe:0.335254

[313] eval-rmspe:0.325638 train-rmspe:0.333346

[314] eval-rmspe:0.323649 train-rmspe:0.331452

[315] eval-rmspe:0.321682 train-rmspe:0.329566

[316] eval-rmspe:0.319734 train-rmspe:0.327706

[317] eval-rmspe:0.317831 train-rmspe:0.325903

[318] eval-rmspe:0.315936 train-rmspe:0.324120

[319] eval-rmspe:0.314067 train-rmspe:0.322258

[320] eval-rmspe:0.312201 train-rmspe:0.320489

[321] eval-rmspe:0.310397 train-rmspe:0.318799

[322] eval-rmspe:0.308579 train-rmspe:0.317072

[323] eval-rmspe:0.306776 train-rmspe:0.315366

[324] eval-rmspe:0.305025 train-rmspe:0.313709

[325] eval-rmspe:0.303214 train-rmspe:0.311992

[326] eval-rmspe:0.301484 train-rmspe:0.310345

[327] eval-rmspe:0.299785 train-rmspe:0.308697

[328] eval-rmspe:0.298099 train-rmspe:0.307095

[329] eval-rmspe:0.296425 train-rmspe:0.305521

[330] eval-rmspe:0.294744 train-rmspe:0.303948

[331] eval-rmspe:0.293088 train-rmspe:0.302402

[332] eval-rmspe:0.291456 train-rmspe:0.300756

[333] eval-rmspe:0.289895 train-rmspe:0.299292

[334] eval-rmspe:0.288335 train-rmspe:0.297665

[335] eval-rmspe:0.286746 train-rmspe:0.296190
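Each log line has the form `[round] eval-rmspe:<value> train-rmspe:<value>`, so the error curve can be recovered with a short parser. This sketch uses three lines copied from the output above; the regular expression and variable names are illustrative choices.

```python
import re

log_text = """\
[0] eval-rmspe:0.999864 train-rmspe:0.999864
[100] eval-rmspe:0.947724 train-rmspe:0.947721
[335] eval-rmspe:0.286746 train-rmspe:0.296190
"""

pattern = re.compile(r"\[(\d+)\]\s+eval-rmspe:([\d.]+)\s+train-rmspe:([\d.]+)")

# One (round, eval_rmspe, train_rmspe) tuple per matching line.
rows = [
    (int(m.group(1)), float(m.group(2)), float(m.group(3)))
    for m in pattern.finditer(log_text)
]

# Round with the lowest validation error among the parsed lines.
best_round, best_eval, _ = min(rows, key=lambda r: r[1])
print(best_round, best_eval)
```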
