This post shows how to scrape protein family information from the UniProt website.

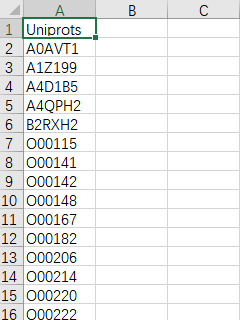

Original data:

Enough talk, here is the code:

    import time
    from multiprocessing import Pool

    import pandas as pd
    import requests
    from bs4 import BeautifulSoup


    class Downloader:
        """Fetch protein family information from the UniProt website.

        Modified: 2020-01-09
        """

        def __init__(self):
            self.server = 'https://www.uniprot.org/uniprot/'
            self.file = r"D:\SMALL_MOLECULAR_AIDS\chembl\outcome\10uM(less)\uniprots(2806).csv"
            # 'XXX' is a placeholder that get_contents() replaces with the accession.
            self.url = 'https://www.uniprot.org/uniprot/XXX#family_and_domains'
            self.uniprots = []
            self.headers = {
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:71.0) Gecko/20100101 Firefox/71.0',
                'Upgrade-Insecure-Requests': '1',
            }
            # Text file that collects one result line per accession.
            self.outpath_txt = r"C:\Users\86177\Desktop\families.txt"

        def get_uni(self):
            """Load the UniProt accessions from the input CSV. Modified: 2020-01-07"""
            df = pd.read_csv(self.file)
            self.uniprots.extend(df['Uniprots'])

        def get_contents(self, uniprot):
            """Return the family names for one accession, followed by the accession.

            Modified: 2020-01-09
            """
            target = self.url.replace('XXX', uniprot)
            # verify=False skips TLS certificate verification.
            rep = requests.get(target, verify=False, headers=self.headers, timeout=30)
            rep.raise_for_status()
            soup = BeautifulSoup(rep.text, 'html.parser')
            families = soup.select('#family_and_domains > div.annotation > a')
            if not families:
                # Fall back to the second page layout.
                families = soup.select('#family_and_domains > span > a')
            fam = [i.get_text() for i in families]
            fam.append(uniprot)
            print(fam)
            return fam

        def write_txt(self, family):
            """Append one tab-separated result line to the text file. Modified: 2020-01-07"""
            with open(self.outpath_txt, 'a', encoding='utf-8') as f:
                f.write('\t'.join(family) + '\n')

        def trans_csv(self):
            """Map the scraped results back into the original CSV. Modified: 2020-01-09"""
            df = pd.read_csv(self.file)
            families = {}
            with open(self.outpath_txt, 'r', encoding='utf-8') as f:
                for line in f:
                    f_u = line.rstrip('\n').split('\t')
                    # The last field is the accession; the rest are family names.
                    families[f_u[-1]] = '|'.join(f_u[:-1])
            # Accessions missing from the results file map to None.
            df['Family'] = df['Uniprots'].map(lambda x: families.get(x))
            df.to_csv(self.file)


    if __name__ == "__main__":
        dl = Downloader()
        dl.get_uni()
        pool = Pool(processes=8)
        for uniprot in dl.uniprots:
            try:
                # get_contents() runs here in the parent process;
                # only the file write is handed to the pool.
                pool.apply_async(func=dl.write_txt, args=(dl.get_contents(uniprot),))
                print("uniprot: " + uniprot + " info download finished")
                time.sleep(0.5)
            except Exception:
                print(uniprot + " download failed")
        pool.close()
        pool.join()
        dl.trans_csv()
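
In the main loop above, `get_contents` is evaluated in the parent process before `apply_async` is called, so the HTTP requests still run one after another and only the file write reaches the pool. The following is a minimal sketch of one way to make the downloads themselves concurrent. It swaps in threads (`concurrent.futures.ThreadPoolExecutor`) for `multiprocessing.Pool`, on the assumption that the work is I/O-bound. `fetch_all` and `fake_fetch` are hypothetical names that are not part of the original script; `fake_fetch` only stands in for `Downloader.get_contents` so the example runs without network access.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_all(accessions, fetch, max_workers=8):
    """Call fetch(accession) concurrently.

    Returns a dict mapping each accession to the fetch result,
    or to None if fetch raised an exception for that accession.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Submit every download up front and remember which future is which.
        future_to_acc = {executor.submit(fetch, acc): acc for acc in accessions}
        for future in as_completed(future_to_acc):
            acc = future_to_acc[future]
            try:
                results[acc] = future.result()
            except Exception:
                # A failed download is recorded as None so it can be retried.
                results[acc] = None
    return results


def fake_fetch(accession):
    """Hypothetical stand-in for Downloader.get_contents (no network)."""
    if accession == "BAD":
        raise ValueError("simulated download failure")
    return ["Example family", accession]


if __name__ == "__main__":
    print(fetch_all(["P1", "BAD", "P2"], fake_fetch, max_workers=2))
```

With the real script, one would presumably pass `dl.get_contents` as `fetch` and write the results from the main thread afterwards, which also avoids several workers appending to the same file at once. A small `max_workers` value is probably a good idea, since the original loop deliberately sleeps between requests to go easy on the server.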

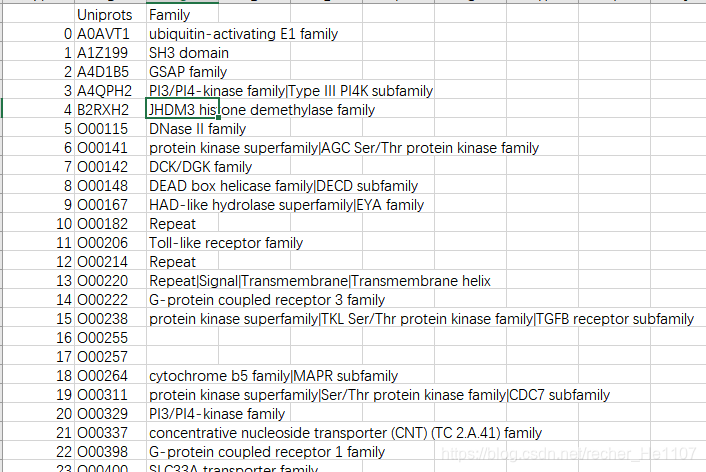

Output:

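As you can see, some UniProt accessions still have no family assigned. The site's page structure has probably changed, so the scraper missed them. More digging to do!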
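
Before digging further, it may help to list exactly which accessions came back without a family, so that only those pages need to be inspected or retried. This is a minimal sketch, assuming the results are already held in a dict keyed by accession; `missing_families` and the sample accessions are hypothetical and do not come from the original script.

```python
def missing_families(accessions, families):
    """Return the accessions that have no family recorded.

    An accession counts as missing if it is absent from `families`
    or maps to None or an empty string.
    """
    return [acc for acc in accessions if not families.get(acc)]


if __name__ == "__main__":
    # Made-up accessions and family names, for illustration only.
    accessions = ["A00001", "A00002", "A00003"]
    families = {"A00001": "Example family", "A00002": ""}
    print(missing_families(accessions, families))
```

The returned accessions can then be opened in a browser to see how their "Family & Domains" section differs from the two layouts the selectors cover.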
