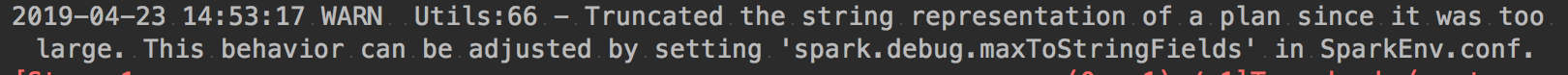

This post covers the Spark warning `This behavior can be adjusted by setting 'spark.debug.maxToStringFields' in SparkEnv.conf.` and shows how to raise that limit in PySpark.
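Some background helps here. When Spark turns a plan node into a string and that node has more fields (for example, columns) than the limit allows, it prints only the first few fields and logs this warning once. The default limit is 25. The pure-Python function below is a hypothetical approximation of that truncation, loosely modeled on Spark's internal `truncatedString` helper. The name `truncated_string` and the exact output format are assumptions made for illustration, not Spark's API.

```python
import logging

logger = logging.getLogger("truncation_sketch")

# Assumed to match Spark's default limit of 25 fields.
DEFAULT_MAX_TO_STRING_FIELDS = 25

_warning_logged = False


def truncated_string(fields, start="[", sep=", ", end="]",
                     max_fields=DEFAULT_MAX_TO_STRING_FIELDS):
    """Hypothetical helper: render `fields`, keeping at most max_fields - 1
    entries and summarising the rest, similar to how Spark shortens plans."""
    global _warning_logged
    fields = list(fields)
    if len(fields) <= max_fields:
        return start + sep.join(str(f) for f in fields) + end
    if not _warning_logged:
        # Spark logs its truncation warning only once; mimic that with a flag.
        logger.warning("Truncated the string representation of a plan "
                       "since it was too large.")
        _warning_logged = True
    kept = max(0, max_fields - 1)
    shown = sep.join(str(f) for f in fields[:kept])
    return f"{start}{shown}{sep}... {len(fields) - kept} more fields{end}"


if __name__ == "__main__":
    columns = [f"col{i}" for i in range(30)]
    print(truncated_string(columns))                  # ends with "... 6 more fields]"
    print(truncated_string(columns, max_fields=100))  # all 30 columns shown
```

This is why raising the limit removes the warning: once the number of fields fits under the limit, nothing is truncated and nothing is logged.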

Original code:

```python
from pyspark.sql import SparkSession


def create_spark():
    spark = SparkSession. \
        builder.master('local'). \
        appName('pipelinedemo'). \
        getOrCreate()
    return spark
```

Running it prints:

```
WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
```

Fix: add `config("spark.debug.maxToStringFields", "100")` to the builder chain:

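```python
from pyspark.sql import SparkSession


def create_spark():
    # Raise the field limit (default 25) so plan strings are not truncated.
    spark = SparkSession. \
        builder.master('local'). \
        appName('pipelinedemo'). \
        config("spark.debug.maxToStringFields", "100"). \
        getOrCreate()
    return spark
```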
