本文主要是介绍spark从入门到放弃 之 分布式运行jar包,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

scala代码如下:

import org.apache.spark.SparkConf

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._/*** 统计字符出现次数*/

object WordCount {def main(args: Array[String]) {if (args.length < 1) {System.err.println("Usage: <file>")System.exit(1)}val conf = new SparkConf()val sc = new SparkContext(conf)val line = sc.textFile(args(0))line.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_+_).collect().foreach(println)sc.stop()}

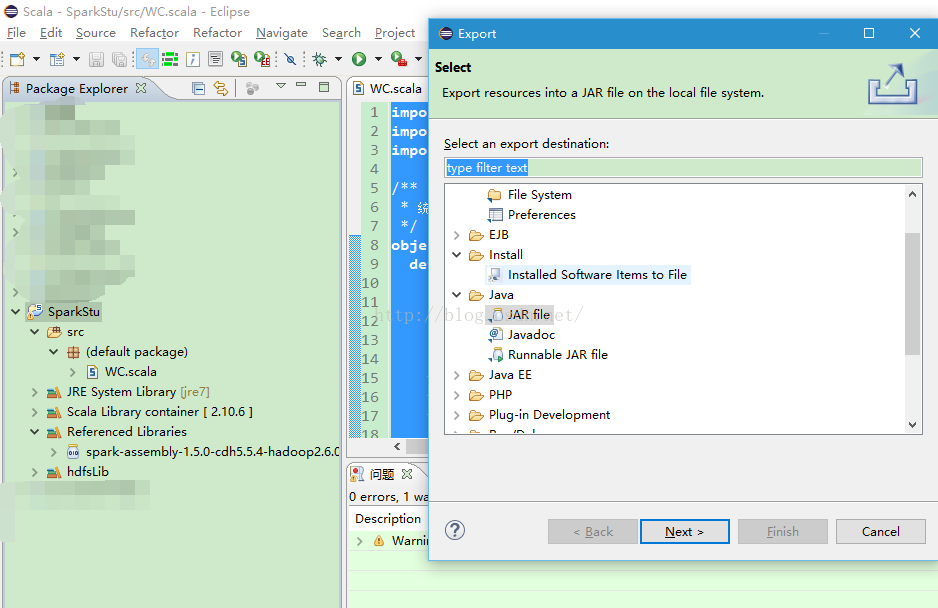

}用eclipse将其打成jar包

注意:scala的object名不一定要和文件名相同,这一点和java不一样。例如我的object名为WordCount,但文件名是WC.scala

上传服务器

查看服务器上测试文件内容

-bash-4.1$ hadoop fs -cat /user/hdfs/test.txt

张三 张四

张三 张五

李三 李三

李四 李四

李四 王二

老王 老王运行spark-submit命令,提交jar包

-bash-4.1$ spark-submit --class "WordCount" wc.jar /user/hdfs/test.txt

16/08/22 15:54:17 INFO SparkContext: Running Spark version 1.5.0-cdh5.5.4

16/08/22 15:54:18 INFO SecurityManager: Changing view acls to: hdfs

16/08/22 15:54:18 INFO SecurityManager: Changing modify acls to: hdfs

16/08/22 15:54:18 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hdfs); users with modify permissions: Set(hdfs)

16/08/22 15:54:19 INFO Slf4jLogger: Slf4jLogger started

16/08/22 15:54:19 INFO Remoting: Starting remoting

16/08/22 15:54:19 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@192.168.56.201:55886]

16/08/22 15:54:19 INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkDriver@192.168.56.201:55886]

16/08/22 15:54:19 INFO Utils: Successfully started service 'sparkDriver' on port 55886.

16/08/22 15:54:19 INFO SparkEnv: Registering MapOutputTracker

16/08/22 15:54:19 INFO SparkEnv: Registering BlockManagerMaster

16/08/22 15:54:19 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-eccd9ad6-6296-4508-a9c8-a22b5a36ecbe

16/08/22 15:54:20 INFO MemoryStore: MemoryStore started with capacity 534.5 MB

16/08/22 15:54:20 INFO HttpFileServer: HTTP File server directory is /tmp/spark-bbf694e7-32e2-40b6-88a3-4d97a1d1aab9/httpd-72a45554-b57b-4a5d-af2f-24f198e6300b

16/08/22 15:54:20 INFO HttpServer: Starting HTTP Server

16/08/22 15:54:20 INFO Utils: Successfully started service 'HTTP file server' on port 59636.

16/08/22 15:54:20 INFO SparkEnv: Registering OutputCommitCoordinator

16/08/22 15:54:41 INFO Utils: Successfully started service 'SparkUI' on port 4040.

16/08/22 15:54:41 INFO SparkUI: Started SparkUI at http://192.168.56.201:4040

16/08/22 15:54:41 INFO SparkContext: Added JAR file:/var/lib/hadoop-hdfs/wc.jar at http://192.168.56.201:59636/jars/wc.jar with timestamp 1471852481181

16/08/22 15:54:41 WARN MetricsSystem: Using default name DAGScheduler for source because spark.app.id is not set.

16/08/22 15:54:41 INFO RMProxy: Connecting to ResourceManager at hadoop01/192.168.56.201:8032

16/08/22 15:54:41 INFO Client: Requesting a new application from cluster with 2 NodeManagers

16/08/22 15:54:41 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (1536 MB per container)

16/08/22 15:54:41 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

16/08/22 15:54:41 INFO Client: Setting up container launch context for our AM

16/08/22 15:54:41 INFO Client: Setting up the launch environment for our AM container

16/08/22 15:54:41 INFO Client: Preparing resources for our AM container

16/08/22 15:54:42 INFO Client: Uploading resource file:/tmp/spark-bbf694e7-32e2-40b6-88a3-4d97a1d1aab9/__spark_conf__5421268438919389977.zip -> hdfs://hadoop01:8020/user/hdfs/.sparkStaging/application_1471848612199_0005/__spark_conf__5421268438919389977.zip

16/08/22 15:54:43 INFO SecurityManager: Changing view acls to: hdfs

16/08/22 15:54:43 INFO SecurityManager: Changing modify acls to: hdfs

16/08/22 15:54:43 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hdfs); users with modify permissions: Set(hdfs)

16/08/22 15:54:43 INFO Client: Submitting application 5 to ResourceManager

16/08/22 15:54:43 INFO YarnClientImpl: Submitted application application_1471848612199_0005

16/08/22 15:54:44 INFO Client: Application report for application_1471848612199_0005 (state: ACCEPTED)

16/08/22 15:54:44 INFO Client: client token: N/Adiagnostics: N/AApplicationMaster host: N/AApplicationMaster RPC port: -1queue: root.hdfsstart time: 1471852483082final status: UNDEFINEDtracking URL: http://hadoop01:8088/proxy/application_1471848612199_0005/user: hdfs

16/08/22 15:54:45 INFO Client: Application report for application_1471848612199_0005 (state: ACCEPTED)

16/08/22 15:54:46 INFO Client: Application report for application_1471848612199_0005 (state: ACCEPTED)

16/08/22 15:54:47 INFO Client: Application report for application_1471848612199_0005 (state: ACCEPTED)

16/08/22 15:54:48 INFO Client: Application report for application_1471848612199_0005 (state: ACCEPTED)

16/08/22 15:54:49 INFO Client: Application report for application_1471848612199_0005 (state: ACCEPTED)

16/08/22 15:54:49 INFO YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as AkkaRpcEndpointRef(Actor[akka.tcp://sparkYarnAM@192.168.56.206:46225/user/YarnAM#289706976])

16/08/22 15:54:49 INFO YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> hadoop01, PROXY_URI_BASES -> http://hadoop01:8088/proxy/application_1471848612199_0005), /proxy/application_1471848612199_0005

16/08/22 15:54:49 INFO JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

16/08/22 15:54:50 INFO Client: Application report for application_1471848612199_0005 (state: RUNNING)

16/08/22 15:54:50 INFO Client: client token: N/Adiagnostics: N/AApplicationMaster host: 192.168.56.206ApplicationMaster RPC port: 0queue: root.hdfsstart time: 1471852483082final status: UNDEFINEDtracking URL: http://hadoop01:8088/proxy/application_1471848612199_0005/user: hdfs

16/08/22 15:54:50 INFO YarnClientSchedulerBackend: Application application_1471848612199_0005 has started running.

16/08/22 15:54:50 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 38391.

16/08/22 15:54:50 INFO NettyBlockTransferService: Server created on 38391

16/08/22 15:54:50 INFO BlockManager: external shuffle service port = 7337

16/08/22 15:54:50 INFO BlockManagerMaster: Trying to register BlockManager

16/08/22 15:54:50 INFO BlockManagerMasterEndpoint: Registering block manager 192.168.56.201:38391 with 534.5 MB RAM, BlockManagerId(driver, 192.168.56.201, 38391)

16/08/22 15:54:50 INFO BlockManagerMaster: Registered BlockManager

16/08/22 15:54:51 INFO EventLoggingListener: Logging events to hdfs://hadoop01:8020/user/spark/applicationHistory/application_1471848612199_0005

16/08/22 15:54:51 INFO YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

16/08/22 15:54:52 INFO MemoryStore: ensureFreeSpace(195280) called with curMem=0, maxMem=560497950

16/08/22 15:54:52 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 190.7 KB, free 534.3 MB)

16/08/22 15:54:52 INFO MemoryStore: ensureFreeSpace(22784) called with curMem=195280, maxMem=560497950

16/08/22 15:54:52 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 22.3 KB, free 534.3 MB)

16/08/22 15:54:52 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.56.201:38391 (size: 22.3 KB, free: 534.5 MB)

16/08/22 15:54:52 INFO SparkContext: Created broadcast 0 from textFile at WC.scala:17

16/08/22 15:54:52 INFO FileInputFormat: Total input paths to process : 1

16/08/22 15:54:52 INFO SparkContext: Starting job: collect at WC.scala:19

16/08/22 15:54:52 INFO DAGScheduler: Registering RDD 3 (map at WC.scala:19)

16/08/22 15:54:52 INFO DAGScheduler: Got job 0 (collect at WC.scala:19) with 2 output partitions

16/08/22 15:54:52 INFO DAGScheduler: Final stage: ResultStage 1(collect at WC.scala:19)

16/08/22 15:54:52 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)

16/08/22 15:54:52 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)

16/08/22 15:54:52 INFO DAGScheduler: Submitting ShuffleMapStage 0 (MapPartitionsRDD[3] at map at WC.scala:19), which has no missing parents

16/08/22 15:54:52 INFO MemoryStore: ensureFreeSpace(4024) called with curMem=218064, maxMem=560497950

16/08/22 15:54:52 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 3.9 KB, free 534.3 MB)

16/08/22 15:54:52 INFO MemoryStore: ensureFreeSpace(2281) called with curMem=222088, maxMem=560497950

16/08/22 15:54:52 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.2 KB, free 534.3 MB)

16/08/22 15:54:52 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.56.201:38391 (size: 2.2 KB, free: 534.5 MB)

16/08/22 15:54:52 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:861

16/08/22 15:54:52 INFO DAGScheduler: Submitting 2 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[3] at map at WC.scala:19)

16/08/22 15:54:52 INFO YarnScheduler: Adding task set 0.0 with 2 tasks

16/08/22 15:54:53 INFO ExecutorAllocationManager: Requesting 1 new executor because tasks are backlogged (new desired total will be 1)

16/08/22 15:54:54 INFO ExecutorAllocationManager: Requesting 1 new executor because tasks are backlogged (new desired total will be 2)

16/08/22 15:54:59 INFO YarnClientSchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@hadoop05:59707/user/Executor#729574503]) with ID 1

16/08/22 15:54:59 INFO ExecutorAllocationManager: New executor 1 has registered (new total is 1)

16/08/22 15:54:59 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, hadoop05, partition 0,NODE_LOCAL, 2186 bytes)

16/08/22 15:54:59 INFO BlockManagerMasterEndpoint: Registering block manager hadoop05:53273 with 534.5 MB RAM, BlockManagerId(1, hadoop05, 53273)

16/08/22 15:55:00 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on hadoop05:53273 (size: 2.2 KB, free: 534.5 MB)

16/08/22 15:55:01 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop05:53273 (size: 22.3 KB, free: 534.5 MB)

16/08/22 15:55:03 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, hadoop05, partition 1,NODE_LOCAL, 2186 bytes)

16/08/22 15:55:03 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 3733 ms on hadoop05 (1/2)

16/08/22 15:55:03 INFO DAGScheduler: ShuffleMapStage 0 (map at WC.scala:19) finished in 10.621 s

16/08/22 15:55:03 INFO DAGScheduler: looking for newly runnable stages

16/08/22 15:55:03 INFO DAGScheduler: running: Set()

16/08/22 15:55:03 INFO DAGScheduler: waiting: Set(ResultStage 1)

16/08/22 15:55:03 INFO DAGScheduler: failed: Set()

16/08/22 15:55:03 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 150 ms on hadoop05 (2/2)

16/08/22 15:55:03 INFO YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

16/08/22 15:55:03 INFO DAGScheduler: Missing parents for ResultStage 1: List()

16/08/22 15:55:03 INFO DAGScheduler: Submitting ResultStage 1 (ShuffledRDD[4] at reduceByKey at WC.scala:19), which is now runnable

16/08/22 15:55:03 INFO MemoryStore: ensureFreeSpace(2288) called with curMem=224369, maxMem=560497950

16/08/22 15:55:03 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 2.2 KB, free 534.3 MB)

16/08/22 15:55:03 INFO MemoryStore: ensureFreeSpace(1363) called with curMem=226657, maxMem=560497950

16/08/22 15:55:03 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 1363.0 B, free 534.3 MB)

16/08/22 15:55:03 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.56.201:38391 (size: 1363.0 B, free: 534.5 MB)

16/08/22 15:55:03 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:861

16/08/22 15:55:03 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 1 (ShuffledRDD[4] at reduceByKey at WC.scala:19)

16/08/22 15:55:03 INFO YarnScheduler: Adding task set 1.0 with 2 tasks

16/08/22 15:55:03 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 2, hadoop05, partition 0,PROCESS_LOCAL, 1950 bytes)

16/08/22 15:55:03 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on hadoop05:53273 (size: 1363.0 B, free: 534.5 MB)

16/08/22 15:55:03 INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 0 to hadoop05:59707

16/08/22 15:55:03 INFO MapOutputTrackerMaster: Size of output statuses for shuffle 0 is 148 bytes

16/08/22 15:55:03 INFO TaskSetManager: Starting task 1.0 in stage 1.0 (TID 3, hadoop05, partition 1,PROCESS_LOCAL, 1950 bytes)

16/08/22 15:55:03 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 155 ms on hadoop05 (1/2)

16/08/22 15:55:03 INFO DAGScheduler: ResultStage 1 (collect at WC.scala:19) finished in 0.193 s

16/08/22 15:55:03 INFO TaskSetManager: Finished task 1.0 in stage 1.0 (TID 3) in 53 ms on hadoop05 (2/2)

16/08/22 15:55:03 INFO YarnScheduler: Removed TaskSet 1.0, whose tasks have all completed, from pool

16/08/22 15:55:03 INFO DAGScheduler: Job 0 finished: collect at WC.scala:19, took 11.041942 s

(张五,1)

(老王,2)

(张三,2)

(张四,1)

(王二,1)

(李四,3)

(李三,2)

16/08/22 15:55:03 INFO SparkUI: Stopped Spark web UI at http://192.168.56.201:4040

16/08/22 15:55:03 INFO DAGScheduler: Stopping DAGScheduler

16/08/22 15:55:03 INFO YarnClientSchedulerBackend: Interrupting monitor thread

16/08/22 15:55:03 INFO YarnClientSchedulerBackend: Shutting down all executors

16/08/22 15:55:03 INFO YarnClientSchedulerBackend: Asking each executor to shut down

16/08/22 15:55:03 INFO YarnClientSchedulerBackend: Stopped

16/08/22 15:55:03 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

16/08/22 15:55:03 INFO MemoryStore: MemoryStore cleared

16/08/22 15:55:03 INFO BlockManager: BlockManager stopped

16/08/22 15:55:03 INFO BlockManagerMaster: BlockManagerMaster stopped

16/08/22 15:55:03 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

16/08/22 15:55:03 INFO RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/08/22 15:55:03 INFO SparkContext: Successfully stopped SparkContext

16/08/22 15:55:03 INFO RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/08/22 15:55:03 INFO ShutdownHookManager: Shutdown hook called

16/08/22 15:55:03 INFO ShutdownHookManager: Deleting directory /tmp/spark-bbf694e7-32e2-40b6-88a3-4d97a1d1aab9执行成功。

这篇关于spark从入门到放弃 之 分布式运行jar包的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!