This article explains how to evaluate a YOLOv8 model with the COCO metrics and how to fix AssertionError: Results do not correspond to current coco set.

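YOLO's built-in evaluation metrics and the COCO metrics computed by pycocotools are not calculated in exactly the same way. In practice it is normal for the YOLO mAP to come out 2 to 3 points higher than the COCO mAP.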

Let's walk through the process step by step.

YOLO settings

When evaluating with model.val(), set save_json=True so that the predictions are saved to a JSON file:

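```python
from ultralytics import YOLO

model = YOLO("weight/best.pt")
# save_json=True writes the predictions in COCO results format (predictions.json)
model.val(data="data.yaml", imgsz=640, save_json=True)
```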

By default the results are saved to runs/detect/val/predictions.json (later runs go to val2, val3, and so on).
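Before converting anything, it can help to look at what predictions.json actually contains. The sketch below uses hypothetical helpers (summarize_predictions and load_predictions, not part of Ultralytics) that count predictions and images, list the Python types used for image_id, and report the category_id range. String ids, or a range that starts at 0, point to the two mismatches this article deals with.

```python
import json
from collections import Counter


def load_predictions(pred_json_path):
    """Load the prediction list written by model.val(save_json=True)."""
    with open(pred_json_path, "r") as f:
        return json.load(f)


def summarize_predictions(preds):
    """Summarize a list of COCO-style detection dicts.

    Hypothetical helper: reports the image_id types and the category_id range,
    the two fields that most often break COCO evaluation.
    """
    id_types = Counter(type(p["image_id"]).__name__ for p in preds)
    cat_ids = [p["category_id"] for p in preds]
    return {
        "num_predictions": len(preds),
        "num_images": len({p["image_id"] for p in preds}),
        "image_id_types": dict(id_types),
        "category_id_range": (min(cat_ids), max(cat_ids)) if cat_ids else None,
    }
```

For example: print(summarize_predictions(load_predictions("runs/detect/val/predictions.json"))).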

ID conversion

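In the COCO format, image_id is an integer (in a dataset converted to COCO it is usually a sequence from 0 to n), whereas YOLO writes the image file name without its extension as image_id, which is a string unless the name is purely numeric. The images list in the annotation file is used to map each file name to its id, and the result is written to a new file: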

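```python
import json
import os


def cover_pred_json_id(anno_json_path, pred_json_path):
    """Replace YOLO's file-name image_id with the integer image id from the COCO annotation file."""
    with open(anno_json_path, "r") as f:
        ann_json = json.load(f)
    with open(pred_json_path, "r") as f:
        pred_json = json.load(f)

    # Map file-name stem (no directory, no extension) -> COCO image id
    name_to_id = {os.path.splitext(os.path.basename(img["file_name"]))[0]: img["id"]
                  for img in ann_json["images"]}

    for pred_item in pred_json:
        img_id = pred_item["image_id"]
        if img_id in name_to_id:
            pred_item["image_id"] = name_to_id[img_id]
        else:
            print(f"No matching image in the annotation file: {img_id}")

    out_json_path = os.path.join(os.path.dirname(pred_json_path), "newpred.json")
    with open(out_json_path, "w") as file:
        json.dump(pred_json, file, indent=4)
    return out_json_path
```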
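One caveat with matching by file name: recent Ultralytics versions write the file-name stem as image_id but convert it to an int when the stem is purely numeric (for example, 000000397133.jpg becomes 397133), so a plain string comparison will miss those images. A minimal sketch of a mapping that registers both forms, using hypothetical helpers build_name_to_id and convert_image_ids and assuming file-name stems are unique in the annotation file:

```python
import os


def build_name_to_id(images):
    """Map every key YOLO might write as image_id to the COCO image id.

    Hypothetical helper. `images` is the "images" list of a COCO annotation file.
    Both the string stem and, for purely numeric stems, its int value are registered.
    """
    name_to_id = {}
    for img in images:
        stem = os.path.splitext(os.path.basename(img["file_name"]))[0]
        name_to_id[stem] = img["id"]
        if stem.isnumeric():
            name_to_id[int(stem)] = img["id"]  # e.g. "000000397133" -> 397133
    return name_to_id


def convert_image_ids(preds, name_to_id):
    """Rewrite image_id in place and return the set of ids that could not be matched."""
    unmatched = set()
    for p in preds:
        key = p["image_id"]
        if key in name_to_id:
            p["image_id"] = name_to_id[key]
        else:
            unmatched.add(key)
    return unmatched
```

They would be used as convert_image_ids(pred_json, build_name_to_id(ann_json["images"])), printing the returned set when it is not empty.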

Evaluation

Full code

```python
import argparse
import json
import os

from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval


def parse_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument('--anno_json', type=str, default='datasets/annotations/instances_val2017.json',
                        help='COCO-format annotation (ground truth) JSON path')
    parser.add_argument('--pred_json', type=str, default='utils/json_files/newcocopred.json',
                        help='prediction JSON path (predictions.json written by YOLO)')
    return parser.parse_known_args()[0]


def cover_pred_json_id(anno_json_path, pred_json_path):
    """Replace YOLO's file-name image_id with the integer image id from the COCO annotation file."""
    with open(anno_json_path, "r") as f:
        ann_json = json.load(f)
    with open(pred_json_path, "r") as f:
        pred_json = json.load(f)

    # Map file-name stem (no directory, no extension) -> COCO image id
    name_to_id = {os.path.splitext(os.path.basename(img["file_name"]))[0]: img["id"]
                  for img in ann_json["images"]}

    for pred_item in pred_json:
        img_id = pred_item["image_id"]
        if img_id in name_to_id:
            pred_item["image_id"] = name_to_id[img_id]
        else:
            print(f"No matching image in the annotation file: {img_id}")

    out_json_path = os.path.join(os.path.dirname(pred_json_path), "newpred.json")
    with open(out_json_path, "w") as file:
        json.dump(pred_json, file, indent=4)
    return out_json_path


if __name__ == '__main__':
    opt = parse_opt()
    anno_json = opt.anno_json
    pred_json = cover_pred_json_id(anno_json, opt.pred_json)  # convert YOLO image ids to COCO ids
    anno = COCO(anno_json)  # init annotations api
    print(pred_json)
    pred = anno.loadRes(pred_json)  # init predictions api
    coco_eval = COCOeval(anno, pred, 'bbox')
    coco_eval.evaluate()
    coco_eval.accumulate()
    coco_eval.summarize()
```

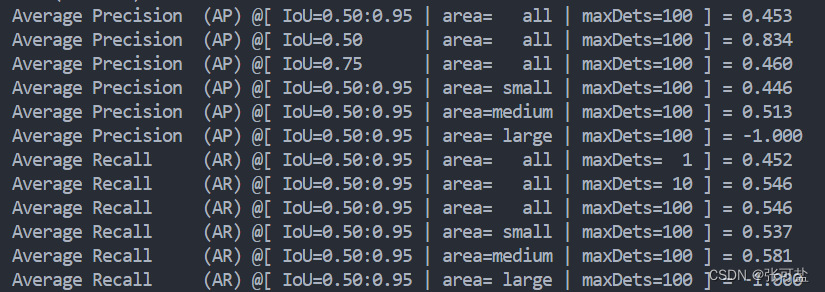

如果不出意外的话,会正确打印结果:

Errors

- If all the metrics come out as 0, the category_id values do not match: COCO category ids start at 1, while YOLO class indices start at 0.

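Edit ultralytics/models/yolo/detect/val.py and force self.is_coco = True in init_metrics, so that class indices are mapped to the standard COCO category ids through converter.coco80_to_coco91_class() instead of being written as 0-based indices: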

```python
def init_metrics(self, model):
    """Initialize evaluation metrics for YOLO."""
    val = self.data.get(self.args.split, "")  # validation path
    self.is_coco = True  # isinstance(val, str) and "coco" in val and val.endswith(f"{os.sep}val2017.txt")  # is COCO
    self.class_map = converter.coco80_to_coco91_class() if self.is_coco else list(range(1000))
    self.args.save_json |= self.is_coco  # run on final val if training COCO
    self.names = model.names
    self.nc = len(model.names)
    self.metrics.names = self.names
    self.metrics.plot = self.args.plots
    self.confusion_matrix = ConfusionMatrix(nc=self.nc, conf=self.args.conf)
    self.seen = 0
    self.jdict = []
    self.stats = dict(tp=[], conf=[], pred_cls=[], target_cls=[])
```
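converter.coco80_to_coco91_class() maps the 80 class indices to the original COCO category ids (1 to 90, with gaps), so forcing is_coco only gives correct results when the annotation file uses those standard COCO ids. For a custom dataset whose categories are numbered 1..n in the same order as the YOLO classes (an assumption worth checking), an alternative that leaves the library untouched is to shift category_id by one in the prediction list. A minimal sketch, with hypothetical helpers shift_category_ids and categories_are_consecutive:

```python
def categories_are_consecutive(ann_json):
    """True if the annotation's category ids are exactly 1..n (hypothetical helper)."""
    ids = sorted(c["id"] for c in ann_json["categories"])
    return ids == list(range(1, len(ids) + 1))


def shift_category_ids(preds, offset=1):
    """Return a copy of the predictions with category_id shifted by `offset`.

    Assumes YOLO class index i corresponds to COCO category id i + offset.
    The input list is not modified.
    """
    return [{**p, "category_id": p["category_id"] + offset} for p in preds]
```

The shift only makes sense when categories_are_consecutive returns True and the class order in data.yaml matches the order of the categories in the annotation file.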

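- If you get AssertionError: Results do not correspond to current coco set, the image_id values in the prediction file do not match those in the annotation file. pycocotools raises this error when the predictions contain an image_id that does not exist in the annotation file, for example a file name that was not converted (cover_pred_json_id prints these) or an image that is in the YOLO validation set but missing from the annotation file. Predictions that cover only some of the annotated images do not trigger it.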
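The assertion comes from COCO.loadRes in pycocotools, which requires every image_id in the results to be one of the annotation file's image ids. A minimal sketch of the same check on the already-loaded JSON data, to run before calling loadRes (find_unknown_image_ids and images_without_predictions are hypothetical helper names):

```python
def find_unknown_image_ids(ann_json, preds):
    """Return prediction image_ids that are not in the annotation file.

    Mirrors the condition behind the loadRes assertion: the set of result
    image ids must be a subset of the annotation's image ids.
    """
    known = {img["id"] for img in ann_json["images"]}
    return {p["image_id"] for p in preds} - known


def images_without_predictions(ann_json, preds):
    """Annotated images with no prediction (allowed by loadRes, but worth knowing)."""
    predicted = {p["image_id"] for p in preds}
    return {img["id"] for img in ann_json["images"]} - predicted
```

A non-empty result from find_unknown_image_ids lists exactly the ids that trigger the assertion; images_without_predictions is only informational.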
