allocate Topic

The correct fix for torch/lib/libgomp-d22c30c5.so.1: cannot allocate memory in static TLS block

The correct fix for torch/lib/libgomp-4dbbc2f2.so.1.0.0: cannot allocate memory in static TLS block. A single command solves it: export LD_PRELOAD=/home/ma-user/anaconda3/envs/MindSpore/lib/python3.9/site-packages/torch/lib/../../to
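The libgomp file name carries a build hash (d22c30c5, 4dbbc2f2, ...), so it differs between installs. Below is a minimal sketch that finds the copy inside a given torch/lib directory and prints the matching `export` line. It assumes the bundled library matches the glob `libgomp*.so*`. The function name is hypothetical and is not part of torch or of the original fix. The usual explanation for the error is that libgomp needs static TLS, and that space may already be used up when the library is loaded later through dlopen. Preloading maps it at process start instead.

```python
import glob
import os


def libgomp_preload_line(torch_lib_dir: str) -> str:
    """Return an `export LD_PRELOAD=...` line for the libgomp bundled in torch_lib_dir.

    The hashed file name (e.g. libgomp-d22c30c5.so.1) varies between builds,
    so it is located with a glob instead of being hard-coded.
    """
    matches = sorted(glob.glob(os.path.join(torch_lib_dir, "libgomp*.so*")))
    if not matches:
        raise FileNotFoundError(f"no libgomp*.so* found in {torch_lib_dir}")
    # The first match after sorting is used; a torch/lib normally holds one copy.
    return "export LD_PRELOAD=" + os.path.abspath(matches[0])
```

Inside the affected environment, you could call it as `libgomp_preload_line(os.path.join(os.path.dirname(torch.__file__), "lib"))`. Then run the printed line in the shell, or add it to the shell profile, before starting Python. The dynamic loader only reads `LD_PRELOAD` when a process starts, so setting `os.environ` from inside the already running interpreter does not help.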

RuntimeError: CUDA out of memory. Tried to allocate 1.77 GiB? How to fix it

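This article is part of the "Bug调优" (Bug Tuning) column, which records the causes of bugs met in real projects along with solutions that actually work. Problem description: while running Mask R-CNN with mmdetection, train.py failed during model training with RuntimeError: CUDA out of memory. T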

Android Emulator could not allocate o: fix for the built-in Android virtual device failing to start

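The Android emulator cannot allocate 1.0 GB of memory for the current AVD configuration; consider adjusting the RAM size of your AVD in the AVD Manager. Error details: QEMU 'pc.ram'. The original message reads: Android Emulator could not allocate 1.0GB of memory for the current AVD configuration. Consider adjusting the RAM size of your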

Error during model training: Failed to allocate 12192768 bytes in function 'cv::OutOfMemoryError'

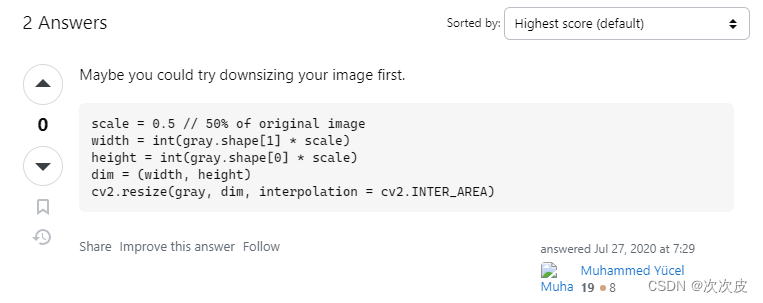

Contents: Error message / Solutions found online / Improvement idea / Improvement method. Error message: D:\Programs\miniconda3\envs\python311\python.exe D:\python\project\VisDrone2019-DET-MOT\train.py Ultralytics YOLOv8.1.9 🚀 Python-3.11.7 torch-2.2.0 CUD

SEVERE: Allocate exception for servlet aa

Starting Tomcat raises the following exception: SEVERE: Allocate exception for servlet aa java.lang.ClassNotFoundException: cookie.aa at org.apache.catalina.loader.WebappClassLoader.loadClass(WebappClassLoader.java:1718) at org.apache.cat

Maven packaging fails with: Cannot allocate memory

Running a Maven packaging job from Jenkins fails with Cannot allocate memory. Solution: set the system variable MAVEN_OPTS=-Xmx256m -XX:MaxPermSize=512m, or create the file .mvn/jvm.config in the project directory containing -Xmx256m -Xms256m -XX:MaxPermSize=512m -Djava.awt.headless=tr

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate XXX

Problem description: torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 2.39 GiB. GPU 0 has a total capacty of 8.00 GiB of which 0 bytes is free. Of the allocated memory 9.50 GiB is allocated by PyTor

NoResourceAvailableException: Could not allocate all requires slots within timeout of 300000 ms...

Full error message: org.apache.flink.runtime.jobmanager.scheduler.NoResourceAvailableException: Could not allocate all requires slots within timeout of 300000 ms. Slots required: 3, slots allocated: 1, previous

Allowed memory size of 149946368 bytes exhausted (tried to allocate 32640 bytes)

Problem: PHP Fatal error: Allowed memory size of 149946368 bytes exhausted (tried to allocate 32640 bytes). Cause: PHP limits how much memory the script is allowed to request. Solution: change memory_limit in php.ini and restart php-fpm, or add ini_set('memory_limit', '-1'); to the script.
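The byte count in the error is a php.ini size written in shorthand. In that shorthand, K, M and G stand for 1024, 1024² and 1024³. Below is a simplified sketch that converts such values to bytes so they can be compared with the error message. The function name is hypothetical, and it does not reproduce PHP's more lenient handling of malformed values.

```python
# Multipliers PHP applies to shorthand byte values in php.ini (K, M, G; case-insensitive).
_SHORTHAND = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def php_shorthand_to_bytes(value: str) -> int:
    """Convert a php.ini size such as '128M' to bytes.

    '-1' (memory_limit's "no limit") has no suffix and is returned as -1.
    """
    text = value.strip()
    suffix = text[-1].lower()
    if suffix in _SHORTHAND:
        return int(text[:-1]) * _SHORTHAND[suffix]
    return int(text)
```

For example, `php_shorthand_to_bytes("143M")` gives 149946368. The limit in this error is therefore `memory_limit = 143M`. The 32640-byte request (under 32 KiB) is simply the allocation that crossed it, not an unusually large one.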

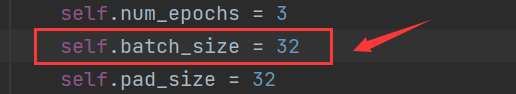

Python error: RuntimeError: CUDA out of memory. Tried to allocate 48.00 MiB

Python error: RuntimeError: CUDA out of memory. Tried to allocate 48.00 MiB (GPU 0; 6.00 GiB total capacity; 4.44 GiB already allocated; 0 bytes free; 4.49 GiB reserved in total by PyTorch). Reducing batch_size fixes it.
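Reducing batch_size by hand can be automated by halving it after each out-of-memory error. Below is a minimal, framework-agnostic sketch of that idea. `step` is a hypothetical callable that runs one iteration at a given batch size. With PyTorch you would pass `oom_errors=(torch.cuda.OutOfMemoryError,)`, the exception named in the entry above, and optionally `on_oom=torch.cuda.empty_cache`. Both are assumptions about how you wire it in, not part of the original fix.

```python
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def run_with_backoff(
    step: Callable[[int], T],
    batch_size: int,
    min_batch_size: int = 1,
    oom_errors: Tuple[Type[BaseException], ...] = (MemoryError,),
    on_oom: Optional[Callable[[], None]] = None,
) -> Tuple[int, T]:
    """Call step(batch_size), halving batch_size after each out-of-memory error.

    Returns (batch_size_that_worked, step_result). Re-raises the error once
    halving would drop below min_batch_size.
    """
    while True:
        try:
            return batch_size, step(batch_size)
        except oom_errors:
            if batch_size // 2 < min_batch_size:
                raise
            if on_oom is not None:
                on_oom()  # e.g. release cached GPU blocks before retrying
            batch_size //= 2
```

Halving reaches a workable size in at most log2(batch_size) retries. Tensors created by the failed attempt should go out of scope before retrying, otherwise they keep holding memory.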

fatal error failed to allocate memory. out of virtual memory.

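fatal error failed to allocate memory. out of virtual memory. This morning the ETL monitor dashboard was a sea of red: every workflow extraction process had terminated abnormally, something I had never seen before, with fatal error failed to allocate memory. out of virtual memory. I asked the people responsible for the server,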

Allocate aligned memory

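InterlockedCompareExchange128 requires the destination operand's address to be 16-byte aligned; otherwise an access violation occurs. The destination operand must therefore be allocated with a special allocation function. On Windows, use this one: http://msdn.microsoft.com/en-us/library/8z34s9c6(vs.71).aspx On Linux, use this one: http://linux.die.net/man/3/posi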
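In C you would use the platform allocators linked above. The idea behind an aligned allocation can be shown without them: over-allocate by `alignment - 1` bytes, then start at the first address that is a multiple of the alignment. Below is a sketch of that over-allocate-and-offset technique in Python using `ctypes`. It is an illustration only, not a replacement for the linked functions, and the function name is hypothetical.

```python
import ctypes


def aligned_buffer(size: int, alignment: int = 16):
    """Return (view, address): a writable memoryview of `size` bytes whose
    start address is a multiple of `alignment` (a power of two).

    Over-allocates by alignment - 1 bytes and skips ahead to the first
    aligned address inside the block.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    if alignment <= 0 or alignment & (alignment - 1):
        raise ValueError("alignment must be a positive power of two")
    raw = bytearray(size + alignment - 1)
    base = ctypes.addressof((ctypes.c_char * len(raw)).from_buffer(raw))
    offset = (-base) % alignment  # bytes to skip to reach the next aligned address
    # The memoryview keeps `raw` alive and prevents it from being resized (moved).
    view = memoryview(raw)[offset:offset + size]
    return view, base + offset
```

For 16-byte alignment, at most 15 extra bytes are wasted. This is the same trade-off an aligned allocator makes internally.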

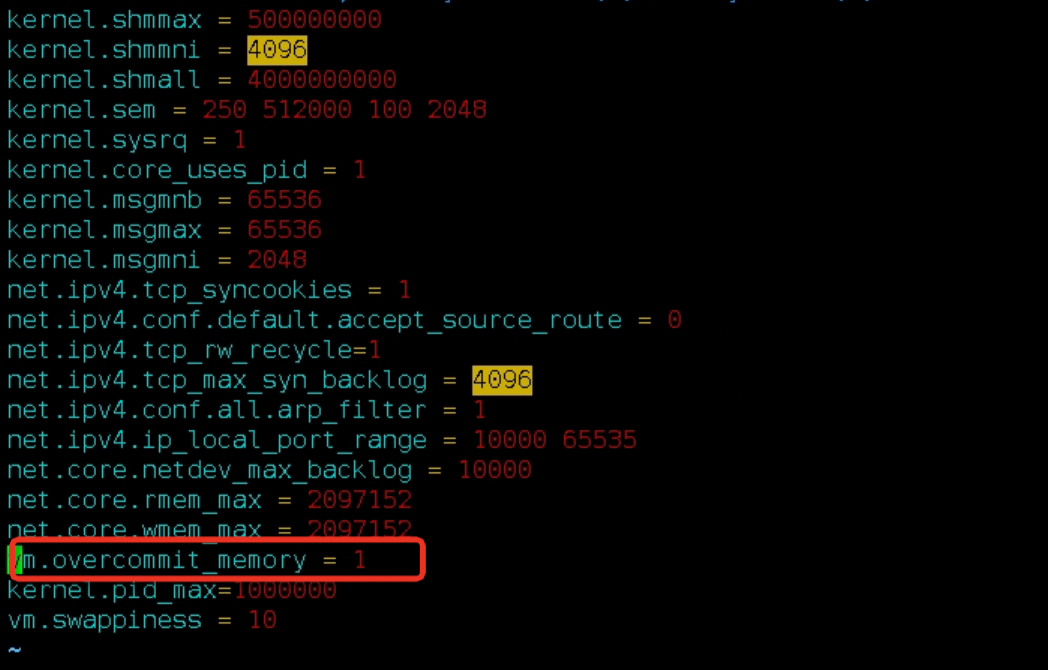

[Linux | Java application error] Cannot allocate memory

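Starting a Java application fails with Cannot allocate memory and produces an hs_err_pid.log file. The file contains: # There is insufficient memory for the Java Runtime Environment to continue. # Native memory allocation (mmap) failed to map 4294967296 bytes for committing reserved memory. [Troubleshooting] 1. Try the command suggested in the file, ulimit -c unlimited. Running ulimit -a shows the resource limits in effect for the current user.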
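The same limits that `ulimit -a` prints can be read from Python's standard `resource` module, which is Unix-only. Below is a minimal sketch that reports a few of them and checks whether the address-space limit alone would block a request such as the 4294967296-byte (4 GiB) mmap in the log. The helper names are hypothetical. The check covers only the rlimit; physical memory, swap and the kernel's overcommit policy can still refuse the mapping.

```python
import resource

# ulimit flag -> resource constant name; getattr skips names a platform lacks.
_LIMITS = {
    "-c (core file size)": "RLIMIT_CORE",
    "-v (virtual memory / address space)": "RLIMIT_AS",
    "-u (max user processes)": "RLIMIT_NPROC",
}


def current_limits() -> dict:
    """Return {label: (soft, hard)} for this process; None means unlimited.

    RLIMIT_AS is reported in bytes, whereas `ulimit -v` prints KiB.
    """
    out = {}
    for label, name in _LIMITS.items():
        const = getattr(resource, name, None)
        if const is None:
            continue
        soft, hard = resource.getrlimit(const)
        out[label] = tuple(None if v == resource.RLIM_INFINITY else v for v in (soft, hard))
    return out


def address_space_allows(nbytes: int) -> bool:
    """True unless the soft RLIMIT_AS is finite and smaller than nbytes."""
    soft, _ = resource.getrlimit(resource.RLIMIT_AS)
    return soft == resource.RLIM_INFINITY or nbytes <= soft
```

For example, `address_space_allows(4294967296)` returning False would point at a `ulimit -v` setting rather than at the machine running out of RAM.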

swShareMemory_mmap_create:mmap(248000096) failed / Error: Cannot allocate memory[12]

Error reported when enabling swoole; it is clearly a memory problem: [2019-04-09 09:04:32 @220.0] WARNING swShareMemory_mmap_create: mmap(260046944) failed. Error: Cannot allocate memory[12] [2019-04-09 09:04:32 @220.0] ERROR calloc[1

Kali Linux ERROR 12 Cannot allocate memory

ERROR : 12, Cannot allocate memory [/build/ettercap-G9V59y/ettercap-0.8.2/src/ec_threads.c:ec_thread_new_detached:210] not enough resources to create a new thread in this process: Operation no

Java ByteBuffer's allocate and allocateDirect methods: differences and how to choose between them

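Contents: Background / Differences (1. allocate, 2. allocateDirect) / Summary. Background: our company was developing a commercial payment application. Implementing the protocol required byte arrays to hold the protocol message data. After surveying the JDK class libraries, we chose ByteBuffer from the Java standard library. In Java, ByteBuffer is a class in the java.nio package for handling byte data. ByteBuffer provides two ways to allocate mem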

Can’t save in background: fork: Cannot allocate memory

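In our ELK logging system, Redis serves as the log cache. Today I noticed that the Redis data was not changing, and Logstash, which reads from Redis, reported: Redis "MISCONF Redis is configured to save RDB snapshots, but is currently not able to persist on disk". The Redis log showed the following error: Can't save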

Can't allocate space for object 'syslogs' in database:Sybase

While using Sybase, I ran into the following error: Can't allocate space for object 'syslogs' in database 'master' because 'logsegment' segment is full/has no free extents. If you ran out of space in syslogs, dump the transaction lo

Spark (Spark SQL) error: WARN TaskMemoryManager: Failed to allocate a page (bytes), try again.

Background: recently, while running Spark SQL, the job repeatedly logged this error partway through: WARN TaskMemoryManager: Failed to allocate a page (104876 bytes), try again. WARN TaskMemoryManager: Failed to allocate a page (104876 bytes), try again. … Cause: Exec

RuntimeError: CUDA out of memory. Tried to allocate 5. If reserved memory is >> allocated memory

The error message is as follows: RuntimeError: CUDA out of memory. Tried to allocate 50.00 MiB (GPU 0; 5.80 GiB total capacity; 4.39 GiB already allocated; 35.94 MiB free; 4.46 GiB reserved in total by PyTorch) If reserved
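Whether "reserved memory is >> allocated memory" can be read straight off the message: reserved minus allocated is memory PyTorch has cached but is not using. Below is a small sketch that pulls those figures out of the message. It assumes the older format quoted here and in the 48.00 MiB entry. The newer wording in the 2.39 GiB entry ("GPU 0 has a total capacty of ...") is not handled. The function names are hypothetical.

```python
import re

# Conversion factors to GiB for the units PyTorch prints in this message format.
_TO_GIB = {"bytes": 1 / 1024 ** 3, "KiB": 1 / 1024 ** 2, "MiB": 1 / 1024, "GiB": 1.0}
_NUM = r"([\d.]+) (bytes|KiB|MiB|GiB)"
_PATTERNS = {
    "requested": re.compile(r"Tried to allocate " + _NUM),
    "total": re.compile(_NUM + r" total capacity"),
    "allocated": re.compile(_NUM + r" already allocated"),
    "free": re.compile(_NUM + r" free"),
    "reserved": re.compile(_NUM + r" reserved in total by PyTorch"),
}


def parse_cuda_oom(message: str) -> dict:
    """Extract the figures (converted to GiB) from an older-style CUDA OOM message."""
    found = {}
    for key, pattern in _PATTERNS.items():
        match = pattern.search(message)
        if match:
            found[key] = float(match.group(1)) * _TO_GIB[match.group(2)]
    return found


def fragmentation_gap(message: str) -> float:
    """Reserved minus allocated memory in GiB: cached by PyTorch but not in use."""
    figures = parse_cuda_oom(message)
    return figures["reserved"] - figures["allocated"]
```

For the message above, the gap is 4.46 − 4.39 ≈ 0.07 GiB. That suggests fragmentation is not the main problem there, and a smaller batch or model is the more direct fix. When the gap is large, PyTorch's full message points to the `max_split_size_mb` option, which is set through the `PYTORCH_CUDA_ALLOC_CONF` environment variable.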

Oracle database wait event: enq: TX - allocate ITL entry

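https://blog.csdn.net/coco3600/article/details/100232105?utm_source=app Fault analysis and resolution / 3.1 Fault environment / 2 Symptoms and error messages. I have had a lot on my plate lately, but luckily everything I ran into involved wait events. A colleague sent over an AWR report saying the system was responding very slowly. I took a quick look, so here is a brief analysis: 20 minutes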