Topic: 一层 ("one layer")

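LeetCode Problem Walkthroughs (11): Backtracking [Recursive Backtracking, Iterative Backtracking] [DFS keeps searching in a single direction; backtracking builds on DFS: when the search reaches a termination or pruning condition, it restores state, returns to the previous level, and searches again]

Contents: I. Overview: 1. Differences between depth-first search (DFS) and backtracking; 2. When to use backtracking; 3. Steps of a backtracking algorithm; 4. Types of backtracking problems. II. LeetCode examples: 39. Combination Sum; 40. Combination Sum II; 77. Combinations; 216. Combination Sum III; 46. Permutations; 47. Permutations II; 剑指 Offer 38. Permutations of a String; 剑指 Offer II 079. All Subsets; 90. Subsets II; 剑指 Offer II 085. Generate Match...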
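The "restore state, return to the previous level, search again" pattern described in the title can be sketched on problem 39 (Combination Sum). This is a minimal illustration, not the article's own solution; the function name `combination_sum` and the assumption that candidates are distinct positive integers are mine.

```python
def combination_sum(candidates, target):
    """Return every combination of candidates (reuse allowed) summing to target.

    Assumes distinct positive integers; this is an illustrative sketch.
    """
    candidates = sorted(candidates)
    results = []
    path = []  # the partial combination currently being explored

    def backtrack(start, remaining):
        if remaining == 0:
            # Termination condition: record a copy of the current path.
            results.append(path.copy())
            return
        for i in range(start, len(candidates)):
            value = candidates[i]
            if value > remaining:
                # Pruning condition: candidates are sorted, so later ones fail too.
                break
            path.append(value)                # choose
            backtrack(i, remaining - value)   # search deeper (i, not i + 1: reuse allowed)
            path.pop()                        # restore state before trying the next branch

    backtrack(0, target)
    return results


if __name__ == "__main__":
    print(combination_sum([2, 3, 6, 7], 7))
```

The `path.pop()` line is what separates backtracking from a plain DFS: the shared `path` list is returned to its earlier state before the loop moves on to the next candidate.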

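An algorithm for traversing a binary tree in level order (left to right within each level)

Write an algorithm that traverses a binary tree in level order (left to right within each level). Type definition for the binary linked list: typedef char TElemType; // the binary tree's elements are of type char typedef struct BiTNode { TElemType data; BiTNode *lchild, *rchild; } BiTNode, *BiTree; Relevant definitions of the available queue type Queue: typed...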
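Since the exercise's own Queue definitions are cut off in the excerpt, here is a minimal Python sketch of the same idea, using `collections.deque` as the queue. The class mirrors the field names `data`, `lchild`, and `rchild` from the excerpt; the function name `level_order` is an assumption for illustration.

```python
from collections import deque


class BiTNode:
    """Binary tree node mirroring the data/lchild/rchild fields of the excerpt."""

    def __init__(self, data, lchild=None, rchild=None):
        self.data = data
        self.lchild = lchild
        self.rchild = rchild


def level_order(root):
    """Return node data in level order, left to right within each level."""
    order = []
    if root is None:
        return order
    queue = deque([root])
    while queue:
        node = queue.popleft()   # nodes leave the queue in the order they were discovered
        order.append(node.data)
        if node.lchild is not None:
            queue.append(node.lchild)   # left child is enqueued before the right one
        if node.rchild is not None:
            queue.append(node.rchild)
    return order


if __name__ == "__main__":
    tree = BiTNode("A", BiTNode("B", BiTNode("D")), BiTNode("C", BiTNode("E"), BiTNode("F")))
    print(level_order(tree))
```

A FIFO queue is what makes the order work: every node of one level is dequeued before any node of the next level, because children are always appended behind the nodes already waiting.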

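darknet: code for extracting the features of a given network layer

When we run ./darknet detect fridge.cfg fridge.weights dog.jpg on the command line, the code flow is: detect leads into the test_detector function in detector.c; inside test_detector, the cfg configuration file is first read with parse_network_cfg_custom, and then load_weights...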

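How do you get the CLS embedding from the last hidden layer of a BERT model in PyTorch?

Problem: how do you get the sentence-level CLS embedding from the last layer of a BERT model? The question came up while reading the paper An Interpretability Illusion for BERT: We began by creating embeddings for the 624,712 sentences in our four datasets. To do this, we used the BERT-b...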

117. Populating Next Right Pointers in Each Node II

https://leetcode-cn.com/problems/populating-next-right-pointers-in-each-node-ii/ Level-order traversal, same as problem 116. import Queue class Solution: # @param root, a tree link node # @return nothing def connect(self, root): if not ro...

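On Linux, pwd gets the current path and dirname gets the parent path, without using ../

In real projects we usually use pwd to get the current path. If you need the parent directory but do not want to use ../, try the dirname command. Test shell script: #!/bin/bash # get the current path CURRENT_PATH=$PWD echo "CURRENT_PATH=$CURRENT_PATH" # get the parent path TOP_PATH=$(dirname "$CURRENT_PATH") e...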
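For comparison, the same "parent without ../" idea can be sketched in Python with `pathlib`. This is an analogue of the shell approach, not part of the original script; the helper name `parent_dir` is hypothetical, and `PurePosixPath` is used so the example works purely on the path string, much as dirname does.

```python
from pathlib import Path, PurePosixPath


def parent_dir(path):
    """Return the parent of a POSIX-style path string without touching the filesystem."""
    return str(PurePosixPath(path).parent)


if __name__ == "__main__":
    # Pure string manipulation, similar in spirit to `dirname /home/user/project`.
    print(parent_dir("/home/user/project"))
    # For the real working directory, the counterpart of $(dirname "$PWD"):
    print(Path.cwd().parent)
```

As with dirname, the parent of the root directory is the root directory itself.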

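Generating a spiral number sequence with a single loop

A spiral number arrangement looks like the following. Spiral of 3: 1 2 3 / 8 9 4 / 7 6 5. Spiral of 5: 1 2 3 4 5 / 16 17 18 19 6 / 15 24 25 20 7 / 14 23 22 21 8 / 13 12 11 10 9. Given n, output the corresponding spiral. Having just finished learning conditionals, loops, and functions, I happened to come across this problem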
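One way to fill the spiral with a single loop is to walk the grid cell by cell and turn clockwise whenever the next cell is off the grid or already filled. The sketch below is one possible approach, not necessarily the one the article goes on to use; the function name `spiral` is an assumption.

```python
def spiral(n):
    """Return an n x n clockwise spiral of 1..n*n, built with a single loop."""
    grid = [[0] * n for _ in range(n)]
    # Row and column steps for the four directions in clockwise order:
    # right, down, left, up.
    dr = (0, 1, 0, -1)
    dc = (1, 0, -1, 0)
    r = c = d = 0
    for value in range(1, n * n + 1):
        grid[r][c] = value
        nr, nc = r + dr[d], c + dc[d]
        # Turn clockwise when the next cell is outside the grid or already filled.
        if not (0 <= nr < n and 0 <= nc < n) or grid[nr][nc] != 0:
            d = (d + 1) % 4
            nr, nc = r + dr[d], c + dc[d]
        r, c = nr, nc
    return grid


if __name__ == "__main__":
    for row in spiral(5):
        print(" ".join(f"{v:2d}" for v in row))
```

Using 0 as the "not yet filled" marker works because every written value is at least 1.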

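[Linux] Introduction to UDP: the UDP datagram format, and which layer of the network protocol stack port numbers work at...

The TCP/IP network model divides the network into four layers. Earlier articles used HTTP and HTTPS as representative examples to briefly introduce application-layer protocols. In fact, application-layer protocols such as HTTP and HTTPS are all built on top of transport-layer protocols, and the most representative transport-layer protocols are UDP and TCP. Take HTTP as an example: before communicating over HTTP, a TCP connection must first be established. So the introduction to transport-layer protocols starts with UDP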

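Linking each node of a complete binary tree to its adjacent node on the same level

Problem: for a complete binary tree, add a pNext pointer to every node that points to the adjacent node on the same level; if the current node is already the last node of its level, set its pNext pointer to NULL. Give an implementation and analyze its time and space complexity. Answer: the time complexity is O(n) and the space complexity is O(1). #include "stdafx.h" #include <iostream> #include <fstream> #includ...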
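The original C++ solution is cut off, so the following is only a Python sketch of one common way to reach O(n) time and O(1) extra space: walk each level through the links already built, and stitch the next level together behind a temporary dummy node. The names `Node`, `p_next`, and `connect` are illustrative assumptions, and this may differ from the article's implementation.

```python
class Node:
    """Tree node with an extra p_next link to the neighbour on the same level."""

    def __init__(self, val, left=None, right=None):
        self.val = val
        self.left = left
        self.right = right
        self.p_next = None


def connect(root):
    """Link every node to its right-hand neighbour on the same level; return root."""
    level_head = root
    while level_head is not None:
        dummy = Node(None)   # temporary head for the next level's linked list
        tail = dummy
        node = level_head
        while node is not None:
            # Append this node's children, left first, to the next level's list.
            for child in (node.left, node.right):
                if child is not None:
                    tail.p_next = child
                    tail = child
            node = node.p_next   # move along the current level via existing links
        level_head = dummy.p_next   # descend; the last node's p_next stays None
    return root


if __name__ == "__main__":
    leaves = [Node(4), Node(5), Node(6)]
    root = connect(Node(1, Node(2, leaves[0], leaves[1]), Node(3, leaves[2])))
    print([n.p_next.val if n.p_next else None for n in leaves])
```

No queue is needed because each finished level already behaves like a linked list. Each node is visited once, and only a constant number of extra references are live at any time.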

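Getting data from the innermost level of a two-level nested JSON

JSONObject json = sysconfServiceFeign.getSysconfByNameFeign("xxxx"); // get the outer JSON; here it is read from a configuration item, but it could come from elsewhere or be passed in Map<String, Object> resultMap = new HashMap<>(); if (json != null) { String parameValue = json.g...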

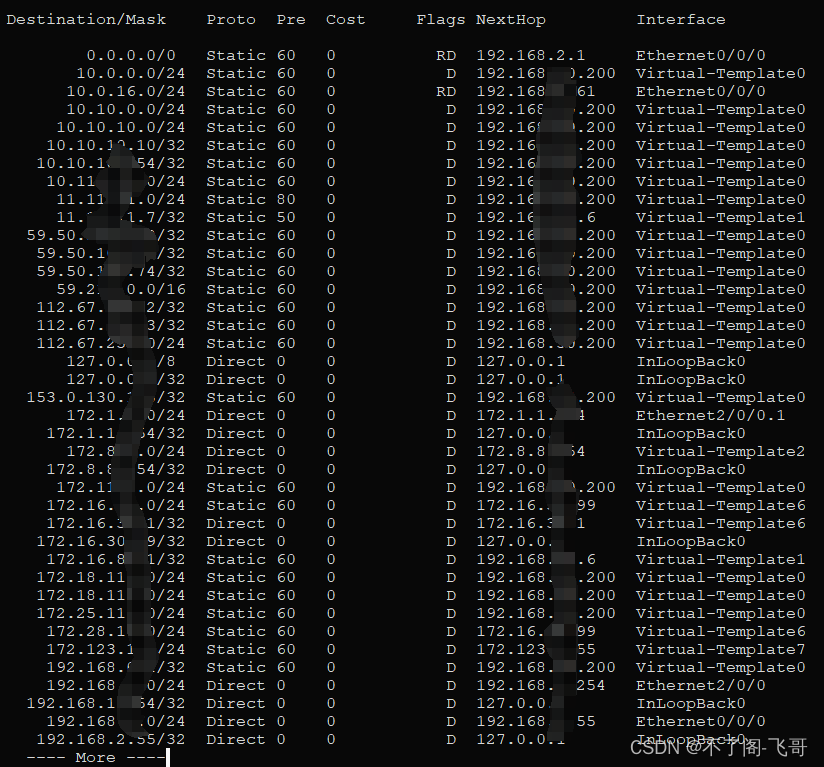

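A plain-language look at what gateways are and what they are for (3): how your packet reaches the next layer

Honestly, I cannot write this chapter well, because it involves some calculations and concepts such as broadcasting, which are not my strength. What follows is a brief sketch for reference. Once each connection on a device has obtained a valid IP address, the operating system and the switch (or hub; generally called layer-2 switching devices) place the device into its own "local network" zone according to the IP address and subnet mask rules. For example, when you have the IP address 192.168.1.1/24, the network system treats all of 192.168.1.1-192...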
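The "local network" decision described above can be sketched with Python's standard `ipaddress` module: the address and mask define a network, and a destination is either inside it (reachable directly) or outside it (sent via the gateway). The helper names `local_network` and `is_on_link` and the sample destinations are assumptions for illustration.

```python
import ipaddress


def local_network(interface):
    """Return the network implied by an address/prefix such as '192.168.1.1/24'."""
    return ipaddress.ip_interface(interface).network


def is_on_link(interface, destination):
    """True if destination lies in the interface's own network (no gateway needed)."""
    return ipaddress.ip_address(destination) in local_network(interface)


if __name__ == "__main__":
    iface = "192.168.1.1/24"
    print(local_network(iface))
    for dest in ("192.168.1.200", "192.168.2.5"):
        route = "deliver directly" if is_on_link(iface, dest) else "send to the gateway"
        print(dest, "->", route)
```

The comparison is just the mask applied to both addresses: if the masked values match, the destination is treated as local.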

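Ant Group interview: in DDD, which layer should external API calls go in?

A note from 尼恩 up front: in the reader community (50+) run by 尼恩, a 40-year-old veteran architect, some members recently landed interviews at top internet companies such as ByteDance, Alibaba, Didi, J&T Express, Youzan, SHEIN, Baidu, NetEase, and Meituan, and ran into a number of important interview questions: In DDD, which layer should external API calls go in? How do you put a DDD architecture into practice? Describe your experience putting DDD into practice. What is your understanding of DDD? How do you keep RPC code from rotting and make it easy to upgrade? How do you split microservices? How do you deal with microservice explosion? Your...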

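Converting a flat (single-level) JSON object into a joined query string

Approaches: write your own helper; use the third-party library qs; use the browser API URLSearchParams. A note on URLSearchParams: const a = new URLSearchParams({bar:'foo',foo:20}); a.toString() // 'bar=foo&foo=20'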
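For readers working outside the browser, the same flat-object-to-query-string conversion can be sketched in Python with the standard library's `urllib.parse.urlencode`. This is a Python analogue rather than one of the three JavaScript approaches listed; the wrapper name `to_query_string` is hypothetical.

```python
from urllib.parse import urlencode


def to_query_string(params):
    """Join a flat mapping into a query string, percent-encoding keys and values."""
    return urlencode(params)


if __name__ == "__main__":
    # Same input as the URLSearchParams example.
    print(to_query_string({"bar": "foo", "foo": 20}))
```

Like URLSearchParams, `urlencode` converts non-string values to strings and keeps the insertion order of the keys. Nested objects are not expanded, which matches the "single-level" restriction in the title.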

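[Godot 4 Self-Study Handbook] Lesson 39: Using a shader to add a layer of fog to the game

Today we use a shader to add a layer of fog to the underground palace scene to build some atmosphere. First, a look at the result. I. Create a ParallaxBackground root node: create a new scene, choose ParallaxBackground as the root node, name it Fog, and save the scene in the Component folder. ParallaxBackground uses one or more ParallaxLayer child nodes to create a parallax effect. Each ParallaxLay...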

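In VC6, the CreateThread callback cannot be generated directly from a function template; a wrapper layer is needed

The callback for CreateThread has the form: DWORD WINAPI XXX(LPVOID lpv). Usage example: DWORD WINAPI CB(LPVOID lpv) { return 0; } CreateThread(NULL, NULL, CB, NULL, NULL, NULL); When CB is changed to a function template, an error occurs: error C2664: 'CreateTh...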

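1.9 Displaying DICOM images with an overlay (a single overlay layer)

The links below are the articles in this series; any shortcomings can be discussed in the comments. Series articles: 1.1 Introduction to the DICOM protocol and its applications; 1.2 Dissecting the DICOM imaging protocol; 1.3 Implementation approach for the DICOM imaging protocol; 1.4 Computing CT values of DICOM images; 1.5 Converting DICOM CT values to RGB; 1.6 Basic operations on DICOM images; 1.7 DICOM hierarchy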

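Which layer of the test pyramid are you on? (Part 2)

Part 1 of "Which layer of the test pyramid are you on?" introduced the importance of automated testing and the test pyramid. The test pyramid consists of unit tests, service tests, and UI tests; what is each of them? In this article we look at the layers of the test pyramid in detail. I. Unit tests: unit testing verifies the correctness of program modules (the smallest unit of software design) and improves code quality and maintainability. There is no standard answer to what counts as "a unit"; each person can decide based on their own programming paradigm and language environment.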

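Doris's three data models explained, and which model to choose for each data warehouse layer

Apache Doris is a distributed SQL query and analytics engine used for offline data warehouse development. When building an offline data warehouse with Doris, you can use three data models: Duplicate, Aggregate, and Unique. Each model has its own characteristics and suitable scenarios, and each comes with different recommendations for the different layers of a data warehouse. Duplicate model: the Duplicate model suits ad-hoc queries over arbitrary dimensions; in this model, data is completely...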

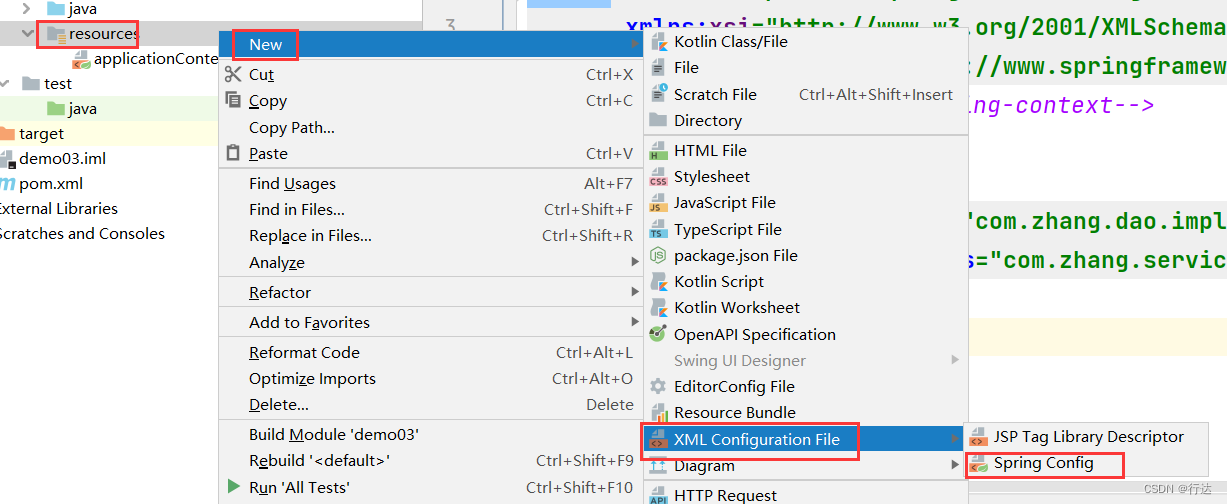

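The Spring Qi-Refining Path (Qi Refining, Level 1)

Contents: I. IoC: 1.1 What is inversion of control? 1.2 What is an IoC container? 1.3 What the IoC container does; 1.4 What does the IoC container hold? II. DI: 2.1 What is dependency injection? 2.2 What dependency injection does. III. Implementing an IoC example: 3.1 Download Maven; 3.2 Configure Maven's settings.xml file; 3.2.1 Configure the local repository; 3.2.2 Configure the central repository; 3.2.3 Configure the JDK version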

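The five levels of making money: which one are you on?

For most people, making money is hard and exhausting; even working from dawn to dusk, they still earn little. For the wealthy, the more they earn the more leisure they have, and money comes in even when they do nothing. That is the difference between levels of making money, and it determines how hard or easy earning is. Today I want to talk about which level you are on. 01 Five factors that affect a person's earning ability. Specialty: as the saying goes, men fear choosing the wrong trade and women fear marrying the wrong man; in today's society of gender equality, everyone fears choosing the wrong trade. This shows how much a person's field of study and industry matter for earning money, and even for life...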

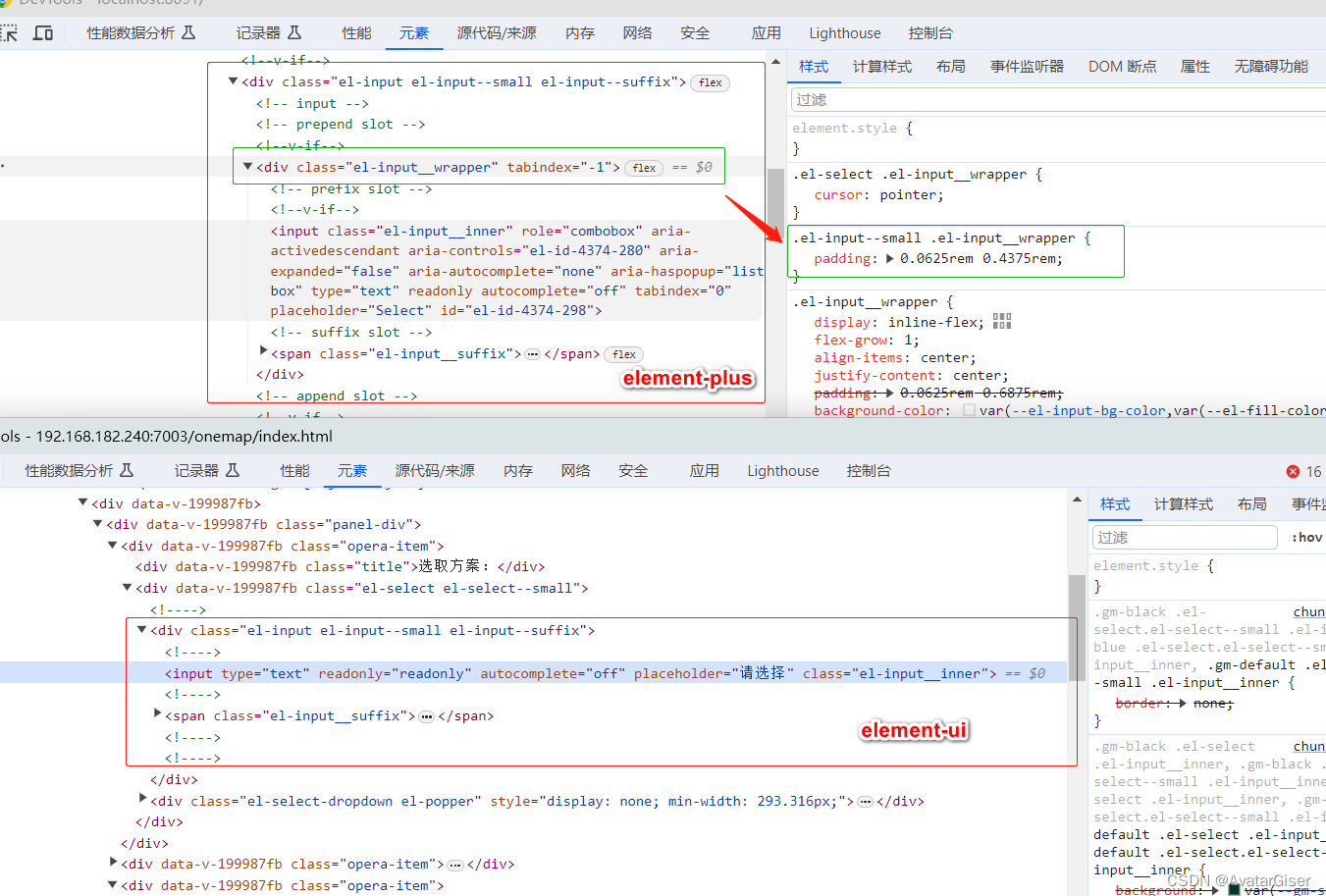

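"Differences between ElementPlus and ElementUI": el-input is wrapped in an extra el-input__wrapper layer

Difference: in element-ui's el-input, the <input> tag is the direct child of <div class="el-input">; in element-plus's el-input, there is an extra <div class="el-input__wrapper"> layer between <div class="el-input"> and the <input> tag, and it has padding set. PS: if you are coming from an element-ui pro...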

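Why does a 3D-rendered model look as if it is covered by a layer of fog? (模大狮模型网)

When a 3D-rendered model looks as if it is covered by a layer of fog, the likely causes include the following. Ambient light set too high: if the ambient light is too strong, the whole scene looks as if it is shrouded in a thin mist. Try lowering the ambient light intensity so the scene looks clearer. Improper material transparency: check the model's material properties, especially transparency and reflection. If transparency is set too high, the model may look hazy, as if surrounded by fog. Renderer settings: some renderers, under their default settings, may...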

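Validation scope of @Valid: it validates only one level; if the object contains a nested child object, the child's properties are not validated

@Valid validates only one level; if the object contains a nested child object, the child's properties are not validated. public ApiResultDto<List<UUID>> batchAdd(@RequestBody @Valid ResourceBatchSaveDto req) { return dtResourceService.batchSaveResource(req); } Here @Valid validates only ResourceBatchSa...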

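adb: opening the system Settings screen and going back (Back / return / up one level)

Scenario: some large-screen Android devices (such as self-checkout terminals) have no virtual navigation keys, so when you need to send them back to the home screen or exit an app, there seems to be no way in (doge). For example, with no virtual keys, how do you return to the home screen, open Settings, go up one level, exit, or go back? Solution: this is where adb comes in. To simulate pressing the Back key, use this command: adb shell input keyevent BACK. You can also open the system Settings page directly...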
