Sparsity: topic notes

Why is L1 regularization supposed to lead to more sparsity than L2?

It comes down to the geometric shape of the L1 norm ball. Here is some intentionally non-rigorous intuition: suppose you have a linear system Ax=b for which you know there exists a sparse solution x∗, and that
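To make the geometric picture concrete, here is a minimal sketch for the smallest possible underdetermined system: a single equation a·x = b in several unknowns. The function names `min_l2_solution` and `min_l1_solution` and the numbers are illustrative assumptions, not taken from the original answer. For one equation both minimizers have closed forms: the minimum-L2 solution is the projection onto `a` (generally dense), while a minimum-L1 solution puts all of its mass on the coordinate with the largest |a_i| (a vertex of the L1 ball, hence sparse).

```python
def min_l2_solution(a, b):
    """Minimum-L2-norm solution of the single equation a . x = b."""
    scale = b / sum(ai * ai for ai in a)
    return [ai * scale for ai in a]


def min_l1_solution(a, b):
    """A minimum-L1-norm solution of a . x = b.

    For a single equation the optimum sits on a vertex of the L1 ball:
    everything goes on the coordinate with the largest |a_i|.
    """
    k = max(range(len(a)), key=lambda i: abs(a[i]))
    x = [0.0] * len(a)
    x[k] = b / a[k]
    return x


def l1_norm(x):
    return sum(abs(v) for v in x)


# Toy system (assumed values): x1 + 2*x2 = 2
a, b = [1.0, 2.0], 2.0
x_l2 = min_l2_solution(a, b)  # every coordinate is nonzero
x_l1 = min_l1_solution(a, b)  # only one coordinate is nonzero

print("L2 solution:", x_l2, "L1 norm:", l1_norm(x_l2))
print("L1 solution:", x_l1, "L1 norm:", l1_norm(x_l1))
```

Both vectors satisfy the equation, but only the L1 minimizer has an exact zero. With more equations there is no closed form in general, and the L1 problem is usually solved as a linear program.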

ReLU Strikes Back: Exploiting Activation Sparsity in Large Language Models

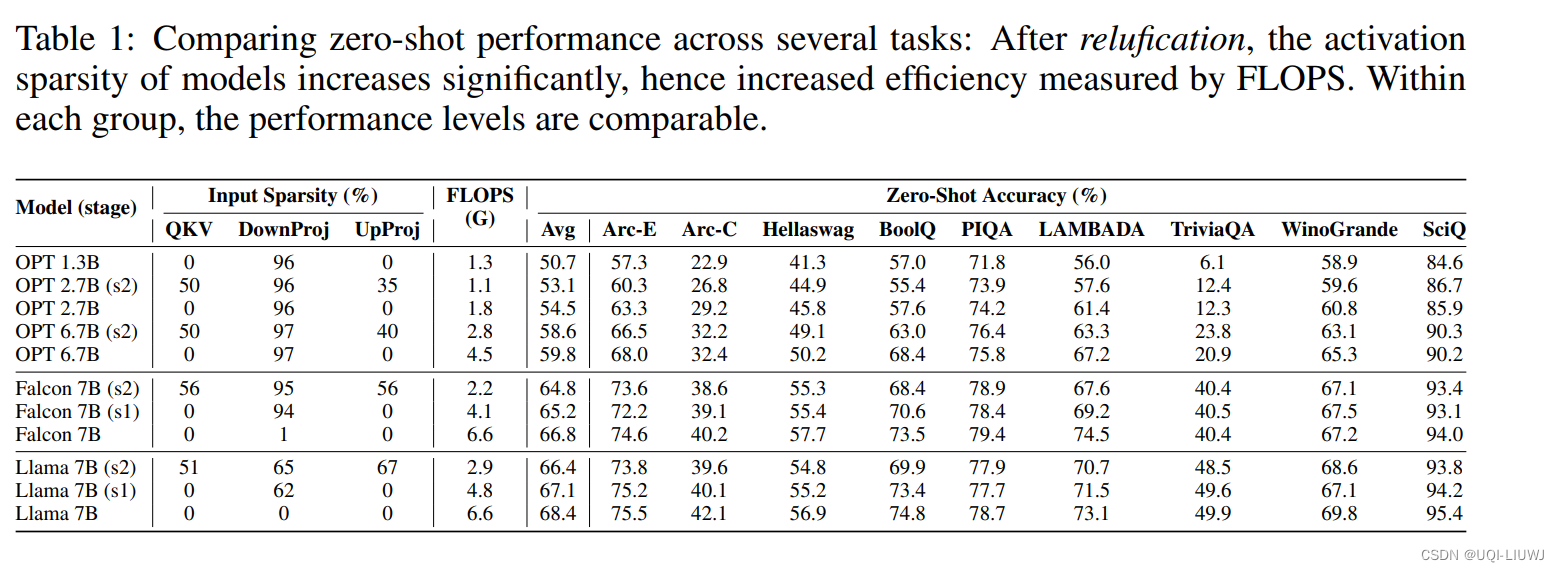

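ICLR 2024 oral; reviewer scores 6, 8, 8.

1 Intro

The LLM community currently tends to use GELU and SiLU as alternative activation functions, since they can improve LLM prediction accuracy in some cases. From the standpoint of reducing compute, however, the paper argues that the classic ReLU function has a negligible effect on model convergence and performance, while significantly reducing computation and weight I/O.

2 Does the activation function affect performance?

The experiments use open-source large models: OPT, Llama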
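As a rough illustration of the compute and weight-I/O claim in the intro, the sketch below compares how many exact zeros ReLU, SiLU, and GELU produce, and shows that a down-projection can skip the weight rows paired with zero activations. This is a toy under stated assumptions, not the paper's implementation: the pre-activations are standard Gaussian samples, the sizes (1024 hidden units, 8 outputs) are arbitrary, and `zero_fraction` and `down_project` are hypothetical helper names.

```python
import math
import random


def relu(x):
    return max(0.0, x)


def silu(x):
    return x / (1.0 + math.exp(-x))


def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def zero_fraction(values):
    """Fraction of entries that are exactly zero."""
    return sum(1 for v in values if v == 0.0) / len(values)


def down_project(hidden, w_down, skip_zeros):
    """Compute hidden @ w_down; also return how many weight rows were read."""
    out = [0.0] * len(w_down[0])
    rows_read = 0
    for h, row in zip(hidden, w_down):
        if skip_zeros and h == 0.0:
            continue  # a zero activation contributes nothing, so skip its row
        rows_read += 1
        for j, w in enumerate(row):
            out[j] += h * w
    return out, rows_read


rng = random.Random(0)
# Assumption: Gaussian pre-activations stand in for real FFN inputs.
pre_act = [rng.gauss(0.0, 1.0) for _ in range(1024)]
w_down = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(1024)]

for name, fn in (("relu", relu), ("silu", silu), ("gelu", gelu)):
    print(name, "zero fraction:", zero_fraction([fn(x) for x in pre_act]))

hidden_relu = [relu(x) for x in pre_act]
dense_out, dense_rows = down_project(hidden_relu, w_down, skip_zeros=False)
sparse_out, sparse_rows = down_project(hidden_relu, w_down, skip_zeros=True)
print("rows read (dense vs. skipping zeros):", dense_rows, sparse_rows)
```

In this toy setting only ReLU yields exact zeros, so only ReLU lets the second matrix multiply skip rows without changing the result. Actual sparsity levels in trained LLMs depend on the model and layer and are not reproduced here.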