A casual look at AutoGluon-Tabular.

The paper mentions that a set of models is prepared in advance and trained in an order given by a hand-set priority:

    # Higher values indicate higher priority, priority dictates the order models are trained for a given level.
    DEFAULT_MODEL_PRIORITY = dict(RF=100, XT=90, KNN=80, GBM=70, CAT=60, NN=50, LR=40, custom=0)
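To make the effect of the priority table concrete, here is a minimal sketch of how such a table could decide training order. The function `training_order` and the fallback to the `custom` priority for unknown keys are assumptions made for illustration, not AutoGluon's actual code.

```python
# Priority table copied from the snippet above; a higher value means "train earlier".
DEFAULT_MODEL_PRIORITY = dict(RF=100, XT=90, KNN=80, GBM=70, CAT=60, NN=50, LR=40, custom=0)


def training_order(model_keys, priority=DEFAULT_MODEL_PRIORITY):
    """Return model keys sorted from highest to lowest priority.

    Keys missing from the table fall back to the 'custom' priority
    (an assumption of this sketch, not confirmed AutoGluon behaviour).
    """
    return sorted(
        model_keys,
        key=lambda key: priority.get(key, priority["custom"]),
        reverse=True,
    )


# 'my_model' is a hypothetical user-defined model key.
order = training_order(["LR", "GBM", "RF", "NN", "my_model"])
print(order)  # ['RF', 'GBM', 'NN', 'LR', 'my_model']
```

With these values, the random forest is always trained first and any custom model last.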

The values feel rather arbitrary.

    MODEL_TYPES = dict(RF=RFModel, XT=XTModel, KNN=KNNModel, GBM=LGBModel, CAT=CatboostModel, NN=TabularNeuralNetModel, LR=LinearModel)

Maybe Amazon's budget was limited, so only these few models were integrated.

In autogluon.scheduler.fifo.FIFOScheduler#run, I finally see a trace of hyperparameter optimization. Inspecting self.searcher there gives:

    self.searcher

    RandomSearcher(
    ConfigSpace: Configuration space object:
      Hyperparameters:
        feature_fraction, Type: UniformFloat, Range: [0.75, 1.0], Default: 1.0
        learning_rate, Type: UniformFloat, Range: [0.01, 0.1], Default: 0.0316227766, on log-scale
        min_data_in_leaf, Type: UniformInteger, Range: [2, 30], Default: 20
        num_leaves, Type: UniformInteger, Range: [16, 96], Default: 31
    .
    Number of Trials: 0.
    Best Config: {}
    Best Reward: -inf)

So this is random search.
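As a rough illustration of what random search over this space amounts to, the sketch below samples configurations from the four ranges printed above and keeps the one with the highest reward. It uses only the standard library rather than AutoGluon or ConfigSpace; `sample_config`, `random_search`, and `toy_objective` are hypothetical names, and the objective is a made-up stand-in for a real validation score.

```python
import math
import random

# Ranges copied from the searcher repr above: name -> (kind, low, high).
SEARCH_SPACE = {
    "feature_fraction": ("float", 0.75, 1.0),
    "learning_rate": ("log_float", 0.01, 0.1),  # sampled on a log scale
    "min_data_in_leaf": ("int", 2, 30),
    "num_leaves": ("int", 16, 96),
}


def sample_config(rng):
    """Draw one configuration uniformly at random from SEARCH_SPACE."""
    config = {}
    for name, (kind, low, high) in SEARCH_SPACE.items():
        if kind == "float":
            config[name] = rng.uniform(low, high)
        elif kind == "log_float":
            # Uniform in log space, then mapped back to the original scale.
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        else:
            config[name] = rng.randint(low, high)  # both bounds inclusive
    return config


def random_search(objective, num_trials, seed=0):
    """Evaluate num_trials random configs and return the best (config, reward)."""
    rng = random.Random(seed)
    best_config, best_reward = {}, float("-inf")  # same initial state as the repr
    for _ in range(num_trials):
        config = sample_config(rng)
        reward = objective(config)
        if reward > best_reward:
            best_config, best_reward = config, reward
    return best_config, best_reward


def toy_objective(config):
    """Made-up reward that peaks near learning_rate=0.03 and num_leaves=31."""
    lr_penalty = abs(math.log10(config["learning_rate"]) - math.log10(0.03))
    leaves_penalty = abs(config["num_leaves"] - 31) / 100
    return -(lr_penalty + leaves_penalty)


best_config, best_reward = random_search(toy_objective, num_trials=50)
print(best_config, best_reward)
```

The default learning rate in the repr, 0.0316227766, is the geometric mean of 0.01 and 0.1, which is the midpoint of the range on a log scale.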

Finding the hyperparameter search spaces that AG configures for each model would also be valuable.

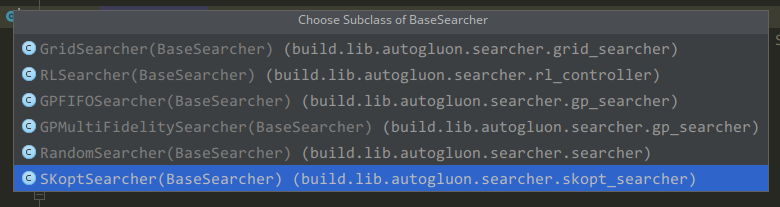

Which classes inherit from BaseSearcher?
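One way to answer this kind of question without an IDE is to walk `__subclasses__()` recursively. The sketch below shows the idea on a hypothetical stand-in hierarchy; the `Toy*` classes are invented for illustration and say nothing about AutoGluon's real class tree.

```python
def all_subclasses(cls):
    """Recursively collect every direct and indirect subclass of cls."""
    found = []
    for sub in cls.__subclasses__():
        found.append(sub)
        found.extend(all_subclasses(sub))
    return found


# Hypothetical stand-ins, NOT the real AutoGluon classes.
class ToyBaseSearcher:
    pass


class ToyRandomSearcher(ToyBaseSearcher):
    pass


class ToyGPSearcher(ToyBaseSearcher):
    pass


class ToyCustomSearcher(ToyRandomSearcher):
    pass


names = sorted(cls.__name__ for cls in all_subclasses(ToyBaseSearcher))
print(names)  # ['ToyCustomSearcher', 'ToyGPSearcher', 'ToyRandomSearcher']
```

Applied to the real `BaseSearcher`, the same function would only report subclasses whose modules have already been imported, since a class is registered with its parent when its definition is executed.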

The class related to GP (Gaussian process) optimization is autogluon.searcher.bayesopt.autogluon.gp_fifo_searcher.GPFIFOSearcher

This should be the NN that the paper uses to fit tabular data:

autogluon.utils.tabular.ml.models.tabular_nn.tabular_nn_model.TabularNeuralNetModel

autogluon.utils.tabular.ml.models.tabular_nn.embednet.EmbedNet

The ColumnTransformer is constructed in:

autogluon.utils.tabular.ml.models.tabular_nn.tabular_nn_model.TabularNeuralNetModel#_create_preprocessor
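For readers unfamiliar with `ColumnTransformer`, here is a minimal scikit-learn sketch of the general pattern: different preprocessing pipelines are applied to numeric and categorical columns and the results are concatenated. The specific steps (median imputation, standard scaling, one-hot encoding), the helper `build_preprocessor`, and the toy columns are assumptions chosen for illustration; they are not taken from `_create_preprocessor`.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler


def build_preprocessor(numeric_cols, categorical_cols):
    """Build a ColumnTransformer with separate numeric and categorical pipelines."""
    numeric = Pipeline([
        ("impute", SimpleImputer(strategy="median")),  # fill missing values
        ("scale", StandardScaler()),                   # zero mean, unit variance
    ])
    categorical = Pipeline([
        ("onehot", OneHotEncoder(handle_unknown="ignore")),
    ])
    return ColumnTransformer(
        transformers=[
            ("num", numeric, numeric_cols),
            ("cat", categorical, categorical_cols),
        ],
        sparse_threshold=0.0,  # always return a dense array
    )


# Hypothetical toy data: two numeric columns (one with a missing value)
# and one categorical column.
toy = pd.DataFrame({
    "age": [25.0, 32.0, None, 41.0],
    "income": [3.0, 5.5, 4.0, 7.0],
    "city": ["a", "b", "a", "b"],
})

preprocessor = build_preprocessor(["age", "income"], ["city"])
features = preprocessor.fit_transform(toy)
print(features.shape)  # (4, 4): two scaled numeric columns + two one-hot columns
```

The output columns follow the order of the `transformers` list, so the scaled numeric features come first and the one-hot columns after them.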

TabularNN is still worth studying; I'll take a closer look when I have time.
