This article walks through the source code of an audio/video player built with the Android NDK.

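Contents:

- Configuring the project for CMake (Gradle block)
- Project flowchart
- Overview of the FFmpeg demuxing and decoding API
- activity_main.xml
- Building the Java upper layer for the C++ player
- Java layer: MainActivity (upper layer)
- Implementing the native functions (JNI functions)
- C++ implementation file
- C++ header file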

Configuring the project for CMake (Gradle block):

```groovy
android {
    compileSdkVersion 30
    buildToolsVersion "30.0.3"

    defaultConfig {
        applicationId "cn.itcast.newproject"
        minSdkVersion 17
        targetSdkVersion 28

        externalNativeBuild {
            cmake {
                cppFlags ""
                abiFilters "armeabi-v7a" // CMakeLists.txt is built only for this ABI
            }
        }
        ndk {
            abiFilters("armeabi-v7a") // only this ABI's libnative-lib.so is packaged into apk/lib/
        }

        versionCode 1
        versionName "1.0"
        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
    }

    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android-optimize.txt'), 'proguard-rules.pro'
        }
    }
}
```

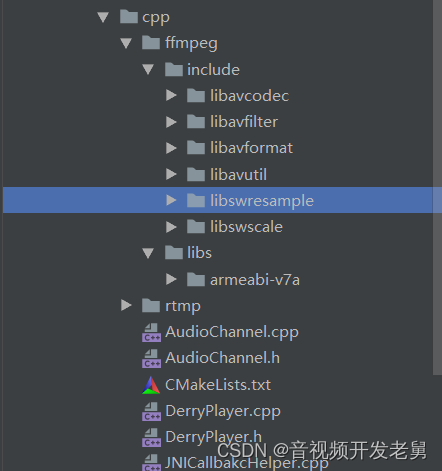

Build FFmpeg and rtmp yourself (importing someone else's prebuilt libraries also works):

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.6.4111459)

set(FFMPEG ${CMAKE_SOURCE_DIR}/ffmpeg) # path to FFmpeg
set(RTMP ${CMAKE_SOURCE_DIR}/rtmp)     # path to rtmp

include_directories(${FFMPEG}/include) # FFmpeg headers

# Library search paths for the prebuilt FFmpeg and rtmp libraries
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -L${FFMPEG}/libs/${CMAKE_ANDROID_ARCH_ABI}")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -L${RTMP}/libs/${CMAKE_ANDROID_ARCH_ABI}")

# Collect all source files in bulk
file(GLOB src_files *.cpp)

add_library(
        native-lib   # the main library, libnative-lib.so
        SHARED       # shared library
        ${src_files})

target_link_libraries(
        native-lib   # the main library, libnative-lib.so
        # Wrap the FFmpeg libraries in a group so their link order does not matter
        -Wl,--start-group
        avcodec avfilter avformat avutil swresample swscale
        -Wl,--end-group
        log          # Android log library, used for printing logs
        z            # the NDK's libz.so; FFmpeg needs zlib support
        rtmp         # rtmp, covered in detail later
        android      # covered later; for now: provides ANativeWindow, used to render frames
        OpenSLES     # covered later; for now: OpenSL ES, used to play audio
)
```

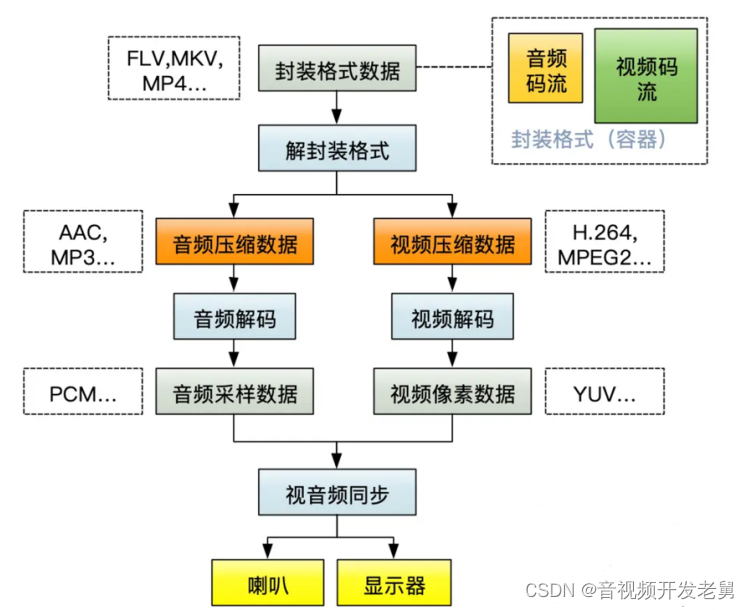

First, a quick review of the earlier workflow.

Project flowchart:

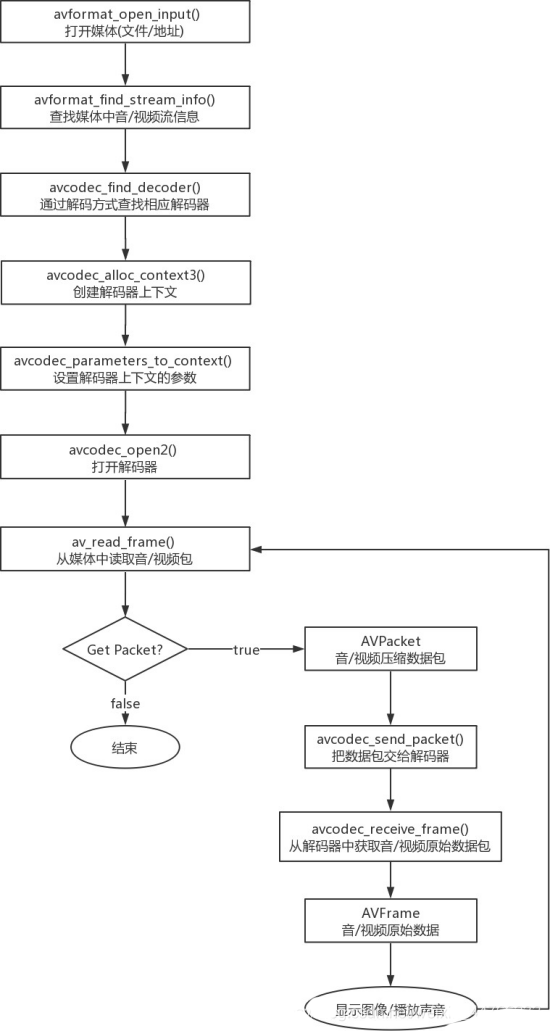

Overview of the FFmpeg demuxing and decoding API:

activity_main.xml:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    tools:context=".MainActivity">

    <SurfaceView
        android:id="@+id/surfaceView"
        android:layout_width="match_parent"
        android:layout_height="200dp" />

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="30dp"
        android:layout_margin="5dp">

        <TextView
            android:id="@+id/tv_time"
            android:layout_width="wrap_content"
            android:layout_height="match_parent"
            android:gravity="center"
            android:text="00:00/00:00"
            android:visibility="gone" />

        <SeekBar
            android:id="@+id/seekBar"
            android:layout_width="0dp"
            android:layout_height="match_parent"
            android:layout_weight="1"
            android:max="100"
            android:visibility="gone" />
    </LinearLayout>
</LinearLayout>
```

Building the Java upper layer for the C++ player:

The reasoning is explained in the code comments.

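```java
package cn.itcast.newproject;

public class PlayerClass {

    static {
        System.loadLibrary("native-lib");
    }

    // Step 1: declare the listener.
    // When the native (lower) layer finishes its work, it tells the upper layer
    // through this interface that preparation succeeded. MainActivity uses it.
    private OnPreparedListener onPreparedListener;

    public PlayerClass() {
    }

    // Step 2: set the media source (a file path, or a live rtmp URL).
    // Here a local MP4 file on the SD card is used.
    // Data source, as with MediaPlayer's setDataSource
    private String dataSource;

    public void setDataSource(String dataSource) {
        this.dataSource = dataSource;
    }

    // Step 3: prepare for playback. Demuxing the container is not guaranteed to
    // succeed, so the source must be opened and checked first.
    // The listener is called only after that succeeds.
    public void prepare() {
        prepareNative(dataSource); // pass the media source down
    }

    // Step 4: start playback
    public void start() {
        startNative();
    }

    // Step 5: stop playback
    public void stop() {
        stopNative();
    }

    // Step 6: release resources when the app closes
    public void release() {
        releaseNative();
    }

    // Called by the native layer through JNI reflection.
    // The native (C++) layer is the lower layer; it calls back into this upper-layer Java method.
    public void onPrepared() {
        // Invoke the callback only if a listener has been set
        if (onPreparedListener != null) {
            onPreparedListener.onPrepared();
        }
    }

    // Register the "prepared" listener
    public void setOnPreparedListener(OnPreparedListener onPreparedListener) {
        this.onPreparedListener = onPreparedListener;
    }

    // Listener notified when preparation succeeds
    public interface OnPreparedListener {
        void onPrepared();
    }

    // Native methods, implemented in C++.
    // Software decoding is used; hardware decoding needs too much parameter tuning, so it is skipped.
    private native void prepareNative(String dataSource);
    private native void startNative();
    private native void stopNative();
    private native void releaseNative();
}
```

Java layer: MainActivity (upper layer):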

```java
package cn.itcast.newproject;

import androidx.appcompat.app.AppCompatActivity;

import android.os.Bundle;
import android.os.Environment;
import android.view.WindowManager;
import android.widget.Toast;

import java.io.File;

public class MainActivity extends AppCompatActivity {

    private PlayerClass player;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        getWindow().setFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON,
                WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);
        setContentView(R.layout.activity_main);

        // Create the player
        player = new PlayerClass();
        // Reading external storage needs READ_EXTERNAL_STORAGE (granted at runtime on Android 6.0+)
        player.setDataSource(new File(Environment.getExternalStorageDirectory()
                + File.separator + "demo.mp4").getAbsolutePath());

        // Callback for successful preparation.
        // It is called from C++, possibly on a child thread.
        player.setOnPreparedListener(new PlayerClass.OnPreparedListener() {
            @Override
            public void onPrepared() {
                runOnUiThread(new Runnable() {
                    @Override
                    public void run() {
                        Toast.makeText(MainActivity.this, "Prepared, playback is about to start",
                                Toast.LENGTH_SHORT).show();
                    }
                });
                // Preparation succeeded: ask C++ to start playback
                player.start();
            }
        });
    }

    @Override // ActivityThread.java Handler
    protected void onResume() { // triggers preparation
        super.onResume();
        // The request goes straight down to C++. If C++ prepares successfully, it calls
        // back, the Toast is shown via runOnUiThread, and player.start() calls back into C++.
        player.prepare();
    }

    @Override
    protected void onStop() {
        super.onStop();
        player.stop();
    }

    // Release resources when the Activity is destroyed
    @Override
    protected void onDestroy() {
        super.onDestroy();
        player.release();
    }
}
```

Implementing the native functions (JNI functions):

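```cpp
#include <jni.h>
#include <string>
#include "DerryPlayer.h"
#include "JNICallbakcHelper.h"

extern "C" {
#include <libavutil/avutil.h>
}

// JNI function names must match the package and class that declare the native method
// (cn.itcast.newproject.MainActivity / cn.itcast.newproject.PlayerClass).
// getFFmpegVersion is only bound if MainActivity declares a matching native method.
extern "C" JNIEXPORT jstring JNICALL
Java_cn_itcast_newproject_MainActivity_getFFmpegVersion(JNIEnv *env, jobject /* this */) {
    std::string info = "FFmpeg version: ";
    info.append(av_version_info());
    return env->NewStringUTF(info.c_str());
}

DerryPlayer *player = nullptr;
JavaVM *vm = nullptr;

// Cache the JavaVM so that C++ child threads can obtain a JNIEnv later
extern "C" JNIEXPORT jint JNICALL JNI_OnLoad(JavaVM *vm, void *reserved) {
    ::vm = vm;
    return JNI_VERSION_1_6;
}

// prepareNative
extern "C"
JNIEXPORT void JNICALL
Java_cn_itcast_newproject_PlayerClass_prepareNative(JNIEnv *env, jobject job, jstring data_source) {
    const char *data_source_ = env->GetStringUTFChars(data_source, nullptr);
    // The helper calls back to Java from either the C++ main thread or a child thread.
    // `job` is a local reference, so the helper must hold a global reference (NewGlobalRef)
    // to use it from a child thread.
    auto *helper = new JNICallbakcHelper(vm, env, job);
    player = new DerryPlayer(data_source_, helper); // DerryPlayer deep-copies the path
    player->prepare();
    env->ReleaseStringUTFChars(data_source, data_source_);
}

// startNative
extern "C"
JNIEXPORT void JNICALL
Java_cn_itcast_newproject_PlayerClass_startNative(JNIEnv *env, jobject thiz) {
    if (player) {
        // player->start(); // start() is not implemented yet
    }
}

// stopNative
extern "C"
JNIEXPORT void JNICALL
Java_cn_itcast_newproject_PlayerClass_stopNative(JNIEnv *env, jobject thiz) {
    // not implemented yet
}

// releaseNative
extern "C"
JNIEXPORT void JNICALL
Java_cn_itcast_newproject_PlayerClass_releaseNative(JNIEnv *env, jobject thiz) {
    // not implemented yet
}
```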
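The JVM binds each `native` method by looking up a C symbol whose name is derived from the declaring class and the method. The JNI specification calls this the "short name": `Java_`, then the mangled fully qualified class name, then `_`, then the mangled method name. If a function's name does not follow the Java package and class, it is never bound. For example, `Java_com_derry_player_DerryPlayer_prepareNative` does not match a method declared in `cn.itcast.newproject.PlayerClass`, so the call fails with `UnsatisfiedLinkError`. The sketch below computes the short name for ASCII input. `mangle` and `jniShortName` are illustrative helpers, not part of any JNI API, and the "long name" suffix used for overloaded methods is left out.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Mangle a Java name following the JNI escaping rules:
// '.' or '/' -> '_', '_' -> "_1", ';' -> "_2", '[' -> "_3",
// any other non-alphanumeric character -> "_0xxxx" (4 lowercase hex digits).
// This sketch assumes ASCII input; real JNI escapes UTF-16 code units.
std::string mangle(const std::string &name) {
    std::string out;
    for (unsigned char c : name) {
        if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
            out += static_cast<char>(c);
        } else if (c == '.' || c == '/') {
            out += '_';
        } else if (c == '_') {
            out += "_1";
        } else if (c == ';') {
            out += "_2";
        } else if (c == '[') {
            out += "_3";
        } else {
            char buf[8];
            std::snprintf(buf, sizeof buf, "_0%04x", static_cast<unsigned>(c));
            out += buf;
        }
    }
    return out;
}

// Short JNI symbol name: "Java_" + mangled class + "_" + mangled method
std::string jniShortName(const std::string &className, const std::string &method) {
    return "Java_" + mangle(className) + "_" + mangle(method);
}
```

For example, `jniShortName("cn.itcast.newproject.PlayerClass", "prepareNative")` yields `Java_cn_itcast_newproject_PlayerClass_prepareNative`. A `$` in an inner-class name becomes `_00024`.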

C++ implementation file:

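```cpp
#include "DerryPlayer.h"

DerryPlayer::DerryPlayer(const char *data_source, JNICallbakcHelper *helper) {
    // this->data_source = data_source;
    // Once the caller releases its string, this would leave a dangling pointer,
    // so make a deep copy instead.
    // this->data_source = new char[strlen(data_source)]; // wrong: one byte short
    // Java: demo.mp4
    // C:    demo.mp4\0  (C strings end with \0; strlen does not count it, so add 1)
    this->data_source = new char[strlen(data_source) + 1];
    strcpy(this->data_source, data_source); // copy the source into the member
    this->helper = helper;
}

DerryPlayer::~DerryPlayer() {
    if (data_source) {
        delete[] data_source; // allocated with new[], so release with delete[]
    }
    if (helper) {
        delete helper;
    }
}

// Thread entry point for pthread_create. It is unrelated to the DerryPlayer object,
// so it cannot use DerryPlayer's private members (there is no `this`; a call like
// avformat_open_input(0, this->data_source) would not compile here).
void *task_prepare(void *args) {
    auto *player = static_cast<DerryPlayer *>(args);
    player->prepare_();
    return nullptr; // must return a value; a missing return is a pitfall that is hard to track down
}

void DerryPlayer::prepare_() { // runs on the child thread
    /**
     * Step 1: open the media address (file path or rtmp live URL)
     */
    formatContext = avformat_alloc_context();

    AVDictionary *dictionary = nullptr;
    av_dict_set(&dictionary, "timeout", "5000000", 0); // in microseconds, i.e. 5 s

    /*
     * 1. AVFormatContext **: the context to fill in
     * 2. the path or URL
     * 3. AVInputFormat *fmt: for cameras/microphones on Mac and Windows; not needed here
     * 4. AVDictionary **options: e.g. the HTTP connection timeout or the rtmp open timeout
     */
    int r = avformat_open_input(&formatContext, data_source, nullptr, &dictionary);
    av_dict_free(&dictionary); // release the dictionary

    if (r) {
        // TODO: report the error to Java through a JNI reflection callback,
        // e.g. a Toast saying the media format could not be opened
        return;
    }

    // Step 2: find the audio/video stream information in the media
    r = avformat_find_stream_info(formatContext, nullptr);
    if (r < 0) {
        // TODO: JNI reflection callback to Java
        return;
    }

    // Loop over the streams, using the stream count
    for (unsigned int i = 0; i < formatContext->nb_streams; ++i) {
        // Get the media stream (video or audio)
        AVStream *stream = formatContext->streams[i];

        // Step 5: get the codec parameters from the stream;
        // the decoder needs them later (width, height, etc.)
        AVCodecParameters *parameters = stream->codecpar;

        // Step 6: find the decoder for these parameters
        // (const, because FFmpeg 5+ returns const AVCodec *)
        const AVCodec *codec = avcodec_find_decoder(parameters->codec_id);
        if (!codec) {
            // TODO: JNI reflection callback to Java
            return;
        }

        // Step 7: allocate the codec context
        // (in this version it is not stored or freed yet)
        AVCodecContext *codecContext = avcodec_alloc_context3(codec);
        if (!codecContext) {
            // TODO: JNI reflection callback to Java
            return;
        }

        // Step 8: copy the parameters into the empty codec context
        r = avcodec_parameters_to_context(codecContext, parameters);
        if (r < 0) {
            // TODO: JNI reflection callback to Java
            return;
        }

        // Step 9: open the decoder
        r = avcodec_open2(codecContext, codec, nullptr);
        if (r) { // non-zero means failure
            // TODO: JNI reflection callback to Java
            return;
        }

        // Step 10: get the stream type from the codec parameters: audio or video
        if (parameters->codec_type == AVMediaType::AVMEDIA_TYPE_AUDIO) {
            audio_channel = new AudioChannel();
        } else if (parameters->codec_type == AVMediaType::AVMEDIA_TYPE_VIDEO) {
            video_channel = new VideoChannel();
        }
    } // for end

    /**
     * Step 11: robustness check: the media has neither audio nor video
     */
    if (!audio_channel && !video_channel) {
        // TODO: JNI reflection callback to Java
        return;
    }

    // Step 12: the media is usable; notify the upper layer
    if (helper) {
        helper->onPrepared(THREAD_CHILD);
    }
}

void DerryPlayer::prepare() {
    // Finally, create the child thread that runs prepare_()
    pthread_create(&pid_prepare, nullptr, task_prepare, this);
}
```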
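Two details in this file are easy to get wrong. First, `data_source` must be an owned copy, because the JNI code calls `ReleaseStringUTFChars` right after `prepare()` returns, while the child thread may not have started yet. Second, `pthread_create` cannot call a member function directly. The following is a minimal, library-free sketch of that pattern under stated assumptions. `MiniPlayer`, `join()`, `prepared()` and `source()` are hypothetical names, and there is no FFmpeg or JNI. `prepare_()` only records on the worker thread whether the path is non-empty. `join()` exists only so that the sketch can be checked; the article does not show whether the real player joins or detaches its thread.

```cpp
#include <cassert>
#include <cstring>
#include <pthread.h>

// Thread entry point, declared first so prepare() can pass it to pthread_create
void *task_prepare(void *args);

// Hypothetical stand-in for DerryPlayer: same ownership and threading pattern,
// but no FFmpeg and no JNI.
class MiniPlayer {
public:
    explicit MiniPlayer(const char *source) {
        // Deep copy: strlen() does not count the terminating '\0', so allocate one extra byte
        data_source = new char[std::strlen(source) + 1];
        std::strcpy(data_source, source);
    }

    ~MiniPlayer() {
        delete[] data_source; // memory from new[] must be released with delete[]
    }

    // The class owns a raw pointer, so shallow copies are forbidden
    MiniPlayer(const MiniPlayer &) = delete;
    MiniPlayer &operator=(const MiniPlayer &) = delete;

    // Starts the worker thread; returns 0 on success, otherwise a pthread error code
    int prepare() {
        return pthread_create(&pid_prepare, nullptr, task_prepare, this);
    }

    // Waits for the worker thread; call only if prepare() returned 0
    void join() {
        pthread_join(pid_prepare, nullptr);
    }

    // Runs on the worker thread; the real player opens the media here
    void prepare_() {
        prepared_flag = std::strlen(data_source) > 0;
    }

    bool prepared() const { return prepared_flag; }
    const char *source() const { return data_source; }

private:
    char *data_source = nullptr;
    pthread_t pid_prepare{};
    bool prepared_flag = false;
};

// A free function has no `this` and no access to private members,
// so it casts the argument back and calls the public prepare_().
void *task_prepare(void *args) {
    auto *player = static_cast<MiniPlayer *>(args);
    player->prepare_();
    return nullptr; // a void* thread function must return a value
}
```

For example, you can construct the object from a stack buffer holding `"demo.mp4"` and then overwrite that buffer. After calling `prepare()` and `join()`, `source()` still equals `"demo.mp4"` and `prepared()` is true.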

C++ header file:

```cpp
#ifndef PLAYERCLASS_PLAYERCLASS_H
#define PLAYERCLASS_PLAYERCLASS_H

#include <cstring>
#include <pthread.h>
#include "AudioChannel.h"
#include "VideoChannel.h"
#include "JNICallbakcHelper.h"
#include "util.h"

extern "C" { // FFmpeg is a C library, so its headers need C linkage
#include <libavformat/avformat.h>
}

class DerryPlayer {
private:
    char *data_source = nullptr; // owned deep copy of the media path
    pthread_t pid_prepare;
    AVFormatContext *formatContext = nullptr;
    AudioChannel *audio_channel = nullptr;
    VideoChannel *video_channel = nullptr;
    JNICallbakcHelper *helper = nullptr;

public:
    DerryPlayer(const char *data_source, JNICallbakcHelper *helper);
    ~DerryPlayer();

    void prepare();
    void prepare_();
};

#endif //PLAYERCLASS_PLAYERCLASS_H
```

Original article: "Android NDK 实现视音频播放器源码" (Android NDK audio/video player source code), 我爱音视频网.
