This article shows how to use Scrapy together with Selenium to collect live-room information from the huya/lol section.

Contents

Series Contents

Chapter 1: Using Scrapy + Selenium to collect live-room information from the huya/lol section

Preface

I. Code

huya_novideo.py

items.py

middlewares.py

pipelines.py

selenium_request.py

settings.py

II. Results

Summary

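Preface

- This post covers how to use Selenium inside Scrapy. Specifically:

- Create selenium_request.py, which defines the SeleniumRequest class.

- In the spider file (huya_novideo.py), mark the requests that need Selenium by yielding a SeleniumRequest; the downloader middleware checks for that type.

- Configure items.py, middlewares.py, pipelines.py and settings.py.

- This code is for learning and discussion only; do not use it commercially.

- If it infringes any rights, please contact me promptly and it will be removed.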
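The marker-class idea listed above can be sketched without Scrapy installed. In the block below, `Request` is a stand-in for `scrapy.Request` and `choose_downloader` is a hypothetical helper name; neither comes from the article's code. The sketch only illustrates how an empty subclass lets a middleware tell the two kinds of request apart.

```python
class Request:
    """Stand-in for scrapy.Request (hypothetical, for illustration only)."""

    def __init__(self, url):
        self.url = url


class SeleniumRequest(Request):
    """Empty marker subclass: it adds no data, only a distinct type."""


def choose_downloader(request):
    """Return the name of the downloader a middleware would pick."""
    if isinstance(request, SeleniumRequest):
        return "selenium"
    return "default"


if __name__ == "__main__":
    for req in (Request("https://example.com/a"),
                SeleniumRequest("https://example.com/b")):
        print(req.url, "->", choose_downloader(req))
```

Because the decision rests on the request type alone, the spider never has to know how the page is fetched; it only chooses which class to yield.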

I. Code

huya_novideo.py

import datetime

import scrapy

from huya.selenium_request import SeleniumRequest
from ..items import HuyaItem

# import json
# from scrapy.linkextractors import LinkExtractor


class HuyaNovideoSpider(scrapy.Spider):
    name = 'huya_novideo'
    # allowed_domains = ['www.huya.com']
    start_urls = ['https://www.huya.com/g/lol']
    # le = LinkExtractor(restrict_xpaths='')

    def start_requests(self):
        print('* Processing the start URL')
        # SeleniumRequest marks the request for the Selenium downloader middleware
        yield SeleniumRequest(
            url=self.start_urls[0],
            callback=self.parse,
        )

    def parse(self, response):
        now = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')
        print(now + ' parsing ' + str(response.url) + ' ...')
        live_infos = response.xpath('//li[@class="game-live-item"]')
        for info in live_infos:
            huya = HuyaItem()
            huya['liver'] = info.xpath('.//span[@class="txt"]//i[@class="nick"]/text()').extract_first()
            huya['title'] = info.xpath('.//a[@class="title"]/text()').extract_first()
            huya['tag_right'] = str(info.xpath('string(.//*[@class="tag-right"])').extract_first()).strip()
            huya['tag_left'] = str(info.xpath('.//*[@class="tag-leftTopImg"]/@src').extract_first()).strip()
            huya['popularity'] = info.xpath('.//i[@class="js-num"]//text()').extract_first()
            huya['href'] = info.xpath('.//a[contains(@class,"video-info")]/@href').extract_first()
            huya['tag_recommend'] = info.xpath('.//em[@class="tag tag-recommend"]/text()').extract_first()
            # imgs = info.xpath('.//img[@class="pic"]/@src').extract_first()
            # if 'https' not in imgs:
            #     huya['imgs'] = 'https:' + str(imgs)
            # else:
            #     huya['imgs'] = imgs
            yield huya

        # The data-pages attribute is used as the total page count;
        # fall back to 1 when it is missing so that int() does not fail.
        data_page = response.xpath('//div[@id="js-list-page"]/@data-pages').extract_first()
        for page in range(2, int(data_page or 1) + 1):
            yield SeleniumRequest(url='https://www.huya.com/cache.php?m=LiveList&do=getLiveListByPage&gameId=1&tagAll=0&callback=getLiveListJsonpCallback&page=' + str(page), callback=self.parse)
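The pagination step at the end of `parse` concatenates the query string by hand. A minimal sketch of the same URL construction with `urllib.parse.urlencode` follows; `build_page_urls` and `BASE_URL` are hypothetical names introduced here, and the query parameters are copied from the URL used in the spider. The helper also returns an empty list when the page count is missing or not numeric.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.huya.com/cache.php"


def build_page_urls(data_pages):
    """Build the URLs for pages 2..N from the page count scraped from the page."""
    try:
        total = int(data_pages)
    except (TypeError, ValueError):
        # Page count missing or not a number: nothing to paginate
        return []
    urls = []
    for page in range(2, total + 1):
        query = urlencode({
            "m": "LiveList",
            "do": "getLiveListByPage",
            "gameId": 1,
            "tagAll": 0,
            "callback": "getLiveListJsonpCallback",
            "page": page,
        })
        urls.append(f"{BASE_URL}?{query}")
    return urls


if __name__ == "__main__":
    for url in build_page_urls("3"):
        print(url)
```

Keeping the parameters in a dict makes it easier to change one of them, for example `gameId`, without editing a long string literal.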

items.py

import scrapy


class HuyaItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    liver = scrapy.Field()
    title = scrapy.Field()
    tag_right = scrapy.Field()
    tag_left = scrapy.Field()
    popularity = scrapy.Field()
    href = scrapy.Field()
    tag_recommend = scrapy.Field()

middlewares.py

# Define here the models for your spider middleware
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

import time

from scrapy import signals
from scrapy.http.response.html import HtmlResponse

# useful for handling different item types with a single interface
from itemadapter import is_item, ItemAdapter

from selenium import webdriver
from selenium.webdriver import Chrome
from selenium.webdriver.common.action_chains import ActionChains
from selenium.webdriver.common.by import By

from huya.selenium_request import SeleniumRequest


class HuyaSpiderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.
        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.
        # Must return an iterable of Request, or item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.
        # Should return either None or an iterable of Request or item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn't have a response associated.
        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class HuyaDownloaderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        # Also close the browser when the spider finishes
        crawler.signals.connect(s.spider_closed, signal=signals.spider_closed)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.
        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        if 'cache' in request.url:
            # Pagination request: do not load the URL; click "next page" in
            # the already open browser and return the updated page source.
            next_page = self.web.find_element(By.XPATH, '//div[@id="js-list-page"]/div//a[@class="laypage_next"]')
            action = ActionChains(self.web)
            action.move_to_element(next_page)
            action.click()
            action.perform()
            time.sleep(3)
            return HtmlResponse(url=request.url, status=200, headers=None, body=self.web.page_source, request=request, encoding="utf-8")
        elif isinstance(request, SeleniumRequest):
            # SeleniumRequest: load the page in the browser
            self.web.get(request.url)
            print('Fetched the page with Selenium: ' + str(request.url))
            time.sleep(3)
            return HtmlResponse(url=request.url, status=200, headers=None, body=self.web.page_source, request=request, encoding="utf-8")
        else:
            return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.
        # Must either;
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.
        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)
        option = webdriver.ChromeOptions()
        option.add_experimental_option("excludeSwitches", ['enable-automation', 'enable-logging'])
        self.web = Chrome(options=option)
        print('Selenium browser started')

    def spider_closed(self, spider):
        # Quit the browser so that no Chrome process is left running
        self.web.quit()
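The middleware pauses with `time.sleep(3)` after every page load and click, which either wastes time or is too short on a slow connection. Selenium ships `WebDriverWait` for this purpose; the sketch below shows only the underlying polling idea with the standard library, so it runs without a browser. `wait_until` is a hypothetical helper name, not part of Selenium or of the article's code.

```python
import time


def wait_until(predicate, timeout=10.0, interval=0.5):
    """Call predicate() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds
    have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = predicate()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)


if __name__ == "__main__":
    start = time.monotonic()
    # The condition becomes true after roughly 0.2 seconds
    wait_until(lambda: time.monotonic() - start > 0.2, timeout=2, interval=0.05)
    print("condition met")
```

In the middleware, the predicate could be something like `lambda: self.web.find_elements(By.XPATH, '//li[@class="game-live-item"]')`, which returns an empty (falsy) list until the live-room entries have rendered.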

pipelines.py

from itemadapter import ItemAdapter
from scrapy.pipelines.images import ImagesPipeline
import scrapy
import datetime


class HuyaPipeline:
    def open_spider(self, spider):
        # One CSV file per run, named with the start timestamp
        self.f = open('huya' + str(datetime.datetime.now().strftime('%Y%m%d %H%M%S')) + '.csv', mode='w', encoding='utf-8-sig')
        # self.f.write(f"'liver', 'title', 'tag_right', 'tag_left', 'popularity', 'href', 'tag_recommend'")
        self.f.write('liver, title, tag_right, tag_left, popularity, href, tag_recommend\n')

    def process_item(self, item, spider):
        self.f.write(f"{item['liver']}, {item['title']}, {item['tag_right']},{item['tag_left']},{item['popularity']},{item['href']},{item['tag_recommend']}\n")
        return item

    def close_spider(self, spider):
        self.f.close()


class FigurePipeline(ImagesPipeline):
    # Disabled in settings.py. It relies on an 'imgs' field, which the
    # spider and HuyaItem currently leave out.
    def get_media_requests(self, item, info):
        return scrapy.Request(item['imgs'])

    def file_path(self, item, request, response=None, info=None):
        file_name = str(item['liver']) + str(datetime.datetime.now().strftime('%Y%m%d %H%M%S'))
        return f"img/{file_name}"

    def item_completed(self, results, item, info):
        print(results)
        # Return the item so that later pipelines still receive it
        return item

selenium_request.py

from scrapy import Request


class SeleniumRequest(Request):
    # Marker subclass: the downloader middleware checks for this type
    pass

settings.py

# Scrapy settings for huya project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
# https://docs.scrapy.org/en/latest/topics/settings.html
# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'huya'

SPIDER_MODULES = ['huya.spiders']
NEWSPIDER_MODULE = 'huya.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36'

# Obey robots.txt rules
# ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
# CONCURRENT_REQUESTS_PER_DOMAIN = 16
# CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
# COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
# TELNETCONSOLE_ENABLED = False

# Override the default request headers:
# DEFAULT_REQUEST_HEADERS = {
#     'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#     'Accept-Language': 'en',
# }

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
# SPIDER_MIDDLEWARES = {
#     'huya.middlewares.HuyaSpiderMiddleware': 543,
# }

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'huya.middlewares.HuyaDownloaderMiddleware': 543,
}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
# EXTENSIONS = {
#     'scrapy.extensions.telnet.TelnetConsole': None,
# }

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'huya.pipelines.HuyaPipeline': 300,
    # 'huya.pipelines.FigurePipeline': 301,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
# HTTPCACHE_ENABLED = True
# HTTPCACHE_EXPIRATION_SECS = 0
# HTTPCACHE_DIR = 'httpcache'
# HTTPCACHE_IGNORE_HTTP_CODES = []
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

IMAGES_STORE = './imgs_huya'

LOG_LEVEL = 'ERROR'
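The HuyaPipeline enabled in ITEM_PIPELINES builds each CSV row by joining the fields with commas, so a stream title that itself contains a comma would shift the columns. One possible alternative, sketched here with the standard `csv` module, lets the writer handle quoting. `rows_to_csv` and `FIELDS` are hypothetical names, and the sample data in the demo is invented for illustration.

```python
import csv
import io

FIELDS = ["liver", "title", "tag_right", "tag_left",
          "popularity", "href", "tag_recommend"]


def rows_to_csv(items):
    """Serialize dict-like items to CSV text, quoting fields when needed."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for item in items:
        # Missing fields are written as empty cells
        writer.writerow({name: item.get(name, "") for name in FIELDS})
    return buffer.getvalue()


if __name__ == "__main__":
    sample = [{"liver": "streamer_a", "title": "ranked, solo queue",
               "popularity": "12345"}]
    print(rows_to_csv(sample))
```

In a real pipeline the same `csv.DictWriter` could wrap the file opened in `open_spider`; the `csv` documentation recommends opening that file with `newline=''`.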

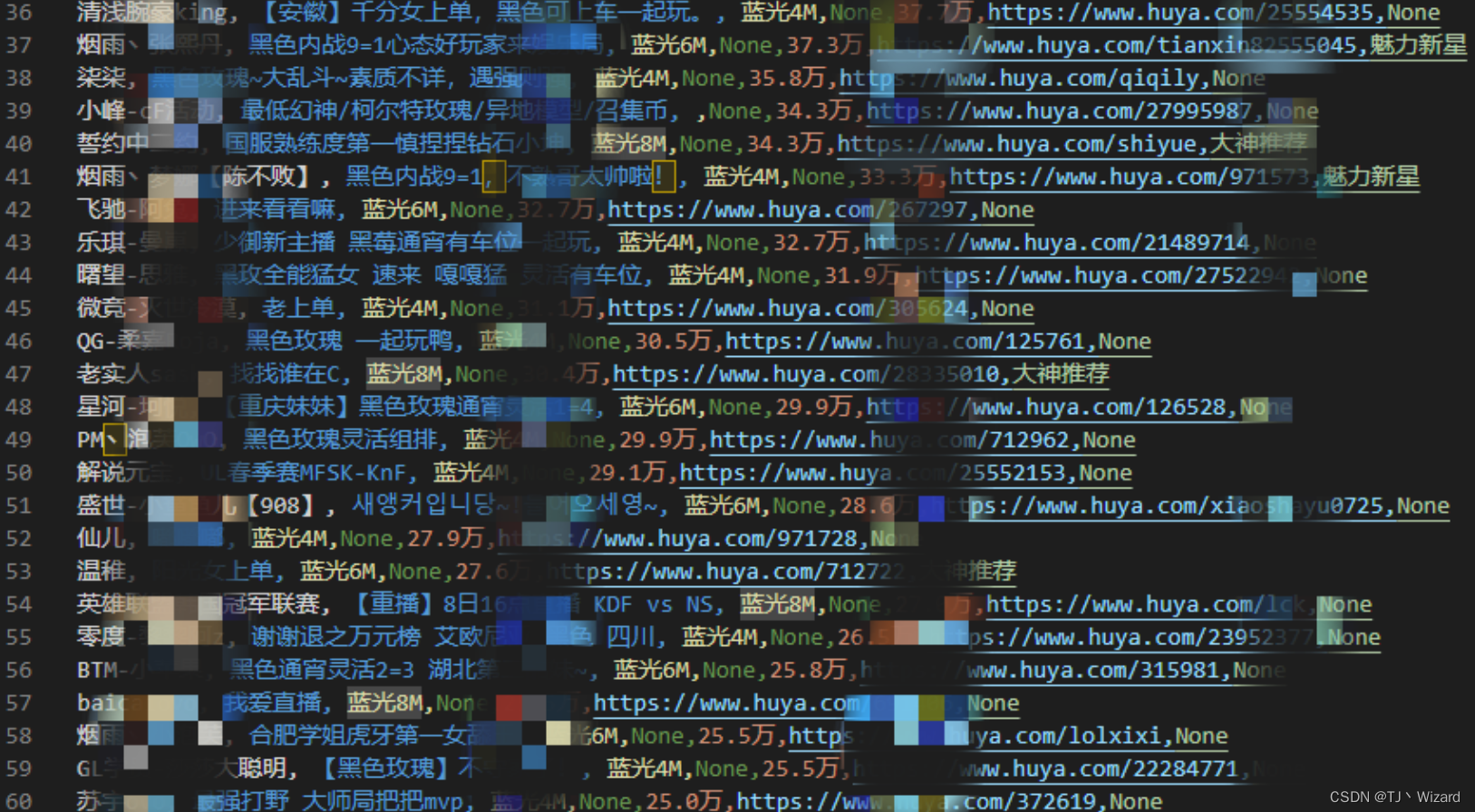

二、结果图示

考虑信息隐私问题,码住部分信息~

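Summary

This article gives only a brief introduction to using Scrapy with Selenium; Scrapy offers many more customization options that remain to be explored.

Appendix: Scrapy documentation in Chinese: Scrapy 2.5.0 Chinese documentation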
