
http://blog.csdn.net/gh_home/article/details/52143102

Encoding and decoding with MediaCodec on Android: a summary

Copyright notice: this is an original article by the author and may not be reproduced without the author's permission.


Introduction

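This article shows how to use the MediaCodec class on Android to hardware-decode a video file, and how to hardware-encode image data stored as byte[] into a video file.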

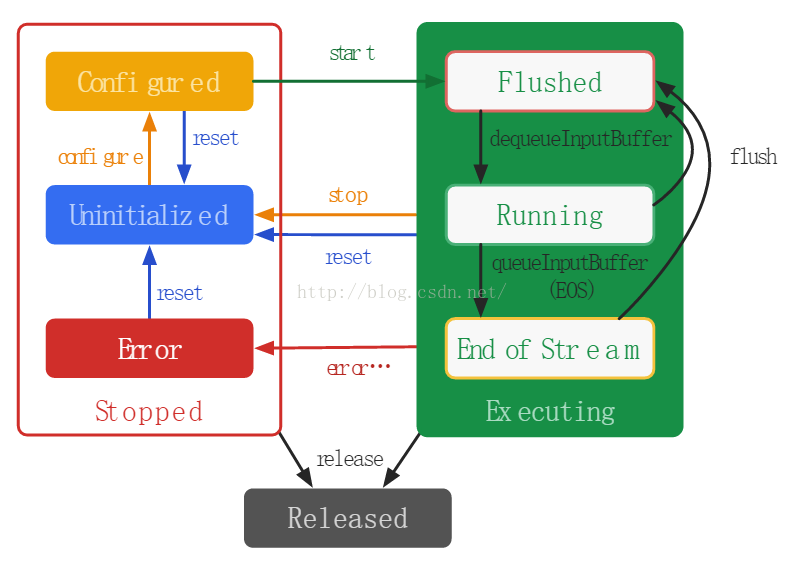

1. How MediaCodec encodes and decodes

Reference: https://developer.Android.com/reference/android/media/MediaCodec.html

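The workflow goes like this (taking encoding as an example). First, initialize the hardware encoder and configure the format to encode: the codec type, the width and height of the video, the bit rate, the frame rate, the key-frame interval, and so on. This step is called configure. Next, start the encoder; from then on it is ready to accept data. Then comes the running phase, in which two buffer queues are maintained, the input buffers and the output buffers. The application repeatedly dequeues an input buffer (dequeueInputBuffer), fills it with the image data to be encoded, and queues it back (queueInputBuffer). The hardware encoder processes the data asynchronously; when it is done, it puts the result into an output buffer and reports that output is available. The application then dequeues an output buffer (dequeueOutputBuffer), takes the data out, and releases the buffer (releaseOutputBuffer). The stream ends when the end-of-stream flag (BUFFER_FLAG_END_OF_STREAM) is set on a queued buffer. Once encoding is finished, call stop() to stop the encoder and then release() to free it completely, which ends the whole process.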
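To make the call order above concrete, here is a toy model in plain Java. It is not the Android API: `CodecLifecycle` and its methods are hypothetical names that only mirror the sequence described above (configure, start, exchange buffers until an end-of-stream buffer is queued, stop, release), so the order can be checked without a device. It simplifies the real state machine, which splits the executing phase into Flushed, Running and End-of-Stream sub-states.

```java
/**
 * Toy model of the MediaCodec call order (hypothetical class, not the Android API).
 * Each method checks that it is called in the state the real codec would require.
 */
final class CodecLifecycle {
    enum State { UNINITIALIZED, CONFIGURED, RUNNING, END_OF_STREAM, RELEASED }

    private State state = State.UNINITIALIZED;

    State state() { return state; }

    // configure(): MIME type, size, bit rate, frame rate, I-frame interval...
    void configure() { require(State.UNINITIALIZED, "configure"); state = State.CONFIGURED; }

    // start(): the codec is now ready to accept input buffers.
    void start() { require(State.CONFIGURED, "start"); state = State.RUNNING; }

    // queueInputBuffer(): a buffer flagged end-of-stream closes the input side.
    void queueInput(boolean endOfStream) {
        require(State.RUNNING, "queueInput");
        if (endOfStream) {
            state = State.END_OF_STREAM;
        }
    }

    // stop(): allowed while running or after EOS; the codec returns to UNINITIALIZED.
    void stop() {
        if (state != State.RUNNING && state != State.END_OF_STREAM) {
            throw new IllegalStateException("stop() called in state " + state);
        }
        state = State.UNINITIALIZED;
    }

    // release(): frees the codec for good; allowed from any state.
    void release() { state = State.RELEASED; }

    private void require(State expected, String call) {
        if (state != expected) {
            throw new IllegalStateException(call + "() called in state " + state);
        }
    }
}
```

Once end-of-stream has been queued, the model rejects further input, just as the real codec only keeps producing output at that point.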

2. Video decoding example

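The code is based on the article "Android: MediaCodec视频文件硬件解码" (hardware decoding of video files with MediaCodec on Android).

All of the code below can be downloaded here.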

```java
package com.example.guoheng_iri.helloworld;

import android.graphics.ImageFormat;
import android.graphics.Rect;
import android.graphics.YuvImage;
import android.media.Image;
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaExtractor;
import android.media.MediaFormat;
import android.util.Log;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;

// Needs API 21+ (MediaCodec.getInputBuffer / getOutputImage, COLOR_FormatYUV420Flexible).
public class VideoDecode {
    private static final String TAG = "VideoToFrames";
    private static final boolean VERBOSE = true;
    private static final long DEFAULT_TIMEOUT_US = 10000;

    private static final int COLOR_FormatI420 = 1;
    private static final int COLOR_FormatNV21 = 2;

    public static final int FILE_TypeI420 = 1;
    public static final int FILE_TypeNV21 = 2;
    public static final int FILE_TypeJPEG = 3;

    // Flexible YUV420: getOutputImage() then returns an ImageFormat.YUV_420_888 Image.
    private final int decodeColorFormat = MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible;

    private int outputImageFileType = -1;
    private String OUTPUT_DIR;

    public int ImageWidth = 0;
    public int ImageHeight = 0;

    MediaExtractor extractor = null;
    MediaCodec decoder = null;
    MediaFormat mediaFormat;

    public void setSaveFrames(String dir, int fileType) throws IOException {
        if (fileType != FILE_TypeI420 && fileType != FILE_TypeNV21 && fileType != FILE_TypeJPEG) {
            throw new IllegalArgumentException("only support FILE_TypeI420 and FILE_TypeNV21 and FILE_TypeJPEG");
        }
        outputImageFileType = fileType;
        File theDir = new File(dir);
        if (!theDir.exists()) {
            theDir.mkdirs();
        } else if (!theDir.isDirectory()) {
            throw new IOException("Not a directory");
        }
        OUTPUT_DIR = theDir.getAbsolutePath() + "/";
    }

    public void VideoDecodePrepare(String videoFilePath) {
        extractor = null;
        decoder = null;
        try {
            File videoFile = new File(videoFilePath);
            extractor = new MediaExtractor();
            extractor.setDataSource(videoFile.toString());
            int trackIndex = selectTrack(extractor);
            if (trackIndex < 0) {
                throw new RuntimeException("No video track found in " + videoFilePath);
            }
            extractor.selectTrack(trackIndex);
            mediaFormat = extractor.getTrackFormat(trackIndex);
            String mime = mediaFormat.getString(MediaFormat.KEY_MIME);
            decoder = MediaCodec.createDecoderByType(mime);
            MediaCodecInfo.CodecCapabilities caps = decoder.getCodecInfo().getCapabilitiesForType(mime);
            showSupportedColorFormat(caps);
            if (isColorFormatSupported(decodeColorFormat, caps)) {
                mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, decodeColorFormat);
                Log.i(TAG, "set decode color format to type " + decodeColorFormat);
            } else {
                Log.i(TAG, "unable to set decode color format, color format type " + decodeColorFormat + " not supported");
            }
            decoder.configure(mediaFormat, null, null, 0);   // no Surface: frames are read back as Images
            decoder.start();
        } catch (IOException ioe) {
            throw new RuntimeException("failed to init decoder", ioe);
        }
    }

    public void close() {
        if (decoder != null) {
            decoder.stop();
            decoder.release();
            decoder = null;
        }
        if (extractor != null) {
            extractor.release();
            extractor = null;
        }
    }

    public void excuate() {
        try {
            decodeFramesToImage(decoder, extractor, mediaFormat);
        } finally {
            // release the decoder and the extractor
            close();
        }
    }

    private void showSupportedColorFormat(MediaCodecInfo.CodecCapabilities caps) {
        System.out.print("supported color format: ");
        for (int c : caps.colorFormats) {
            System.out.print(c + "\t");
        }
        System.out.println();
    }

    private boolean isColorFormatSupported(int colorFormat, MediaCodecInfo.CodecCapabilities caps) {
        for (int c : caps.colorFormats) {
            if (c == colorFormat) {
                return true;
            }
        }
        return false;
    }

    public void decodeFramesToImage(MediaCodec decoder, MediaExtractor extractor, MediaFormat mediaFormat) {
        MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
        boolean sawInputEOS = false;
        boolean sawOutputEOS = false;
        final int width = mediaFormat.getInteger(MediaFormat.KEY_WIDTH);
        final int height = mediaFormat.getInteger(MediaFormat.KEY_HEIGHT);
        ImageWidth = width;
        ImageHeight = height;
        int outputFrameCount = 0;
        while (!sawOutputEOS) {
            if (!sawInputEOS) {
                int inputBufferId = decoder.dequeueInputBuffer(DEFAULT_TIMEOUT_US);
                if (inputBufferId >= 0) {
                    ByteBuffer inputBuffer = decoder.getInputBuffer(inputBufferId);
                    // Read the next compressed sample into inputBuffer; sampleSize is its size in bytes.
                    int sampleSize = extractor.readSampleData(inputBuffer, 0);
                    if (sampleSize < 0) {
                        // No more samples: queue an empty buffer flagged end-of-stream.
                        decoder.queueInputBuffer(inputBufferId, 0, 0, 0L, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                        sawInputEOS = true;
                    } else {
                        long presentationTimeUs = extractor.getSampleTime();
                        decoder.queueInputBuffer(inputBufferId, 0, sampleSize, presentationTimeUs, 0);
                        extractor.advance();   // move on to the next sample in the file
                    }
                }
            }
            int outputBufferId = decoder.dequeueOutputBuffer(info, DEFAULT_TIMEOUT_US);
            if (outputBufferId >= 0) {
                if ((info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                    sawOutputEOS = true;
                }
                if (info.size != 0) {
                    outputFrameCount++;
                    Image image = decoder.getOutputImage(outputBufferId);
                    System.out.println("image format: " + image.getFormat());
                    if (outputImageFileType != -1) {
                        String fileName;
                        switch (outputImageFileType) {
                            case FILE_TypeI420:
                                fileName = OUTPUT_DIR + String.format("frame_%05d_I420_%dx%d.yuv", outputFrameCount, width, height);
                                dumpFile(fileName, getDataFromImage(image, COLOR_FormatI420));
                                break;
                            case FILE_TypeNV21:
                                fileName = OUTPUT_DIR + String.format("frame_%05d_NV21_%dx%d.yuv", outputFrameCount, width, height);
                                dumpFile(fileName, toNV21(getDataFromImage(image, COLOR_FormatNV21), image.getCropRect()));
                                break;
                            case FILE_TypeJPEG:
                                fileName = OUTPUT_DIR + String.format("frame_%05d.jpg", outputFrameCount);
                                compressToJpeg(fileName, image);
                                break;
                        }
                    }
                    image.close();
                }
                // Always hand the buffer back, including the empty EOS buffer.
                // There is no Surface, so there is nothing to render.
                decoder.releaseOutputBuffer(outputBufferId, false);
            }
        }
    }

    private static int selectTrack(MediaExtractor extractor) {
        int numTracks = extractor.getTrackCount();
        for (int i = 0; i < numTracks; i++) {
            MediaFormat format = extractor.getTrackFormat(i);
            String mime = format.getString(MediaFormat.KEY_MIME);
            if (mime.startsWith("video/")) {
                if (VERBOSE) {
                    Log.d(TAG, "Extractor selected track " + i + " (" + mime + "): " + format);
                }
                return i;
            }
        }
        return -1;
    }

    private static boolean isImageFormatSupported(Image image) {
        int format = image.getFormat();
        switch (format) {
            case ImageFormat.YUV_420_888:
            case ImageFormat.NV21:
            case ImageFormat.YV12:
                return true;
        }
        return false;
    }

    // Returns the raw Y (luma) plane, including any row padding.
    public static byte[] getGrayFromData(Image image, int colorFormat) {
        if (colorFormat != COLOR_FormatI420 && colorFormat != COLOR_FormatNV21) {
            throw new IllegalArgumentException("only support COLOR_FormatI420 and COLOR_FormatNV21");
        }
        if (!isImageFormatSupported(image)) {
            throw new RuntimeException("can't convert Image to byte array, format " + image.getFormat());
        }
        Image.Plane[] planes = image.getPlanes();
        int i = 0;
        ByteBuffer buffer = planes[i].getBuffer();
        byte[] data = new byte[buffer.remaining()];
        buffer.get(data, 0, data.length);
        if (VERBOSE) Log.v(TAG, "Finished reading data from plane " + i);
        return data;
    }

    public static byte[] getDataFromImage(Image image, int colorFormat) {
        if (colorFormat != COLOR_FormatI420 && colorFormat != COLOR_FormatNV21) {
            throw new IllegalArgumentException("only support COLOR_FormatI420 and COLOR_FormatNV21");
        }
        if (!isImageFormatSupported(image)) {
            throw new RuntimeException("can't convert Image to byte array, format " + image.getFormat());
        }
        Rect crop = image.getCropRect();
        int format = image.getFormat();
        int width = crop.width();
        int height = crop.height();
        Image.Plane[] planes = image.getPlanes();
        byte[] data = new byte[width * height * ImageFormat.getBitsPerPixel(format) / 8];
        byte[] rowData = new byte[planes[0].getRowStride()];
        int channelOffset = 0;   // where the next sample of the current plane goes in data
        int outputStride = 1;    // spacing of samples in data (2 = interleaved chroma)
        for (int i = 0; i < planes.length; i++) {
            switch (i) {
                case 0: // Y
                    channelOffset = 0;
                    outputStride = 1;
                    break;
                case 1: // U (Cb)
                    if (colorFormat == COLOR_FormatI420) {
                        channelOffset = width * height;
                        outputStride = 1;
                    } else if (colorFormat == COLOR_FormatNV21) {
                        // As written, U lands on the even chroma bytes and V (case 2) on the odd
                        // ones, i.e. U,V order (NV12). The transcoding example relies on this,
                        // because its COLOR_FormatYUV420SemiPlanar encoder expects U first.
                        // Genuine NV21 (V first, as YuvImage needs) is produced by toNV21().
                        channelOffset = width * height;
                        outputStride = 2;
                    }
                    break;
                case 2: // V (Cr)
                    if (colorFormat == COLOR_FormatI420) {
                        channelOffset = (int) (width * height * 1.25);
                        outputStride = 1;
                    } else if (colorFormat == COLOR_FormatNV21) {
                        channelOffset = width * height + 1;
                        outputStride = 2;
                    }
                    break;
            }
            ByteBuffer buffer = planes[i].getBuffer();
            int rowStride = planes[i].getRowStride();      // bytes from one row to the next
            int pixelStride = planes[i].getPixelStride();  // bytes between neighbouring samples
            if (VERBOSE) {
                Log.v(TAG, "pixelStride " + pixelStride);
                Log.v(TAG, "rowStride " + rowStride);
                Log.v(TAG, "width " + width);
                Log.v(TAG, "height " + height);
                Log.v(TAG, "buffer size " + buffer.remaining());
            }
            int shift = (i == 0) ? 0 : 1;   // chroma planes are half size in both directions
            int w = width >> shift;
            int h = height >> shift;
            buffer.position(rowStride * (crop.top >> shift) + pixelStride * (crop.left >> shift));
            for (int row = 0; row < h; row++) {
                int length;
                if (pixelStride == 1 && outputStride == 1) {
                    // Contiguous samples: copy the whole row at once.
                    length = w;
                    buffer.get(data, channelOffset, length);
                    channelOffset += length;
                } else {
                    // Read the row, then pick every pixelStride-th byte.
                    length = (w - 1) * pixelStride + 1;
                    buffer.get(rowData, 0, length);
                    for (int col = 0; col < w; col++) {
                        data[channelOffset] = rowData[col * pixelStride];
                        channelOffset += outputStride;
                    }
                }
                if (row < h - 1) {
                    // Skip the row padding (not after the last row, where the buffer may end).
                    buffer.position(buffer.position() + rowStride - length);
                }
            }
            if (VERBOSE) Log.v(TAG, "Finished reading data from plane " + i);
        }
        return data;
    }

    // Swaps each chroma pair after the Y plane in place, turning the U,V-ordered output of
    // getDataFromImage(..., COLOR_FormatNV21) into genuine NV21 (V,U).
    private static byte[] toNV21(byte[] data, Rect crop) {
        int ySize = crop.width() * crop.height();
        for (int i = ySize; i + 1 < data.length; i += 2) {
            byte tmp = data[i];
            data[i] = data[i + 1];
            data[i + 1] = tmp;
        }
        return data;
    }

    private static void dumpFile(String fileName, byte[] data) {
        try (FileOutputStream outStream = new FileOutputStream(fileName)) {
            outStream.write(data);
        } catch (IOException ioe) {
            throw new RuntimeException("failed writing data to file " + fileName, ioe);
        }
    }

    private void compressToJpeg(String fileName, Image image) {
        Rect crop = image.getCropRect();
        byte[] nv21 = toNV21(getDataFromImage(image, COLOR_FormatNV21), crop);
        YuvImage yuvImage = new YuvImage(nv21, ImageFormat.NV21, crop.width(), crop.height(), null);
        try (FileOutputStream outStream = new FileOutputStream(fileName)) {
            // yuvImage already contains only the cropped pixels, so compress all of it.
            yuvImage.compressToJpeg(new Rect(0, 0, crop.width(), crop.height()), 100, outStream);
        } catch (IOException ioe) {
            throw new RuntimeException("failed writing JPEG " + fileName, ioe);
        }
    }
}

/***************************************
 // Video decoding usage example
 VideoDecode videodecoder = new VideoDecode();
 try {
     videodecoder.setSaveFrames(outputdir, 2);   // output directory and file format (2 = FILE_TypeNV21)
     videodecoder.VideoDecodePrepare(videodir);  // path of the video file to decode
     videodecoder.excuate();
 } catch (IOException el) {
     el.printStackTrace();
 }
 *************************************/
```
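The core of getDataFromImage is copying each Image.Plane into one packed array while honouring rowStride (bytes from one row to the next, which may include padding) and pixelStride (bytes between neighbouring samples, 2 when U and V are interleaved in memory). The plain-Java sketch below reproduces that loop on byte arrays instead of ByteBuffers, assuming the crop rectangle starts at (0, 0); `PlaneCopy`, `copyPlane` and `swapUV` are hypothetical names. Feeding it the offsets used in the decoder for COLOR_FormatNV21 (U at width*height, V at width*height + 1, output stride 2) shows that the interleaved chroma comes out as U,V, which is NV12 order. NV21, which YuvImage expects, stores V first, and `swapUV` converts one into the other. A mix-up between the two would be consistent with the symptom reported in the comments, where the JPEG content is right but the colors are wrong.

```java
/** Plain-Java sketch of the plane copy in getDataFromImage (hypothetical helper names). */
final class PlaneCopy {
    /**
     * Copies a w x h plane from src (rowStride bytes per row, pixelStride bytes per sample)
     * into dst starting at dstOffset, writing every outputStride-th byte.
     * Returns the offset where the next sample would be written.
     */
    static int copyPlane(byte[] src, int rowStride, int pixelStride, int w, int h,
                         byte[] dst, int dstOffset, int outputStride) {
        for (int row = 0; row < h; row++) {
            int rowStart = row * rowStride;   // row padding is skipped by jumping a full stride
            for (int col = 0; col < w; col++) {
                dst[dstOffset] = src[rowStart + col * pixelStride];
                dstOffset += outputStride;
            }
        }
        return dstOffset;
    }

    /** Swaps every chroma pair after the Y plane in place: U,V (NV12) <-> V,U (NV21). */
    static void swapUV(byte[] frame, int ySize) {
        for (int i = ySize; i + 1 < frame.length; i += 2) {
            byte tmp = frame[i];
            frame[i] = frame[i + 1];
            frame[i + 1] = tmp;
        }
    }
}
```

For a 4x2 frame, copying the Y plane with stride 1 and then U at offset 8 and V at offset 9 with stride 2 yields Y0..Y7, U0, V0, U1, V1; calling `swapUV(frame, 8)` turns that into Y0..Y7, V0, U0, V1, U1.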

3. Video encoding example

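This code feeds an automatically generated byte[] frameData into the video encoder to produce a video file. To encode your own images, pass in your own frameData instead.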
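If your own images are planar I420 (the full Y plane, then the whole U plane, then the whole V plane), they need to be repacked before being passed as frameData, because the encoder below is configured with COLOR_FormatYUV420SemiPlanar: the full-size Y plane followed by interleaved U,V pairs at half resolution (NV12). A minimal sketch, assuming even width and height and tightly packed planes (stride equal to width); `FrameRepack` and `i420ToNv12` are hypothetical helpers, not part of the original code.

```java
/** Repacks an I420 frame into NV12 (hypothetical helper). */
final class FrameRepack {
    /** Both layouts need width * height * 3 / 2 bytes for even width and height. */
    static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    static byte[] i420ToNv12(byte[] i420, int width, int height) {
        int ySize = width * height;
        int quarter = ySize / 4;                       // size of the U plane, and of the V plane
        if (i420.length < frameSize(width, height)) {
            throw new IllegalArgumentException("I420 buffer too small");
        }
        byte[] nv12 = new byte[frameSize(width, height)];
        System.arraycopy(i420, 0, nv12, 0, ySize);     // the Y plane is identical
        for (int i = 0; i < quarter; i++) {
            nv12[ySize + 2 * i] = i420[ySize + i];               // U
            nv12[ySize + 2 * i + 1] = i420[ySize + quarter + i]; // V
        }
        return nv12;
    }
}
```

At 1920x1080 a frame in either layout is 3,110,400 bytes, which is what the encoder's input buffers must be able to hold.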

```java
package com.example.guoheng_iri.helloworld;

import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.media.MediaMuxer;
import android.os.Environment;
import android.util.Log;

import java.io.File;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.util.Arrays;

// Needs API 21+ for MediaCodec.getInputBuffer (MediaMuxer itself needs API 18+).
public class VideoEncode {
    private static final int TEST_Y = 120;                  // YUV values for colored rect
    private static final int TEST_U = 160;
    private static final int TEST_V = 200;
    public int mWidth = 1920;
    public int mHeight = 1080;
    private static final int BIT_RATE = 1920 * 1080 * 30;    // 62,208,000 bit/s, about 62 Mbps
    private static final int FRAME_RATE = 30;               // 30fps
    private static final int IFRAME_INTERVAL = 10;          // 10 seconds between I-frames
    public static final int NUMFRAMES = 300;
    private static final String TAG = "EncodeDecodeTest";
    private static final boolean VERBOSE = true;            // lots of logging
    private static final String MIME_TYPE = "video/avc";    // H.264 Advanced Video Coding
    private MediaCodec.BufferInfo mBufferInfo;
    public MediaCodec mediaCodec;
    public MediaMuxer mMuxer;
    public int mTrackIndex;
    public boolean mMuxerStarted;
    // NV12: full-size Y plane followed by interleaved U,V pairs. Not every encoder supports it;
    // check CodecCapabilities.colorFormats on the target device.
    private static final int encoderColorFormat = MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar;

    // TODO: the encoding MediaFormat should be passed in here instead of being hard-coded.
    public void VideoEncodePrepare() {
        String outputPath = new File(Environment.getExternalStorageDirectory(),
                "mytest." + mWidth + "x" + mHeight + ".mp4").toString();
        mBufferInfo = new MediaCodec.BufferInfo();
        MediaFormat format = MediaFormat.createVideoFormat(MIME_TYPE, mWidth, mHeight);
        // Set some properties. Failing to specify some of these can cause the MediaCodec
        // configure() call to throw an unhelpful exception.
        format.setInteger(MediaFormat.KEY_COLOR_FORMAT, encoderColorFormat);
        format.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);
        format.setInteger(MediaFormat.KEY_FRAME_RATE, FRAME_RATE);
        format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, IFRAME_INTERVAL);
        mediaCodec = null;
        try {
            mediaCodec = MediaCodec.createEncoderByType(MIME_TYPE);
            mediaCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            mediaCodec.start();
            mMuxer = new MediaMuxer(outputPath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
        } catch (IOException ioe) {
            throw new RuntimeException("failed init encoder", ioe);
        }
        mTrackIndex = -1;
        mMuxerStarted = false;
    }

    public void close() {
        if (mediaCodec != null) {
            mediaCodec.stop();
            mediaCodec.release();
            mediaCodec = null;
        }
        if (mMuxer != null) {
            if (mMuxerStarted) {
                mMuxer.stop();   // stop() throws if the muxer was never started
            }
            mMuxer.release();
            mMuxer = null;
        }
    }

    // Queues one frame (or, when frameIdx == NUMFRAMES, the end-of-stream marker).
    // Encoded output still has to be drained, as doEncodeVideoFromBuffer does.
    public void offerEncoder(byte[] input, int frameIdx) {
        int inputBufferIndex = mediaCodec.dequeueInputBuffer(-1);   // -1: wait indefinitely
        if (inputBufferIndex < 0) {
            Log.i("AvcEncoder", "input buffer " + frameIdx + " not read");
            return;
        }
        long ptsUsec = computePresentationTime(frameIdx);
        if (frameIdx == NUMFRAMES) {
            mediaCodec.queueInputBuffer(inputBufferIndex, 0, 0, ptsUsec,
                    MediaCodec.BUFFER_FLAG_END_OF_STREAM);
            if (VERBOSE) Log.d(TAG, "sent input EOS (with zero-length frame)");
        } else {
            ByteBuffer inputBuffer = mediaCodec.getInputBuffer(inputBufferIndex);
            // Fail fast if the frame does not fit into the codec's input buffer.
            if (inputBuffer.capacity() < input.length) {
                throw new IllegalStateException("frame of " + input.length + " bytes exceeds input buffer");
            }
            inputBuffer.clear();
            inputBuffer.put(input);
            // Ordinary frame data: flags = 0 (BUFFER_FLAG_CODEC_CONFIG is only for codec-specific data).
            mediaCodec.queueInputBuffer(inputBufferIndex, 0, input.length, ptsUsec, 0);
        }
    }

    private void generateFrame(int frameIndex, int colorFormat, byte[] frameData) {
        final int HALF_WIDTH = mWidth / 2;
        boolean semiPlanar = isSemiPlanarYUV(colorFormat);
        // Set to zero. In YUV this is a dull green.
        Arrays.fill(frameData, (byte) 0);
        int startX, startY;
        frameIndex %= 8;
        //frameIndex = (frameIndex / 8) % 8;    // use this instead for debug -- easier to see
        if (frameIndex < 4) {
            startX = frameIndex * (mWidth / 4);
            startY = 0;
        } else {
            startX = (7 - frameIndex) * (mWidth / 4);
            startY = mHeight / 2;
        }
        for (int y = startY + (mHeight / 2) - 1; y >= startY; --y) {
            for (int x = startX + (mWidth / 4) - 1; x >= startX; --x) {
                if (semiPlanar) {
                    // full-size Y, followed by UV pairs at half resolution
                    // e.g. Nexus 4 OMX.qcom.video.encoder.avc COLOR_FormatYUV420SemiPlanar
                    // e.g. Galaxy Nexus OMX.TI.DUCATI1.VIDEO.H264E
                    //        OMX_TI_COLOR_FormatYUV420PackedSemiPlanar
                    frameData[y * mWidth + x] = (byte) TEST_Y;
                    if ((x & 0x01) == 0 && (y & 0x01) == 0) {
                        frameData[mWidth * mHeight + y * HALF_WIDTH + x] = (byte) TEST_U;
                        frameData[mWidth * mHeight + y * HALF_WIDTH + x + 1] = (byte) TEST_V;
                    }
                } else {
                    // full-size Y, followed by quarter-size U and quarter-size V
                    // e.g. Nexus 10 OMX.Exynos.AVC.Encoder COLOR_FormatYUV420Planar
                    // e.g. Nexus 7 OMX.Nvidia.h264.encoder COLOR_FormatYUV420Planar
                    frameData[y * mWidth + x] = (byte) TEST_Y;
                    if ((x & 0x01) == 0 && (y & 0x01) == 0) {
                        frameData[mWidth * mHeight + (y / 2) * HALF_WIDTH + (x / 2)] = (byte) TEST_U;
                        frameData[mWidth * mHeight + HALF_WIDTH * (mHeight / 2)
                                + (y / 2) * HALF_WIDTH + (x / 2)] = (byte) TEST_V;
                    }
                }
            }
        }
    }

    private static boolean isSemiPlanarYUV(int colorFormat) {
        switch (colorFormat) {
            case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Planar:
            case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedPlanar:
                return false;
            case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar:
            case MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedSemiPlanar:
            case MediaCodecInfo.CodecCapabilities.COLOR_TI_FormatYUV420PackedSemiPlanar:
                return true;
            default:
                throw new RuntimeException("unknown format " + colorFormat);
        }
    }

    public void excuate() {
        try {
            VideoEncodePrepare();
            doEncodeVideoFromBuffer(mediaCodec, encoderColorFormat);
        } finally {
            // release the encoder and the muxer
            close();
        }
    }

    // YV12 = Y, V, U planes; I420 = Y, U, V planes: swap the two chroma planes.
    public byte[] swapYV12toI420(byte[] yv12bytes, int width, int height) {
        byte[] i420bytes = new byte[yv12bytes.length];
        for (int i = 0; i < width * height; i++)
            i420bytes[i] = yv12bytes[i];
        for (int i = width * height; i < width * height + (width / 2 * height / 2); i++)
            i420bytes[i] = yv12bytes[i + (width / 2 * height / 2)];
        for (int i = width * height + (width / 2 * height / 2); i < width * height + 2 * (width / 2 * height / 2); i++)
            i420bytes[i] = yv12bytes[i - (width / 2 * height / 2)];
        return i420bytes;
    }

    // YV12 = Y plane, V plane, U plane; NV21 = Y plane, then interleaved V,U pairs.
    void swapYV12toNV21(byte[] yv12bytes, byte[] nv21bytes, int width, int height) {
        int nLenY = width * height;
        int nLenU = nLenY / 4;
        System.arraycopy(yv12bytes, 0, nv21bytes, 0, nLenY);
        for (int i = 0; i < nLenU; i++) {
            nv21bytes[nLenY + 2 * i] = yv12bytes[nLenY + i];             // V
            nv21bytes[nLenY + 2 * i + 1] = yv12bytes[nLenY + nLenU + i]; // U
        }
    }

    private void doEncodeVideoFromBuffer(MediaCodec encoder, int encoderColorFormat) {
        final int TIMEOUT_USEC = 10000;
        ByteBuffer[] encoderInputBuffers = encoder.getInputBuffers();
        ByteBuffer[] encoderOutputBuffers = encoder.getOutputBuffers();
        MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
        int generateIndex = 0;
        int encodedPackets = 0;
        long encodedSize = 0;
        // The size of a frame of video data, in the formats we handle, is stride*sliceHeight
        // for Y, and (stride/2)*(sliceHeight/2) for each of the Cb and Cr channels. Application
        // of algebra and assuming that stride==width and sliceHeight==height yields:
        byte[] frameData = new byte[mWidth * mHeight * 3 / 2];
        boolean inputDone = false;
        boolean outputDone = false;
        // Loop until the output side is done.
        while (!outputDone) {
            // If we're not done submitting frames, generate a new one and submit it, so the
            // encoder always has work to do. A short timeout (rather than none) keeps us from
            // spinning hard if opening the encoder device is slow.
            if (!inputDone) {
                int inputBufIndex = encoder.dequeueInputBuffer(TIMEOUT_USEC);
                if (inputBufIndex >= 0) {
                    long ptsUsec = computePresentationTime(generateIndex);
                    if (generateIndex == NUMFRAMES) {
                        // Send an empty frame with the end-of-stream flag set. If we set EOS
                        // on a frame with data, that frame data will be ignored, and the
                        // output will be short one frame.
                        encoder.queueInputBuffer(inputBufIndex, 0, 0, ptsUsec,
                                MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                        inputDone = true;
                        if (VERBOSE) Log.d(TAG, "sent input EOS (with zero-length frame)");
                    } else {
                        generateFrame(generateIndex, encoderColorFormat, frameData);
                        ByteBuffer inputBuf = encoderInputBuffers[inputBufIndex];
                        // the buffer should be sized to hold one full frame
                        inputBuf.clear();
                        inputBuf.put(frameData);
                        encoder.queueInputBuffer(inputBufIndex, 0, frameData.length, ptsUsec, 0);
                        if (VERBOSE) Log.d(TAG, "submitted frame " + generateIndex + " to enc");
                    }
                    generateIndex++;
                } else {
                    // either all in use, or we timed out during initial setup
                    if (VERBOSE) Log.d(TAG, "input buffer not available");
                }
            }
            // Check for output. If there is none yet, we either need to provide more input or
            // wait for the encoder; we can't tell which, so we just loop around.
            int encoderStatus = encoder.dequeueOutputBuffer(info, TIMEOUT_USEC);
            if (encoderStatus == MediaCodec.INFO_TRY_AGAIN_LATER) {
                // no output available yet
                if (VERBOSE) Log.d(TAG, "no output from encoder available");
            } else if (encoderStatus == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
                encoderOutputBuffers = encoder.getOutputBuffers();
                if (VERBOSE) Log.d(TAG, "encoder output buffers changed");
            } else if (encoderStatus == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                // Expected once, before the first encoded packet. The output format carries the
                // codec-specific data (SPS/PPS) the muxer needs, so start the muxer here.
                MediaFormat newFormat = encoder.getOutputFormat();
                if (mMuxerStarted) {
                    throw new RuntimeException("format changed twice");
                }
                Log.d(TAG, "encoder output format changed: " + newFormat);
                mTrackIndex = mMuxer.addTrack(newFormat);
                mMuxer.start();
                mMuxerStarted = true;
            } else if (encoderStatus < 0) {
                Log.d(TAG, "unexpected result from encoder.dequeueOutputBuffer: " + encoderStatus);
            } else { // encoderStatus >= 0
                ByteBuffer encodedData = encoderOutputBuffers[encoderStatus];
                if (encodedData == null) {
                    throw new RuntimeException("encoderOutputBuffer " + encoderStatus + " was null");
                }
                if ((info.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                    // Codec config already reached the muxer through the output format;
                    // don't write it as a sample.
                    info.size = 0;
                }
                // It's usually necessary to adjust the ByteBuffer values to match BufferInfo.
                if (info.size != 0) {
                    if (!mMuxerStarted) {
                        throw new RuntimeException("muxer hasn't started");
                    }
                    encodedData.position(info.offset);
                    encodedData.limit(info.offset + info.size);
                    mMuxer.writeSampleData(mTrackIndex, encodedData, info);
                    encodedSize += info.size;
                    encodedPackets++;
                }
                encoder.releaseOutputBuffer(encoderStatus, false);
                if ((info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                    outputDone = true;
                    if (VERBOSE) Log.d(TAG, "reached encoder output EOS");
                }
            }
        }
        if (VERBOSE) Log.d(TAG, "encoded " + encodedPackets + " packets at "
                + mWidth + "x" + mHeight + ", total " + encodedSize + " bytes");
    }

    public static long computePresentationTime(int frameIndex) {
        // long arithmetic: frameIndex * 1000000 would overflow an int for long videos
        return 132 + frameIndex * 1000000L / FRAME_RATE;
    }
}
```
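Two constants in VideoEncode are easy to misread, so here is their arithmetic as plain Java. computePresentationTime spaces frames by 1,000,000 / FRAME_RATE microseconds (33,333 at 30 fps) plus a fixed offset of 132; evaluated entirely in int, frameIndex * 1000000 gives wrong values from frame 2148 on (about 71.6 seconds at 30 fps), which is why long arithmetic matters for longer videos. BIT_RATE = 1920*1080*30 works out to 62,208,000 bit/s, about 62 Mbit/s rather than the 2 Mbps stated in the original comment; whether an encoder accepts such a rate may depend on the device. The class name `EncoderTiming` is hypothetical.

```java
/** Arithmetic behind VideoEncode's timing and bit-rate constants (hypothetical class). */
final class EncoderTiming {
    static final int FRAME_RATE = 30;

    /** Same formula as computePresentationTime, evaluated in long arithmetic. */
    static long presentationTimeUs(int frameIndex) {
        return 132 + frameIndex * 1_000_000L / FRAME_RATE;
    }

    /** All-int version: frameIndex * 1000000 wraps around once it exceeds Integer.MAX_VALUE. */
    static long presentationTimeUsIntOnly(int frameIndex) {
        return 132 + frameIndex * 1000000 / FRAME_RATE;
    }

    /** BIT_RATE in the original is simply width * height * fps, in bits per second. */
    static long bitRate(int width, int height, int fps) {
        return (long) width * height * fps;
    }
}
```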

4. Example: reading a video, hardware-decoding it, and hardware-encoding it back

```java
package com.example.guoheng_iri.helloworld;

import android.media.Image;
import android.media.MediaCodec;
import android.media.MediaFormat;
import android.util.Log;

import java.nio.ByteBuffer;
import java.util.LinkedList;
import java.util.Queue;

/**
 * Created by guoheng-iri on 2016/8/1.
 *
 * Decodes a video with VideoDecode, buffers up to BUFFER_SIZE raw frames, and re-encodes
 * them with VideoEncode. Needs API 21+ (getInputBuffer / getOutputBuffer / getOutputImage).
 */
public class VideoEncodeDecode {
    public int mWidth = -1;
    public int mHeight = -1;
    private static final int BUFFER_SIZE = 30;              // raw frames held between decode and encode
    private static final long DEFAULT_TIMEOUT_US = 20000;
    private static final String TAG = "EncodeDecodeTest";
    private static final boolean VERBOSE = true;            // lots of logging

    Queue<byte[]> ImageQueue;
    VideoEncode myencoder;
    VideoDecode mydecoder;

    void VideoCodecPrepare(String videoInputFilePath) {
        mydecoder = new VideoDecode();
        myencoder = new VideoEncode();
        mydecoder.VideoDecodePrepare(videoInputFilePath);
        // Encode at the source resolution instead of VideoEncode's 1920x1080 default.
        // Assumes the encoder accepts tightly packed frames (stride == width).
        mWidth = mydecoder.mediaFormat.getInteger(MediaFormat.KEY_WIDTH);
        mHeight = mydecoder.mediaFormat.getInteger(MediaFormat.KEY_HEIGHT);
        myencoder.mWidth = mWidth;
        myencoder.mHeight = mHeight;
        myencoder.VideoEncodePrepare();
        ImageQueue = new LinkedList<byte[]>();
    }

    void MyProcessing() {
        // do process: e.g. modify the frames waiting in ImageQueue before they are encoded
    }

    void VideoEncodeDecodeLoop() {
        MediaCodec decoder = mydecoder.decoder;
        MediaCodec encoder = myencoder.mediaCodec;
        MediaCodec.BufferInfo decodeinfo = new MediaCodec.BufferInfo();
        MediaCodec.BufferInfo encodeinfo = new MediaCodec.BufferInfo();
        boolean sawInputEOS = false;       // every compressed sample has been given to the decoder
        boolean sawOutputEOS = false;      // the decoder has emitted its last frame
        boolean encodeInputDone = false;   // EOS has been queued to the encoder
        boolean encoderDone = false;       // the encoder has emitted its last packet
        int generateIndex = 0;

        while (!encoderDone) {
            // Phase 1: decode until BUFFER_SIZE frames are queued or the decoder is drained.
            while (!sawOutputEOS && ImageQueue.size() < BUFFER_SIZE) {
                if (!sawInputEOS) {
                    int inputBufferId = decoder.dequeueInputBuffer(DEFAULT_TIMEOUT_US);
                    if (inputBufferId >= 0) {
                        ByteBuffer inputBuffer = decoder.getInputBuffer(inputBufferId);
                        // Read the next compressed sample into inputBuffer; sampleSize is its size in bytes.
                        int sampleSize = mydecoder.extractor.readSampleData(inputBuffer, 0);
                        if (sampleSize < 0) {
                            decoder.queueInputBuffer(inputBufferId, 0, 0, 0L, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                            sawInputEOS = true;
                        } else {
                            long presentationTimeUs = mydecoder.extractor.getSampleTime();
                            decoder.queueInputBuffer(inputBufferId, 0, sampleSize, presentationTimeUs, 0);
                            mydecoder.extractor.advance();   // move on to the next sample
                        }
                    }
                }
                int outputBufferId = decoder.dequeueOutputBuffer(decodeinfo, DEFAULT_TIMEOUT_US);
                if (outputBufferId >= 0) {
                    if ((decodeinfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                        sawOutputEOS = true;
                    }
                    if (decodeinfo.size != 0) {
                        Image image = decoder.getOutputImage(outputBufferId);
                        // FILE_TypeNV21 (2) has the same value as VideoDecode's private COLOR_FormatNV21.
                        // The chroma in the returned array is U,V interleaved, which is what the
                        // encoder's COLOR_FormatYUV420SemiPlanar input expects.
                        ImageQueue.offer(VideoDecode.getDataFromImage(image, VideoDecode.FILE_TypeNV21));
                        image.close();
                    }
                    decoder.releaseOutputBuffer(outputBufferId, false);
                }
            }

            //MyProcessing();

            // Phase 2: encode everything in the queue. Once the decoder is drained, also send
            // EOS to the encoder and keep draining until the encoder reports EOS.
            while (!encoderDone && (!ImageQueue.isEmpty() || sawOutputEOS)) {
                if (!encodeInputDone) {
                    int inputBufIndex = encoder.dequeueInputBuffer(DEFAULT_TIMEOUT_US);
                    if (inputBufIndex >= 0) {
                        long ptsUsec = VideoEncode.computePresentationTime(generateIndex);
                        byte[] frameData = ImageQueue.poll();
                        if (frameData == null) {
                            // Queue empty and decoder finished: send an empty buffer flagged EOS.
                            encoder.queueInputBuffer(inputBufIndex, 0, 0, ptsUsec,
                                    MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                            encodeInputDone = true;
                            if (VERBOSE) Log.d(TAG, "sent input EOS (with zero-length frame)");
                        } else {
                            ByteBuffer inputBuf = encoder.getInputBuffer(inputBufIndex);
                            // the buffer should be sized to hold one full frame
                            inputBuf.clear();
                            inputBuf.put(frameData);
                            encoder.queueInputBuffer(inputBufIndex, 0, frameData.length, ptsUsec, 0);
                            if (VERBOSE) Log.d(TAG, "submitted frame " + generateIndex + " to enc");
                            generateIndex++;
                        }
                    } else if (VERBOSE) {
                        // either all in use, or we timed out during initial setup
                        Log.d(TAG, "input buffer not available");
                    }
                }
                encoderDone = drainEncoderOnce(encodeinfo);
            }
        }
    }

    // Moves at most one encoded packet into the muxer; returns true once the encoder reports EOS.
    private boolean drainEncoderOnce(MediaCodec.BufferInfo info) {
        MediaCodec encoder = myencoder.mediaCodec;
        int encoderStatus = encoder.dequeueOutputBuffer(info, DEFAULT_TIMEOUT_US);
        if (encoderStatus == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
            // Expected once, before the first packet: start the muxer with the real output format.
            if (myencoder.mMuxerStarted) {
                throw new RuntimeException("format changed twice");
            }
            MediaFormat newFormat = encoder.getOutputFormat();
            Log.d(TAG, "encoder output format changed: " + newFormat);
            myencoder.mTrackIndex = myencoder.mMuxer.addTrack(newFormat);
            myencoder.mMuxer.start();
            myencoder.mMuxerStarted = true;
            return false;
        }
        if (encoderStatus < 0) {
            return false;   // INFO_TRY_AGAIN_LATER, or INFO_OUTPUT_BUFFERS_CHANGED (irrelevant with getOutputBuffer)
        }
        ByteBuffer encodedData = encoder.getOutputBuffer(encoderStatus);
        if ((info.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
            info.size = 0;  // SPS/PPS already reached the muxer via the output format
        }
        // It's usually necessary to adjust the ByteBuffer values to match BufferInfo.
        if (info.size != 0 && encodedData != null) {
            if (!myencoder.mMuxerStarted) {
                throw new RuntimeException("muxer hasn't started");
            }
            encodedData.position(info.offset);
            encodedData.limit(info.offset + info.size);
            myencoder.mMuxer.writeSampleData(myencoder.mTrackIndex, encodedData, info);
        }
        encoder.releaseOutputBuffer(encoderStatus, false);
        return (info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0;
    }

    public void close() {
        myencoder.close();
        mydecoder.close();
    }
}
```
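The decode/encode hand-off is easy to get wrong at the end of the stream: if the decoder finishes while fewer than BUFFER_SIZE frames are queued, those frames still have to be encoded before the encoder receives EOS, and a hard-coded total frame count breaks on any other input. The plain-Java model below strips out MediaCodec and keeps only that control flow, with frames represented as integers, so the termination logic can be checked in isolation. `TranscodeLoopModel` is a hypothetical name, and the model ignores timeouts and buffer availability.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Control-flow model of the decode -> queue -> encode loop (hypothetical, no MediaCodec). */
final class TranscodeLoopModel {
    /** "Decodes" frames 0..totalFrames-1 through a queue of bufferSize and returns what got "encoded". */
    static List<Integer> run(int totalFrames, int bufferSize) {
        Queue<Integer> queue = new ArrayDeque<>();
        List<Integer> encoded = new ArrayList<>();
        int nextDecoded = 0;
        boolean decoderDone = totalFrames == 0;
        boolean encoderEosSent = false;
        while (!encoderEosSent) {
            // Phase 1: decode until the queue is full or the decoder is drained.
            while (!decoderDone && queue.size() < bufferSize) {
                queue.offer(nextDecoded++);
                if (nextDecoded == totalFrames) {
                    decoderDone = true;
                }
            }
            // Phase 2: encode everything queued; EOS only once the decoder is drained
            // and the queue is empty, so a partly filled last batch is not lost.
            while (!queue.isEmpty()) {
                encoded.add(queue.poll());
            }
            if (decoderDone) {
                encoderEosSent = true;
            }
        }
        return encoded;
    }
}
```

With 5 frames and a 30-frame buffer, all 5 frames are encoded; a loop that only starts encoding once the queue is full would never encode them.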

useful links:


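#2 一个长颈鹿, posted 2016-12-05 17:53

- Hi, I used VideoDecode, but the decoded JPGs have the wrong colors. What could be causing this?

Re: GH_HOME, posted 3 days ago at 15:39

- Reply to 一个长颈鹿: Hi, I think it's a YUV issue. The image content is right, which means the decoded image array is fine; wrong colors point to a color-space conversion problem, so I'd suggest checking the YUV conversion. On my Android phone, the YUV format is NV21.

#1 jirixi, posted 2016-10-20 16:40

- Hi, when I run the VideoEncode program from this article, I get the error java.lang.IllegalArgumentException: trackIndex is invalid. Does running VideoEncode depend on the platform version? Mine is Android 4.2.2.

Re: GH_HOME, posted 2016-10-21 11:21

- Reply to jirixi: Hi, it runs fine for me on Android 5.1. I wrote this part with reference to bigflake's code (his link is at the end of the article), so in theory it should work on any Android platform. It would also help if you could tell me which line of the code throws this exception.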