
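Spam Email Classification

- Introduction to naive Bayes:

Bayesian classification is the general name for a family of classification algorithms that are all based on Bayes' theorem. Naive Bayes is the simplest member of this family and one of the most commonly used.

The core idea of naive Bayes is to predict a class from feature probabilities: for a given sample to be classified, compute the probability of each class given that sample, and assign the sample to the class with the highest probability.

- Advantages and disadvantages of naive Bayes:

Advantages: it remains effective when little data is available, and it can handle multi-class problems.

Disadvantages: it is sensitive to how the input data is prepared.

- The naive Bayes algorithm: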

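The basic idea of the naive Bayes model is as follows: for a given item to be classified, $X = \{a_1, a_2, a_3, \cdots, a_n\}$, compute the probability of each class $y_i$ given that this item occurs; the item is assigned to the class for which $P(y_i \mid X)$ is largest.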
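
Written out, the rule above can be sketched as follows. This assumes the usual naive Bayes simplification that the features $a_j$ are conditionally independent given the class; that assumption is standard for the method but is not spelled out in the text above.

```latex
P(y_i \mid X) = \frac{P(X \mid y_i)\, P(y_i)}{P(X)}, \qquad
P(X \mid y_i) = \prod_{j=1}^{n} P(a_j \mid y_i)

\hat{y} = \arg\max_{y_i} \; P(y_i) \prod_{j=1}^{n} P(a_j \mid y_i)
```

The denominator $P(X)$ is the same for every class, so it can be dropped when only the most probable class is needed.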

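Here is a simple math problem to illustrate:

Box 1 contains 20 red balls and 20 white balls; box 2 contains 10 white balls and 30 red balls. A box is chosen at random and a ball drawn from it turns out to be red. What is the probability that this ball came from box 1?

P(A) = the probability of drawing a red ball. P(B) = the probability of choosing box 1.

P(A|B) = the probability of drawing a red ball given that box 1 was chosen.

P(B|A) = the probability of B given that A has occurred. Here: given that the ball is red, what is the probability that it came from box 1?

P(B|A) = P(A|B) * P(B) / P(A)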
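
As a quick check, the box problem can be worked through numerically. This is a minimal sketch that assumes "chosen at random" means each box is picked with probability 1/2; the variable names are illustrative only.

```python
from fractions import Fraction

# Assumption: each box is equally likely to be chosen.
p_box1 = Fraction(1, 2)  # P(B)
p_box2 = Fraction(1, 2)

# Probability of drawing a red ball from each box.
p_red_given_box1 = Fraction(20, 40)  # P(A|B): 20 red out of 40 balls
p_red_given_box2 = Fraction(30, 40)  # 30 red out of 40 balls

# P(A) via the law of total probability over the two boxes.
p_red = p_red_given_box1 * p_box1 + p_red_given_box2 * p_box2

# Bayes' theorem: P(B|A) = P(A|B) * P(B) / P(A)
p_box1_given_red = p_red_given_box1 * p_box1 / p_red

print(p_red)             # 5/8
print(p_box1_given_red)  # 2/5
```

Under that assumption, a red ball came from box 1 with probability 2/5, which is lower than 1/2 because box 2 holds a larger share of red balls.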

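P(B) is the prior probability. It is specified before any evidence is seen, often subjectively; in Bayesian usage, "the prior" generally refers to this term.

P(A) is the probability of the evidence. It is unimportant in many applications of Bayes' theorem (when only the class with the largest posterior is needed, its absolute value does not matter); when it is needed, it is usually computed with the law of total probability.

P(A|B) is a conditional probability, also called the likelihood; it is usually estimated from historical data.

P(B|A) is the posterior probability, which is usually the quantity we want to compute.

- Implementing spam classification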

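1. Collecting the dataset:

The emails were collected from the internet and saved in the email folder. This folder has two subfolders: right (non-spam emails) and wrong (spam emails). Each subfolder holds 25 emails, saved as x.txt (x from 1 to 25).

2. Selecting the training and test sets:

80% of the emails are selected at random as the training set; the remaining 20% form the test set.

- Code implementation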
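
As a minimal sketch of the random 80/20 split described in step 2: the helper name `split_train_test` and the fixed seed are assumptions made for illustration, not part of the author's code.

```python
import random


def split_train_test(filenames, train_ratio=0.8, seed=0):
    """Hypothetical helper: randomly split filenames into (train, test)."""
    rng = random.Random(seed)  # seeded only so the sketch is reproducible
    shuffled = list(filenames)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


# The article stores each class as 1.txt ... 25.txt.
names = ["{}.txt".format(i) for i in range(1, 26)]
train, test = split_train_test(names)
print(len(train), len(test))  # 20 5
```

Applying the same split to each class folder separately would keep the spam and non-spam proportions equal in both sets.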

import math
import os
import re
from collections import Counter


class Spamfilter:
    """A naive Bayesian spam filter"""

    def __init__(self, training_dir):
        """Inits Spamfilter with training data.

        :param training_dir: path of training directory with subdirectories
            '/ham' and '/spam'
        """
        print("Training filter with known ham ...")
        self.ham_table = dict(Counter(dir_tokens(training_dir + "ham/")))
        print("Training filter with known spam...")
        self.spam_table = dict(Counter(dir_tokens(training_dir + "spam/")))
        self.uniq_h_toks = len(self.ham_table)
        self.uniq_s_toks = len(self.spam_table)
        self.total_h_toks = sum(self.ham_table.values())
        self.total_s_toks = sum(self.spam_table.values())
        self.tok_arr = sorted(list(self.ham_table.keys()) + list(self.spam_table.keys()))
        self.freq_tab = self.create_frequency_table()
        self.file_count = 0
        self.count_spam = 0
        self.count_ham = 0
        self.spam_list = []
        self.ham_list = []

    def create_frequency_table(self):
        """Generates token frequency table from training emails.

        :return: dict{k,v}: spam/ham frequencies
            k = (str)token, v = {spam_freq: , ham_freq:, prob_spam:, prob_ham:}
        """
        freq_table = {}
        for tok in self.tok_arr:
            entry = {}
            s_freq = self.spam_table.get(tok, 0)
            entry["spam_freq"] = s_freq
            h_freq = self.ham_table.get(tok, 0)
            entry["ham_freq"] = h_freq
            # Smoothed estimate: adding 1/uniq to each count keeps the
            # probability non-zero for tokens unseen in one of the classes.
            s_prob = (s_freq + 1 / float(self.uniq_s_toks)) / (self.total_s_toks + 1)
            entry["prob_spam"] = s_prob
            h_prob = (h_freq + 1 / float(self.uniq_h_toks)) / (self.total_h_toks + 1)
            entry["prob_ham"] = h_prob
            freq_table[tok] = entry
        return freq_table

    def prob_spam(self, token):
        """Calculates the probability that 'token' is found in spam emails.

        :param token: (str)
        :return: (float) probability 'token' is spam based on training emails
        """
        val = self.freq_tab.get(token)
        if val is not None:
            return val["prob_spam"]
        # Token never seen in training: fall back to the smoothed minimum.
        return (1.0 / self.uniq_s_toks) / (self.total_s_toks + 1)

    def prob_ham(self, token):
        """Calculates the probability that 'token' is found in ham emails.

        :param token: (str)
        :return: (float) probability 'token' is ham based on training emails
        """
        val = self.freq_tab.get(token)
        if val is not None:
            return val["prob_ham"]
        return (1.0 / self.uniq_h_toks) / (self.total_h_toks + 1)

    def prob_msg_spam(self, filepath):
        """Calculates the spam score of a message.

        :param filepath: (str) path of email
        :return: (float) sum of log10 token probabilities under the spam
            model (a log score, not a probability; no class prior is included)
        """
        toks = file_tokens(filepath)
        sm = 0
        for tok in toks:
            sm += math.log10(self.prob_spam(tok))
        return sm

    def prob_msg_ham(self, filepath):
        """Calculates the ham score of a message.

        :param filepath: (str) path of email
        :return: (float) sum of log10 token probabilities under the ham
            model (a log score, not a probability; no class prior is included)
        """
        toks = file_tokens(filepath)
        sm = 0
        for tok in toks:
            sm += math.log10(self.prob_ham(tok))
        return sm

    def classify(self, filepath):
        """Classifies a file as spam or ham based on training data.

        :param filepath: path of email
        :return: (boolean) True->spam, False->ham
        """
        self.file_count += 1
        if self.prob_msg_spam(filepath) > self.prob_msg_ham(filepath):
            self.count_spam += 1
            self.spam_list.append(filepath)
            return True
        else:
            self.count_ham += 1
            self.ham_list.append(filepath)
            return False

    def classify_all(self, dir_path, known_type="spam"):
        """Classifies all emails in a testing directory and maintains count of errors.

        :param dir_path: path of testing directory
        :param known_type: str: the known type of testing directory
        """
        self.ham_list = []
        self.spam_list = []
        self.file_count = 0
        self.count_spam = 0
        self.count_ham = 0
        print("\nClassifying all emails found in directory: ./" + dir_path)
        try:
            for f in os.listdir(dir_path):
                self.classify(dir_path + f)
            if known_type == "spam":
                correct = self.count_spam / float(self.file_count)
            else:
                correct = self.count_ham / float(self.file_count)
            print("Total spam:{:8d}".format(self.count_spam))
            print("Total ham: {:8d}".format(self.count_ham))
            print("Correctly classified: {:6.2f}%".format(correct * 100))
        except FileNotFoundError as e:
            print("ERROR: classify_all() failed " + str(e))

    def clean_table(self, min_freq):
        """Removes entries from frequency table if they are deemed poor indicators,
        or if combined spam/ham frequency is below 'min_freq'.

        :param min_freq: if total token count below threshold, delete from table
        """
        rm_keys = []
        for k, v in self.freq_tab.items():
            if (
                v["spam_freq"] + v["ham_freq"] < min_freq
                or 0.45 < (v["prob_spam"] / (v["prob_spam"] + v["prob_ham"])) < 0.55
            ):
                rm_keys.append(k)
        for k in rm_keys:
            print("deleting " + str(k) + " from freq table in clean()")
            del self.freq_tab[k]

    def print_table_info(self):
        """Prints training info: unique tokens in ham and spam, plus the
        number of emails found in the hard-coded testing directories.
        """
        print("\n=======================================")
        print("TRAINING AND FREQUENCY TABLE INFO")
        print("=======================================")
        print("Unique tokens in spam messages:{:8d}".format(len(self.spam_table)))
        print("Unique tokens in ham messages: {:8d}".format(len(self.ham_table)))
        print("Unique tokens in ALL messages: {:8d}".format(len(self.freq_tab)))
        print("Num spam e-mails:{:22d}".format(len(os.listdir("emails/testing/spam/"))))
        print("Num ham e-mails: {:22d}".format(len(os.listdir("emails/testing/ham/"))))


def tokens(text, tok_size=3):
    """Returns a list of all substrings contained in 'text' of size 'tok_size'.

    :param text: (string) text to tokenize
    :param tok_size: length of substrings
    :return: (list) tokens of 'text'
    """
    return [text[i : i + tok_size] for i in range(len(text) - tok_size + 1)]


def clean_split(in_str):
    """Removes all non-alphanum chars and splits string at whitespace, downcase.

    :param in_str: (str) target string
    :return: (list) cleaned strings
    """
    return re.sub(r"[^\s\w]|_", "", in_str).lower().split()


def file_tokens(filepath):
    """Tokenizes all strings contained in 'filepath' after removing all
    non-alphanum chars and splitting strings at whitespace.

    :param filepath: path of target file
    :return: list of tokens
    """
    toks = []
    try:
        with open(filepath, encoding="utf8", errors="ignore") as fp:
            for line in fp:
                words = clean_split(line)
                toks.extend(words)
    except FileNotFoundError as e:
        print("Error:" + str(e))
    # Keep only tokens shorter than 10 characters.
    return [x for x in toks if len(x) < 10]


def dir_tokens(dir_path):
    """Tokenizes all files contained in 'dir_path'.

    :param dir_path: directory containing files to be tokenized
    :return: list of tokens
    """
    dir_toks = []
    try:
        filenames = os.listdir(dir_path)
        for f in filenames:
            dir_toks.extend(file_tokens(dir_path + f))
    except FileNotFoundError as e:
        print("Error:" + str(e))
    return dir_toks


if __name__ == "__main__":
    spamfilter = Spamfilter("emails/training/")
    spamfilter.print_table_info()
    spamfilter.classify_all("emails/testing/spam/", "spam")
    spamfilter.classify_all("emails/testing/ham/", "ham")
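
The listing scores a message by summing `math.log10` of the token probabilities rather than multiplying them. A likely reason is numerical: the product of many small probabilities underflows to zero in floating point. The sketch below illustrates this with made-up probabilities, which are hypothetical values and not taken from the article's data.

```python
import math

# Hypothetical per-token probabilities for a 100-token message.
probs = [1e-5] * 100

# Multiplying directly underflows to 0.0 in double precision.
product = 1.0
for p in probs:
    product *= p

# Summing log10 values keeps the score finite and comparable.
log_score = sum(math.log10(p) for p in probs)

print(product)    # 0.0
print(log_score)  # approximately -500
```

Because the logarithm is monotonic, comparing the two log scores selects the same class as comparing the two products would, without the underflow.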

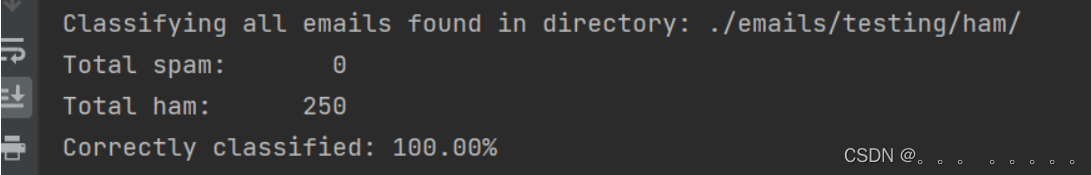

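Results:

Classification results for the spam emails:

Classification results for the normal emails:

These results show that the classifier's accuracy is very high.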
