This article summarizes how DPDK uses hugepages.

For background on how hugepages work, see http://blog.chinaunix.net/uid-28541347-id-5783934.html

DPDK version: 17.11.2

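What hugepages are for:

1. Fewer pages are needed to cover the same amount of memory, so there are fewer page table entries and fewer page faults.

2. Fewer TLB misses.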
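To make the first point concrete, here is a minimal sketch that counts how many pages are needed to cover a region at a given page size. The helper name `pages_needed` and the 4 GiB region used in the note below are illustrative assumptions, not DPDK code.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical helper (not part of DPDK): number of pages of size
 * page_sz needed to cover len bytes, rounding up.
 */
uint64_t
pages_needed(uint64_t len, uint64_t page_sz)
{
    assert(page_sz != 0);
    return len / page_sz + (len % page_sz != 0 ? 1 : 0);
}
```

For a 4 GiB region this gives 1048576 pages at 4K, 2048 pages at 2M, and 4 pages at 1G, so the number of translations the page tables and the TLB have to track drops by several orders of magnitude.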

1. Preparation before using DPDK

- Before a DPDK application can use hugepages, hugepages must already be configured on the system.

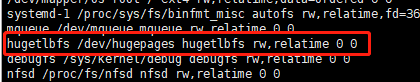

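(For configuration, see https://blog.csdn.net/shaoyunzhe/article/details/54614077)

- Mount the hugetlbfs special filesystem on a directory in the root filesystem:

    # mount the default hugepage size
    mount -t hugetlbfs hugetlbfs /dev/hugepages
    # mount the 2M pages
    mount -t hugetlbfs none /dev/hugepages_2mb -o pagesize=2MB

1G and 2M hugepages can only be used once they are mounted. DPDK also runs normally if only one of the two is mounted.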

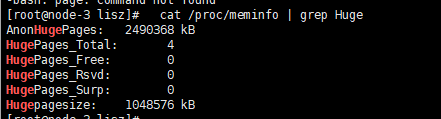

In this test only 1G hugepages were configured. The details are as follows:

Mount directory: cat /proc/mounts
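The same check can be done programmatically. The sketch below inspects a single line in /proc/mounts format and reports whether it is a hugetlbfs mount, along with the mount directory and the page size taken from a `pagesize=` option. The function name `parse_hugetlbfs_mount`, the buffer sizes, and the choice to report 0 when no `pagesize=` option is present are assumptions made for illustration; this is not how DPDK itself is written.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/*
 * Hypothetical helper (not DPDK code): inspect one line in /proc/mounts
 * format ("device mountpoint fstype options dump pass").
 * Returns 1 and fills dir/pagesz if the line is a hugetlbfs mount,
 * 0 otherwise. pagesz is set to 0 when no "pagesize=" option is found.
 */
int
parse_hugetlbfs_mount(const char *line, char *dir, size_t dir_len,
        uint64_t *pagesz)
{
    char dev[64], mnt[256], fstype[32], opts[256];
    const char *opt;
    char *end;
    uint64_t sz;

    assert(line != NULL && dir != NULL && pagesz != NULL);

    if (sscanf(line, "%63s %255s %31s %255s", dev, mnt, fstype, opts) != 4)
        return 0;
    if (strcmp(fstype, "hugetlbfs") != 0)
        return 0;

    *pagesz = 0;
    opt = strstr(opts, "pagesize=");
    if (opt != NULL) {
        sz = strtoull(opt + strlen("pagesize="), &end, 10);
        /* scale by the unit suffix that follows the number, if any */
        switch (*end) {
        case 'G': case 'g': sz <<= 30; break;
        case 'M': case 'm': sz <<= 20; break;
        case 'K': case 'k': sz <<= 10; break;
        default: break;
        }
        *pagesz = sz;
    }
    snprintf(dir, dir_len, "%s", mnt);
    return 1;
}
```

A caller would read /proc/mounts line by line (for example with fgets) and pass each line to this helper, keeping the first directory whose page size matches the size it is looking for.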

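2. Code analysis of how DPDK uses hugepages

The DPDK initialization function rte_eal_init calls eal_hugepage_info_init to initialize the hugepage information.

2.1. What eal_hugepage_info_init does:

This function collects information about the available hugepages (how many pages there are and where they are mounted).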

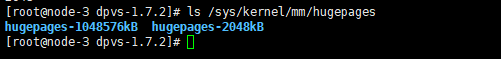

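- Open the "/sys/kernel/mm/hugepages" directory.

- Look for subdirectories whose names start with "hugepages-" and read the number that follows the prefix; that number is the hugepage size. For example, on my system:

- Store the hugepage size, mount directory, and number of available pages in a struct hugepage_info. Note: if hugepages of a given size are not mounted, the DPDK application will not use them.

For example, if we only ran mount -t hugetlbfs hugetlbfs /dev/hugepages and did not run mount -t hugetlbfs none /dev/hugepages_2mb -o pagesize=2MB, DPDK uses only the 1G hugepages.

When the DPDK application starts, the message "EAL: 2048 hugepages of size 2097152 reserved, but no mounted hugetlbfs found for that size" indicates that the 2M pages are not mounted.

- The following structure holds the hugepage information; it is used later when memory is initialized.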

    struct hugepage_info {
        uint64_t hugepage_sz;   /**< size of a huge page */
        const char *hugedir;    /**< dir where hugetlbfs is mounted */
        uint32_t num_pages[RTE_MAX_NUMA_NODES];
                                /**< number of hugepages of that size on each socket */
        int lock_descriptor;    /**< file descriptor for hugepage dir */
    };

In this experiment the final hugepage information is:

hugepage_sz=1073741824 (1048576 * 1024 bytes, i.e. 1G)

hugedir="/dev/hugepages"

num_pages[0]=4
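The hugepage_sz value comes from the sysfs directory name. As a rough illustration, the sketch below converts a name such as "hugepages-1048576kB" into bytes. It assumes the usual Linux naming `hugepages-<N>kB`; the helper name `hugepage_dirname_to_bytes` is hypothetical, and this is not the DPDK implementation (DPDK uses rte_str_to_size for this step).

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/*
 * Hypothetical helper (not the DPDK implementation): convert a sysfs
 * entry name such as "hugepages-1048576kB" into a size in bytes.
 * Returns 0 if the prefix, the digits, or the "kB" suffix is missing.
 */
uint64_t
hugepage_dirname_to_bytes(const char *name)
{
    static const char prefix[] = "hugepages-";
    const size_t prefix_len = sizeof(prefix) - 1;
    char *end;
    uint64_t val;

    assert(name != NULL);
    if (strncmp(name, prefix, prefix_len) != 0)
        return 0;

    val = strtoull(name + prefix_len, &end, 10);
    if (end == name + prefix_len)
        return 0; /* no digits after the prefix */
    if (strcmp(end, "kB") != 0)
        return 0; /* only the kB suffix is handled in this sketch */

    return val << 10; /* kB to bytes */
}
```

With this helper, "hugepages-1048576kB" yields 1073741824 and "hugepages-2048kB" yields 2097152, which matches the size printed in the EAL message quoted above.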

    int
    eal_hugepage_info_init(void)
    {
        const char dirent_start_text[] = "hugepages-";
        const size_t dirent_start_len = sizeof(dirent_start_text) - 1;
        unsigned i, num_sizes = 0;
        DIR *dir;
        struct dirent *dirent;

        dir = opendir(sys_dir_path); /* sys_dir_path[] = "/sys/kernel/mm/hugepages" */
        if (dir == NULL) {
            RTE_LOG(ERR, EAL,
                "Cannot open directory %s to read system hugepage info\n",
                sys_dir_path);
            return -1;
        }

        /* walk the entries of /sys/kernel/mm/hugepages whose names start with "hugepages-" */
        for (dirent = readdir(dir); dirent != NULL; dirent = readdir(dir)) {
            struct hugepage_info *hpi;

            if (strncmp(dirent->d_name, dirent_start_text,
                    dirent_start_len) != 0)
                continue;

            if (num_sizes >= MAX_HUGEPAGE_SIZES)
                break;

            /* internal_config is a DPDK global variable */
            hpi = &internal_config.hugepage_info[num_sizes];
            /* store the hugepage size; at most three sizes are kept, and usually only 1G and 2M are used */
            hpi->hugepage_sz =
                rte_str_to_size(&dirent->d_name[dirent_start_len]);
            /* get_hugepage_dir looks in /proc/mounts for the directory where hugepages of this size are mounted */
            hpi->hugedir = get_hugepage_dir(hpi->hugepage_sz);

            /* first, check if we have a mountpoint */
            if (hpi->hugedir == NULL) {
                uint32_t num_pages;

                num_pages = get_num_hugepages(dirent->d_name);
                if (num_pages > 0)
                    RTE_LOG(NOTICE, EAL,
                        "%" PRIu32 " hugepages of size "
                        "%" PRIu64 " reserved, but no mounted "
                        "hugetlbfs found for that size\n",
                        num_pages, hpi->hugepage_sz);
                continue;
            }

    ......

    ......

    }

2.2. What rte_eal_hugepage_init does:

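The code above only gathers hugepage information. Later, the call chain rte_eal_memory_init -> rte_eal_hugepage_init -> map_all_hugepages fills in the virtual address, physical address, size, and other details of each page.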

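- Get the global configuration, which stores the memory allocation information:

    /* get pointer to global configuration */
    mcfg = rte_eal_get_configuration()->mem_config;

- Count the total number of pages and allocate an array of struct hugepage_file to manage all of them (if 1G, 2M, and 16G pages are all configured, nr_hugepages ends up as the sum of all page counts; in this test nr_hugepages=4):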

    /* calculate total number of hugepages available. at this point we haven't
     * yet started sorting them so they all are on socket 0 */
    for (i = 0; i < (int) internal_config.num_hugepage_sizes; i++) {
        /* meanwhile, also initialize used_hp hugepage sizes in used_hp */
        used_hp[i].hugepage_sz = internal_config.hugepage_info[i].hugepage_sz;

        nr_hugepages += internal_config.hugepage_info[i].num_pages[0];
    }

    /*
     * allocate a memory area for hugepage table.
     * this isn't shared memory yet. due to the fact that we need some
     * processing done on these pages, shared memory will be created
     * at a later stage.
     */
    tmp_hp = malloc(nr_hugepages * sizeof(struct hugepage_file));
    if (tmp_hp == NULL)
        goto fail;

- Call map_all_hugepages for the first time to create the memory-mapped files. The orig argument is set to 1; the comment below explains the effect of setting it to 1 or 0:

    /*
     * Mmap all hugepages of hugepage table: it first open a file in
     * hugetlbfs, then mmap() hugepage_sz data in it. If orig is set, the
     * virtual address is stored in hugepg_tbl[i].orig_va, else it is stored
     * in hugepg_tbl[i].final_va. The second mapping (when orig is 0) tries to
     * map contiguous physical blocks in contiguous virtual blocks.
     */
    static unsigned
    map_all_hugepages(struct hugepage_file *hugepg_tbl, struct hugepage_info *hpi,
            uint64_t *essential_memory __rte_unused, int orig)

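eal_get_hugefile_path builds the file path /dev/hugepages/rtemap_x from the page index (0, 1, 2, 3 in this test), giving 4 files. open and mmap are then called to map each file, and the resulting virtual address is stored with hugepg_tbl[i].orig_va = virtaddr;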
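The path-building step can be pictured with the small sketch below. It is a hypothetical stand-in for illustration only: the function name `build_hugefile_path` and its signature are assumptions, and the real eal_get_hugefile_path may differ in its details.

```c
#include <assert.h>
#include <stdio.h>

/*
 * Hypothetical stand-in for the path-building step (not DPDK code):
 * writes "<hugedir>/rtemap_<index>" into buf.
 * Returns 0 on success, -1 if the result did not fit in buf.
 */
int
build_hugefile_path(char *buf, size_t buf_len, const char *hugedir, int index)
{
    int n;

    assert(buf != NULL && hugedir != NULL);
    n = snprintf(buf, buf_len, "%s/rtemap_%d", hugedir, index);
    if (n < 0 || (size_t)n >= buf_len)
        return -1;
    return 0;
}
```

Calling it with "/dev/hugepages" and index 3 produces "/dev/hugepages/rtemap_3", one file per hugepage.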

    /* try to create hugepage file */
    fd = open(hugepg_tbl[i].filepath, O_CREAT | O_RDWR, 0600);
    if (fd < 0) {
        RTE_LOG(DEBUG, EAL, "%s(): open failed: %s\n", __func__,
                strerror(errno));
        goto out;
    }

    /* map the segment, and populate page tables,
     * the kernel fills this segment with zeros */
    virtaddr = mmap(vma_addr, hugepage_sz, PROT_READ | PROT_WRITE,
            MAP_SHARED | MAP_POPULATE, fd, 0);

- Call find_physaddrs to get the physical address behind each page's virtual address:

    /*
     * For each hugepage in hugepg_tbl, fill the physaddr value. We find
     * it by browsing the /proc/self/pagemap special file.
     */
    static int
    find_physaddrs(struct hugepage_file *hugepg_tbl, struct hugepage_info *hpi)
    {
        unsigned int i;
        phys_addr_t addr;

        for (i = 0; i < hpi->num_pages[0]; i++) {
            addr = rte_mem_virt2phy(hugepg_tbl[i].orig_va);
            if (addr == RTE_BAD_PHYS_ADDR)
                return -1;
            hugepg_tbl[i].physaddr = addr;
        }
        return 0;
    }

- Call find_numasocket to get the socket ID of each page. On a NUMA system, the NUMA memory allocation policy decides which node a page is allocated on:

    if (find_numasocket(&tmp_hp[hp_offset], hpi) < 0){
        RTE_LOG(DEBUG, EAL, "Failed to find NUMA socket for %u MB pages\n",
                (unsigned)(hpi->hugepage_sz / 0x100000));
        goto fail;
    }

- Sort the pages by physical address. What gets sorted is struct hugepage_file *tmp_hp, which holds the information for all hugepages and was allocated at the start. The qsort element size is one struct hugepage_file, and the sort key is each page's physical address:

    qsort(&tmp_hp[hp_offset], hpi->num_pages[0],
          sizeof(struct hugepage_file), cmp_physaddr);

    static int
    cmp_physaddr(const void *a, const void *b)
    {
    #ifndef RTE_ARCH_PPC_64
        const struct hugepage_file *p1 = a;
        const struct hugepage_file *p2 = b;
    #else
        /* PowerPC needs memory sorted in reverse order from x86 */
        const struct hugepage_file *p1 = b;
        const struct hugepage_file *p2 = a;
    #endif
        if (p1->physaddr < p2->physaddr)
            return -1;
        else if (p1->physaddr > p2->physaddr)
            return 1;
        else
            return 0;
    }
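Sorting matters because, once the table is ordered by physical address, a single linear scan is enough to find runs of physically contiguous pages. The following self-contained sketch shows that idea on a simplified record. The struct `page_rec` and the function names are hypothetical stand-ins for struct hugepage_file and the DPDK helpers, and only the ascending (non-PPC64) order is handled.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Simplified stand-in for struct hugepage_file: only the field used here. */
struct page_rec {
    uint64_t physaddr;
};

/* Ascending comparison on physaddr, in the same spirit as cmp_physaddr. */
int
cmp_page_rec(const void *a, const void *b)
{
    const struct page_rec *p1 = a;
    const struct page_rec *p2 = b;

    if (p1->physaddr < p2->physaddr)
        return -1;
    if (p1->physaddr > p2->physaddr)
        return 1;
    return 0;
}

/*
 * Sort the table by physical address, then return how many pages,
 * starting at index 0, form one physically contiguous block made of
 * page_sz-sized pages.
 */
unsigned
sort_and_count_first_run(struct page_rec *tbl, unsigned n, uint64_t page_sz)
{
    unsigned j;

    assert(tbl != NULL || n == 0);
    if (n == 0)
        return 0;

    qsort(tbl, n, sizeof(tbl[0]), cmp_page_rec);

    /* stop at the first page that does not directly follow its predecessor */
    for (j = 1; j < n; j++) {
        if (tbl[j].physaddr != tbl[j - 1].physaddr + page_sz)
            break;
    }
    return j;
}
```

Multiplying the returned count by the page size gives the length of the virtual area that would have to be reserved to map that physical block contiguously.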

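- Then call map_all_hugepages a second time, with orig set to 0. This call differs from the first: its main goal is to map each physically contiguous block, as large as possible, onto contiguous virtual addresses. This works because the pages have already been sorted by physical address. The newly mapped address is saved with hugepg_tbl[i].final_va = virtaddr; see the following code from map_all_hugepages: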

    else if (vma_len == 0) {
        unsigned j, num_pages;

        /* reserve a virtual area for next contiguous
         * physical block: count the number of
         * contiguous physical pages. */
        for (j = i+1; j < hpi->num_pages[0] ; j++) {
    #ifdef RTE_ARCH_PPC_64
            /* The physical addresses are sorted in
             * descending order on PPC64 */
            if (hugepg_tbl[j].physaddr !=
                hugepg_tbl[j-1].physaddr - hugepage_sz)
                break;
    #else
            if (hugepg_tbl[j].physaddr !=
                hugepg_tbl[j-1].physaddr + hugepage_sz)
                break;
    #endif
        }
        num_pages = j - i;
        vma_len = num_pages * hugepage_sz;

        /* get the biggest virtual memory area up to
         * vma_len. If it fails, vma_addr is NULL, so
         * let the kernel provide the address. */
        vma_addr = get_virtual_area(&vma_len, hpi->hugepage_sz);
        if (vma_addr == NULL)
            vma_len = hugepage_sz;
    }

- Finally, call unmap_all_hugepages_orig to remove the first mapping:

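    /* unmap original mappings */
    if (unmap_all_hugepages_orig(&tmp_hp[hp_offset], hpi) < 0)
        goto fail;

- Then do some cleanup: create the shared memory, unmap the pages that are not needed, and finally save the page information into the global configuration: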

    if (new_memseg) {
        j += 1;
        if (j == RTE_MAX_MEMSEG)
            break;

        mcfg->memseg[j].iova = hugepage[i].physaddr;
        mcfg->memseg[j].addr = hugepage[i].final_va;
        mcfg->memseg[j].len = hugepage[i].size;
        mcfg->memseg[j].socket_id = hugepage[i].socket_id;
        mcfg->memseg[j].hugepage_sz = hugepage[i].size;
    }

2.3. Other notes

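In the multi-process case, rte_eal_hugepage_init is called only by the RTE_PROC_PRIMARY process. When rte_eal_hugepage_init finishes, it has only saved the physical address, virtual address, socket id, and size of the usable hugepage memory into the global configuration; how that memory is actually used requires further management.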
