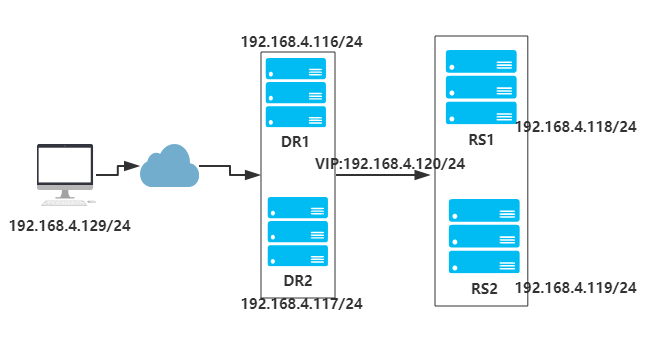

Example topology:

DR1 and DR2 run Keepalived and LVS in either a master/backup or a master/master setup; RS1 and RS2 run nginx to serve the website.

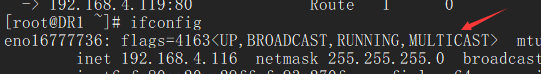

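Note: the clocks on all nodes must be in sync (`ntpdate ntp1.aliyun.com`). Stop and disable firewalld (`systemctl stop firewalld.service`, `systemctl disable firewalld.service`) and set SELinux to permissive (`setenforce 0`). Also make sure the NICs on DR1 and DR2 support MULTICAST, because keepalived sends its VRRP advertisements to a multicast group (224.0.0.10 in the configuration used here). `ifconfig` shows whether MULTICAST is enabled: it appears among the interface flags, e.g. `flags=4163<UP,BROADCAST,RUNNING,MULTICAST>`.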
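To check the flag from a script instead of by eye, the sketch below tests the IFF_MULTICAST bit (0x1000 in `<linux/if.h>`) of an interface's flags value. The helper name `has_multicast` is hypothetical. The decimal `4163` that `ifconfig` prints and the hex value in `/sys/class/net/<iface>/flags` are the same bit mask, so either form can be passed in.

```shell
#!/bin/bash
# Sketch: check whether a NIC has the MULTICAST flag set.
# Assumption: FLAGS is either the hex value from /sys/class/net/<iface>/flags
# (e.g. 0x1043) or the decimal value ifconfig prints after "flags=" (e.g. 4163).

IFF_MULTICAST=0x1000   # IFF_MULTICAST bit from <linux/if.h>

# has_multicast FLAGS: exit status 0 if the MULTICAST bit is set, 1 otherwise
has_multicast() {
    local flags=$1
    # bash arithmetic accepts both decimal and 0x-prefixed hex values
    (( flags & IFF_MULTICAST ))
}
```

For example, `has_multicast "$(cat /sys/class/net/eno16777736/flags)" && echo "multicast on"`. The loopback flags `73<UP,LOOPBACK,RUNNING>` seen later on the real servers do not include the bit. If a DR NIC lacks it, `ip link set dev eno16777736 multicast on` should enable it.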

Keepalived master/backup architecture

Setting up RS1:

    [root@RS1 ~]# yum -y install nginx    # install nginx
    [root@RS1 ~]# vim /usr/share/nginx/html/index.html    # edit the home page
    <h1> 192.168.4.118 RS1 server </h1>
    [root@RS1 ~]# systemctl start nginx.service    # start nginx
    [root@RS1 ~]# vim RS.sh    # script that prepares this host as an LVS-DR real server
    #!/bin/bash
    #
    vip=192.168.4.120
    mask=255.255.255.255
    case $1 in
    start)
        # do not answer or announce ARP for the VIP held on lo
        echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
        # bind the VIP to lo:0 with a host (/32) mask
        ifconfig lo:0 $vip netmask $mask broadcast $vip up
        route add -host $vip dev lo:0
        ;;
    stop)
        ifconfig lo:0 down
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
        ;;
    *)
        echo "Usage $(basename $0) start|stop"
        exit 1
        ;;
    esac
    [root@RS1 ~]# bash RS.sh start
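In LVS-DR every real server carries the VIP on lo so it accepts packets addressed to it, but it must not answer ARP for the VIP, otherwise the router could send client traffic straight to a real server and bypass the director. RS.sh sets this through /proc, which does not survive a reboot. A minimal sketch, assuming you want the same four settings in sysctl.d format (the helper name `rs_arp_sysctl` and the file name `90-lvs-dr.conf` are hypothetical):

```shell
#!/bin/bash
# Sketch: print the ARP settings RS.sh writes via /proc, in sysctl.d format.

rs_arp_sysctl() {
    local dev
    # arp_ignore = 1: reply to ARP only if the requested address is configured
    # on the interface the request arrived on, so the VIP on lo stays silent
    for dev in all lo; do
        echo "net.ipv4.conf.${dev}.arp_ignore = 1"
    done
    # arp_announce = 2: use the best local address of the outgoing interface
    # as the ARP source address, never the VIP bound to lo
    for dev in all lo; do
        echo "net.ipv4.conf.${dev}.arp_announce = 2"
    done
}
```

Usage: `rs_arp_sysctl > /etc/sysctl.d/90-lvs-dr.conf && sysctl --system`. This only covers the ARP behaviour; the VIP on lo:0 still has to be added at boot, e.g. by running `bash RS.sh start`.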

Set up RS2 the same way as RS1, with its own home page (`<h1> 192.168.4.119 RS2 server</h1>`).

Setting up DR1:

    [root@DR1 ~]# yum -y install ipvsadm keepalived    # install ipvsadm and keepalived

    [root@DR1 ~]# vim /etc/keepalived/keepalived.conf    # edit the keepalived configuration
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id 192.168.4.116
        vrrp_skip_check_adv_addr
        vrrp_mcast_group4 224.0.0.10
    }

    vrrp_instance VIP_1 {
        state MASTER
        interface eno16777736
        virtual_router_id 1
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass %&hhjj99
        }
        virtual_ipaddress {
            192.168.4.120/24 dev eno16777736 label eno16777736:0
        }
    }

    virtual_server 192.168.4.120 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        protocol TCP

        real_server 192.168.4.118 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }

        real_server 192.168.4.119 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }
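The `virtual_server` block tells keepalived which IPVS rules to create. As a reading aid, the sketch below prints the `ipvsadm` commands that roughly correspond to it (round robin, DR forwarding, weight 1). It is an illustration under that assumption, not what keepalived literally runs, and it does not reproduce the `HTTP_GET` health checks; `ipvs_commands` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: print ipvsadm commands roughly matching the virtual_server block.
# Values are taken from the configuration above.

VIP=192.168.4.120
PORT=80
REAL_SERVERS="192.168.4.118 192.168.4.119"

ipvs_commands() {
    local rs
    # -A add a virtual service, -t TCP service VIP:port, -s scheduler (rr)
    echo "ipvsadm -A -t ${VIP}:${PORT} -s rr"
    for rs in $REAL_SERVERS; do
        # -a add a real server, -r its address, -g gatewaying (= DR), -w weight
        echo "ipvsadm -a -t ${VIP}:${PORT} -r ${rs}:${PORT} -g -w 1"
    done
}
```

Running `ipvs_commands` only prints the commands; piping them to `bash` on a director where keepalived is stopped should produce a table like the one `ipvsadm -ln` shows, and `ipvsadm -C` clears it again. Do not mix this with a running keepalived that manages the same VIP.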

    [root@DR1 ~]# systemctl start keepalived

    [root@DR1 ~]# ifconfig
    eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.116  netmask 255.255.255.0  broadcast 192.168.4.255
            inet6 fe80::20c:29ff:fe93:270f  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:93:27:0f  txqueuelen 1000  (Ethernet)
            RX packets 14604  bytes 1376647 (1.3 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 6722  bytes 653961 (638.6 KiB)
            TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0

    eno16777736:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.120  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:93:27:0f  txqueuelen 1000  (Ethernet)

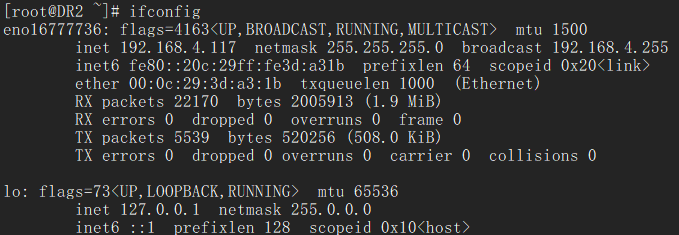

    [root@DR1 ~]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.4.120:80 rr
      -> 192.168.4.118:80             Route   1      0          0
      -> 192.168.4.119:80             Route   1      0          0

DR2 is built almost exactly like DR1; in /etc/keepalived/keepalived.conf the main changes are `state BACKUP` and `priority 90`. Note also that DR2, as the BACKUP node, has not brought up eno16777736:0, i.e. it does not hold the VIP while DR1 is MASTER.
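Since DR2's file differs from DR1's only in a couple of lines, it can be derived mechanically rather than edited by hand. A minimal sketch, assuming one directive per line as in the formatted configuration; `make_backup_conf` is a hypothetical helper, and changing `router_id` to DR2's own address (192.168.4.117) is an optional extra step, since keepalived only uses it as a node identifier.

```shell
#!/bin/bash
# Sketch: turn DR1's keepalived.conf (stdin) into DR2's (stdout).

make_backup_conf() {
    # keep the original indentation (\1) and swap only the values
    sed -e 's/^\([[:space:]]*\)state MASTER[[:space:]]*$/\1state BACKUP/' \
        -e 's/^\([[:space:]]*\)priority 100[[:space:]]*$/\1priority 90/' \
        -e 's/router_id 192\.168\.4\.116/router_id 192.168.4.117/'
}
```

For example, `make_backup_conf < /etc/keepalived/keepalived.conf > keepalived.conf.DR2` on DR1, then copy the result to /etc/keepalived/keepalived.conf on DR2. The `interface` name must also match DR2's NIC (eno16777736 on both nodes here).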

Testing from the client:

    [root@client ~]# for i in {1..20};do curl http://192.168.4.120;done    # the client is served normally
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
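With round robin and equal weights, the responses should alternate and each real server should answer about half of the requests; in the run above, each page appears 10 times out of 20. A small sketch to count this instead of reading the lines by eye; `tally_responses` and `probe_vip` are hypothetical helper names.

```shell
#!/bin/bash
# Sketch: send N requests to a VIP and count identical responses.

# count identical lines read from stdin
tally_responses() {
    sort | uniq -c
}

# probe_vip VIP [N]: request http://VIP/ N times (default 20) and tally
probe_vip() {
    local vip=$1 n=${2:-20} i
    for (( i = 0; i < n; i++ )); do
        curl -s "http://${vip}/"
    done | tally_responses
}
```

Usage on the client: `probe_vip 192.168.4.120`. A real server missing from the tally usually means its health check failed and keepalived removed it from the IPVS table.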

    [root@DR1 ~]# systemctl stop keepalived.service    # stop keepalived on DR1
    [root@DR2 ~]# systemctl status keepalived.service    # on DR2: it has now entered the MASTER state
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2018-09-04 11:33:04 CST; 7min ago
      Process: 12983 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 12985 (keepalived)
       CGroup: /system.slice/keepalived.service
               ├─12985 /usr/sbin/keepalived -D
               ├─12988 /usr/sbin/keepalived -D
               └─12989 /usr/sbin/keepalived -D

    Sep 04 11:37:41 happiness Keepalived_healthcheckers[12988]: SMTP alert successfully sent.
    Sep 04 11:40:22 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) Transition to MASTER STATE
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) Entering MASTER STATE
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) setting protocol VIPs.
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) Sending/queueing gratuitous ARPs on eno16777736 for 192.168.4.120
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120
    Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120
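The log lines above are the quickest way to see a failover: the instance logs "Entering MASTER STATE" and then sends gratuitous ARPs so that neighbours update their ARP caches for the VIP. A sketch that extracts the latest state of one instance from such logs, assuming the "VRRP_Instance(NAME) Entering X STATE" wording shown here (other keepalived versions may phrase it differently); `last_vrrp_state` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: print the most recent VRRP state (MASTER, BACKUP, FAULT, ...)
# of the given instance, reading keepalived log lines from stdin.

last_vrrp_state() {
    local instance=$1
    # keep only "VRRP_Instance(NAME) Entering X STATE", take the last one,
    # and print X (the third whitespace-separated field)
    grep -o "VRRP_Instance(${instance}) Entering [A-Z]* STATE" \
        | tail -n 1 \
        | awk '{ print $3 }'
}
```

Usage on DR2: `journalctl -u keepalived --no-pager | last_vrrp_state VIP_1`. An empty result means no state transition for that instance is in the log.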

    [root@client ~]# for i in {1..20};do curl http://192.168.4.120;done    # the client is still served normally after failover
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>

Keepalived master/master architecture

Modify RS1 and RS2 to add the new VIP:

    [root@RS1 ~]# cp RS.sh RS_bak.sh
    [root@RS1 ~]# vim RS_bak.sh    # add the new VIP (192.168.4.121 on lo:1)
    #!/bin/bash
    #
    vip=192.168.4.121
    mask=255.255.255.255
    case $1 in
    start)
        echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
        ifconfig lo:1 $vip netmask $mask broadcast $vip up
        route add -host $vip dev lo:1
        ;;
    stop)
        ifconfig lo:1 down
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
        ;;
    *)
        echo "Usage $(basename $0) start|stop"
        exit 1
        ;;
    esac
    [root@RS1 ~]# bash RS_bak.sh start
    [root@RS1 ~]# ifconfig
    ...
    lo:0: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 192.168.4.120  netmask 255.255.255.255
            loop  txqueuelen 0  (Local Loopback)

    lo:1: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 192.168.4.121  netmask 255.255.255.255
            loop  txqueuelen 0  (Local Loopback)
    [root@RS1 ~]# scp RS_bak.sh root@192.168.4.119:~
    root@192.168.4.119's password:
    RS_bak.sh                                     100%  693     0.7KB/s   00:00
    [root@RS2 ~]# bash RS_bak.sh start    # run the script on RS2 to add the new VIP
    [root@RS2 ~]# ifconfig
    ...
    lo:0: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 192.168.4.120  netmask 255.255.255.255
            loop  txqueuelen 0  (Local Loopback)

    lo:1: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 192.168.4.121  netmask 255.255.255.255
            loop  txqueuelen 0  (Local Loopback)
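Keeping one script per VIP has a catch: the ARP settings are host-wide, so `bash RS_bak.sh stop` resets `arp_ignore` and `arp_announce` to 0 while 192.168.4.120 is still on lo:0, and the real server may then answer ARP for that VIP. A sketch of a single helper that handles any number of VIPs and resets ARP only after all of them are removed. `rs_commands` is a hypothetical name; it only prints the commands (same `ifconfig`/`route` calls as RS.sh), and VIP number i goes on alias lo:i, matching lo:0 and lo:1 above.

```shell
#!/bin/bash
# Sketch: print the commands to start/stop LVS-DR real-server setup for
# several VIPs at once. Pipe the output to bash to apply it.

rs_commands() {
    local action=$1
    shift
    local i vip
    case $action in
    start)
        # ARP settings are global, so set them once for all VIPs
        echo "echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore"
        echo "echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore"
        echo "echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce"
        echo "echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce"
        i=0
        for vip in "$@"; do
            echo "ifconfig lo:$i $vip netmask 255.255.255.255 broadcast $vip up"
            echo "route add -host $vip dev lo:$i"
            i=$((i + 1))
        done
        ;;
    stop)
        for (( i = 0; i < $#; i++ )); do
            echo "ifconfig lo:$i down"
        done
        # reset ARP behaviour only once no VIP is left on lo
        echo "echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore"
        echo "echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore"
        echo "echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce"
        echo "echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce"
        ;;
    *)
        echo "Usage: rs_commands start|stop VIP..." >&2
        return 1
        ;;
    esac
}
```

Usage on each real server: `rs_commands start 192.168.4.120 192.168.4.121 | bash`. Printing instead of executing makes it easy to review the exact commands first.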

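Modify DR1 and DR2: each gets a second VRRP instance, VIP_2 (virtual_router_id 2, VIP 192.168.4.121), with DR2 as MASTER and DR1 as BACKUP, so each director is master for one VIP. Both VIPs are served by one virtual server group: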

    [root@DR1 ~]# vim /etc/keepalived/keepalived.conf    # on DR1: add the new instance and define a server group
    ...
    vrrp_instance VIP_2 {
        state BACKUP
        interface eno16777736
        virtual_router_id 2
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass UU**99^^
        }
        virtual_ipaddress {
            192.168.4.121/24 dev eno16777736 label eno16777736:1
        }
    }

    virtual_server_group ngxsrvs {
        192.168.4.120 80
        192.168.4.121 80
    }

    virtual_server group ngxsrvs {
        ...
    }
    [root@DR1 ~]# systemctl restart keepalived.service    # restart the service
    [root@DR1 ~]# ifconfig    # eno16777736:1 is up here because DR2 has not been configured yet
    eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.116  netmask 255.255.255.0  broadcast 192.168.4.255
            inet6 fe80::20c:29ff:fe93:270f  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:93:27:0f  txqueuelen 1000  (Ethernet)
            RX packets 54318  bytes 5480463 (5.2 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 38301  bytes 3274990 (3.1 MiB)
            TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0

    eno16777736:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.120  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:93:27:0f  txqueuelen 1000  (Ethernet)

    eno16777736:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.121  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:93:27:0f  txqueuelen 1000  (Ethernet)
    [root@DR1 ~]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.4.120:80 rr
      -> 192.168.4.118:80             Route   1      0          0
      -> 192.168.4.119:80             Route   1      0          0
    TCP  192.168.4.121:80 rr
      -> 192.168.4.118:80             Route   1      0          0
      -> 192.168.4.119:80             Route   1      0          0
    [root@DR2 ~]# vim /etc/keepalived/keepalived.conf    # on DR2: add the instance and define the server group
    ...
    vrrp_instance VIP_2 {
        state MASTER
        interface eno16777736
        virtual_router_id 2
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass UU**99^^
        }
        virtual_ipaddress {
            192.168.4.121/24 dev eno16777736 label eno16777736:1
        }
    }

    virtual_server_group ngxsrvs {
        192.168.4.120 80
        192.168.4.121 80
    }

    virtual_server group ngxsrvs {
        ...
    }
    [root@DR2 ~]# systemctl restart keepalived.service    # restart the service
    [root@DR2 ~]# ifconfig
    eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.117  netmask 255.255.255.0  broadcast 192.168.4.255
            inet6 fe80::20c:29ff:fe3d:a31b  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:3d:a3:1b  txqueuelen 1000  (Ethernet)
            RX packets 67943  bytes 6314537 (6.0 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 23250  bytes 2153847 (2.0 MiB)
            TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0

    eno16777736:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.4.121  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 00:0c:29:3d:a3:1b  txqueuelen 1000  (Ethernet)
    [root@DR2 ~]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.4.120:80 rr
      -> 192.168.4.118:80             Route   1      0          0
      -> 192.168.4.119:80             Route   1      0          0
    TCP  192.168.4.121:80 rr
      -> 192.168.4.118:80             Route   1      0          0
      -> 192.168.4.119:80             Route   1      0          0
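In the master/master setup the quick health question is which director currently holds which VIP. With both nodes running, DR2 holds 192.168.4.121 as shown above, and DR1 should keep only 192.168.4.120. A sketch that answers this from `ip -o -4 addr show` output (one address per line, ADDRESS/PREFIX in the fourth field); `held_vips` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: print which of the given VIPs appear in `ip -o -4 addr show`
# output read from stdin.

held_vips() {
    local addrs vip
    # strip the /PREFIX from field 4 to get the bare addresses
    addrs=$(awk '{ split($4, a, "/"); print a[1] }')
    for vip in "$@"; do
        # exact, fixed-string, whole-line match against the address list
        if grep -qxF "$vip" <<< "$addrs"; then
            echo "$vip"
        fi
    done
}
```

Usage on either director: `ip -o -4 addr show dev eno16777736 | held_vips 192.168.4.120 192.168.4.121`.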

Testing from the client:

    [root@client ~]# for i in {1..20};do curl http://192.168.4.120;done
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>

    [root@client ~]# for i in {1..20};do curl http://192.168.4.121;done
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>
    <h1> 192.168.4.119 RS2 server</h1>
    <h1> 192.168.4.118 RS1 server </h1>