This article introduces Keepalived + LVS and shows that the combination is simpler than it looks.

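Preface

In an LVS architecture, when a backend real server (RS) goes down, the director keeps forwarding requests to it. keepalived solves this problem.

keepalived has LVS support built in, so there is no need to configure rules with ipvsadm by hand or to write LVS scripts. A complete keepalived + LVS deployment needs two directors for high availability: only one provides scheduling at a time, and the other stands by as a backup.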

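With LVS and keepalived integrated, a single VIP is used and it no longer has to be configured by hand. Manual steps such as the following are no longer needed:

    [root@localhost ~]# ipvsadm -A -t 192.168.179.199:80 -s rr
    [root@localhost ~]# ipvsadm -a -t 192.168.179.199:80 -r 192.168.179.100 -g -w 100

Only the ipvs kernel module is required; the ipvsadm command is installed just as a management and inspection tool.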
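For comparison, the manual rules that keepalived manages on your behalf can be sketched as follows. This is only an illustration: `print_ipvsadm_rules` is a hypothetical helper (not part of keepalived or ipvsadm) that prints the commands for a VIP and a list of real servers instead of executing them, and the fixed weight of 100 is taken from the example above.

```shell
# Hypothetical helper: print the manual ipvsadm commands for one DR-mode
# virtual service with round-robin scheduling. Nothing is executed.
# Usage: print_ipvsadm_rules VIP PORT RS_IP...
print_ipvsadm_rules() {
    vip=$1
    port=$2
    shift 2
    # -A adds the virtual service, -s rr selects round-robin scheduling.
    echo "ipvsadm -A -t ${vip}:${port} -s rr"
    for rs in "$@"; do
        # -a adds a real server, -g selects direct routing (DR), -w sets the weight.
        echo "ipvsadm -a -t ${vip}:${port} -r ${rs} -g -w 100"
    done
}

# Addresses from the topology used in this article.
print_ipvsadm_rules 192.168.179.199 80 192.168.179.99 192.168.179.104
```

With keepalived, the same information lives in the `virtual_server` and `real_server` blocks of keepalived.conf, and the rules are added or removed automatically as health checks pass or fail.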

Topology:

Master keepalived (director): 192.168.179.102

Backup keepalived (director): 192.168.179.103

Real server rs1: 192.168.179.99

Real server rs2: 192.168.179.104

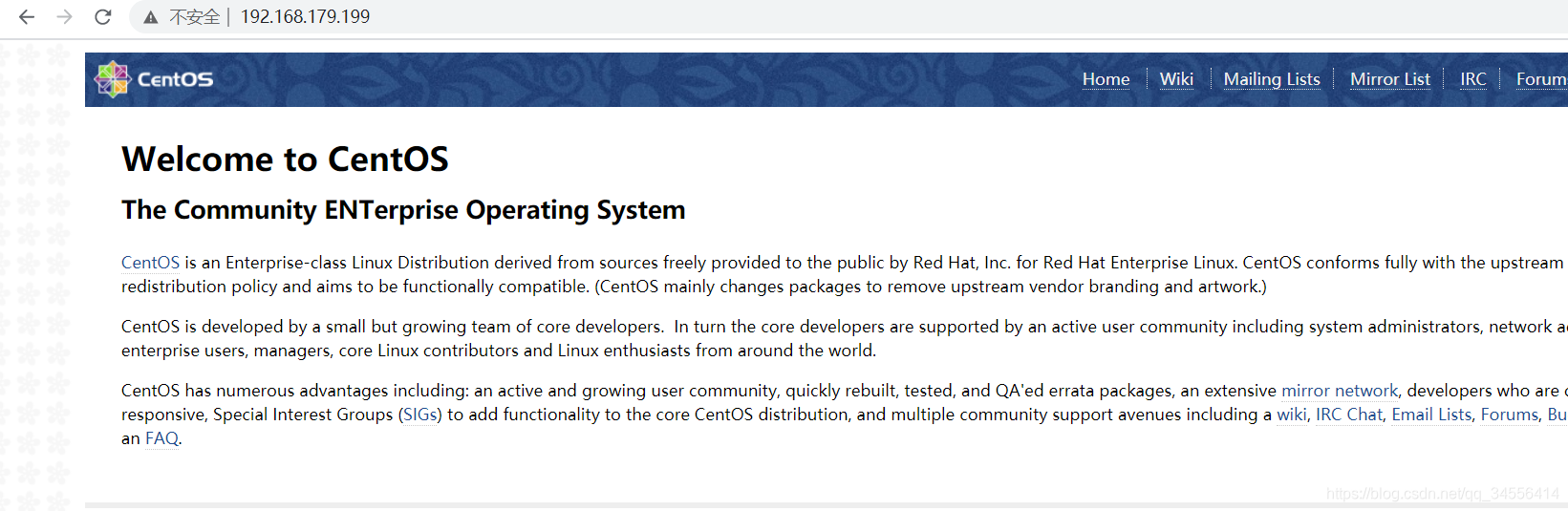

VIP:192.168.179.199

LVS runs in DR mode, and each RS host runs nginx serving port 80.

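keepalived configuration file structure

vrrp_instance

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 51
        priority 100
        advert_int 5
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.179.199
        }
    }

    # The virtual_server block uses the same VIP
    virtual_server 192.168.179.199 80 {
    }

virtual_server

A line such as "virtual_server 192.168.1.199 80 {" opens the LVS configuration; the address after the keyword is the VIP.

lb_algo wrr: the scheduling algorithm.

lb_kind DR: the forwarding method, here DR mode.

Each backend real server gets its own real_server section containing its real IP (192.168.33.12 below is an example address); add one section per machine.

    virtual_server 192.168.179.199 80 {
        # Health-check interval: every 6 seconds keepalived probes port 80 on the
        # backend real servers, removes a server from the LVS pool if the port is
        # unreachable, and adds it back once it responds again.
        delay_loop 6
        lb_algo rr                 # scheduling algorithm, usually rr
        lb_kind DR                 # forwarding method
        persistence_timeout 60     # persistence time: requests from one client within 60 seconds go to the same backend
        protocol TCP

        real_server 192.168.33.12 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

ipvsadm still needs to be installed; its purpose is to inspect the rules with ipvsadm -Ln.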

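Configuring the real servers

On the nginx servers 192.168.179.99 and 192.168.179.104, configure the VIP and suppress ARP responses.

In brief: suppose A is the front-end load balancer and B and C are the backend real servers. When A receives a packet, it rewrites the destination MAC address to B's (assuming the scheduling algorithm picks B) and sends the packet out again. The switch finds B by MAC address and delivers the packet to it. B then checks the destination IP address. Because the destination IP in the packet is the VIP held by A, B would normally drop the packet. To prevent this, the VIP must be configured on B's loopback interface, and ARP suppression must be enabled so that B does not answer ARP requests for the VIP.

The following script configures the VIP on the lo interface and suppresses ARP: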

    [root@localhost ~]# cat real-server.sh
    #!/bin/bash
    # description: start realserver
    VIP=192.168.179.199

    case "$1" in
    start)
        echo "start LVS of REALServer"
        # Bind the VIP to a loopback alias with a host (/32) netmask
        /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
        # Do not answer ARP for the VIP and do not announce it as a source address
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        echo "close LVS REALServer"
        # Restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
        ;;
    esac

    [root@localhost ~]# ./real-server.sh start
    start LVS of REALServer

Run ifconfig to check that the virtual IP is bound to the loopback interface:

    [root@localhost ~]# ifconfig
    lo:0: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 192.168.179.199  netmask 255.255.255.255
            loop  txqueuelen 1  (Local Loopback)

Pinging 192.168.179.199 from the LVS machine 192.168.179.102 shows that ARP is suppressed:

[root@localhost ~]# ping 192.168.179.199

PING 192.168.179.199 (192.168.179.199) 56(84) bytes of data.

From 192.168.179.102 icmp_seq=1 Destination Host Unreachable

From 192.168.179.102 icmp_seq=2 Destination Host Unreachable

From 192.168.179.102 icmp_seq=3 Destination Host Unreachable

^C

--- 192.168.179.199 ping statistics ---

5 packets transmitted, 0 received, +3 errors, 100% packet loss, time 4029ms

pipe 4
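Besides ifconfig and ping, the kernel parameters written by real-server.sh can be read back directly. The following is a minimal sketch, not part of the original setup: `check_arp_suppression` is a hypothetical helper, and its optional directory argument exists only so that the function can be tried against a scratch directory instead of the live /proc tree.

```shell
# Hypothetical helper: verify that arp_ignore=1 and arp_announce=2 are set
# for the "lo" and "all" entries, as real-server.sh start configures them.
# Usage: check_arp_suppression [CONF_ROOT]
check_arp_suppression() {
    conf_root=${1:-/proc/sys/net/ipv4/conf}
    rc=0
    for iface in lo all; do
        ignore=$(cat "$conf_root/$iface/arp_ignore" 2>/dev/null)
        announce=$(cat "$conf_root/$iface/arp_announce" 2>/dev/null)
        if [ "$ignore" = "1" ] && [ "$announce" = "2" ]; then
            echo "$iface: ok"
        else
            echo "$iface: arp_ignore=${ignore:-?} arp_announce=${announce:-?} (expected 1 and 2)"
            rc=1
        fi
    done
    return $rc
}
```

Running `check_arp_suppression` without arguments on each real server after `./real-server.sh start` should report both entries as ok; a non-zero exit status means at least one parameter differs from what the script sets.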

Configuring Keepalived

Master (192.168.179.102) configuration

    [root@localhost ~]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }

    # VIP1
    vrrp_instance VI_1 {
        state MASTER
        interface ens32
        virtual_router_id 51
        priority 100
        advert_int 5
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.179.199
        }
    }

    # Virtual server: 192.168.179.199 is the VIP, listening on port 80; clients connect to this address
    virtual_server 192.168.179.199 80 {
        delay_loop 6               # health-check interval, in seconds
        lb_algo rr                 # scheduling algorithm; internet-facing services commonly use wlc or rr
        lb_kind DR                 # forwarding method: DR, NAT or TUN; this setup uses DR throughout
        # Persistence time, in seconds. This is a network-layer (layer 3) notion of a
        # session: with 60, requests from the same client within 60 seconds go to the
        # same backend node; with 0, every request is scheduled independently.
        persistence_timeout 60
        protocol TCP               # forwarding protocol, usually TCP or UDP (I have not tried UDP forwarding myself)

        real_server 192.168.179.104 80 {    # address and port of a real server
            weight 100                      # weight; more powerful machines usually get a higher value
            TCP_CHECK {
                connect_timeout 10          # connection timeout
                nb_get_retry 3              # number of retries
                delay_before_retry 3        # delay between retries
                connect_port 80             # port to check
            }
        }
        real_server 192.168.179.99 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

Start keepalived on the master. The most important checks are the log and the ipvsadm output:

[root@localhost ~]# tail -f /var/log/messages

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: VRRP_Instance(VI_1) Entering MASTER STATE

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: VRRP_Instance(VI_1) setting protocol VIPs.

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens32 for 192.168.179.199

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:46:57 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:47:00 localhost Keepalived_healthcheckers[38767]: TCP connection to [192.168.179.99]:80 success.

Nov 25 10:47:00 localhost Keepalived_healthcheckers[38767]: Adding service [192.168.179.99]:80 to VS [192.168.179.199]:80

Nov 25 10:47:00 localhost Keepalived_healthcheckers[38767]: Gained quorum 1+0=1 <= 100 for VS [192.168.179.199]:80

Nov 25 10:47:02 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:47:02 localhost Keepalived_vrrp[38768]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens32 for 192.168.179.199

Nov 25 10:47:02 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:47:02 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:47:02 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:47:02 localhost Keepalived_vrrp[38768]: Sending gratuitous ARP on ens32 for 192.168.179.199

Nov 25 10:47:06 localhost Keepalived_healthcheckers[38767]: TCP connection to [192.168.179.104]:80 success.

Nov 25 10:47:06 localhost Keepalived_healthcheckers[38767]: Adding service [192.168.179.104]:80 to VS [192.168.179.199]:80

Both backends were added after passing the health check:

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    0          0

keepalived used on its own has no service health check; in that setup health checks are implemented with shell scripts. Here keepalived's built-in checker module is used, which probes a TCP port: port 3306 for MySQL, port 80 for nginx.

With delay_loop 6, keepalived probes port 80 on each host every 6 seconds. If the port is unreachable, the real server is removed from the pool; once it is reachable again, it is added back.

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    2          0

Backup (192.168.179.103) configuration

    [root@localhost ~]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }

    # VIP1
    vrrp_instance VI_1 {
        state BACKUP
        interface ens32
        virtual_router_id 51
        priority 50
        advert_int 5
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.179.199
        }
    }

    virtual_server 192.168.179.199 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        persistence_timeout 60
        protocol TCP

        real_server 192.168.179.104 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 192.168.179.99 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    0          0

As the whole configuration shows, integrating LVS with keepalived only adds one block: virtual_server { real_server { ... } }.
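Since both directors should end up with the same LVS table, it can be handy to reduce the `ipvsadm -Ln` output to one line per real server and compare the results. The sketch below is an illustration only: `list_real_servers` is a hypothetical helper that reads the output on stdin and assumes the standard layout of `ipvsadm -Ln` (a service line starting with TCP or UDP, followed by `->` lines).

```shell
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print one line
# per real server in the form "VIP:PORT REAL_SERVER:PORT WEIGHT".
list_real_servers() {
    awk '
        # Virtual service line: remember its address.
        $1 == "TCP" || $1 == "UDP" { vs = $2; next }
        # Real server line (skip the column header that also starts with "->").
        $1 == "->" && $2 != "RemoteAddress:Port" { print vs, $2, $4 }
    '
}
```

On a director this would be used as `ipvsadm -Ln | list_real_servers`; running it on the master and on the backup and comparing the two outputs gives a quick consistency check of the two keepalived.conf files.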

Health check: TCP_CHECK

Consider what the following block does:

    TCP_CHECK {
        connect_timeout 10
        nb_get_retry 3
        delay_before_retry 3
        connect_port 80
    }

Both backend nginx instances are currently healthy:

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    0          0

Now kill nginx on 192.168.179.99:

    [root@localhost ~]# pkill nginx

Then check the keepalived logs on the master and the backup.

Master

Nov 25 11:17:50 localhost Keepalived_healthcheckers[38767]: TCP connection to [192.168.179.99]:80 failed.

Nov 25 11:17:53 localhost Keepalived_healthcheckers[38767]: TCP connection to [192.168.179.99]:80 failed.

Nov 25 11:17:53 localhost Keepalived_healthcheckers[38767]: Check on service [192.168.179.99]:80 failed after 1 retry.

Nov 25 11:17:53 localhost Keepalived_healthcheckers[38767]: Removing service [192.168.179.99]:80 from VS [192.168.179.199]:80

Backup

Nov 25 11:17:52 localhost Keepalived_healthcheckers[7827]: TCP connection to [192.168.179.99]:80 failed.

Nov 25 11:17:52 localhost Keepalived_healthcheckers[7827]: Check on service [192.168.179.99]:80 failed after 1 retry.

Nov 25 11:17:52 localhost Keepalived_healthcheckers[7827]: Removing service [192.168.179.99]:80 from VS [192.168.179.199]:80

The health check failed, so the node is removed from the backend pool.

Master

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.104:80           Route   100    0          0

Backup

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.104:80           Route   100    0          0

Now start nginx again:

    [root@localhost ~]# nginx

Then check the keepalived logs again.

Master

Nov 25 11:21:54 localhost Keepalived_healthcheckers[38767]: TCP connection to [192.168.179.99]:80 success.

Nov 25 11:21:54 localhost Keepalived_healthcheckers[38767]: Adding service [192.168.179.99]:80 to VS [192.168.179.199]:80

Backup

Nov 25 11:21:55 localhost Keepalived_healthcheckers[7827]: TCP connection to [192.168.179.99]:80 success.

Nov 25 11:21:55 localhost Keepalived_healthcheckers[7827]: Adding service [192.168.179.99]:80 to VS [192.168.179.199]:80

Master

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    0          0

Backup

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    0          0

The health check passes again, so the previously removed machine is added back to the pool.
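The remove-and-re-add behaviour above rests on a simple idea: probe, retry a few times with a pause, and only then declare the server down. The following is a simplified sketch of that idea and not keepalived's actual implementation; `check_with_retry` is a hypothetical helper whose two numeric arguments loosely correspond to nb_get_retry and delay_before_retry.

```shell
# Simplified sketch of a retrying health check (NOT keepalived's code).
# Usage: check_with_retry RETRIES DELAY COMMAND [ARGS...]
# Runs COMMAND once, then up to RETRIES more times, sleeping DELAY seconds
# between attempts. Returns 0 as soon as one attempt succeeds, 1 otherwise.
check_with_retry() {
    retries=$1
    delay=$2
    shift 2
    attempt=0
    while :; do
        if "$@"; then
            return 0
        fi
        if [ "$attempt" -ge "$retries" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}
```

Assuming a netcat build that supports `-z` and `-w`, a probe of one real server could look like `check_with_retry 3 3 nc -z -w 10 192.168.179.99 80`. Without keepalived, a script built on such a check would also have to add and remove the server with ipvsadm itself, which is exactly the work the TCP_CHECK block takes over.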

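Health check: HTTP_GET

HTTP_GET health check configuration:

    real_server 192.168.179.104 80 {
        weight 100
        HTTP_GET {
            url {
                path /index.html
                status_code 200
            }
            nb_get_retry 3
            delay_before_retry 3
            connect_timeout 5
        }
    }

connect_timeout 5: connection timeout, in seconds

nb_get_retry 3: number of retries

delay_before_retry 3: delay between retries, in seconds

connect_port 8080: the port to check

A digest of the page can also be computed (keepalived ships the genhash utility for this purpose) and then written into the configuration file.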
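As a rough illustration of what such a digest is, the sketch below computes the MD5 hex digest of a response body read from stdin. It rests on two assumptions that should be verified against your keepalived version: that the digest keepalived expects is the MD5 sum of the response body, and that `md5sum` from GNU coreutils is installed. `body_digest` is a hypothetical helper name; genhash remains the tool shipped for this job.

```shell
# Sketch: print the MD5 hex digest of whatever is read on stdin.
# Assumes md5sum (GNU coreutils) is available.
body_digest() {
    md5sum | awk '{ print $1 }'
}
```

Assuming curl is available, the page from the example could be hashed with `curl -s http://192.168.179.104/index.html | body_digest`. Keep in mind that a digest check fails whenever the page content changes, so it suits static pages only.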

Keepalived master failure (failover)

Master (when keepalived is stopped, the LVS rules on this node are gone as well):

    [root@localhost ~]# systemctl stop keepalived
    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

Backup (the VIP has floated over to this node, which continues to provide the service):

    [root@localhost ~]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:61:90:c1 brd ff:ff:ff:ff:ff:ff
        inet 192.168.179.103/24 brd 192.168.179.255 scope global ens32
           valid_lft forever preferred_lft forever
        inet 192.168.179.199/32 scope global ens32
           valid_lft forever preferred_lft forever
        inet6 fe80::f54d:5639:6237:2d0e/64 scope link
           valid_lft forever preferred_lft forever
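When repeating a failover test like this, it helps to check mechanically which director currently holds the VIP instead of reading the full `ip a` output. The following is a minimal sketch under stated assumptions: `holds_vip` is a hypothetical helper that expects the one-line-per-address format produced by `ip -o -4 addr show` on stdin, where the fourth field is the address with its prefix length.

```shell
# Hypothetical helper: succeed if the given IPv4 address appears in
# `ip -o -4 addr show` output supplied on stdin.
# Usage: ip -o -4 addr show | holds_vip VIP
holds_vip() {
    vip=$1
    awk -v vip="$vip" '
        # Field 4 looks like 192.168.179.199/32; compare the part before "/".
        { split($4, parts, "/"); if (parts[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

On either director, `ip -o -4 addr show dev ens32 | holds_vip 192.168.179.199 && echo "this node holds the VIP"` would print the message only on the node that currently owns the address; the interface name ens32 is the one used in this article's configuration.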

    [root@localhost ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.179.199:80 rr persistent 60
      -> 192.168.179.99:80            Route   100    0          0
      -> 192.168.179.104:80           Route   100    2          0

After working through the steps above, you should have a clear picture of keepalived + LVS. That concludes this article.
