This post describes a Spark job that threw a large number of java.lang.StackOverflowError exceptions, and how the problem was resolved.

1. Symptoms

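Recently, a PySpark job submitted by a colleague on the data development team threw a large number of java.lang.StackOverflowError exceptions. The query itself had finished, and the errors appeared while the job was writing its results to a CSV file.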

LogType:container-localizer-syslog

Log Upload Time:Mon Aug 15 23:25:59 +0800 2022

LogLength:170

Log Contents:

2022-08-15 23:24:12,698 WARN [main] org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ContainerLocalizer: Localization running as yarn_user not yi.wei

End of LogType:container-localizer-syslog

LogType:stderr

Log Upload Time:Mon Aug 15 23:25:59 +0800 2022

LogLength:437947

Log Contents:

OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=1024M; support was removed in 8.0

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/srv/BigData/data24/nm/localdir/filecache/55954/spark-assembly-2.4.3-hadoop2.6.5-1.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/share/slf4j-log4j12-1.7.26/slf4j-log4j12-1.7.26.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See SLF4J Error Codes for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

22/08/15 23:25:55 ERROR org.apache.spark.internal.Logging$class.logError(Logging.scala:91): Exception in task 5.1 in stage 31.0 (TID 3717)

java.lang.StackOverflowError

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:395)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)

at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:55

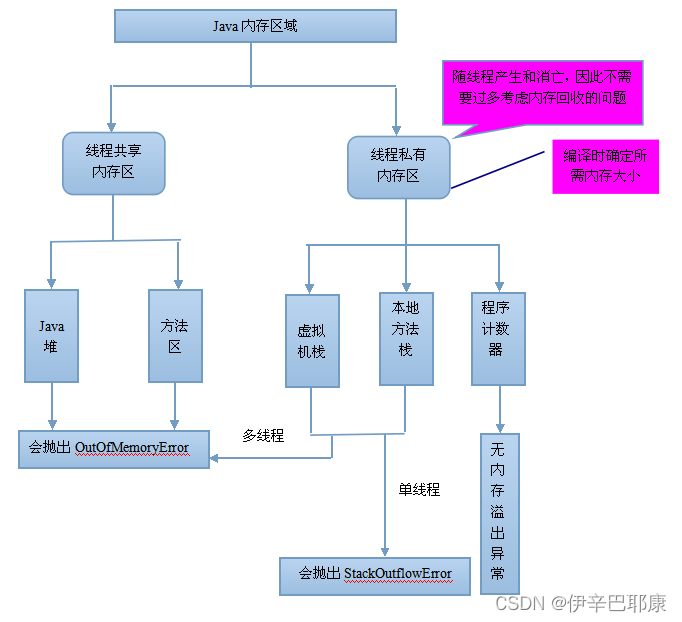

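StackOverflowError is a common JVM error: a thread has used up its stack memory. According to articles others have shared about similar problems, the JVM raises StackOverflowError from either the Java stack or the native method stack. In the trace above, the repeated frame is org.codehaus.janino.CodeContext.flowAnalysis, which belongs to Janino, the compiler Spark SQL uses for its generated code, so the overflow comes from deep recursion on the executor side.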

When a StackOverflowError is reported, the usual remedy is to raise the thread stack size with the JVM option -Xss.
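To check that a trace like the one above really is dominated by a single recursive method, the frames in the stderr log can be counted. The following is a minimal sketch using only the Python standard library; the function name `count_frames` and the sample text are illustrative assumptions, not part of the original job.

```python
import re
from collections import Counter

# Matches one stack frame line such as
# "at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)"
# and captures the fully qualified method name before the "(".
FRAME_RE = re.compile(r"^\s*at\s+([\w.$<>]+)\(")


def count_frames(log_text):
    """Count how often each fully qualified method appears as a stack frame."""
    counts = Counter()
    for line in log_text.splitlines():
        match = FRAME_RE.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical excerpt shaped like the stderr log above.
    sample = """java.lang.StackOverflowError
    at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:395)
    at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)
    at org.codehaus.janino.CodeContext.flowAnalysis(CodeContext.java:557)
    """
    # Print the most frequent frames first.
    for method, n in count_frames(sample).most_common(3):
        print(n, method)
```

If one method accounts for nearly all frames, the overflow is caused by deep recursion in that method rather than by many different call paths, which is the situation where a larger thread stack can help.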

2. Fix

Adjust the JVM options of the Spark job's executors: spark.executor.extraJavaOptions=-Xss128M

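After this change, the PySpark job ran to completion. If the error still occurs, the stack size can be raised further, for example to 256M.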
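As an illustration of how the setting might be applied at submit time, the sketch below builds a `spark-submit` argument list that passes the option through `--conf`. The helper names and the script name `export_csv.py` are hypothetical; only `--conf` and the key `spark.executor.extraJavaOptions` come from Spark itself.

```python
import shlex


def executor_stack_conf(stack_size="128M"):
    """Return the Spark conf entry that sets the executor thread stack size."""
    return {"spark.executor.extraJavaOptions": f"-Xss{stack_size}"}


def spark_submit_command(script, stack_size="128M"):
    """Build a spark-submit argument list with the stack size option (hypothetical helper)."""
    args = ["spark-submit"]
    for key, value in executor_stack_conf(stack_size).items():
        args += ["--conf", f"{key}={value}"]
    args.append(script)
    return args


if __name__ == "__main__":
    # "export_csv.py" is a placeholder for the actual PySpark script.
    print(shlex.join(spark_submit_command("export_csv.py")))
    # If 128M is not enough, try a larger stack.
    print(shlex.join(spark_submit_command("export_csv.py", stack_size="256M")))
```

Two caveats apply. Setting `spark.executor.extraJavaOptions` replaces any value already configured for that key, so if the job already passes other executor JVM flags, `-Xss` should be appended to them rather than set alone. The option also has to be in place before the executors start, which is why it is passed at submit time here.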
