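This article covers: Cloud native | kubernetes | installing kubernetes 1.20 with kubeadm (offline installation possible; revised edition, 2022-10-15). I hope it serves as a useful reference for developers working through the same problem.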

Offline installation of kubernetes 1.20

Preface:

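First of all, kubernetes is usually abbreviated to k8s (it starts with k, ends with s, and has 8 letters in between), and I will use the abbreviation from here on. k8s does not have many versions, but each release changes a lot. It is often said that Docker is no longer supported after 1.22; in fact, the built-in Docker support (dockershim) was deprecated in 1.20 and removed in 1.24. At the time of writing, 1.20 was the mainstream version.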

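In fact, k8s is a complete distributed suite made up of many components. Like a big-data stack, it consists of all sorts of components, such as etcd, flannel, kubelet, kubectl and kube-proxy. In that respect it resembles CDH, although it is not the same.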

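Since this is a cluster, three things are mandatory for the deployment: first, a time server that keeps the clocks of all machines in the cluster in sync; second, passwordless login between the servers; third, name resolution, either DNS or its simplified form, a consistent hosts file on every machine. These three prerequisites are the general pattern for any cluster deployment, whether MySQL, Oracle, WebLogic or Redis clusters; so far I have not come across a cluster that can do without them.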

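Another factor to consider when deploying a cluster is version compatibility between the cluster and the environment it depends on. Here, k8s runs on top of the Docker engine, so the Docker version is critical. In this example k8s is 1.20, and Docker should preferably be no newer than 19.03, the latest Docker version validated for k8s 1.20. If you force a newer Docker such as 20.10, you may run into all kinds of strange problems, so unless you have a good reason not to, use Docker 19.03, which is the better match for k8s 1.20.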

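The next question is what hardware k8s actually needs. k8s is a set of components written in Go, so it is fairly light on hardware, unlike Java-based big-data platforms such as CDH and HDP, which consume a lot of memory (admittedly, big-data computation itself is memory-hungry too). A multi-core CPU and 4 GB or more of memory are enough. As for the disk, nobody complains about having too much; bigger is better, because growing a disk later is a risky operation.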

Summary:

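For hardware, a k8s cluster needs at least a dual-core CPU and 4 GB of memory per server, and the disk should not be too small; for a lab setup, 50 GB is a good starting point. As for the number of servers: components that depend on a quorum, such as etcd, should run an odd number of members (3, 5, ...), because an even-sized cluster tolerates no more failures than the next smaller odd one (4 etcd members tolerate one failure, just like 3). In k8s this applies to the control-plane/etcd nodes of a highly available cluster; the number of worker nodes is not restricted. This article uses three servers: one master and two workers.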

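On the environment side: a time server synchronizes the clocks of all nodes (time really matters; if the clocks are not in sync, the running cluster develops all kinds of strange problems); the nodes can log in to each other without a password (needed for communication within the cluster and for quickly sharing and distributing files); and every hostname-to-IP mapping is in place (a DNS server is even better: configure it once and save yourself trouble later). These are the basic requirements of any cluster.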

Lab environment:

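The plan is to build a minimal k8s cluster from three servers: the host named master is the control-plane node and the others are worker nodes. From here on, a server is referred to by the last number of its IP address; for example, "server 16" means 192.168.0.16. Changing hostnames is too basic to cover here, you know how to do that!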

| IP address | Hostname | CPU | Memory | Firewall and SELinux |
|---|---|---|---|---|
| 192.168.0.16 | master | 2 cores, 2 CPUs | 4 GB | firewall and SELinux disabled |
| 192.168.0.17 | slave1 | 2 cores, 2 CPUs | 4 GB | firewall and SELinux disabled |
| 192.168.0.18 | slave2 | 2 cores, 2 CPUs | 4 GB | firewall and SELinux disabled |

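This deployment installs the packages with yum; later in the article I provide an offline package, so the k8s cluster can be installed with no internet access at all.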

1. Setting up the time server

On server 16: yum install ntp -y && systemctl enable ntpd && systemctl start ntpd

Edit the NTP configuration file /etc/ntp.conf, delete the other server entries and keep only the following:

server 127.127.1.0

fudge 127.127.1.0 stratum 10

Restart ntpd: systemctl restart ntpd

Do the same on servers 17 and 18:

yum install ntp -y && systemctl enable ntpd && systemctl start ntpd

Edit /etc/ntp.conf, delete the other server entries and keep only the following server line:

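server 192.168.0.16

This time server is the machine 192.168.0.16, which provides time service to servers 17 and 18 (restart ntpd on 17 and 18 afterwards so the new setting takes effect). This is the usual arrangement on a LAN. If the servers can reach the internet, first sync server 16 manually once against a public time server, for example Alibaba Cloud's, then configure the local clock as the first tier and the Alibaba Cloud time server as the second tier. Look up the public time server addresses online; I won't go into them here.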
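The ntp.conf edits above can be scripted. This is a minimal sketch, assuming CentOS/RHEL 7 with the stock /etc/ntp.conf; the function name set_ntp_servers is hypothetical, and instead of deleting the old server lines it comments them out, so the original pool servers stay visible in the file.

```shell
# Hypothetical helper: rewrite the "server" lines of an ntp.conf.
#   $1 = path to ntp.conf (normally /etc/ntp.conf)
#   $2 = "master" (server 16, serves its own clock) or "client" (17 and 18)
#   $3 = address of the time server, default 192.168.0.16
set_ntp_servers() {
    conf="$1"; role="$2"; master="${3:-192.168.0.16}"
    cp "$conf" "$conf.bak" || return 1        # keep a backup of the original
    # Disable every existing upstream server, e.g. "server 0.centos.pool.ntp.org iburst"
    sed -i 's/^server /#server /' "$conf" || return 1
    if [ "$role" = "master" ]; then
        # Use the local clock (127.127.1.0) as the reference, at stratum 10
        printf '%s\n' 'server 127.127.1.0' 'fudge 127.127.1.0 stratum 10' >> "$conf"
    else
        # Clients take their time from the master only
        printf 'server %s\n' "$master" >> "$conf"
    fi
}

# Usage (as root):
#   on 16:        set_ntp_servers /etc/ntp.conf master && systemctl restart ntpd
#   on 17 and 18: set_ntp_servers /etc/ntp.conf client && systemctl restart ntpd
```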

2. Passwordless login between servers

Run the following four commands on each of the three servers (16, 17 and 18):

ssh-keygen -t rsa (press Enter at every prompt to accept the defaults)

ssh-copy-id 192.168.0.16 (type yes when prompted, then the password of 16)

ssh-copy-id 192.168.0.17 (type yes when prompted, then the password of 17)

ssh-copy-id 192.168.0.18 (type yes when prompted, then the password of 18)
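On each server, these four commands can be wrapped in a loop. A sketch under these assumptions: it runs as root, distribute_ssh_key is a hypothetical name, and ssh-keygen -N '' -f creates the same passphrase-less RSA key that pressing Enter at every prompt would, skipping generation when a key already exists. ssh-copy-id still asks once for each host's password.

```shell
# Hypothetical helper: create an RSA key pair if needed, then copy the public
# key to every host given as an argument.
distribute_ssh_key() {
    key="$HOME/.ssh/id_rsa"
    if [ ! -f "$key" ]; then
        mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh" || return 1
        # Empty passphrase (-N ''), default file name, quiet output (-q)
        ssh-keygen -t rsa -N '' -f "$key" -q || return 1
    fi
    for host in "$@"; do
        # Appends the public key to ~/.ssh/authorized_keys on $host;
        # the first time it asks to accept the host key and for the password
        ssh-copy-id -i "$key.pub" "$host" || return 1
    done
}

# Usage, on each of 16, 17 and 18:
#   distribute_ssh_key 192.168.0.16 192.168.0.17 192.168.0.18
```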

3. Simple manual name resolution (/etc/hosts)

Run vim /etc/hosts and append the following at the end of the file:

192.168.0.16 master k8s1.com

192.168.0.17 slave1 k8s2.com

192.168.0.18 slave2 k8s3.com

The /etc/hosts file must have the same content on all three servers; use whatever method you like, but keep them identical.
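Editing the file by hand on three machines is easy to get wrong. The sketch below (add_cluster_hosts is a hypothetical name) appends the three entries from this section only when the IP address is not already listed, so running it twice does not duplicate lines. Copying the finished file with scp, as in the usage comment, is one way to keep the three copies identical.

```shell
# Hypothetical helper: append the cluster entries to a hosts file, skipping
# any IP address that already has a line, so it is safe to run repeatedly.
add_cluster_hosts() {
    file="${1:-/etc/hosts}"
    while read -r ip names; do
        grep -q "^$ip[[:space:]]" "$file" || printf '%s %s\n' "$ip" "$names" >> "$file"
    done <<'EOF'
192.168.0.16 master k8s1.com
192.168.0.17 slave1 k8s2.com
192.168.0.18 slave2 k8s3.com
EOF
}

# Usage on 16, then copy the result to the other nodes:
#   add_cluster_hosts && scp /etc/hosts slave1:/etc/hosts && scp /etc/hosts slave2:/etc/hosts
```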

4. Verifying that the environment configured above works as expected

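Run the same command on servers 16, 17 and 18 to check that time synchronization works. The output only needs to contain "synchronised" and "time correct"; if it does not, check the configuration and wait a little while.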

[root@master ~]# ntpstat

synchronised to local net at stratum 6
   time correct to within 11 ms
   polling server every 64 s
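Both checks in this section (ntpstat above and the SSH logins that follow) can also be run non-interactively. A sketch, assuming the hostnames from /etc/hosts; check_env is a hypothetical name. It relies on ntpstat exiting with status 0 only when the clock is synchronised, and on ssh -o BatchMode=yes failing instead of prompting when a password would still be needed.

```shell
# Hypothetical helper: report time sync and passwordless SSH for each host.
# Returns 0 only if every check passes.
check_env() {
    [ "$#" -eq 0 ] && set -- master slave1 slave2   # default host list
    rc=0
    if ntpstat >/dev/null 2>&1; then
        echo "ntp: synchronised"
    else
        echo "ntp: NOT synchronised"; rc=1
    fi
    for host in "$@"; do
        # BatchMode=yes: never ask for a password; ConnectTimeout avoids hanging
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "ssh $host: ok"
        else
            echo "ssh $host: FAILED"; rc=1
        fi
    done
    return "$rc"
}

# Usage on each of 16, 17 and 18:  check_env
```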

Check passwordless login:

Run the following three ssh commands on each of 16, 17 and 18; if every login succeeds without a password, the setup is correct.

[root@master ~]# ssh slave1

Last login: Sat Aug 14 11:04:22 2021 from 192.168.0.111

[root@slave1 ~]# logout

Connection to slave1 closed.

[root@master ~]# ssh slave2

Last login: Sat Aug 14 11:04:24 2021 from 192.168.0.111

[root@slave2 ~]# logout

Connection to slave2 closed.

[root@master ~]# ssh master

Last login: Sat Aug 14 11:04:19 2021 from 192.168.0.111

5. Installing Docker

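Docker is installed from an offline binary package. For the detailed procedure, see my CSDN blog post "docker的离线安装以及本地化配置" (offline installation and local configuration of Docker, zsk_john's blog).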

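One more note, as stressed repeatedly above: use docker-ce 19.03, and make sure you do not get the version wrong. Docker must be installed on all three servers; since passwordless login is already configured, this is straightforward.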
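Since the Docker version matters so much here, it is worth checking on each server before continuing. A sketch; require_docker_1903 is a hypothetical name, and docker version --format '{{.Server.Version}}' prints the engine version reported by the running daemon.

```shell
# Hypothetical helper: fail unless the Docker engine reports a 19.03.x version.
require_docker_1903() {
    ver=$(docker version --format '{{.Server.Version}}' 2>/dev/null) || {
        echo "cannot query the Docker daemon" >&2; return 1; }
    case "$ver" in
        19.03.*) echo "docker $ver: ok" ;;
        *) echo "docker $ver: expected 19.03.x" >&2; return 1 ;;
    esac
}

# Usage on each server:  require_docker_1903 || echo "install docker-ce 19.03 first"
```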

6. Installing k8s from the offline package

Link: https://pan.baidu.com/s/1aVPG3Dfdu0caf8f203qvOQ

Extraction code: k8es

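After extracting the files downloaded from Baidu Netdisk, you end up with two archives. images.tar.gz unpacks into the image files that the Docker engine uses to start the containers; k8s.tar.gz contains the Docker binary package and service file, plus the RPM packages of every k8s component. Running rpm -ivh *.rpm in that directory installs k8s.

All three nodes need k8s installed, that is, those RPM packages. The flannel manifest k8s.yaml (section 7) is also needed, but kubectl apply -f k8s.yaml only works after the master has been initialized; because the manifest creates DaemonSets, applying it once is enough to run flannel on every node.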
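Here is a sketch of the offline install on one node. The archive names come from the download above, but their internal layout is an assumption: images.tar.gz is taken to unpack into image tarballs named *.tar, and k8s.tar.gz into a directory called k8s that holds the RPMs. Adjust the paths to what you actually find. load_images is a hypothetical name. Enabling kubelet afterwards avoids the kubeadm preflight warning that the kubelet service is not enabled.

```shell
# Hypothetical helper: docker load every *.tar image archive in a directory.
load_images() {
    dir="$1"; n=0
    for img in "$dir"/*.tar; do
        [ -e "$img" ] || continue            # the glob matched nothing
        docker load -i "$img" || return 1
        n=$((n + 1))
    done
    echo "loaded $n image archive(s)"
}

# Usage on each node (assumed layout, see above):
#   mkdir -p images && tar -zxf images.tar.gz -C images && load_images images
#   tar -zxf k8s.tar.gz && (cd k8s && rpm -ivh *.rpm)
#   systemctl enable kubelet
```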

7. Initializing the k8s cluster

(1) Before initializing, disable swap. Run this command on all three servers:

swapoff -a && sed -ri 's/.*swap.*/#&/' /etc/fstab

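This turns swap off immediately and comments out the swap entries in /etc/fstab, so that swap stays off after a reboot (by default kubelet refuses to start while swap is enabled).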
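The sed one-liner comments out every line that merely contains the word swap, and running it twice adds a second #. A slightly stricter alternative, shown as a sketch (both function names are hypothetical): it only comments out uncommented entries whose filesystem type, the third fstab field, is swap, and it reads /proc/swaps to confirm that swap is really off.

```shell
# Hypothetical helper: comment out active swap entries in an fstab file.
disable_swap_in_fstab() {
    fstab="${1:-/etc/fstab}"
    cp "$fstab" "$fstab.bak" || return 1
    # Lines already starting with '#' are printed unchanged; so are non-swap entries
    awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab.bak" > "$fstab"
}

# Hypothetical helper: succeed when no swap device is active.
# /proc/swaps always has a header line, so more than one line means swap is on.
swap_is_off() {
    awk 'END { exit (NR > 1) }' "${1:-/proc/swaps}"
}

# Usage (as root):  swapoff -a && disable_swap_in_fstab && swap_is_off && echo "swap is off"
```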

(2) The initialization command is:

kubeadm init --kubernetes-version=1.20.0 --apiserver-advertise-address=192.168.0.16 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.20.0.0/16 --pod-network-cidr=10.244.0.0/16

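Initialization mainly generates the TLS certificates and configuration files the components need (stored mostly under /etc/kubernetes/), starts the control-plane components (kube-apiserver, kube-controller-manager, kube-scheduler and etcd) as static Pods run by kubelet, and installs the two core add-ons CoreDNS and kube-proxy. kubelet and kubectl themselves come from the RPM packages installed earlier. The log below records these steps clearly.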
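When the master has no internet access, kubeadm init can only succeed if every image it needs is already loaded into Docker. A sketch of a pre-check (check_init_images is a hypothetical name): kubeadm config images list prints the images kubeadm will use for the given version and repository, and docker image inspect fails for an image that is not present locally.

```shell
# Hypothetical helper: print every image kubeadm init needs that Docker lacks.
# Returns 0 when nothing is missing.
check_init_images() {
    list=$(kubeadm config images list --kubernetes-version v1.20.0 \
        --image-repository registry.aliyuncs.com/google_containers) || return 1
    miss=0
    for img in $list; do
        if ! docker image inspect "$img" >/dev/null 2>&1; then
            echo "missing: $img"; miss=1
        fi
    done
    return "$miss"
}

# Usage on the master, before kubeadm init:  check_init_images && echo "all images present"
```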

The command prints the following log:

[init] Using Kubernetes version: v1.20.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [192.168.0.1 192.168.0.16]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.0.16 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.0.16 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 19.505408 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 6z627c.k3x3vtcsq9j3xay0

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.16:6443 --token 6z627c.k3x3vtcsq9j3xay0 \
    --discovery-token-ca-cert-hash sha256:1abb02d9c8f0f65fd303d91f52484f21e99720a211af9e14a9eb2b0f047da716

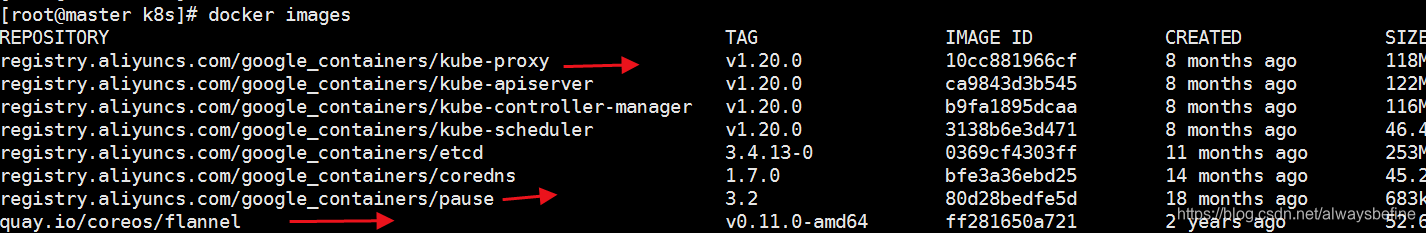

If we now list the images with docker images, we get the following output, which shows that 7 images have been pulled, including etcd and kube-scheduler:

[root@master k8s]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.20.0 10cc881966cf 8 months ago 118MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.20.0 ca9843d3b545 8 months ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.20.0 b9fa1895dcaa 8 months ago 116MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.20.0 3138b6e3d471 8 months ago 46.4MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 11 months ago 253MB

registry.aliyuncs.com/google_containers/coredns 1.7.0 bfe3a36ebd25 14 months ago 45.2MB

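registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 18 months ago 683kB

Running docker ps -a shows that containers from these images have already been started, but no ports are published (these pods use the host's network, so the PORTS column stays empty):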

[root@master k8s]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

715bcbf5ebde 10cc881966cf "/usr/local/bin/kube…" 25 minutes ago Up 25 minutes k8s_kube-proxy_kube-proxy-2hdjl_kube-system_34710829-fa7e-47a4-b175-a7ec229dee62_0

12dcf63a2c91 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 25 minutes ago Up 25 minutes k8s_POD_kube-proxy-2hdjl_kube-system_34710829-fa7e-47a4-b175-a7ec229dee62_0

ea86dbefae43 3138b6e3d471 "kube-scheduler --au…" 26 minutes ago Up 26 minutes k8s_kube-scheduler_kube-scheduler-master_kube-system_0378cf280f805e38b5448a1eceeedfc4_0

e858e160e8f7 ca9843d3b545 "kube-apiserver --ad…" 26 minutes ago Up 26 minutes k8s_kube-apiserver_kube-apiserver-master_kube-system_4e1d2c790855536c44774d75801dedb5_0

065e7434e5cf 0369cf4303ff "etcd --advertise-cl…" 26 minutes ago Up 26 minutes k8s_etcd_etcd-master_kube-system_7b4e517c0365eee85fee427d3dd59374_0

54aae73835eb b9fa1895dcaa "kube-controller-man…" 26 minutes ago Up 26 minutes k8s_kube-controller-manager_kube-controller-manager-master_kube-system_7829bd4a7098454e18de2f05be87e8c7_0

f256f8aa48c7 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 26 minutes ago Up 26 minutes k8s_POD_etcd-master_kube-system_7b4e517c0365eee85fee427d3dd59374_0

edb92b5cb546 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 26 minutes ago Up 26 minutes k8s_POD_kube-scheduler-master_kube-system_0378cf280f805e38b5448a1eceeedfc4_0

d8d19a31cc8d registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 26 minutes ago Up 26 minutes k8s_POD_kube-controller-manager-master_kube-system_7829bd4a7098454e18de2f05be87e8c7_0

038cfba41c60 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 26 minutes ago Up 26 minutes k8s_POD_kube-apiserver-master_kube-system_4e1d2c790855536c44774d75801dedb5_0

The init output also says that a pod network must be deployed.

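First, create a YAML file with the content below. It is the manifest of the flannel network plug-in, which provides the pod network for kubernetes.

Create a text file named k8s.yml on your own computer, paste the content below into it, and upload the file to the server. (Later commands refer to the same file as k8s.yaml; the name does not matter as long as it matches the file you uploaded.)

(You could use the calico network plug-in instead. The "Network": "10.244.0.0/16" value must match the --pod-network-cidr in the init command above. The manifest supports all the CPU architectures flannel publishes images for, namely amd64, arm64, arm, ppc64le and s390x, with one DaemonSet per architecture; for other images, adjust the manifest accordingly.)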

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: In
                values:
                - linux
              - key: beta.kubernetes.io/arch
                operator: In
                values:
                - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: In
                values:
                - linux
              - key: beta.kubernetes.io/arch
                operator: In
                values:
                - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: In
                values:
                - linux
              - key: beta.kubernetes.io/arch
                operator: In
                values:
                - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: In
                values:
                - linux
              - key: beta.kubernetes.io/arch
                operator: In
                values:
                - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: In
                values:
                - linux
              - key: beta.kubernetes.io/arch
                operator: In
                values:
                - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

Then run the following command:

kubectl apply -f k8s.yml
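As noted above, the flannel Network must equal the --pod-network-cidr given to kubeadm init. A sketch that checks this before applying (check_flannel_cidr is a hypothetical name; it assumes the manifest contains a single "Network": "..." entry, as the one above does). The usage comment then uses kubectl rollout status, which waits until the amd64 DaemonSet is running on all matching nodes.

```shell
# Hypothetical helper: compare the flannel "Network" with the pod CIDR.
#   $1 = manifest file, $2 = the --pod-network-cidr used with kubeadm init
check_flannel_cidr() {
    net=$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1)
    if [ "$net" = "$2" ]; then
        echo "ok: flannel Network $net matches --pod-network-cidr"
    else
        echo "mismatch: manifest has '$net', kubeadm init used '$2'" >&2
        return 1
    fi
}

# Usage on the master:
#   check_flannel_cidr k8s.yml 10.244.0.0/16 && kubectl apply -f k8s.yml
#   kubectl -n kube-system rollout status daemonset/kube-flannel-ds-amd64
```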

8. Joining the worker nodes, 17 and 18, to the cluster

kubeadm token create --ttl 0 --print-join-command

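This command creates a fresh token and prints the matching join command (with --ttl 0 the token never expires; the default lifetime is 24 hours). Its output is: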

[root@master k8s]# !179

kubeadm token create --ttl 0 --print-join-command

kubeadm join 192.168.0.16:6443 --token p3xiss.9sa81o6hxfgg808j --discovery-token-ca-cert-hash sha256:1abb02d9c8f0f65fd303d91f52484f21e99720a211af9e14a9eb2b0f047da716
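Steps 1 and 2 of the preparation described next (copying admin.conf to 17 and 18 and exporting KUBECONFIG there) can be done from the master in one go, using the passwordless SSH from section 2. A sketch; share_admin_conf is a hypothetical name, and it only appends the export line to /etc/profile if that line is not already there. Keep in mind that admin.conf grants full administrator access to the cluster.

```shell
# Hypothetical helper, run on the master (16): copy admin.conf to each worker
# and make KUBECONFIG permanent there. Relies on passwordless SSH.
share_admin_conf() {
    line='export KUBECONFIG=/etc/kubernetes/admin.conf'
    for host in "$@"; do
        ssh "$host" mkdir -p /etc/kubernetes || return 1
        scp /etc/kubernetes/admin.conf "$host:/etc/kubernetes/admin.conf" || return 1
        # Append the export line to /etc/profile only if it is not there yet
        ssh "$host" "grep -qxF '$line' /etc/profile || echo '$line' >> /etc/profile" || return 1
    done
}

# Usage on 16:  share_admin_conf slave1 slave2
# then on 17 and 18:  source /etc/profile
```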

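Before adding the nodes to the cluster, do the following four things (steps 1 and 2 only make kubectl usable on 17 and 18; kubeadm join itself does not need them):

1. Use scp to copy /etc/kubernetes/admin.conf to the same location on each node (17 and 18). This file is generated on the master when it is initialized, that is, by the long kubeadm init --kubernetes-version=1.20.0 ... command above.

2. On 17 and 18, add export KUBECONFIG=/etc/kubernetes/admin.conf to the environment file /etc/profile and run source /etc/profile.

3. Run kubectl apply -f k8s.yaml on 17 and 18. Because the manifest was already applied on the master, the output just reports the existing objects: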

[root@slave1 k8s]# kubectl apply -f k8s.yaml

podsecuritypolicy.policy/psp.flannel.unprivileged configured

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.apps/kube-flannel-ds-amd64 unchanged

daemonset.apps/kube-flannel-ds-arm64 unchanged

daemonset.apps/kube-flannel-ds-arm unchanged

daemonset.apps/kube-flannel-ds-ppc64le unchanged

daemonset.apps/kube-flannel-ds-s390x unchanged

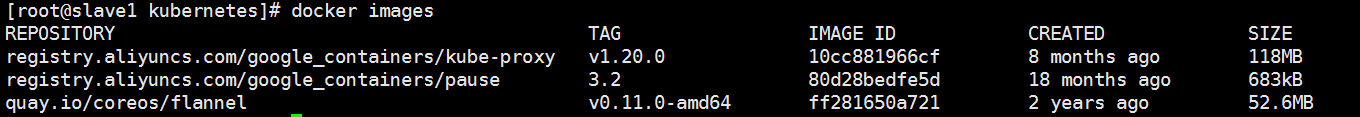

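4. Import the image files from the Baidu Netdisk download with docker load. The three imported images are:

On servers 17 and 18, docker images should then show three images:

Copy the join command printed above and run that same command on both worker nodes, 17 and 18: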

kubeadm join 192.168.0.16:6443 --token p3xiss.9sa81o6hxfgg808j --discovery-token-ca-cert-hash sha256:1abb02d9c8f0f65fd303d91f52484f21e99720a211af9e14a9eb2b0f047da716

The output is:

[root@slave1 ~]# kubeadm join 192.168.0.16:6443 --token p3xiss.9sa81o6hxfgg808j --discovery-token-ca-cert-hash sha256:1abb02d9c8f0f65fd303d91f52484f21e99720a211af9e14a9eb2b0f047da716

[preflight] Running pre-flight checks
        [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Now running the following command on any of the servers (each has the kubeconfig from steps 1 and 2) should show:

[root@master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 3h25m v1.20.0

slave1 Ready <none> 21m v1.20.0

slave2 Ready <none> 12s v1.20.0

9. Checking that the k8s cluster was installed successfully

[root@master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-2mwhs 1/1 Running 0 5m21s

coredns-7f89b7bc75-46w52 1/1 Running 0 5m21s

etcd-master 1/1 Running 0 5m28s

kube-apiserver-master 1/1 Running 0 5m28s

kube-controller-manager-master 1/1 Running 0 5m28s

kube-flannel-ds-amd64-gnjzg 1/1 Running 0 2m40s

kube-proxy-cpn92 1/1 Running 0 5m21s

kube-scheduler-master 1/1 Running 0 5m28s

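If you see coredns-7f89b7bc75-2mwhs 0/1 ContainerCreating, that is, the first two lines are stuck in ContainerCreating (the container-creation state), no pod network is available yet: run kubectl apply -f k8s.yaml. The k8s.yaml file is essential; applying it once from any node with a working kubeconfig is enough, because its DaemonSets place flannel on every node.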
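Spotting stuck pods by eye gets tedious. A sketch (not_running_pods is a hypothetical name) that prints only the kube-system pods whose STATUS column is not Running, using kubectl's --no-headers option; kubectl wait, shown in the usage comment, blocks until all nodes report Ready or the timeout expires.

```shell
# Hypothetical helper: list kube-system pods that are not in the Running state.
# Columns of "kubectl get pod" are NAME READY STATUS RESTARTS AGE.
not_running_pods() {
    kubectl get pod -n kube-system --no-headers | awk '$3 != "Running" { print $1, $3 }'
}

# Usage on any node with a working kubeconfig:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
#   not_running_pods        # no output means every pod is Running
```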

10. Common errors

(1)

[root@slave2 ~]# kubeadm join 192.168.0.18:6443 --token cgvzs3.gp3j4cojnecpobio --discovery-token-ca-cert-hash sha256:1abb02d9c8f0f65fd303d91f52484f21e99720a211af9e14a9eb2b0f047da716

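[preflight] Running pre-flight checks
        [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: couldn't validate the identity of the API Server: Get "https://192.168.0.18:6443/api/v1/namespaces/kube-public/configmaps/cluster-info?timeout=10s": dial tcp 192.168.0.18:6443: connect: connection refused

To see the stack trace of this error execute with --v=5 or higher

This error comes up often when joining nodes. "connection refused" means nothing is listening at the address in the join command: here the command points at 192.168.0.18, which is slave2 itself, while the API server runs on the master at 192.168.0.16:6443, so use the address printed by kubeadm token create --print-join-command. An expired or wrong token is the other common cause of join failures; run the following command to list the current tokens, then put a valid one into the join command: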

kubeadm token list
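A complete join command needs both a valid token and the CA certificate hash. kubeadm token create --print-join-command prints both, but the hash can also be recomputed on the master from the cluster CA. The sketch below wraps the openssl pipeline given in the kubeadm documentation for this (ca_cert_hash is a hypothetical name; it assumes the default RSA CA key that kubeadm generates).

```shell
# Hypothetical helper: SHA-256 of the CA's public key (DER, SubjectPublicKeyInfo),
# the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex | sed 's/^.* //'
}

# Usage on the master:
#   kubeadm token list                  # pick a token that has not expired
#   echo "--discovery-token-ca-cert-hash sha256:$(ca_cert_hash)"
```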

(2) Check the pod status with kubectl get pod -n kube-system.

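(3) Notes on the kubeadm reset command

kubeadm reset quickly cleans up a node: it deletes the cluster configuration files, stops all the containers that were started, and stops the kubelet service (three things in total). If the installation fails with errors such as a port already being in use or a configuration file already existing, run this command and then set the cluster up again; it is particularly useful when adding nodes.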

[root@slave1 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0815 01:02:38.991284 13477 reset.go:99] [reset] Unable to fetch the kubeadm-config ConfigMap from cluster: failed to get config map: Get "https://192.168.0.16:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=10s": x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0815 01:02:40.588147 13477 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.
If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.
Please, check the contents of the $HOME/.kube/config file.

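One point in the output above deserves attention: kubeadm reset does not delete the $HOME/.kube/config file, so if you copied the kubeconfig there (for example, to run the cluster as a non-root user), remove that file manually. It likewise leaves the CNI configuration in /etc/cni/net.d and the iptables/IPVS rules in place. Otherwise, the command reports in detail which configuration files it deleted and which process it stopped.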
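The manual steps that kubeadm reset leaves to you can be collected into one function. A sketch based on the messages above; post_reset_cleanup and its optional path prefix are hypothetical (the prefix only exists so the file removal can be rehearsed in a scratch directory). Be aware that flushing iptables also removes any firewall rules you added yourself, and ipvsadm --clear only matters if kube-proxy ran in IPVS mode, which is not the case in this article.

```shell
# Hypothetical helper: clean up what "kubeadm reset" does not remove.
#   $1 = optional path prefix (leave empty on a real node)
post_reset_cleanup() {
    root="${1:-}"
    rm -rf "$root/etc/cni/net.d"        # CNI config such as 10-flannel.conflist
    rm -f "$root$HOME/.kube/config"     # kubeconfig copied for kubectl users
    # Flush the filter, nat and mangle tables and delete non-default chains
    iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X || return 1
    # Clear IPVS tables, but only if the ipvsadm tool is installed
    if command -v ipvsadm >/dev/null 2>&1; then
        ipvsadm --clear
    fi
}

# Usage on the node that was reset (as root):  kubeadm reset && post_reset_cleanup
```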
