Can Machines Really Think, Learn, and Act Intelligently? 机器真的能思考、学习和智能地行动吗?

In this post, we're going to define what machine learning is and how computers think and learn. We're also going to look at some history relevant to the development of the intelligent machine.

在这篇文章中,我们将定义机器学习是什么,以及计算机是如何思考和学习的。我们还将回顾一些与智能机器发展相关的历史。

There are so many introductory posts about AI and ML, and yet I decided to write this one. Do you know why? Because they're all boring. Not this one though, this one is cool. It also includes an awesome optional quiz to test your AI superpowers.

关于AI和ML的入门文章有很多,但我还是决定写这一篇。你知道为什么吗?因为其他的都很无聊。不过这篇可不一样,这篇很酷。它还包括一个很棒的可选测验,用来测试你的AI超能力。

Introduction 说明

Before we start talking about all the complicated stuff about Artificial Intelligence and Machine Learning, let's take a big-picture look at how it began and where we are now.

在我们开始讨论人工智能和机器学习的所有复杂内容之前,让我们先大致了解一下它是如何开始的以及我们现在所处的位置。

It can be hard to focus and understand what everything means with all the buzzwords floating around, but fear not! If you're new to this, we're going to break down the most important things you should know if you're just getting started.

在这么多时髦词汇中集中注意力并理解其含义可能会很难,但不必担心!如果你是这个领域的新手,我们将为你分解一些刚开始时应该知道的最重要的事情。

History 历史

So, humans have brains, we know that. In more recent news, however, research in neuroscience has shown that the human brain is composed of a network of cells called "neurons". Neurons are connected to each other and communicate using electrical and chemical signals.

所以,人类有大脑,这是我们都知道的。然而,最近神经学的研究表明,人类的大脑是由被称为“神经元”的东西组成的网络。神经元之间彼此连接,并通过电脉冲进行通信。

So what do neurons in the human brain have to do with Artificial Intelligence? Oh, they're just the inspiration behind the design of neural networks, a digital version of our biological network of neurons. They're not the same, but they kind of work similarly.

那么人类大脑中的神经元与人工智能有什么关系呢?哦,它们只是神经网络设计的灵感来源,是我们生物神经元网络的数字版本。它们并不相同,但工作方式有些类似。

Buffalo, New York 1950s 纽约布法罗,20世纪50年代

Meet the Perceptron, one of the first artificial neural networks: a computing system built to have a shot at mimicking the neurons in our brains. Frank Rosenblatt, the American psychologist and computer scientist who implemented the perceptron, demonstrated the machine by showing it an image or pattern and having it decide what the image represented.

让我们来认识一下感知器。这是第一个神经网络,是一个计算机系统,它试图模仿我们大脑中的神经元。实现感知器的美国心理学家和计算机科学家弗兰克·罗森布拉特(Frank Rosenblatt)通过向感知器展示图像或模式来演示这台机器,然后感知器会做出关于图像所代表的内容的决策。

For instance, it could distinguish between shapes such as triangles, squares, and circles. The demo was a success because the machine was able to learn and classify visual patterns, and it was a major step toward the development of machine learning systems.

例如,它可以区分三角形、正方形和圆形等形状。这次演示取得了成功,因为机器能够学习和分类视觉模式,这是迈向机器学习系统开发的重要一步。

However, the perceptron wasn't perfect, and interest in it steadily declined because it was a single-layer neural network. That means it could only solve problems where a straight line could be drawn to separate the data into two distinct classes (so-called linearly separable problems).

然而,感知器并不完美,由于它是一个单层神经网络,这意味着它只能解决那些可以用直线将数据分成两个不同情况的问题,因此人们对它的兴趣逐渐下降。

The perceptron is like a ruler: it can only draw straight lines. If things are all mixed up and can't be split neatly with a line, it gets confused and fails.

感知器就像一把尺子,它只能画直线。如果事物混杂在一起,不能用一条线整齐地分割开来,它就会感到困惑并失败。
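To make the ruler idea concrete, here is a minimal sketch of a single-layer perceptron in plain Python. It is not Rosenblatt's original setup: the function names, the learning rate, the epoch count, and the two toy datasets (a logical AND, which a straight line can split, and an "exclusive or" or XOR, which it cannot) are all assumptions made for illustration.

```python
def step(z):
    """Threshold activation: output 1 when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Train a single-layer perceptron with the classic perceptron learning rule.

    samples is a list of ((x1, x2), target) pairs. Returns (weights, bias).
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            prediction = step(w[0] * x1 + w[1] * x2 + b)
            error = target - prediction  # 0 when the guess is right
            # Nudge the line toward the misclassified point.
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


def accuracy(samples, w, b):
    """Fraction of samples the trained perceptron classifies correctly."""
    correct = sum(step(w[0] * x1 + w[1] * x2 + b) == t for (x1, x2), t in samples)
    return correct / len(samples)


# Hypothetical toy datasets: two binary inputs and one binary target.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_GATE = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_accuracy = accuracy(AND_GATE, *train_perceptron(AND_GATE))
xor_accuracy = accuracy(XOR_GATE, *train_perceptron(XOR_GATE))

print("AND accuracy:", and_accuracy)
print("XOR accuracy:", xor_accuracy)
```

The AND data can be separated by one line, so the perceptron ends up classifying all four points correctly. For XOR no single line works, so at least one point is always misclassified no matter how long you train.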

Toronto, Ontario, 1980s 安大略省多伦多,20世纪80年代

Meet Geoffrey Hinton, a computer scientist and a big name in the field of artificial intelligence and deep learning. Why? He had a huge impact on the development of deep neural networks (aka multi-layered neural networks).

认识一下杰弗里·辛顿(Geoffrey Hinton),他是一位计算机科学家,也是人工智能和深度学习领域的一位重要人物。为什么呢?因为他对深度神经网络(也称为多层神经网络)的发展产生了巨大影响。

Instead of settling for a single-layer neural network, Hinton developed multi-layered networks and contributed significantly to the backpropagation algorithm, which allows the network to “learn”.

与单层神经网络不同,辛顿开发了一个多层网络,并对反向传播算法做出了重大贡献,该算法使网络能够“学习”。

To train a multi-layer neural network (or deep neural network), you need to adjust the strength of the connections between the digital neurons. During training, the network is shown example inputs over many iterations, and each pass fine-tunes the connections between individual neurons so that the output moves closer to the expected result.

要训练一个多层神经网络(或深度神经网络),你需要加强数字神经元之间的联系。这意味着在训练过程中,通过迭代,特定的输入将微调各个神经元之间的连接,从而使输出变得可预测。
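Here is a small sketch of why the extra layer matters. It uses the "exclusive or" (XOR) pattern, where the output is 1 only when exactly one of the two inputs is 1, which is a classic case that a single straight line cannot split. The connection strengths below are hand-picked assumptions rather than values found by training, and the function names are made up for illustration; a training algorithm such as backpropagation would be expected to discover comparable values on its own.

```python
def step(z):
    """Threshold activation: output 1 when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0


def neuron(inputs, weights, bias):
    """One digital neuron: weighted sum of the inputs, plus bias, then threshold."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)


def xor_network(x1, x2):
    """Two-layer network with hand-picked (not learned) connection strengths."""
    # Hidden layer: two neurons look at the same inputs in different ways.
    h_or = neuron((x1, x2), (1, 1), -0.5)   # fires if at least one input is 1
    h_and = neuron((x1, x2), (1, 1), -1.5)  # fires only if both inputs are 1
    # Output layer: fires when h_or is on but h_and is off.
    return neuron((h_or, h_and), (1, -1), -0.5)


xor_outputs = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)  # → [0, 1, 1, 0]
```

Each hidden neuron still draws only one straight line, but combining their outputs in a second layer lets the network carve out a region that no single line could.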

The "Thinking Machine" 思考机器

Why did Hinton and Rosenblatt spend time and effort to develop artificial intelligence in the first place? Well, for the same reasons we invented cars, planes, or houses: to improve life! And by having a machine do the boring work for us, oh boy, how much time we'll save to focus on the real stuff that matters, like browsing through Reddit and X (formerly Twitter). (Just kidding.)

为什么辛顿(Hinton)和罗森布拉特(Rosenblatt)一开始要投入时间和精力来开发人工智能呢?其实,这和我们发明汽车、飞机或房屋的原因是一样的。那就是为了改善生活!通过让机器为我们做无聊的工作,我们可以节省多少时间来专注于真正重要的事情,比如浏览Reddit和X(以前叫Twitter)。(开玩笑啦)

Okay, so we, as humans, are natural innovators, and scientists believed that they could one day teach machines to think for themselves so that we could delegate all the boring, repetitive tasks.

好的,我们作为人类是天然的创新者,科学家们也相信有一天他们可以教会机器自己思考,这样我们就可以把所有无聊重复的任务交给它们。

Still, while there was interest in such wild ideas, others wondered what would happen to humanity if machines could think. Will machines take over the world? Will computers become our masters? Will everyone become unemployed?

尽管如此,虽然有人对这种疯狂的想法感兴趣,但其他人也想知道如果机器能够思考,人类将会发生什么。机器会统治世界吗?电脑会成为我们的主人吗?每个人都会失业吗?

Well, to understand the possibilities of what could happen, we must understand whether machines are actually able to think and, if they are, what their capabilities and limitations are.

要理解可能会发生什么,我们必须了解机器是否真的能够思考,如果它们能,它们的能力和限制是什么。

What does "think" mean? 思考是什么意思?

For humans, thinking is generally a process that involves (at least) the important steps below:

对于人类来说,思考通常是一个涉及(至少)以下重要步骤的过程:

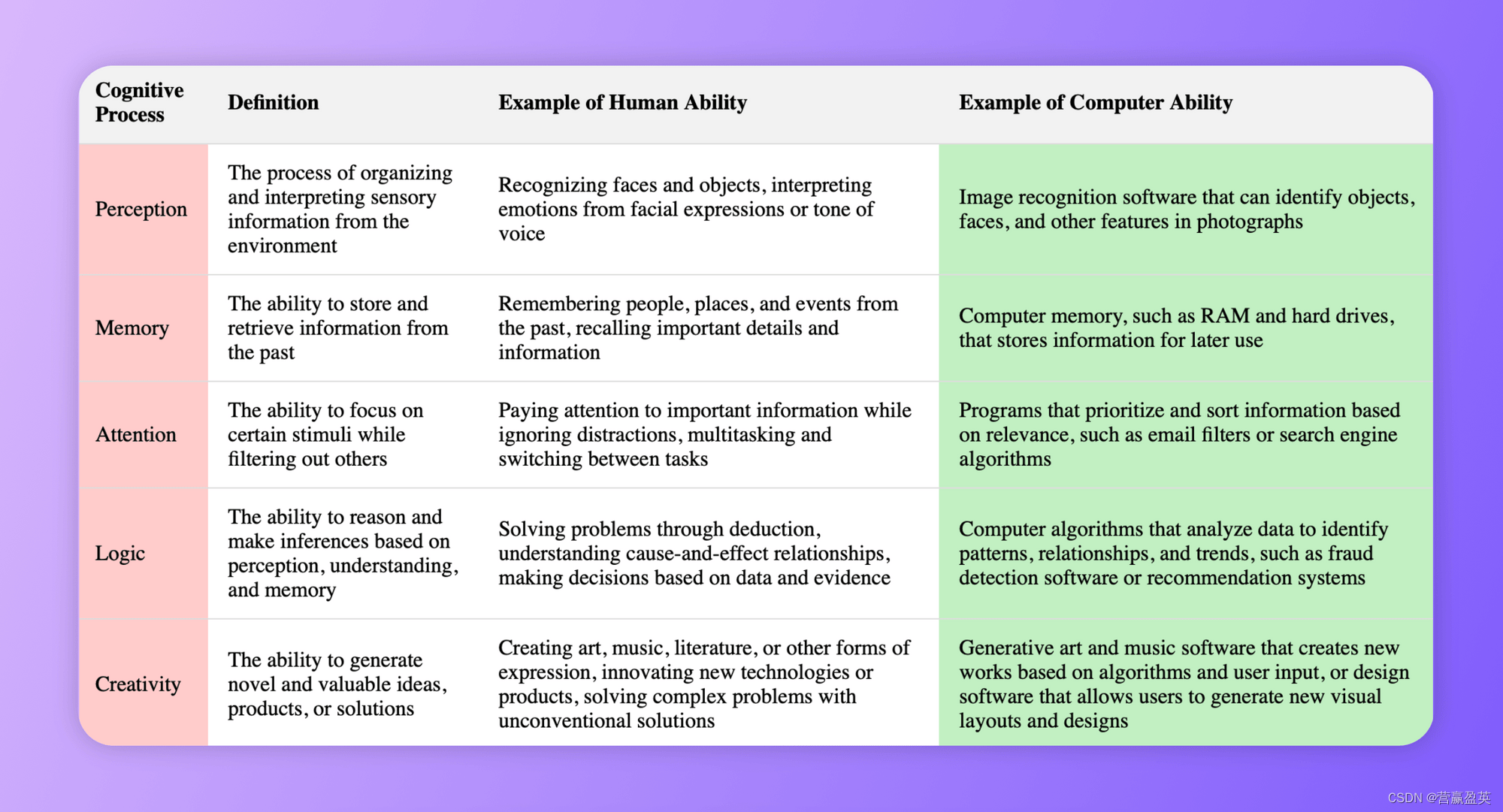

- Perception: Recognizing things from the world around us.

感知:从周围的世界识别事物。

- Memory: Remembering things by storing knowledge somewhere in our heads and accessing it later.

记忆:通过在我们的大脑中的某个地方存储它们来访问先前的知识,从而记住事物。

- Attention: Understanding intentions from given information.

注意:从给定的信息中理解意图。

- Logic: Analyzing information based on perception, understanding, and memory, and determining intent.

逻辑:基于感知、理解、记忆和意图来确定并分析信息。

- Creativity: Creating new things, like art, music, stories, etc.

创造力:创造新事物,如艺术、音乐、故事等。

Today, many argue that computers can think just like humans do. To illustrate this, I’ve listed the cognitive processes in the table below and provided an example of how modern computers perform the same functions of thinking as humans do:

如今,许多人认为计算机可以像人类一样思考。为了说明这一点,我在下面的表格中列出了认知过程,并提供了现代计算机如何执行与人类相同的思维功能的示例:

Personal opinion: I do somewhat agree with the idea that computers nowadays can think, similarly to how we can. Actually, the recent progress is mind-blowing.

个人观点:在某种程度上,我同意现在的计算机可以像人类一样思考。事实上,最近的进展令人震惊。

Thinking alone, however, is not enough to attain intelligence. We’ll need to teach computers so they can figure things out on their own. What this means is that we'll want computers to correctly interpret things that they've never been exposed to before.

然而,仅仅思考是不足以获得智慧的。我们需要教会计算机,让它们能够自己解决问题。这意味着我们希望计算机能够正确解释它们从未接触过的事物。

This all brings me to my final artificial intelligence formula:

thinking + learning = artificial intelligence

这一切让我得出最终的人工智能公式:思考+学习=人工智能

By implementing a neural network we'll have thinking, and by training the machine we'll fine-tune the network and achieve learning.

通过实现神经网络我们将实现思考,而通过训练机器我们将微调网络并实现学习。

Okay, now we're going to take a look at the two parts that make up artificial intelligence. First, we'll just define what neural networks are, and then briefly discuss machine learning.

好的,现在我们将看看构成人工智能的两个部分。首先,我们将定义神经网络是什么,然后简要讨论机器学习。

Introduction to Neural Networks 神经网络简介

Neural networks are a subset of machine learning, which is itself a subset of artificial intelligence. Their structure and function are inspired by the human brain (as we've seen earlier): a network is made up of multiple connected neurons, and the connections between those neurons are adjusted through training to improve the network's overall prediction accuracy.

神经网络是机器学习的一个子集,而机器学习又是人工智能的一个子集。神经网络的结构和功能受到人类大脑的启发(正如我们之前所见),其中神经元(由多个神经元组成的网络)之间的连接通过训练得到加强和巩固,以提高其整体预测准确性。

Every single neuron (or node) in a neural network has the following properties:

- Inputs: A neuron can receive one or more inputs from other neurons in the network. Inputs can be continuous or binary values.

输入:一个神经元可以从网络中的其他神经元接收一个或多个输入。输入可以是连续值或二进制值。

- Weights: Each input to the neuron has a weight that determines the strength of the connection between this neuron and the one sending the input. The weight of each connection is then adjusted (strengthened or weakened) during training.

权重:神经元的每个输入都有一个权重,该权重决定了该神经元与发送输入的神经元之间的连接强度。然后,在训练过程中,神经元之间的每个连接的权重都会得到加强。

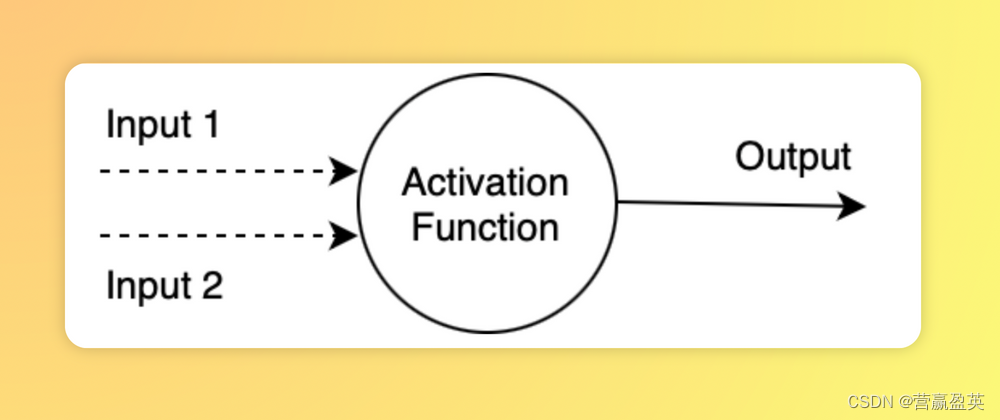

- Activation Function: A neuron applies this function to the weighted sum of its inputs (plus the bias) to determine its output. One example is the sigmoid function, which outputs values between 0 and 1.

激活函数:神经元会根据输入和权重执行必要的计算来确定输出。这个函数的例子包括sigmoid函数,它输出0到1之间的值。

- Bias: A bias is a value that is used to shift the activation function in a particular direction. Think of the bias as a knob that tweaks sensitivity to certain information: the higher the sensitivity, the more likely this neuron is to fire.

偏置:偏置是一个用于将激活函数向特定方向移动的值。可以将偏置想象成一个旋钮,用于调整对某些信息的敏感度,敏感度越高,这个神经元就越有可能被激活。

- Output: Each neuron then has an output, which is essentially the result of the activation function.

输出:然后,每个神经元都有一个输出,这本质上是激活函数的结果。
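The properties above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the example numbers are made up, and sigmoid is used simply because it is the activation function mentioned in the list.

```python
import math


def sigmoid(z):
    """Activation function that squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Output of one neuron: activation(weighted sum of inputs + bias)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)


# Hypothetical example values, chosen only for illustration.
example_inputs = [0.5, 0.8]
example_weights = [0.4, -0.6]

# Same inputs and weights, two different bias settings (the "knob").
low_bias_output = neuron_output(example_inputs, example_weights, bias=-1.0)
high_bias_output = neuron_output(example_inputs, example_weights, bias=1.0)

print(low_bias_output, high_bias_output)
```

With identical inputs and weights, turning the bias up pushes the output closer to 1, which is the "more likely to fire" behavior described in the bias bullet.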

Here is a visual representation of a single neuron:

这是一个单个神经元的可视化表示:

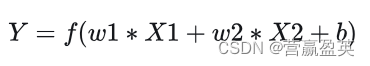

The output is the result of the activation function, which takes into account the inputs, weights, and bias, represented as such:

输出是激活函数的结果,该函数将包括输入、权重和偏置,表示如下:
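In the notation commonly used for this, the relationship can be written as follows. The symbols are assumptions introduced here for illustration: $x_i$ are the inputs, $w_i$ the matching weights, $b$ the bias, $n$ the number of inputs, and $f$ the activation function.

$$
\text{output} = f\left(\sum_{i=1}^{n} w_i x_i + b\right)
$$

If $f$ is the sigmoid function mentioned above, then $f(z) = \dfrac{1}{1 + e^{-z}}$, which always lands between 0 and 1.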

So, we've seen what every single neuron is, and as we know the collection of neurons makes up the neural network. Awesome, now let's quickly go over what Machine Learning is.

现在我们已经了解了单个神经元是什么,我们也知道神经元的集合构成了神经网络。太棒了,现在让我们快速回顾一下机器学习是什么。

Introduction to Machine Learning 机器学习简介

Ok, so now we know what a neural network is composed of and we also know that a neural network gives the machine the power of thinking. But how does it learn?

好的,现在我们已经知道神经网络是由什么组成的,我们也知道神经网络赋予机器思考的能力。但是它是如何学习的呢?

We'll need to train it. Just like teaching a child the difference between an orange and an apple through trial and error, we train the machine by providing information and correcting its guesses until it makes the right prediction.

我们需要训练它。就像通过试错教孩子区分橙子和苹果一样,我们通过提供信息并纠正其猜测来训练机器,直到它做出正确的预测。

This process is iterative, and it continues until the machine's predictions are close enough to the desired output.

这个过程是迭代的,并且不会停止,直到机器能够正确地预测出期望的输出。

I will not go into the details here to keep this simple, but this is done mathematically using algorithms that determine the best values for the weights, which represent the strength of a connection between two neurons, and for the biases.

为了简化起见,我不会在这里详细介绍,但这是通过数学算法来完成的,这些算法确定权重和偏置的最佳值,这些值本身代表两个神经元之间连接的强度。

One popular example is backpropagation, which is used in conjunction with an optimization algorithm such as stochastic gradient descent.

一个常见的例子是反向传播,它与随机梯度下降等优化算法结合使用。

Basically, machines learn by training iteratively until the output matches what we want. It boils down to finding the right values for the weights and biases.

基本上,机器通过迭代训练来学习,直到输出符合我们的期望。这归结为找到权重和偏置的正确值。
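As a rough sketch of what "finding the right values" looks like, the snippet below trains a single sigmoid neuron with plain gradient descent. It is the simplest possible case, not full backpropagation, which applies the same idea across several layers. The toy dataset (a logical OR), the learning rate, the number of passes, and all function names are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy dataset: the logical OR of two binary inputs.
OR_GATE = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def predict(x, w, b):
    """Neuron output for input pair x, given weights w and bias b."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def mean_loss(samples, w, b):
    """Average cross-entropy: how far the guesses are from the targets."""
    total = 0.0
    for x, t in samples:
        p = predict(x, w, b)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(samples)


def train(samples, epochs=2000, lr=0.5):
    """Repeatedly guess, measure the error, and nudge weights and bias."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, t in samples:
            error = predict(x, w, b) - t  # positive when the guess is too high
            w[0] -= lr * error * x[0]
            w[1] -= lr * error * x[1]
            b -= lr * error
    return w, b


initial_loss = mean_loss(OR_GATE, [0.0, 0.0], 0.0)
weights, bias = train(OR_GATE)
final_loss = mean_loss(OR_GATE, weights, bias)
predictions = [round(predict(x, weights, bias)) for x, _ in OR_GATE]

print("loss before:", initial_loss, "loss after:", final_loss)
print("predictions:", predictions)
```

Every pass makes a guess, compares it with the target, and moves the weights and bias a little in the direction that shrinks the error, so the loss after training ends up lower than where it started.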

Let’s take this for example: 举例如下:

In a puzzle, you have the pieces but you don’t know how to put them together so that they look like the picture on the box. You try placing the pieces randomly and compare the result with the picture, but hey, you notice that it doesn't look right! So you keep repeating the process until you have the right picture. The relationship between pieces is re-adjusted and re-evaluated with every try. This is a somewhat oversimplified example of backpropagation, but it kind of gives you an idea of what's happening behind the scenes.

在解拼图时,你有拼图块,但你不知道如何把它们拼在一起以呈现盒子上的图片。你试着随机地摆放拼图块,然后与图片进行比较,但嘿,你发现它看起来不对!然后你不断重复这个过程,直到你拼出正确的图片。每试一次,拼图块之间的关系都会重新调整并重新评估。这是一个对反向传播过度简化的例子,但多少能让你明白幕后发生的事情。

Now it’s important to point out that a neural network is just one type of machine learning model used to train and teach machines. When a network has many layers, the approach is also known as deep learning. There are other techniques to teach machines, which we're not going to touch on in this article. Maybe in future ones? Let me know!

现在重要的是要指出,神经网络是一种机器学习过程,也称为深度学习,用于训练和教导机器。还有其他技术可以用来教导机器,但本文不会涉及这些技术。也许在未来的文章中会讨论?让我知道你的想法!

Okay, let's now define what Artificial Intelligence is since we've covered Neural Networks and Machine Learning.

好的,既然我们已经介绍了神经网络和机器学习,现在让我们来定义一下人工智能是什么。

What is Artificial Intelligence? 什么是人工智能?

Put simply, it is a broad term that covers any non-human, machine-based form of intelligence. Examples include neural networks, machine learning, genetic algorithms, robotics, and so on.

简单来说,这是一个广泛的术语,涵盖了所有非人类形式的智能。例如 神经网络、机器学习、遗传算法、机器人科学 等。

Putting it all Together 把它们组合起来

We have AI on top, and then all the other stuff branches out. Neural networks, for example, would go under machine learning. So the next time you're having a discussion with someone who sounds confused about the topic or is mixing things up, you know where to tell them to go.

在最顶层是人工智能,然后其他所有内容都分支出来。例如,神经网络就属于机器学习的一个分支。所以,下次当你和某个对这个话题感到困惑或混淆概念的人讨论时,你就知道该怎么引导他们了。

Here's a visual representation of how things are connected:

以下是这些事物如何相互关联的直观表示:

Final Thoughts 总结

So can machines learn? Yes, they can. They’re pretty good at it, and their accuracy is only getting better. There's even a lot of talk nowadays about technological unemployment, a term that refers to jobs being lost because machines take over work that people used to do.

那么机器能学习吗?是的,它们可以,而且它们在这方面相当擅长,准确率还在不断提高。如今甚至有很多关于技术失业的讨论,这个词指的是由于机器取代我们的工作而导致的未来大规模失业。

And that's exactly why you're doing great by sharpening your skills and learning about Artificial Intelligence and its different branches so that when the time comes you'll stay relevant in the future economy.

这就是为什么你通过磨练技能并学习人工智能及其不同分支来做得很棒,这样当时间到来时,你将在未来经济中保持相关性。
