This article introduces one-click watermark removal with Lama Cleaner, a quick way to erase unwanted content from images.

I. Project Background

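Lama Cleaner uses AI image inpainting to clean up your pictures: watermarks, logos, and unwanted people or objects can be erased, leaving a clean image behind. It is simple to operate and the results are good.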

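This project uses the open-source watermark removal tool Lama Cleaner to remove unwanted "impurities" from photos.

You can try it in the "AI Erase Everything" (AI擦除一切) app.

First, a look at the result: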

II. What Is Lama Cleaner?

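Lama Cleaner is a free, open-source AI program for removing watermarks from images (I use it mainly for learning). It has no image resolution limit (none that I have found in personal use), the saved images are of high quality (close to the original, in my opinion), and the processed image can be downloaded to your local machine.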

III. Usage

1. Installation

In [1]

!pip install litelama==0.1.7

Looking in indexes: https://mirror.baidu.com/pypi/simple/, https://mirrors.aliyun.com/pypi/simple/
Collecting litelama==0.1.7
...
Successfully built antlr4-python3-runtime
Installing collected packages: mpmath, antlr4-python3-runtime, triton, sympy, omegaconf, nvidia-nvtx-cu12, nvidia-nvjitlink-cu12, nvidia-nccl-cu12, nvidia-curand-cu12, nvidia-cufft-cu12, nvidia-cuda-runtime-cu12, nvidia-cuda-nvrtc-cu12, nvidia-cuda-cupti-cu12, nvidia-cublas-cu12, networkx, kornia-rs, nvidia-cusparse-cu12, nvidia-cudnn-cu12, nvidia-cusolver-cu12, torch, kornia, litelama
Successfully installed antlr4-python3-runtime-4.9.3 kornia-0.7.2 kornia-rs-0.1.2 litelama-0.1.7 mpmath-1.3.0 networkx-3.2.1 nvidia-cublas-cu12-12.1.3.1 nvidia-cuda-cupti-cu12-12.1.105 nvidia-cuda-nvrtc-cu12-12.1.105 nvidia-cuda-runtime-cu12-12.1.105 nvidia-cudnn-cu12-8.9.2.26 nvidia-cufft-cu12-11.0.2.54 nvidia-curand-cu12-10.3.2.106 nvidia-cusolver-cu12-11.4.5.107 nvidia-cusparse-cu12-12.1.0.106 nvidia-nccl-cu12-2.19.3 nvidia-nvjitlink-cu12-12.4.99 nvidia-nvtx-cu12-12.1.105 omegaconf-2.3.0 sympy-1.12 torch-2.2.2 triton-2.2.0
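Before moving on, it can help to confirm that the packages from the install log are actually importable in the running kernel. The following is a minimal sketch using only the standard library; the helper name `missing_packages` and the choice of which modules to check are assumptions made for illustration.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the top-level module names from `names` that cannot be found."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    # Top-level modules that the install log reports for litelama 0.1.7.
    required = ["litelama", "torch", "kornia", "omegaconf"]
    missing = missing_packages(required)
    print("missing:", missing if missing else "none")
```

If anything is listed as missing, restarting the notebook kernel after the install is usually worth trying first.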

2. clean_object

In [2]

import os

import torch
from litelama import LiteLama
from litelama.model import download_file

# Directory where the model weights are stored
MODEL_PATH = "work/models/"


def clean_object_init_img_with_mask(init_img_with_mask):
    # Convenience wrapper for inputs shaped like {"image": ..., "mask": ...}
    return clean_object(init_img_with_mask['image'], init_img_with_mask['mask'])


def clean_object(image, mask):
    Lama = LiteLama2()
    init_image = image.convert("RGB")
    mask_image = mask.convert("RGB")
    # Use the GPU when one is available, otherwise stay on the CPU
    device = "cuda:0" if torch.cuda.is_available() else "cpu"
    result = None
    try:
        Lama.to(device)
        result = Lama.predict(init_image, mask_image)
    except Exception as e:
        # Report the failure instead of silently discarding it; result stays None
        print(f"Inpainting failed: {e}")
    finally:
        # Move the model back to the CPU to free GPU memory
        Lama.to("cpu")
    return [result]


class LiteLama2(LiteLama):
    # Singleton: every LiteLama2() call returns the same instance
    _instance = None

    def __new__(cls, *args, **kw):
        if cls._instance is None:
            # object.__new__ rejects extra arguments when __new__ is overridden
            cls._instance = object.__new__(cls)
        return cls._instance

    def __init__(self, checkpoint_path=None, config_path=None):
        self._checkpoint_path = checkpoint_path
        self._config_path = config_path
        self._model = None
        if self._checkpoint_path is None:
            checkpoint_path = os.path.join(MODEL_PATH, "big-lama.safetensors")
            if not os.path.isfile(checkpoint_path):
                # Download the weights on first use
                download_file("https://huggingface.co/anyisalin/big-lama/resolve/main/big-lama.safetensors", checkpoint_path)
            self._checkpoint_path = checkpoint_path
        self.load(location="cpu")
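One detail of the `LiteLama2` singleton is worth spelling out: Python calls `__init__` on every `LiteLama2()` call, even when `__new__` hands back the cached instance, so as far as the listing shows, the weights are loaded again each time `clean_object` runs. The sketch below illustrates one way to guard against that. It uses a stand-in class with no model at all; `LoadOnce`, `_load`, and `load_count` are hypothetical names, not part of litelama.

```python
class LoadOnce:
    """Singleton whose expensive setup runs only on the first construction."""

    _instance = None

    def __new__(cls, *args, **kwargs):
        if cls._instance is None:
            # Do not forward args: object.__new__ rejects extra arguments
            # when __new__ is overridden.
            cls._instance = super().__new__(cls)
        return cls._instance

    def __init__(self, checkpoint_path=None):
        # __init__ runs on every LoadOnce() call, even when __new__ returned
        # the cached instance, so the expensive part is guarded explicitly.
        if getattr(self, "_loaded", False):
            return
        self.checkpoint_path = checkpoint_path
        self.load_count = 0
        self._load()
        self._loaded = True

    def _load(self):
        # Stand-in for reading model weights from disk.
        self.load_count += 1


a = LoadOnce("work/models/big-lama.safetensors")
b = LoadOnce()
print(a is b, a.load_count)  # prints: True 1
```

Whether the extra loads matter in practice depends on how often `clean_object` is called; for a single notebook run the difference is small.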

3. Remove the Marked Object

In [3]

from PIL import Image
from work.scripts import lama

# Open the source image and its mask
image = Image.open("work/scripts/1.jpg")
mask = Image.open("work/scripts/image.png")

_output = clean_object(image, mask)
print(_output)

/opt/conda/envs/python35-paddle120-env/lib/python3.10/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html
  from .autonotebook import tqdm as notebook_tqdm

[<PIL.Image.Image image mode=RGB size=464x712 at 0x7F5007677B20>]
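The step above loads a ready-made mask from work/scripts/image.png. If you need to produce a mask yourself, for example for a watermark in the bottom-right corner, a rough sketch could look like the following. It assumes the usual inpainting convention that white pixels mark the region to erase and black pixels are kept; the helper names `bottom_right_box` and `make_mask` and the fractions used are illustrative only.

```python
def bottom_right_box(size, width_frac, height_frac):
    """Return (left, top, right, bottom) for a box anchored at the bottom-right corner."""
    width, height = size
    left = width - int(width * width_frac)
    top = height - int(height * height_frac)
    # Right and bottom are the last valid pixel coordinates.
    return (left, top, width - 1, height - 1)


def make_mask(size, box):
    """Build a black mask with a white rectangle over the region to erase."""
    # Imported lazily so the box arithmetic above works without Pillow.
    from PIL import Image, ImageDraw

    mask = Image.new("L", size, 0)
    ImageDraw.Draw(mask).rectangle(box, fill=255)
    return mask


box = bottom_right_box((100, 200), 0.5, 0.5)
print(box)  # prints: (50, 100, 99, 199)
```

With an image already opened, `make_mask(image.size, bottom_right_box(image.size, 0.3, 0.1))` would give a mask of matching size that can be passed to `clean_object` in place of the file-based one.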

4. View the Result

In [4]

_output[0].show()

<PIL.Image.Image image mode=RGB size=464x712>
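Because `clean_object` returns a one-element list, and that element stays `None` when prediction fails, it may be safer to check the result before using it. Below is a small sketch that saves the first result to disk; `save_first_result` and the output path are hypothetical names chosen for this example.

```python
def save_first_result(outputs, path):
    """Save the first image in `outputs` to `path`; raise if there is no image."""
    if not outputs or outputs[0] is None:
        raise RuntimeError("no result image: inference failed or was skipped")
    # Any object with a PIL-style save(path) method works here.
    outputs[0].save(path)
    return path
```

In the notebook this would be called as `save_first_result(_output, "work/result.png")`, after which the file can be downloaded from the file browser.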

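IV. Deploying the Gradio App

The Gradio app shown at the beginning of this article is packaged in the file app.gradio.py under the work/scripts directory. You can deploy it online by following the AI Studio app deployment procedure, or download the file and run it locally.

The steps are as follows: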

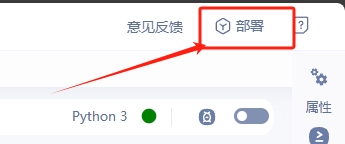

- Find the deploy button in the upper-right corner of the editor

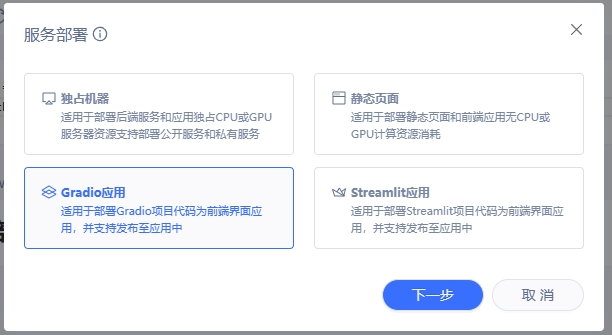

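- Choose Gradio deployment

- Fill in the app information, select app.gradio.py as the executable file, choose GPU as the deployment environment, and click Deploy. Then wait for the deployment to finish.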
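The contents of app.gradio.py are not shown in this article. As a rough idea of what such a wrapper might look like, here is a minimal sketch that exposes a `clean_object`-style function through a two-input Gradio interface. It is not the author's file: `make_handler`, `build_demo`, the labels, and the title are assumptions, and it requires the `gradio` package to be installed.

```python
def make_handler(clean_fn):
    """Wrap a clean_object-style function so it returns a single image."""

    def handler(image, mask):
        # clean_object returns a one-element list; unwrap it for the UI.
        return clean_fn(image, mask)[0]

    return handler


def build_demo(clean_fn):
    """Create a Gradio interface with an image input, a mask input, and one output."""
    import gradio as gr  # imported lazily; needs `pip install gradio`

    return gr.Interface(
        fn=make_handler(clean_fn),
        inputs=[
            gr.Image(type="pil", label="Image"),
            gr.Image(type="pil", label="Mask"),
        ],
        outputs=gr.Image(type="pil", label="Result"),
        title="Lama Cleaner demo",
    )
```

Running `build_demo(clean_object).launch()` would start the app locally. Drawing the mask directly on the image, as in the app shown earlier, needs a sketch-style input component whose name differs between Gradio versions, so it is left out here.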
